In Kubernetes, are there hidden costs to running many cluster nodes?

Yes, since not all CPU and memory in your Kubernetes nodes can be used to run Pods.

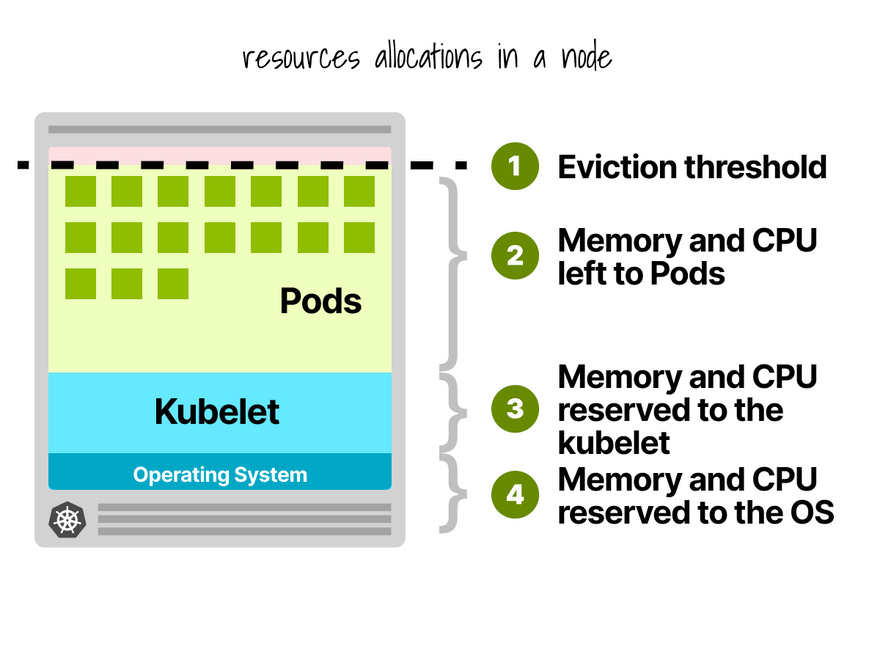

In a Kubernetes node, CPU and memory are divided into:

- Operating system.

- Kubelet, CNI, CRI, CSI (+ system daemons).

- Pods.

- Eviction threshold.

Those reservations depend on the size of the instance and could add up to a sizeable overhead.

Let's give a quick example.

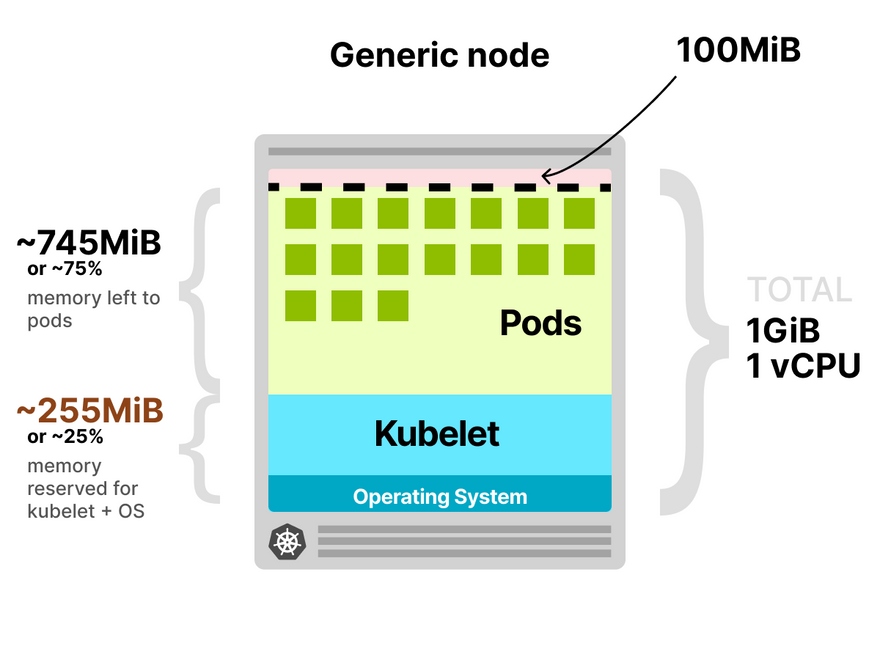

Imagine you have a cluster with a single 1GiB / 1vCPU node.

The following resources are reserved for the kubelet and operating system:

- 255MiB of memory.

- 60m of CPU.

On top of that, 100MB is reserved for the eviction threshold.

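The kubelet and operating system reservation alone takes 25% of memory and 6% of CPU; add the eviction threshold, and roughly a third of the node's memory is unavailable to Pods.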
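The arithmetic can be sketched in a few lines of Python. The 25% figure is the kubelet and operating system reservation on its own; the sketch also adds the eviction threshold, treating the 100MB as 100MiB for simplicity (an assumption). The helper name `share` is made up for this example.

```python
def share(capacity: float, *reservations: float) -> float:
    """Fraction of capacity consumed by the given reservations (same unit)."""
    return sum(reservations) / capacity

MEMORY_CAPACITY_MIB = 1024  # 1GiB node
KUBELET_OS_MIB = 255        # reserved for the kubelet and operating system
EVICTION_MIB = 100          # assumption: the 100MB threshold treated as 100MiB
CPU_CAPACITY_M = 1000       # 1 vCPU expressed in millicores
CPU_RESERVED_M = 60

print(f"{share(MEMORY_CAPACITY_MIB, KUBELET_OS_MIB):.1%}")                # 24.9%
print(f"{share(MEMORY_CAPACITY_MIB, KUBELET_OS_MIB, EVICTION_MIB):.1%}")  # 34.7%
print(f"{share(CPU_CAPACITY_M, CPU_RESERVED_M):.1%}")                     # 6.0%

# Memory left for Pods once both reservations are subtracted.
allocatable_mib = MEMORY_CAPACITY_MIB - KUBELET_OS_MIB - EVICTION_MIB
print(allocatable_mib)  # 669
```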

How does this work in a cloud provider?

EKS has some (interesting?) limits.

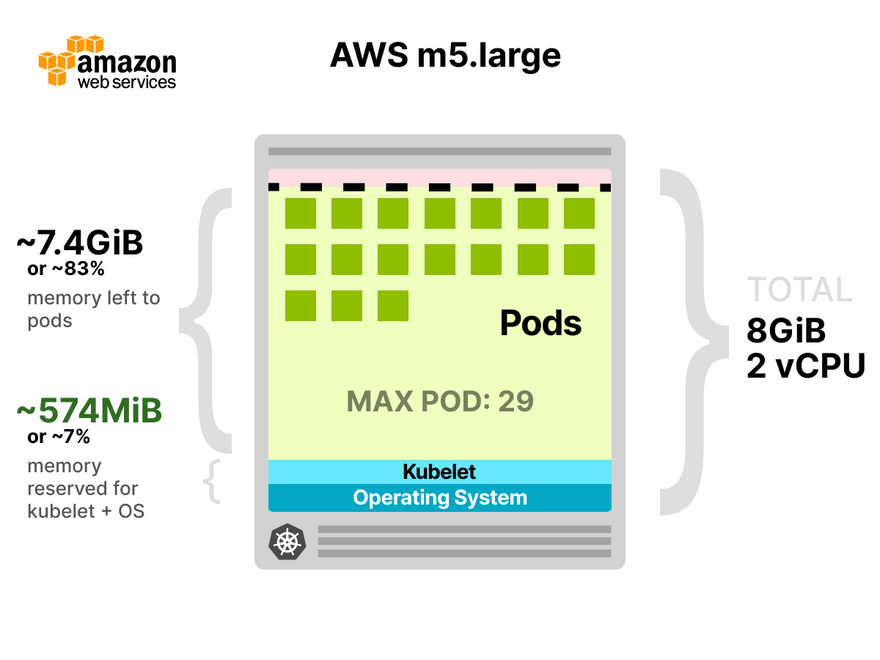

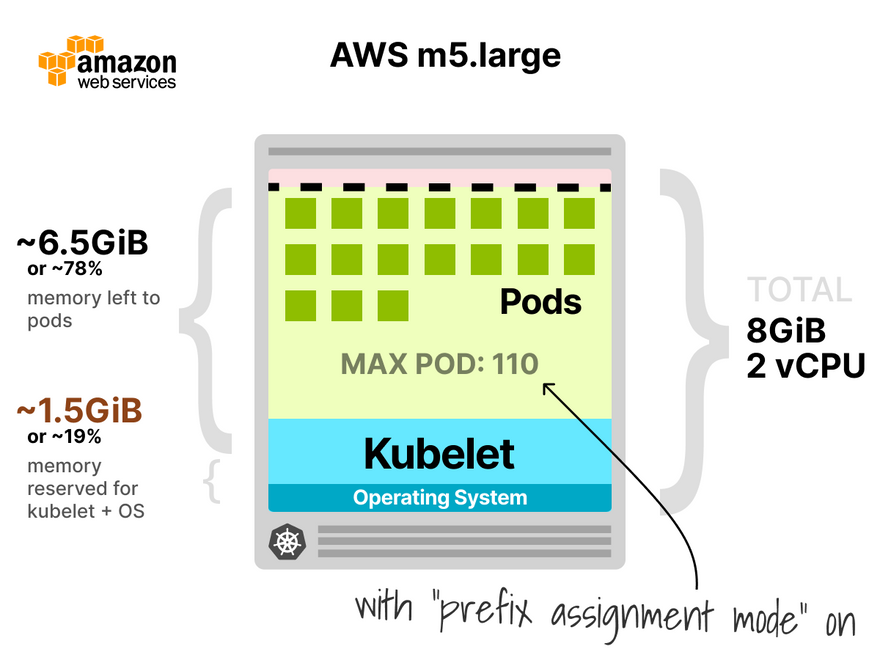

Let's pick an m5.large with 2vCPU and 8GiB of memory.

AWS reserves the following for the kubelet and operating system:

- 574MiB of memory.

- 70m of CPU.

This time, you are lucky.

You can use ~93% of the available memory.

But where do those numbers come from?

Every cloud provider has its own way of defining limits, but for CPU, they all seem to agree on the following values:

- 6% of the first core.

- 1% of the next core (up to 2 cores).

- 0.5% of the next 2 cores (up to 4 cores).

- 0.25% of any cores above four cores.
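The tiers above can be written as a small function. This is a minimal sketch rather than any provider's actual implementation; the name `reserved_cpu_millicores` and the tier table layout are invented for illustration.

```python
# Each tier: (number of cores covered, millicores reserved per core).
# 6% of a core = 60m, 1% = 10m, 0.5% = 5m, 0.25% = 2.5m.
CPU_TIERS = [
    (1, 60),               # first core
    (1, 10),               # next core (up to 2 cores)
    (2, 5),                # next 2 cores (up to 4 cores)
    (float("inf"), 2.5),   # any cores above four
]

def reserved_cpu_millicores(cores: int) -> float:
    """Millicores reserved on a node with the given number of cores."""
    remaining = cores
    reserved = 0.0
    for tier_size, millicores_per_core in CPU_TIERS:
        in_tier = min(remaining, tier_size)
        reserved += in_tier * millicores_per_core
        remaining -= in_tier
        if remaining <= 0:
            break
    return reserved

for cores in (1, 2, 4, 8):
    print(cores, reserved_cpu_millicores(cores))
```

For one core this gives 60m and for two cores 70m, which matches the figures quoted for the 1 vCPU node and the m5.large.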

The memory limits, on the other hand, vary a lot between providers.

Azure is the most conservative, and AWS the least.

The reserved memory for the kubelet in Azure is:

- 25% of the first 4 GB of memory.

- 20% of the following 4 GB of memory (up to 8 GB).

- 10% of the following 8 GB of memory (up to 16 GB).

- 6% of the next 112 GB of memory (up to 128 GB).

- 2% of any memory above 128 GB.
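Here is a hedged sketch of that tiered memory calculation. It only covers the percentage tiers listed above and leaves the eviction threshold out; the function name `reserved_memory_gb` is made up for this example.

```python
# Each tier: (GB of memory covered, fraction reserved within that tier).
MEMORY_TIERS = [
    (4, 0.25),             # first 4 GB
    (4, 0.20),             # following 4 GB (up to 8 GB)
    (8, 0.10),             # following 8 GB (up to 16 GB)
    (112, 0.06),           # next 112 GB (up to 128 GB)
    (float("inf"), 0.02),  # anything above 128 GB
]

def reserved_memory_gb(total_gb: float) -> float:
    """GB of memory reserved for the kubelet on a node with total_gb of memory."""
    remaining = total_gb
    reserved = 0.0
    for tier_size, fraction in MEMORY_TIERS:
        in_tier = min(remaining, tier_size)
        reserved += in_tier * fraction
        remaining -= in_tier
        if remaining <= 0:
            break
    return reserved

for total in (4, 7.5, 16, 128):
    print(total, round(reserved_memory_gb(total), 2))
```

A 7.5 GB node, for instance, works out to 25% of 4 GB plus 20% of 3.5 GB, or 1.7 GB.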

That's the same for GKE except for one value: the eviction threshold is 100MB in GKE and 750MiB in AKS.

In EKS, the memory is allocated using the following formula:

255MiB + (11MiB * MAX_NUMBER_OF_PODS)

This formula raises a few questions, though.

In the previous example, the m5.large reserved 574MiB of memory.

Does that mean the VM can have at most (574 - 255) / 11 = 29 pods?

This is correct if you don't enable the prefix assignment mode in your VPC-CNI.

If you do, the results are vastly different.

With up to 110 pods, AWS reserves:

- 1.4GiB of memory.

- (still) 70m of CPU.

Which sounds more reasonable and in line with the rest of the cloud providers.
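Both EKS figures follow from the formula. The sketch below applies it in both directions; the function names are hypothetical, and the pod limits (29 and 110) are the ones quoted above.

```python
BASE_MIB = 255     # fixed part of the reservation
PER_POD_MIB = 11   # reserved for each pod the node may run

def eks_reserved_memory_mib(max_pods: int) -> int:
    """Memory reserved for a node that can run up to max_pods pods."""
    return BASE_MIB + PER_POD_MIB * max_pods

def max_pods_from_reserved(reserved_mib: int) -> int:
    """Invert the formula: pod limit implied by a given reservation."""
    return (reserved_mib - BASE_MIB) // PER_POD_MIB

for max_pods in (29, 110):
    mib = eks_reserved_memory_mib(max_pods)
    print(f"{max_pods} pods -> {mib} MiB ({mib / 1024:.2f} GiB)")
# 29 pods -> 574 MiB (0.56 GiB)
# 110 pods -> 1465 MiB (1.43 GiB)

print(max_pods_from_reserved(574))  # 29
```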

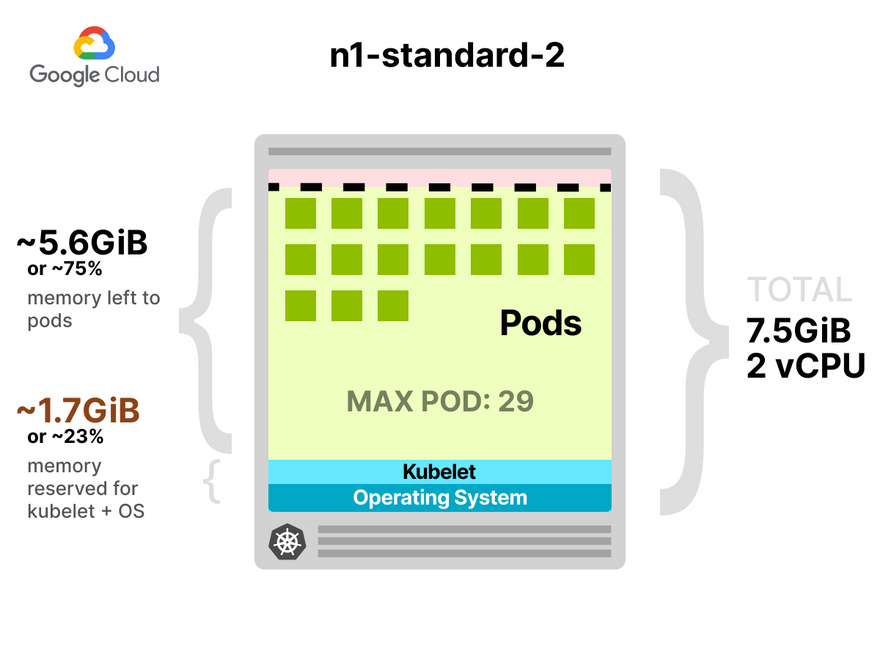

Let's have a look at GKE for a comparison.

With a similar instance type (i.e. n1-standard-2 with 7.5GB memory, 2vCPU), the reservations for the kubelet are as follows:

- 1.7GB of memory.

- 70m of CPU.

In other words, 23% of the instance memory cannot be allocated to run pods.

If the instance costs $48.54 per month, you spend $11.16 to run the kubelet.
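As a rough sketch of that cost estimate: it attributes the instance price in proportion to reserved memory only, which is a simplifying assumption, and the function name `reservation_cost` is invented here. The $11.16 figure comes from rounding the share to 23% first; the unrounded share gives a slightly lower number.

```python
def reservation_cost(monthly_price: float, share: float) -> float:
    """Part of the monthly price attributed to the reserved share of memory."""
    return monthly_price * share

RESERVED_GB = 1.7
TOTAL_GB = 7.5
MONTHLY_PRICE = 48.54

exact_share = RESERVED_GB / TOTAL_GB   # about 0.2267
rounded_share = round(exact_share, 2)  # 0.23, the figure used in the text

print(f"{reservation_cost(MONTHLY_PRICE, rounded_share):.2f}")  # 11.16
print(f"{reservation_cost(MONTHLY_PRICE, exact_share):.2f}")    # 11.00
```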

What about the other cloud providers?

How do you inspect the values?

We've built a simple tool that inspects the config of the kubelet and pulls the relevant details.

You can find it here: https://github.com/learnk8s/kubernetes-resource-inspector.

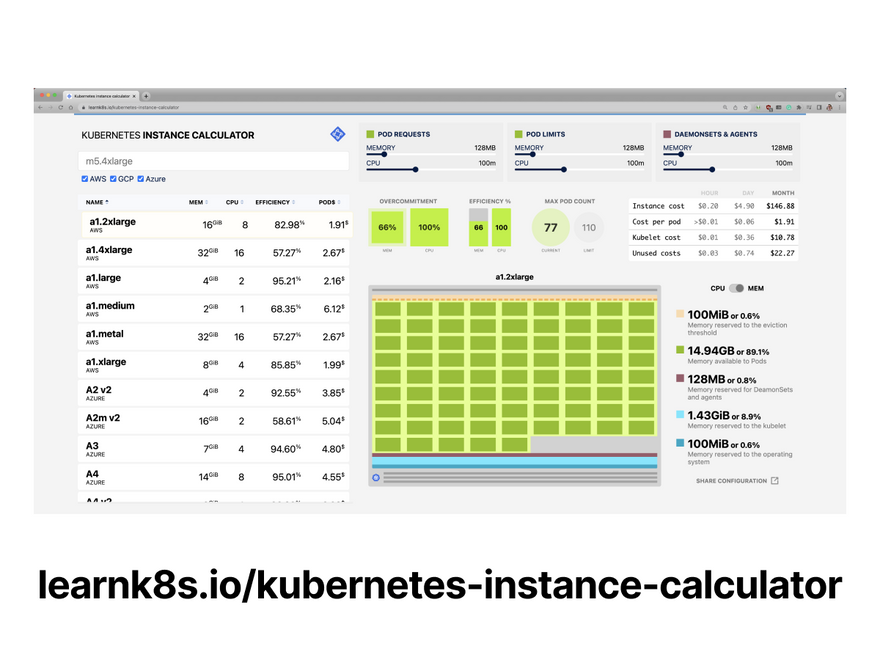
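A different, rougher way to see the effect is to compare the `capacity` and `allocatable` fields that every node reports in its status; the gap between them is what Pods cannot use. The sketch below is not how the tool above works. It is an assumption-laden illustration: the helper names are made up, the sample node is hypothetical, and the quantity parsing only handles the common suffixes.

```python
import json
import subprocess

MEMORY_UNITS = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}

def parse_cpu(quantity: str) -> int:
    """Convert a CPU quantity such as '2' or '1930m' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(float(quantity) * 1000)

def parse_memory(quantity: str) -> int:
    """Convert a memory quantity such as '8388608Ki' to bytes."""
    for suffix, factor in MEMORY_UNITS.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # plain bytes

def reserved_per_node(node: dict) -> dict:
    """Difference between capacity and allocatable for one node object."""
    capacity = node["status"]["capacity"]
    allocatable = node["status"]["allocatable"]
    cpu = parse_cpu(capacity["cpu"]) - parse_cpu(allocatable["cpu"])
    memory = parse_memory(capacity["memory"]) - parse_memory(allocatable["memory"])
    return {"cpu_millicores": cpu, "memory_mib": memory / 2**20}

def nodes_from_kubectl() -> list:
    """Fetch node objects from the current cluster (requires kubectl access)."""
    output = subprocess.run(
        ["kubectl", "get", "nodes", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(output)["items"]

# Hypothetical node used for illustration only; not real cluster output.
SAMPLE_NODE = {
    "metadata": {"name": "example-node"},
    "status": {
        "capacity": {"cpu": "2", "memory": "8388608Ki"},
        "allocatable": {"cpu": "1930m", "memory": "7698432Ki"},
    },
}

print(reserved_per_node(SAMPLE_NODE))
```

Against a real cluster, you would loop over `nodes_from_kubectl()` and call `reserved_per_node` on each item. Note that the memory gap includes the eviction threshold as well as the kubelet and system reservations.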

If you're interested in exploring more about node sizes, we also built a simple instance calculator where you can define the size of the workloads, and it will show all instances that can fit that (and their price).

https://learnk8s.io/kubernetes-instance-calculator

I hope you enjoyed this short article on Kubernetes resource reservations; here, you can find more links to explore the subject further.

- Kubernetes instance calculator.

- Allocatable memory and CPU in Kubernetes Nodes.

- Allocatable memory and CPU resources on GKE.

- AWS EKS AMI reserved CPU and reserved memory.

- AKS resource reservations.

- Official docs: https://kubernetes.io/docs/tasks/administer-cluster/reserve-compute-resources/

- Enabling prefix assignment in EKS and VPC CNI.

And finally, if you've enjoyed this article, you might also like the Kubernetes workshops that we run at Learnk8s https://learnk8s.io/training or this collection of past Twitter threads https://twitter.com/danielepolencic/status/1298543151901155330
