In Kubernetes, what should I use as CPU requests and limits?

Popular answers include:

- Always use limits!

- NEVER use limits, only requests!

- I don't use either; is it OK?

Let's dive into it.

In Kubernetes, you have two ways to specify how much CPU a pod can use:

- Requests reserve a baseline amount of CPU for the container and are usually set close to its average consumption.

- Limits set the maximum amount of CPU the container is allowed to use.

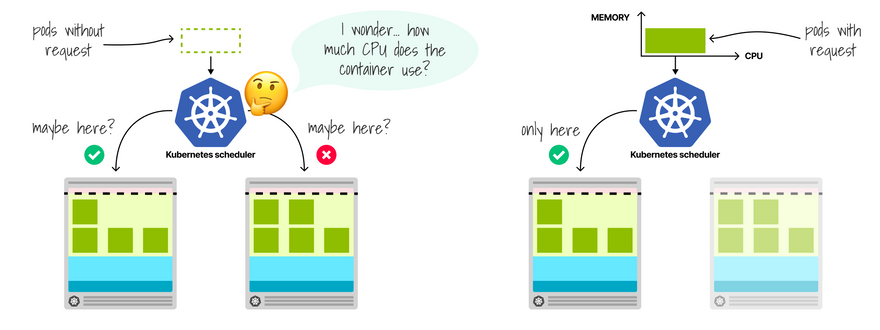

The Kubernetes scheduler uses requests to determine where the pod should be allocated in the cluster.

Since the scheduler doesn't know the consumption (the pod hasn't started yet), it needs a hint.
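To illustrate the "hint", here is a minimal Python sketch of a request-based fit check. It assumes a single resource (CPU) and ignores everything else the real kube-scheduler considers (memory, taints, affinity, scoring). The `Node` class and `feasible_nodes` function are hypothetical. The point is that a node qualifies only if its allocatable CPU minus the requests already placed on it can fit the new pod's request; live usage is never consulted.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical simplified node: allocatable CPU and existing requests, in millicores."""
    name: str
    allocatable_millicores: int
    requested_millicores: list[int] = field(default_factory=list)

    def free_millicores(self) -> int:
        # Only requests are counted, not actual consumption.
        return self.allocatable_millicores - sum(self.requested_millicores)


def feasible_nodes(nodes: list[Node], pod_request_millicores: int) -> list[str]:
    """Return the names of nodes whose unreserved CPU can fit the pod's request."""
    return [n.name for n in nodes if n.free_millicores() >= pod_request_millicores]


nodes = [
    Node("node-a", 1000, [600, 300]),  # 100m still unreserved
    Node("node-b", 1000, [200]),       # 800m still unreserved
]
print(feasible_nodes(nodes, 250))  # ['node-b']
```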

But it doesn't end there.

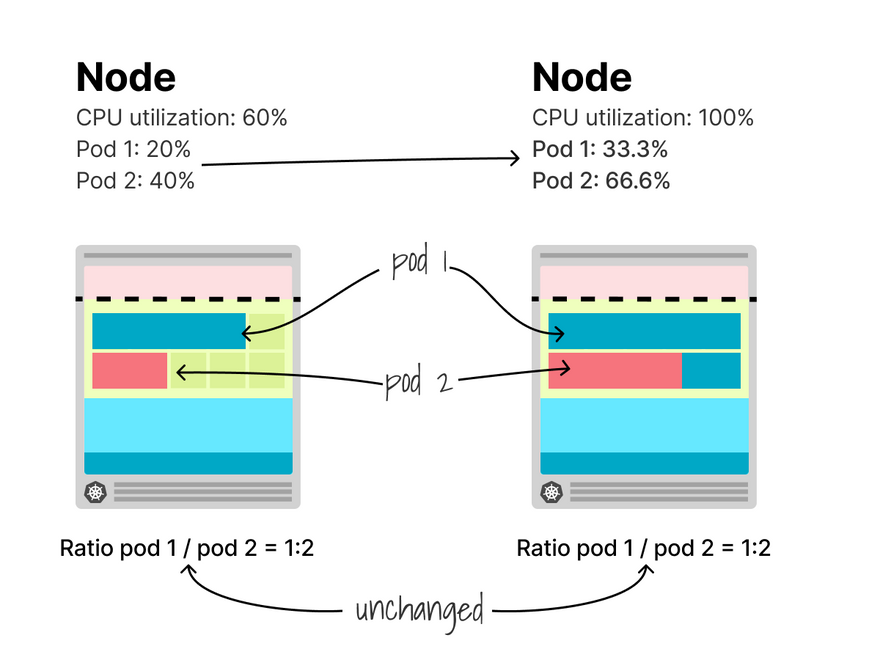

CPU requests are also used to distribute the CPU among your containers when they compete for it.

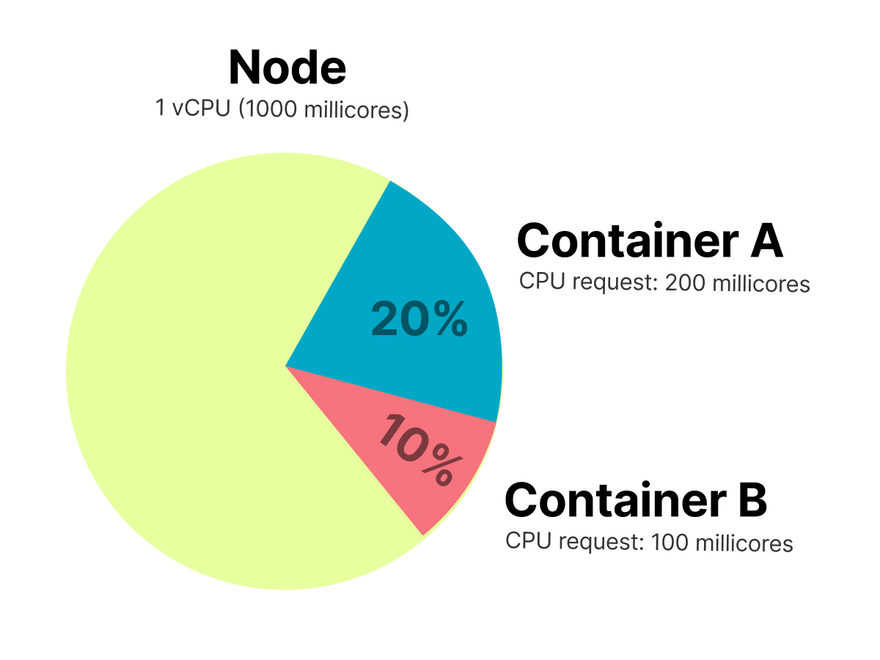

Let's have a look at an example:

- A node has a single CPU.

- Container A has requests equal to 0.1 vCPU.

- Container B has requests equal to 0.2 vCPU.

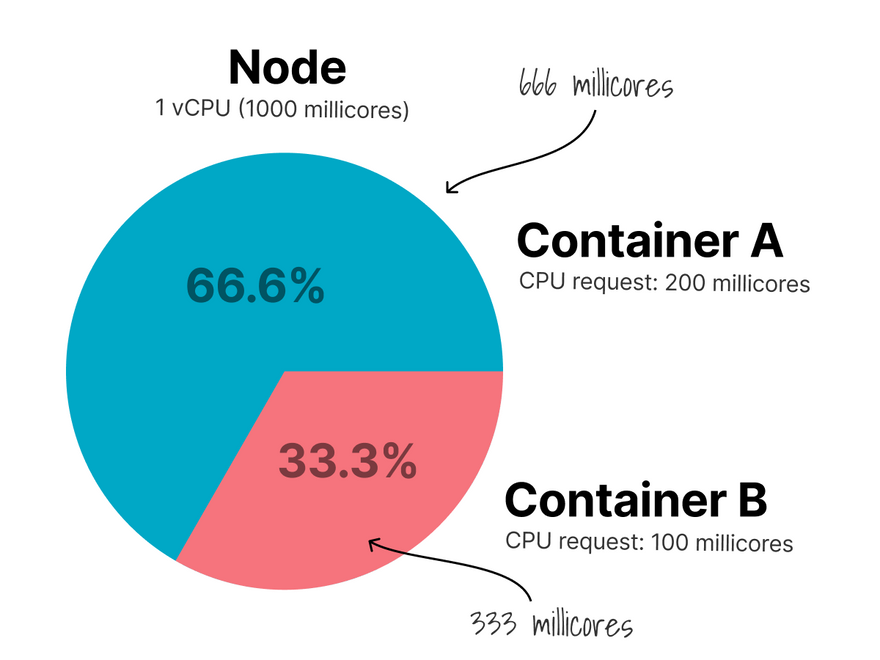

What happens when both containers try to use 100% of the available CPU?

Since CPU requests don't cap consumption, the two containers together will use all of the available CPU.

However, since container B's request is twice container A's, the CPU is split 1:2: container A gets roughly 0.33 vCPU and container B roughly 0.67 vCPU (double the amount).
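To make the arithmetic explicit, here is a small Python sketch. It assumes cgroup v1, where, to my understanding, the kubelet turns a request of N millicores into a `cpu.shares` weight of N * 1024 / 1000 (minimum 2); cgroup v2 uses a different weight scale, but the split stays proportional. Real CPU scheduling is only approximately proportional over short intervals. The function names are illustrative, not a Kubernetes API.

```python
def millicpu_to_shares(millicpu: int) -> int:
    """cgroup v1 style weight: 1 CPU = 1024 shares, with a minimum of 2 (assumption)."""
    return max(2, millicpu * 1024 // 1000)


def contended_split(requests_millicpu: dict[str, int], node_cpus: float) -> dict[str, float]:
    """Split the node's CPU among containers that all want 100%, proportionally to their weights."""
    weights = {name: millicpu_to_shares(m) for name, m in requests_millicpu.items()}
    total = sum(weights.values())
    return {name: node_cpus * w / total for name, w in weights.items()}


split = contended_split({"A": 100, "B": 200}, node_cpus=1.0)
print({k: round(v, 2) for k, v in split.items()})  # {'A': 0.33, 'B': 0.67}
```

Only the ratio between the requests matters here: 100m and 200m would yield the same split as 300m and 600m.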

Requests are suitable for:

- Setting a baseline (give me at least X amount of CPU).

- Setting relationships between pods (pod A gets twice as much CPU as pod B).

However, requests don't cap how much CPU a container can use.

For that, you need CPU limits.

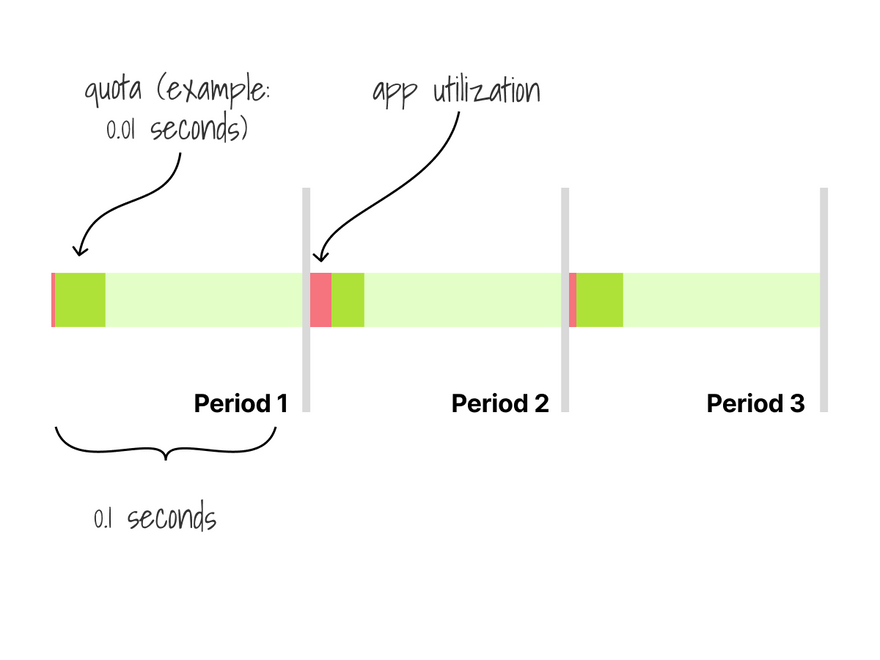

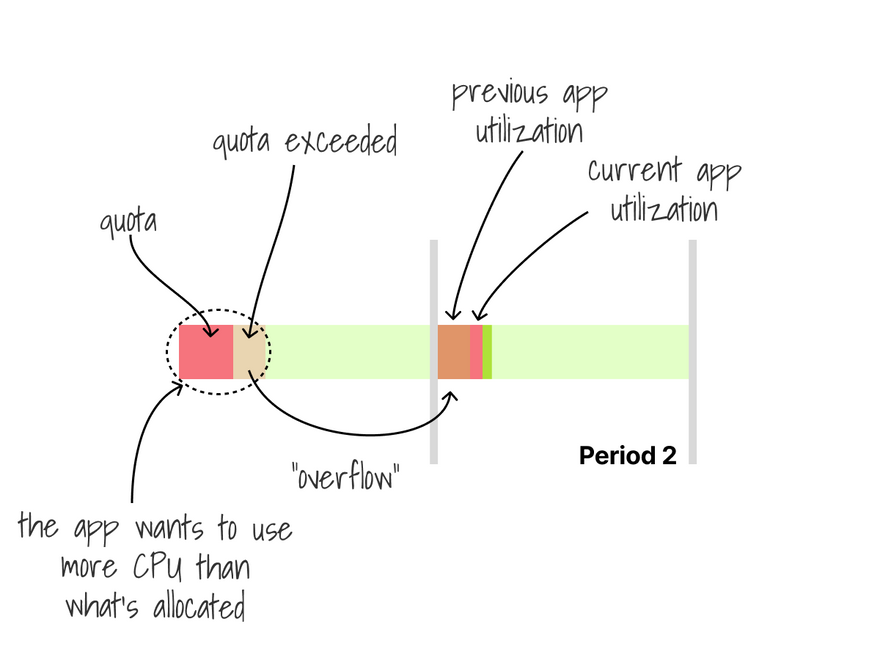

When you set a CPU limit, it is enforced by the Linux CFS bandwidth controller with two values: a period and a quota.

Example:

- period: 100000 microseconds (0.1s).

- quota: 10000 microseconds (0.01s).

The container can only use the CPU for 0.01 seconds every 0.1 seconds.

That's 10% of one CPU, which Kubernetes writes as "100m" (100 millicores).
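The conversion from a limit to a quota is a single multiplication. Here is a minimal sketch, assuming the default 100 ms period (the period is configurable on the kubelet). `limit_to_quota_us` is a hypothetical helper.

```python
CFS_PERIOD_US = 100_000  # assumed default CFS period: 100 ms


def limit_to_quota_us(limit_millicores: int, period_us: int = CFS_PERIOD_US) -> int:
    """CPU time (microseconds) a container may use per period for a limit in millicores."""
    return limit_millicores * period_us // 1000


print(limit_to_quota_us(100))   # 10000 -> 0.01 s of CPU every 0.1 s
print(limit_to_quota_us(1500))  # 150000 -> 1.5 CPUs' worth of time per period
```

A quota larger than the period is valid. It means a multi-threaded container can use more than one core in parallel during the period.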

If your container has a hard limit and wants more CPU, it has to wait for the next period.

Your process is throttled.
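To see what throttling costs in wall-clock time, here is a simplified model. It assumes a single-threaded task with no other contention that runs at the start of each period until its quota is spent, then waits for the next period. `wall_clock_ms` is a hypothetical helper, not a measurement of real kernel behaviour.

```python
import math


def wall_clock_ms(cpu_work_ms: float, quota_ms: float, period_ms: float = 100.0) -> float:
    """Elapsed time for a single-threaded task needing `cpu_work_ms` of CPU under a CFS quota."""
    if cpu_work_ms <= quota_ms:
        return cpu_work_ms  # fits inside the first period, never throttled
    # Every period except the last is spent running for `quota_ms`, then throttled.
    full_periods = math.ceil(cpu_work_ms / quota_ms) - 1
    remaining = cpu_work_ms - full_periods * quota_ms
    return full_periods * period_ms + remaining


print(wall_clock_ms(50, quota_ms=10))   # 410.0: 50 ms of work stretched across 5 periods
print(wall_clock_ms(50, quota_ms=100))  # 50: no throttling
```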

So what should you use as CPU requests and limits in your Pods?

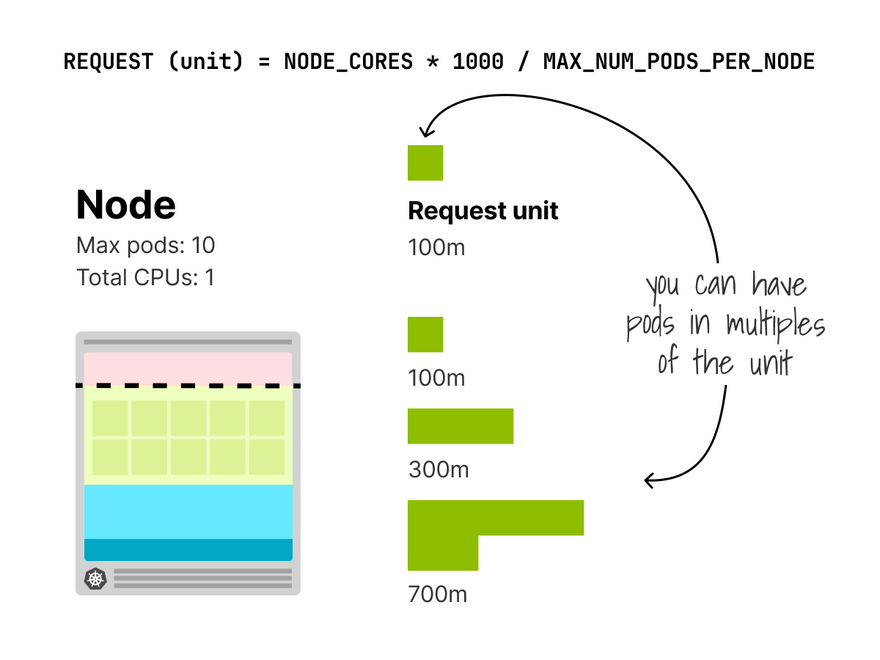

A simple (but not accurate) way is to calculate the smallest CPU unit as:

REQUEST = NODE_CORES * 1000 / MAX_NUM_PODS_PER_NODE

For a 1 vCPU node and a limit of 10 Pods, that's 1 * 1000 / 10 = 100m (100 millicores) as the request.
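The formula above translates directly into code. This sketch rounds down to whole millicores. Like the formula, it ignores CPU reserved for the system and for DaemonSets, so treat the result as an upper bound. The function name is hypothetical.

```python
def smallest_cpu_unit_millicores(node_cores: float, max_pods_per_node: int) -> int:
    """REQUEST = NODE_CORES * 1000 / MAX_NUM_PODS_PER_NODE, in millicores (rounded down)."""
    return int(node_cores * 1000 // max_pods_per_node)


unit = smallest_cpu_unit_millicores(1, 10)
print(f"{unit}m")  # 100m
```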

Assign the smallest unit, or a multiple of it, to your containers.

For example, if you don't know how much CPU Pod A needs, but you know that Pod B needs twice as much as Pod A, you could set:

- Request A: 1 unit

- Request B: 2 units

If both containers try to use 100% of the CPU, they share it according to their weights (1:2).
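Here is a sketch of turning relative weights into request values. It also checks that the total still fits on the node, which is the same constraint the scheduler applies to requests. The function and its arguments are hypothetical.

```python
def requests_from_weights(
    unit_millicores: int, weights: dict[str, int], node_millicores: int
) -> dict[str, str]:
    """Turn relative weights into Kubernetes CPU request strings (multiples of one unit)."""
    total = unit_millicores * sum(weights.values())
    if total > node_millicores:
        raise ValueError(f"requests total {total}m but the node only has {node_millicores}m")
    return {pod: f"{unit_millicores * w}m" for pod, w in weights.items()}


print(requests_from_weights(100, {"A": 1, "B": 2}, node_millicores=1000))
# {'A': '100m', 'B': '200m'}
```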

A better approach is to monitor the app and derive the average CPU utilization.

You can do this with your existing monitoring infrastructure or use the Vertical Pod Autoscaler (VPA), which observes usage and recommends request values.
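Once you have usage samples, deriving the request is a simple average. This sketch assumes you have already exported CPU usage samples in millicores from your monitoring system. The sample values and the function name are made up for illustration.

```python
import math
from statistics import mean


def suggested_request_millicores(usage_samples_millicores: list[int]) -> int:
    """Average observed CPU usage, rounded up to a whole millicore."""
    return math.ceil(mean(usage_samples_millicores))


# Hypothetical samples, e.g. one per minute over a representative window.
samples = [120, 180, 150, 150, 95, 205]
print(f"{suggested_request_millicores(samples)}m")  # 150m
```

The window matters more than the arithmetic: average over a period that includes your normal peaks, not just a quiet night.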

How should you set the limits?

- Your app might already have "hard" limits. (Node.js runs your JavaScript on a single thread, so it uses up to about 1 core even if you assign 2.)

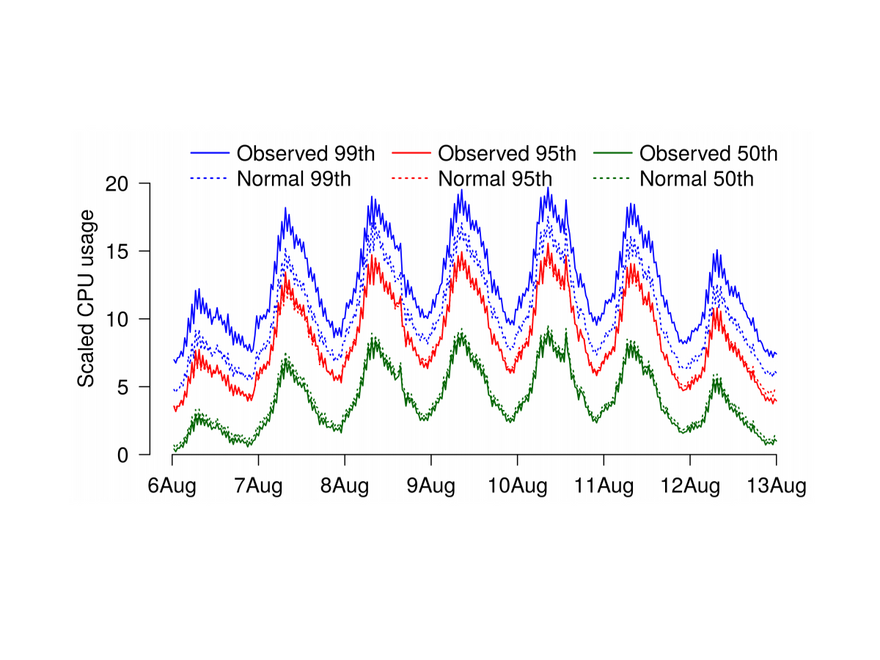

- You could start with: limit = 99th percentile of CPU usage + 30-50%.
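Here is a sketch of the "99th percentile + 30-50%" rule. It uses the nearest-rank percentile for simplicity; your monitoring system may interpolate differently. Integer arithmetic is used throughout to avoid floating-point rounding surprises. The names and sample data are hypothetical.

```python
def percentile_nearest_rank(samples: list[int], pct: int) -> int:
    """Nearest-rank percentile: the smallest sample >= pct% of all samples."""
    ordered = sorted(samples)
    rank = -(-pct * len(ordered) // 100)  # integer ceil(pct * n / 100)
    return ordered[max(rank, 1) - 1]


def suggested_limit_millicores(samples: list[int], headroom_pct: int = 30) -> int:
    """p99 of observed usage plus a headroom percentage, rounded up."""
    p99 = percentile_nearest_rank(samples, 99)
    return (p99 * (100 + headroom_pct) + 99) // 100  # integer ceil


# 100 hypothetical samples: steady 200m with a single 900m spike.
usage = [200] * 99 + [900]
print(suggested_limit_millicores(usage, 30))  # 260
print(suggested_limit_millicores(usage, 50))  # 300
```

The single spike falls above the 99th percentile, so it doesn't inflate the limit. Whether that is acceptable depends on how much throttling your app can tolerate during such spikes.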

You should profile the app (or use the VPA) for a more detailed answer.

Should you always set the CPU request?

Absolutely, yes.

This is a standard good practice in Kubernetes and helps the scheduler allocate pods more efficiently.

Should you always set the CPU limit?

This is a bit more controversial, but, in general, I think so.

You can find a deeper dive here: https://dnastacio.medium.com/why-you-should-keep-using-cpu-limits-on-kubernetes-60c4e50dfc61

Also, if you want to dig deeper, here are a few relevant links:

- https://learnk8s.io/setting-cpu-memory-limits-requests

- https://medium.com/@betz.mark/understanding-resource-limits-in-kubernetes-cpu-time-9eff74d3161b

- https://nodramadevops.com/2019/10/docker-cpu-resource-limits/

And finally, if you've enjoyed this thread, you might also like:

- The Kubernetes workshops that we run at Learnk8s https://learnk8s.io/training

- This collection of past threads https://twitter.com/danielepolencic/status/1298543151901155330

- The Kubernetes newsletter I publish every week https://learnk8s.io/learn-kubernetes-weekly
