Tigera outlines the following benefits of enabling the eBPF dataplane:

The eBPF dataplane mode has several advantages over standard Linux networking pipeline mode:

- It scales to higher throughput.
- It uses less CPU per GBit.
- It has native support for Kubernetes services (without needing kube-proxy) that:
  - Reduces first packet latency for packets to services.
  - Preserves external client source IP addresses all the way to the pod.
  - Supports DSR (Direct Server Return) for more efficient service routing.
  - Uses less CPU than kube-proxy to keep the dataplane in sync.

To learn more and see performance metrics from our test environment, see the blog, Introducing the Calico eBPF dataplane.

As I've written about before, RKE2 can be deployed with Cilium's kube-proxy replacement, and it can also be enabled on RKE2 after installation with a couple of additional changes to your Rancher cluster config.

Note: For existing clusters, you can skip the parts below about RKE2 provisioning and logging into your control plane, and proceed directly to the Calico patching process. You can apply the ConfigMap below through Rancher, via kubectl, or however you normally apply manifests.

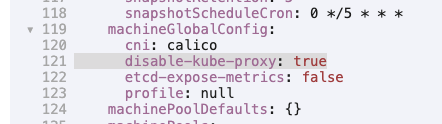

In Rancher, when you provision your cluster, ensure that disable-kube-proxy is set to true when your cni is set to calico:
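For reference, the same two settings can be written out as text. The sketch below saves a fragment to a scratch file so it can be compared against the cluster YAML; it assumes the Rancher v2 provisioning layout, where RKE2 server options sit under `spec.rkeConfig.machineGlobalConfig`, and the file name is only illustrative. Check the keys against your own Rancher version before relying on them.

```shell
# Write an illustrative fragment of the Rancher cluster YAML to a scratch file.
# Assumption: RKE2 options live under spec.rkeConfig.machineGlobalConfig.
# The file name is arbitrary and not used by Rancher or RKE2.
cat > rke2-calico-ebpf-fragment.yaml <<'EOF'
spec:
  rkeConfig:
    machineGlobalConfig:
      cni: calico
      disable-kube-proxy: true
EOF

# Show the fragment for review.
cat rke2-calico-ebpf-fragment.yaml
```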

Keep in mind that the cluster will not read "Ready" in the UI until you complete this process, because Calico's readiness probe will not report ready to Rancher until the steps below are done.

On the first Control Plane node, set your PATH and KUBECONFIG variables:

    export PATH=$PATH:/var/lib/rancher/rke2/bin
    export KUBECONFIG=/etc/rancher/rke2/rke2.yaml

Then apply the following ConfigMap:

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: kubernetes-services-endpoint
      namespace: tigera-operator
    data:
      # Replace ${KUBERNETES_VIP} with the address of your Kubernetes API server
      KUBERNETES_SERVICE_HOST: '${KUBERNETES_VIP}'
      KUBERNETES_SERVICE_PORT: '6443'
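The `${KUBERNETES_VIP}` placeholder has to be filled in with your own API server address before the ConfigMap is applied. One way to do that, sketched here, is to render the manifest from a shell variable. The address `192.0.2.10` is a documentation-range placeholder and the file name is arbitrary; neither comes from a real cluster.

```shell
# Address of the Kubernetes API server (VIP or load balancer).
# 192.0.2.10 is a placeholder from the documentation range; set your own value.
KUBERNETES_VIP="${KUBERNETES_VIP:-192.0.2.10}"

# Render the ConfigMap with the address substituted. The heredoc delimiter is
# left unquoted on purpose so the shell expands ${KUBERNETES_VIP}.
cat > kubernetes-services-endpoint.yaml <<EOF
kind: ConfigMap
apiVersion: v1
metadata:
  name: kubernetes-services-endpoint
  namespace: tigera-operator
data:
  KUBERNETES_SERVICE_HOST: '${KUBERNETES_VIP}'
  KUBERNETES_SERVICE_PORT: '6443'
EOF

# Review the rendered manifest before applying it.
cat kubernetes-services-endpoint.yaml
```

The rendered file can then be applied with `kubectl apply -f kubernetes-services-endpoint.yaml`.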

Once the ConfigMap is applied, the Calico operator will restart the Pods:

    watch kubectl get pods -n calico-system

You can then patch the operator's Installation resource from this node to enable the eBPF dataplane:

    kubectl patch installation.operator.tigera.io default --type merge -p '{"spec":{"calicoNetwork":{"linuxDataplane":"BPF"}}}'
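To confirm the patch took effect, you can read the field back from the Installation resource. The helper below is a small sketch; the function name `dataplane_mode` is made up for this example, while the resource name and field path are the ones used in the patch command.

```shell
# Print the dataplane mode currently set on the operator's Installation resource.
# dataplane_mode is a hypothetical helper name, not part of kubectl or Calico.
dataplane_mode() {
  kubectl get installation.operator.tigera.io default \
    -o jsonpath='{.spec.calicoNetwork.linuxDataplane}'
}

# Example use (requires access to the cluster):
#   [ "$(dataplane_mode)" = "BPF" ] && echo "eBPF dataplane is enabled"
```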

Once the patch is applied, you will see the Calico readiness probe for this node clear in Rancher, and provisioning will begin for the remaining nodes, since Calico is now ready and no longer expects kube-proxy to come online.

More on the Calico eBPF dataplane can be found here.
