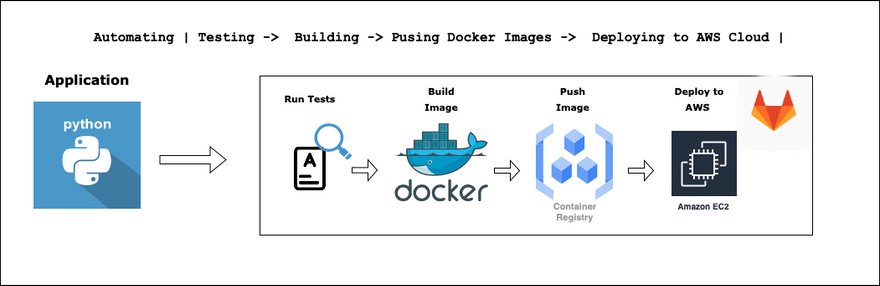

This blog covers fundamental DevOps practices for automating continuous integration and continuous deployment (CI/CD) using GitLab.

This is a beginner-friendly blog that assumes you know nothing about GitLab or CI/CD.

Outcome of the blog

By the end of this blog, you will be able to confidently automate three major components of any CI/CD pipeline:

- Running test cases

- Building a Docker image

- Deploying it to infrastructure

Bonus: pushing the Docker image to a registry

Motivation

The idea for this blog comes from my favourite DevOps teacher, Nana Janashia, who makes it so easy to learn and practice DevOps skills. Subscribe to her YouTube channel to become a better DevOps engineer and stay up to date with the industry.

Prerequisites

I mentioned earlier that this is a beginner-friendly blog, but a few basics will really help you understand these concepts. (Don't worry if you don't know them; I will post relevant links to brush up wherever needed :D)

- Git

- Docker

- Linux

- AWS Account

Let's Build the pipeline

To build the pipeline, clone this GitLab repository, which contains a sample Python application, to your local environment. You can also fork it and edit the configuration in GitLab's pipeline editor, but it's advisable to work in a local editor like VS Code.

- GitLab repository that hosts the application code and pipeline configuration

1. Test and run the application locally

As a DevOps engineer, you don't need to know every detail of the application code, but you do need to know how to run and test the application. Because this depends on the application, ask your dev team how to run and test it locally.

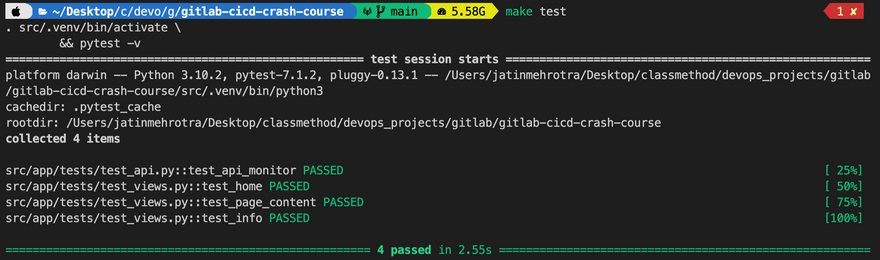

For this example application, we run the tests with the following command:

```shell
make test
```

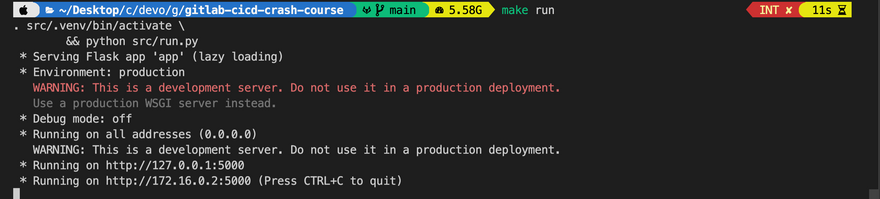

To run the application locally, we use the following command:

```shell
make run
```

Key Points to Note

- You need to find out from the dev team how to run and test the application.

- We use the `make` command because this Python project defines its tasks in a Makefile. A Node.js-based application might use the `npm` command instead. The required commands must therefore be available wherever the application runs, including the pipeline.
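To make this concrete, here is how a test job might look in the `.gitlab-ci.yml` file from step 3 if the project were a hypothetical Node.js application instead of this Python one. This sketch assumes the project defines a `test` script in `package.json` and commits a `package-lock.json`, which `npm ci` requires. The job name and the `node:18` image are illustrative choices and are not part of this blog's repository.

```yaml
# Hypothetical test job for a Node.js project (not part of this blog's repository)
run_tests_node:
  image: node:18          # image that already ships node and npm
  script:
    - npm ci              # install the exact dependency versions from package-lock.json
    - npm test            # run the "test" script defined in package.json
```

The job has the same shape as the Python job later in this blog. Only the image and the commands change, and those are the details you learn from the dev team.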

2. GitLab CI/CD internals

GitLab is a single platform that covers most of the DevOps lifecycle: planning, CI/CD, and deployment to any cloud. Having one interface for the whole SDLC makes it very efficient to work with.

Nana Janashia gives a great explanation of GitLab's architecture in this video. I will still summarize my understanding of it here so the rest of the blog is easy to follow.

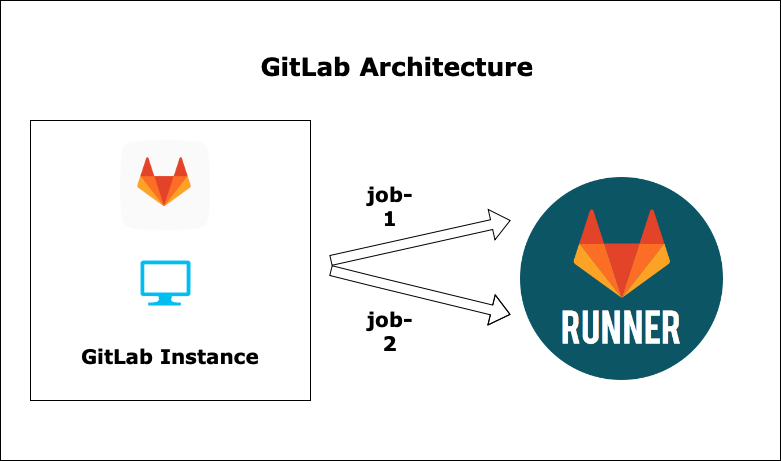

GitLab has two main components:

- GitLab instance/server (managed or self-managed): hosts the application code and pipeline configuration.

- GitLab Runners (managed or self-managed): agents connected to the GitLab server that run your CI/CD jobs. Runners run on machines separate from the GitLab instance (Linux, Windows, etc.).

For this blog, we use the managed components because they are easier to set up and operate.
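Managed runners need no configuration. If you later register your own self-managed runner, a common way to route a job to it is through runner tags: a runner picks up jobs whose `tags` match the tags it was registered with. Here is a minimal sketch, where `my-linux-runner` is a hypothetical tag name:

```yaml
run_tests:
  tags:
    - my-linux-runner     # hypothetical tag assigned when the self-managed runner was registered
  script:
    - make test
```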

3. Let's build our CI/CD pipeline

To create a pipeline, GitLab requires a file named `.gitlab-ci.yml` in the root of the Git repository. Inside this file, commands are written as scripts and grouped into jobs. For example, running tests, building Docker images, and deploying could be three different jobs.
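Here is a minimal sketch of that structure. Placeholder `echo` commands stand in for the real work. The job names match the ones used later in this blog, but the commands are only placeholders.

```yaml
# .gitlab-ci.yml in the repository root: each top-level key below is a job
run_tests:
  script:                 # the commands this job runs, in order
    - echo "run the test suite"

build_image:
  script:
    - echo "build the Docker image"

deploy:
  script:
    - echo "deploy the application"
```

Without further configuration, these three jobs run in parallel. Step 5 shows how to order them.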

So far, we know that a pipeline contains jobs, each job performs a task, and each job has a script made up of commands. The immediate question is: where are these commands executed?

Commands are executed in the GitLab Runner's execution environment, called an executor. Two common kinds of executor are:

- Shell executor: GitLab Runner is installed directly on an OS such as Linux or Windows, and commands run in that machine's shell.

- Docker executor: instead of running commands directly on the host, GitLab Runner runs each job in a separate, isolated Docker container on a Linux machine.

GitLab's managed runners use Docker containers as the default execution environment, with a Ruby image as the default image. We can choose any Docker image we like, depending on the job and use case.
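You can make the default explicit by setting an image for every job under the top-level `default` keyword and overriding it per job. This sketch uses the images from this blog. The two job names are hypothetical.

```yaml
default:
  image: python:3.9-slim-buster   # used by every job that doesn't set its own image

check_python:
  script:
    - python --version             # runs inside python:3.9-slim-buster

check_docker_client:
  image: docker:20.10.17           # a job-level image overrides the default
  script:
    - docker --version             # prints the client version; no daemon is needed for this
```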

To run our tests in the pipeline, we need `make`, `pip`, and `python` available in the execution environment. For that, we use the Python Docker image `python:3.9-slim-buster`.

Here is the job definition:

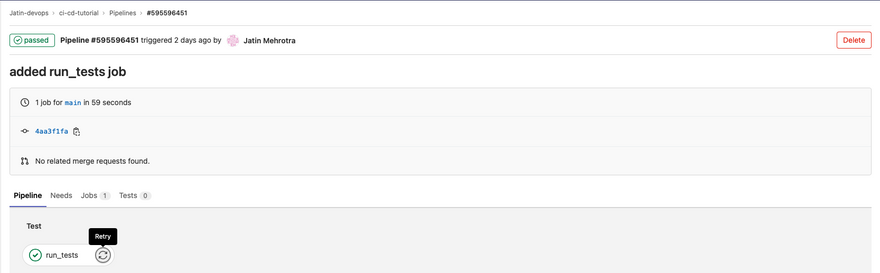

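```yaml
run_tests:
  image: python:3.9-slim-buster
  before_script:
    # -y answers apt-get's confirmation prompt, since a CI job cannot respond interactively
    - apt-get update && apt-get install -y make
  script:
    - make test
```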

Semantics:

- `run_tests`: name of the job.

- `image`: Docker image in which the commands run.

- `script`: commands to be executed.

- `before_script`: commands that run before the `script` commands, typically setup steps. In this case, we need to install `make` to run our tests.

Commit your changes and push them to the remote repository. GitLab automatically triggers the pipeline (quite easy!).

4. Building docker image of our Application

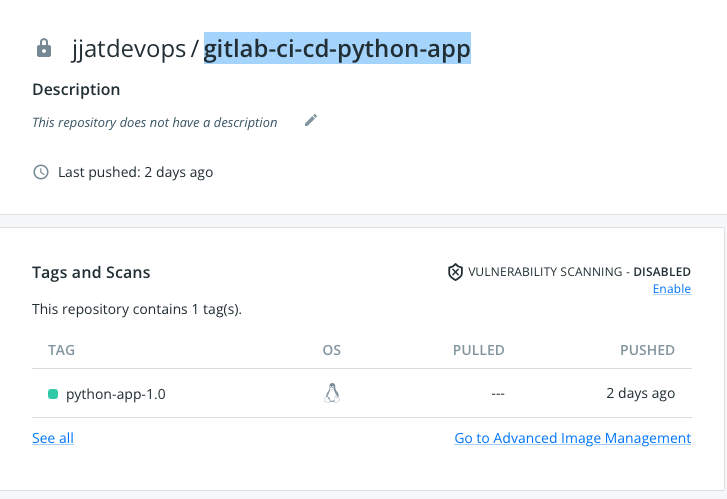

We want to deploy this application to AWS on ec2 as a docker container, for that we need to make a docker image out of this application and push it to the docker registry. For registry we are going to use dockerhub, aws ecr can also be used for this purpose.

Here is the job definition for building a docker image

```yaml
variables:
  IMAGE_NAME: jjatdevops/gitlab-ci-cd-python-app
  IMAGE_TAG: python-app-1.0

build_image:
  image: docker:20.10.17
  services:
    - docker:20.10.17-dind
  variables:
    DOCKER_TLS_CERTDIR: "/certs"
  before_script:
    - docker login -u $REGISTRY_USER -p $REGISTRY_PASS
  script:
    - docker build -t $IMAGE_NAME:$IMAGE_TAG .
    - docker push $IMAGE_NAME:$IMAGE_TAG
```

- In this job, we use variables and Docker-in-Docker (dind).

- Variables can be defined in three places:
  - At the job level, inside a job. These are accessible only to that job.
  - At the top level of `.gitlab-ci.yml`, like `IMAGE_NAME` and `IMAGE_TAG` here. These are accessible to all jobs.
  - In the GitLab UI under Settings > CI/CD > Variables, like `$REGISTRY_USER` and `$REGISTRY_PASS`. UI variables defined at the group level are shared across all projects (repositories) in that group.
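Here are the three scopes side by side, as a sketch. `print_variables` is a hypothetical job. `REGISTRY_USER` is assumed to be defined in the GitLab UI under Settings > CI/CD > Variables, as in this blog.

```yaml
variables:
  IMAGE_TAG: python-app-1.0        # top level: visible to every job in this file

print_variables:
  variables:
    DOCKER_TLS_CERTDIR: "/certs"   # job level: visible only inside this job
  script:
    - echo "$IMAGE_TAG"
    - echo "$DOCKER_TLS_CERTDIR"
    # not defined in this file: injected from the project or group CI/CD settings
    - echo "$REGISTRY_USER"
```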

To build Docker images from a job that itself runs inside a Docker container, we need Docker-in-Docker (dind). GitLab's managed runners already support this; self-managed runners need privileged mode enabled.

The `docker:20.10.17` image provides the Docker client, which builds the image and pushes it to the registry. The client also needs a Docker daemon, the background process that actually executes its commands. The `docker:20.10.17-dind` service container provides the daemon.

Semantics:

- `services`: additional containers that run alongside the job container, on the same network, when the job needs another container to do its work. This is much faster than installing the dependency inside the job container.

- `DOCKER_TLS_CERTDIR`: tells the dind service where to generate TLS certificates so the client and daemon can authenticate each other, as described in the GitLab docs (search for TLS on the page).

- `docker login` and `docker push`: the job first logs in to the Docker Hub registry (the repository is private), then pushes the image to it.
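`docker login -p` puts the password on the command line, where it can show up in process listings, and the Docker CLI prints a warning about this. A common alternative is to pipe the password in through `--password-stdin`, as in the sketch below. Marking `REGISTRY_PASS` as *Masked* in the CI/CD variable settings also keeps it out of the job log. Only `before_script` differs from the job above:

```yaml
build_image:
  image: docker:20.10.17
  services:
    - docker:20.10.17-dind
  variables:
    DOCKER_TLS_CERTDIR: "/certs"
  before_script:
    # read the password from stdin instead of passing it as a -p argument
    - echo "$REGISTRY_PASS" | docker login -u "$REGISTRY_USER" --password-stdin
  script:
    - docker build -t $IMAGE_NAME:$IMAGE_TAG .
    - docker push $IMAGE_NAME:$IMAGE_TAG
```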

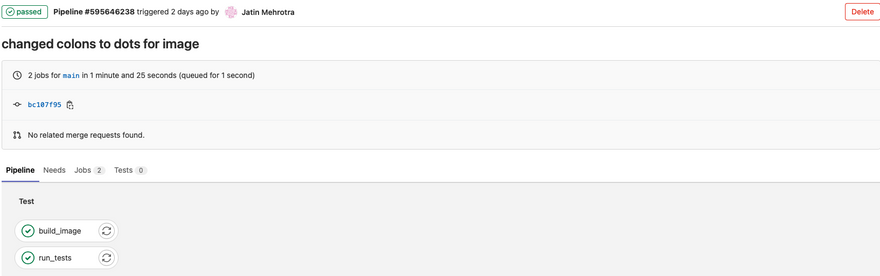

- After you commit and push, GitLab automatically triggers the pipeline.

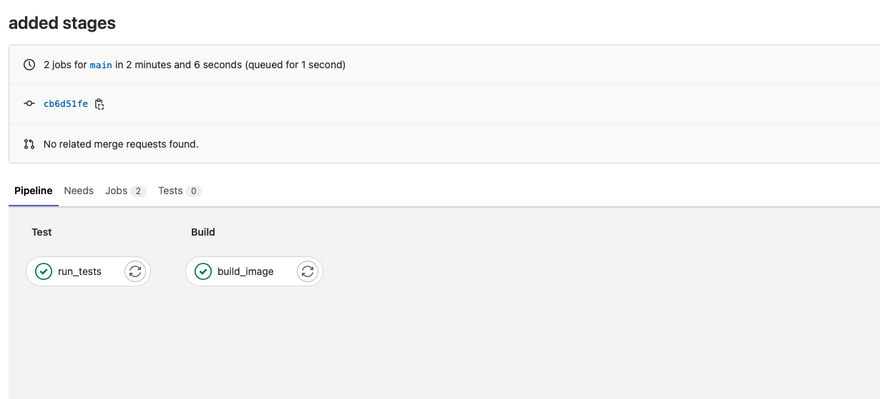

5. Introducing stages to the pipeline

So far, whenever we push, the `run_tests` and `build_image` jobs run in parallel, because jobs without an explicit stage all default to the `test` stage. In most cases, you want jobs to run sequentially: build the Docker image only if the test job succeeds.

In GitLab, sequential execution is achieved using stages.

Stages group related jobs, and the stages run in the order they are defined.

Here is the pipeline definition after introducing stages:

```yaml
# top-level variables (IMAGE_NAME, IMAGE_TAG) from step 4 stay as they were
stages:
  - test
  - build

run_tests:
  stage: test
  image: python:3.9-slim-buster
  before_script:
    - apt-get update && apt-get install -y make
  script:
    - make test

build_image:
  stage: build
  image: docker:20.10.17
  services:
    - docker:20.10.17-dind
  variables:
    DOCKER_TLS_CERTDIR: "/certs"
  before_script:
    - docker login -u $REGISTRY_USER -p $REGISTRY_PASS
  script:
    - docker build -t $IMAGE_NAME:$IMAGE_TAG .
    - docker push $IMAGE_NAME:$IMAGE_TAG
```

- All jobs in the `test` stage execute in parallel (here we have a single job).

- The jobs in the `build` stage execute, again in parallel, only if all jobs in the `test` stage succeed.
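To see parallel execution within a stage, here is a sketch that adds a second, hypothetical job, `check_syntax`, to the `test` stage. It byte-compiles the Python sources with the standard library's `compileall` module as a quick syntax check. `build_image` is abridged here (login and push omitted), and it starts only after both test jobs succeed.

```yaml
stages:
  - test
  - build

run_tests:
  stage: test
  image: python:3.9-slim-buster
  before_script:
    - apt-get update && apt-get install -y make
  script:
    - make test

check_syntax:                      # hypothetical job; runs in parallel with run_tests
  stage: test
  image: python:3.9-slim-buster
  script:
    - python -m compileall -q .    # exits non-zero if any .py file fails to compile

build_image:                       # abridged: IMAGE_NAME/IMAGE_TAG come from the top-level variables
  stage: build                     # waits until every job in the test stage has succeeded
  image: docker:20.10.17
  services:
    - docker:20.10.17-dind
  variables:
    DOCKER_TLS_CERTDIR: "/certs"
  script:
    - docker build -t $IMAGE_NAME:$IMAGE_TAG .
```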

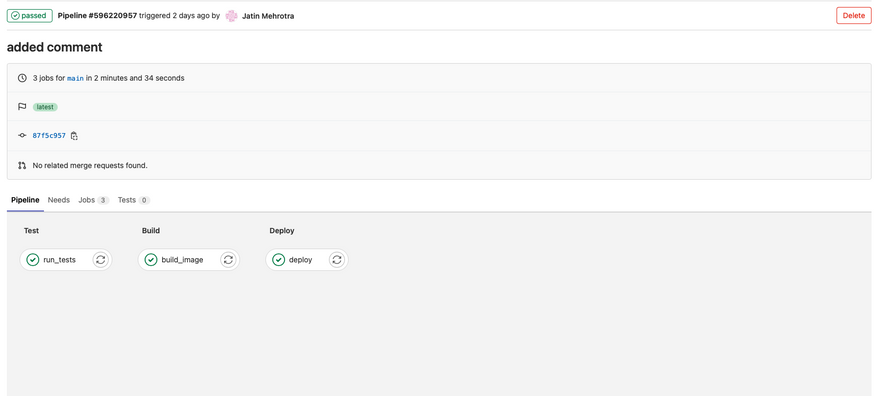

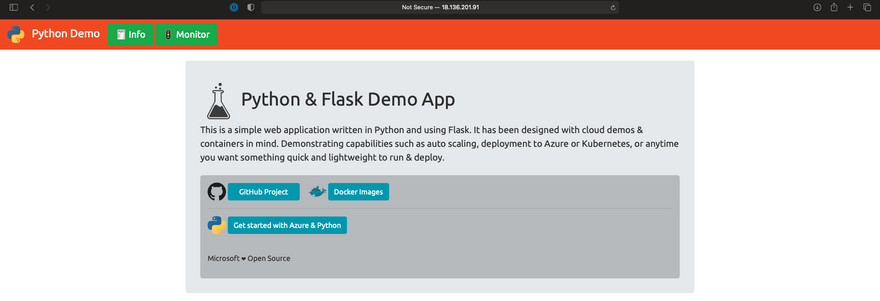

6. Deploying to an EC2 instance

In the final step of the pipeline configuration, we run the Docker image pushed to Docker Hub on an EC2 instance in the AWS cloud.

We manually launch an EC2 instance with a new EC2 key pair and a security group that allows inbound traffic on port 5000 (the application's port) as well as SSH on port 22.

After the instance starts, we need to prepare it to run Docker containers.

- SSH into the EC2 instance:

```shell
ssh -i "your-ssh-key.pem" ec2-user@ec2-your-ip-address.ap-southeast-1.compute.amazonaws.com
```

- Install Docker:

```shell
sudo yum install docker
```

- Add the default `ec2-user` to the `docker` group so you can run Docker commands without `sudo`. Log out and back in for the group change to take effect.

```shell
sudo usermod -a -G docker ec2-user
```

- Enable the Docker service at boot time:

```shell
sudo systemctl enable docker.service
```

- Start the Docker service:

```shell
sudo systemctl start docker.service
```
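Repeating these steps by hand for every new instance gets tedious. One way to automate them is to pass the same commands as EC2 user data in cloud-init's `#cloud-config` format when launching the instance. This sketch assumes an Amazon Linux AMI, which ships with cloud-init, and assumes its yum repositories provide the `docker` package, as the manual `yum install docker` step above already does.

```yaml
#cloud-config
# EC2 user data: cloud-init applies this once, as root, on the instance's first boot
packages:
  - docker                            # same package as `sudo yum install docker`
runcmd:
  - usermod -a -G docker ec2-user     # let ec2-user run docker without sudo
  - systemctl enable --now docker     # enable at boot and start immediately
```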

We add `SSH_KEY` as a CI/CD variable of type File in GitLab (Settings > CI/CD > Variables). Its value is the contents of the private key from the key pair used when launching the EC2 instance.

Note: for a File variable, GitLab writes the value to a temporary file, and `$SSH_KEY` holds the path to that file.
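Because `SSH_KEY` is a File variable, `$SSH_KEY` expands to the path of the temporary file rather than to the key text. That is why the deploy job passes it to `chmod` and `ssh -i`. The throwaway job below is hypothetical and meant only for inspection. It shows this without printing the key itself.

```yaml
inspect_ssh_key:                  # hypothetical debugging job; delete it after use
  script:
    - echo "$SSH_KEY"             # prints the temporary file's path, not its contents
    - ls -l "$SSH_KEY"            # shows that the file exists and its permissions
```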

Here is the job definition for the deploy step:

```yaml
deploy:
  stage: deploy
  before_script:
    - chmod 400 $SSH_KEY
  script: ssh -o StrictHostKeyChecking=no -i $SSH_KEY ec2-user@ec2-3-1-100-185.ap-southeast-1.compute.amazonaws.com "
    docker login -u $REGISTRY_USER -p $REGISTRY_PASS &&
    docker ps -aq | xargs docker stop | xargs docker rm || true &&
    docker run -d -p 5000:5000 $IMAGE_NAME:$IMAGE_TAG"
```

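Semantics:

- `ssh -o StrictHostKeyChecking=no -i $SSH_KEY ec2-user@ec2-3-1-100-185.ap-southeast-1.compute.amazonaws.com`: connects to the EC2 instance without the interactive host-key confirmation and runs the quoted commands there.

- `docker login -u $REGISTRY_USER -p $REGISTRY_PASS`: logs in to Docker Hub so the private image can be pulled.

- `docker ps -aq | xargs docker stop | xargs docker rm || true`: stops and removes any existing containers so port 5000 is free. `|| true` keeps the script going when there is nothing to remove.

- `docker run -d -p 5000:5000 $IMAGE_NAME:$IMAGE_TAG`: runs the image in the background, mapping port 5000 on the instance to port 5000 in the container.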
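Three details in this job could be tightened:

- The `stages:` list from step 5 names only `test` and `build`. `deploy` has to be added to it before GitLab will accept a job with `stage: deploy`.
- The image tag stays `python-app-1.0` on every push, so `docker run` on the instance would keep using a previously cached copy unless the image is pulled first.
- Using `xargs -r` (GNU xargs, as on Amazon Linux) skips `docker rm` when there are no containers. This removes the need for `|| true`, which also hid a failed `docker login`.

The sketch below handles all three. `EC2_HOST` is a hypothetical CI/CD variable holding the instance's public DNS name. The `run_tests` and `build_image` jobs stay unchanged and are omitted.

```yaml
stages:
  - test
  - build
  - deploy                          # every stage a job uses must be listed here

deploy:
  stage: deploy
  before_script:
    - chmod 400 "$SSH_KEY"
  script:
    # variables inside the double quotes are expanded by the CI job shell before ssh sends them
    - |
      ssh -o StrictHostKeyChecking=no -i "$SSH_KEY" "ec2-user@$EC2_HOST" "
        docker login -u $REGISTRY_USER -p $REGISTRY_PASS &&
        docker pull $IMAGE_NAME:$IMAGE_TAG &&
        docker ps -aq | xargs -r docker rm -f &&
        docker run -d -p 5000:5000 $IMAGE_NAME:$IMAGE_TAG"
```

Any failing step now stops the chain, so a bad login or pull fails the job instead of being silently ignored.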

From a DevOps Perspective

We have automated several SDLC processes: running tests, building Docker images, pushing them to a container registry, and deploying to the cloud.

With this knowledge, you can implement a CI/CD pipeline for other kinds of applications too, whether Java-based or Node.js-based.

_We did achieve automation; however, some steps, like creating the EC2 instance, are still manual. They can be automated further using Terraform, and guess what: that will be part 2 of this blog._

Note: GitLab offers many more CI/CD features. Feel free to check out Nana's "Dive deeper in GitLab" course to become an expert at GitLab.

Until then, Happy Learning!!!

Feel free to post any comments or questions.

Top comments (4)

@jatin, firstly, thanks so much for writing this intro. Secondly, if you would like to share this (or other posts) with your LinkedIn followers, you can do so using the three dots (

...) underneath the reaction buttons on the left of the post:Thank you so much @ellativity for the explanation.

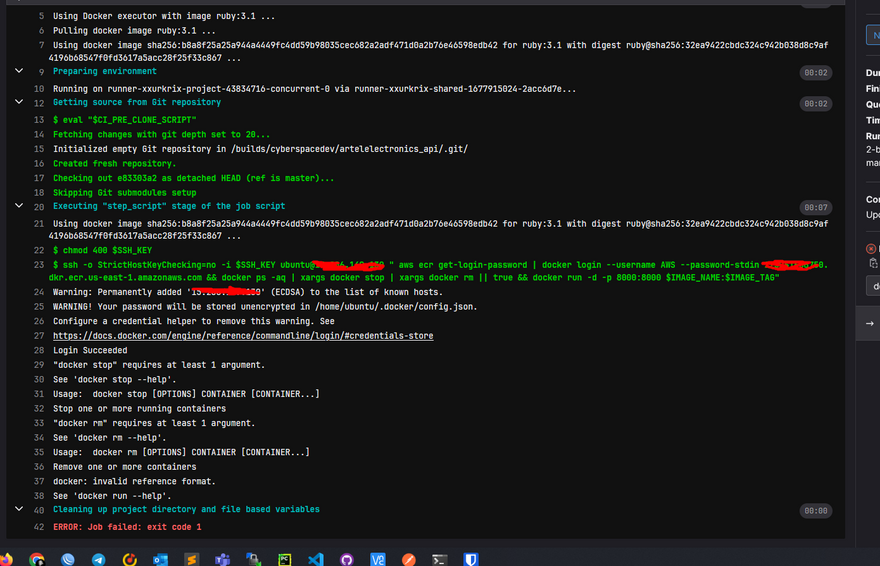

Hello, My my name is Shakhzod. I'm junior DevOps.

I have a problem with deploy stage my project.

When deploying to EC2 from AWS ECR i have problem like this. How can I solve this?

Hey, thank you for trying this blog. Really appreciate it.

Sorry for the late reply. looks like xargs has a problem.