How does Pod to Pod communication work in Kubernetes?

How does the traffic reach the pod?

In this article, you will dive into how low-level networking works in Kubernetes.

Let's start by focusing on the pod and node networking.

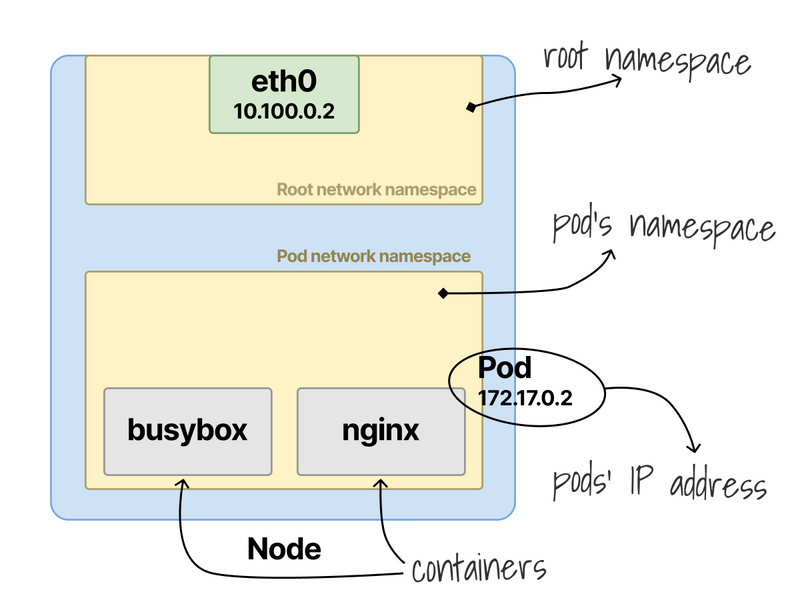

When you deploy a Pod, the following things happen:

- The pod gets its own network namespace.

- An IP address is assigned.

- All containers in the pod share the same network namespace and can reach each other on localhost.
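If it helps to see the consequence of sharing a namespace, here is a toy Python model. It is only a sketch: the class `PodNetworkNamespace`, the IP address and the container names are made up for illustration, and nothing here talks to a real kernel. It shows that containers in one pod share a single port table, so a sibling is reachable on localhost and two containers cannot bind the same port.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PodNetworkNamespace:
    """Toy model of the one network namespace shared by a pod's containers."""

    pod_ip: str  # hypothetical address assigned to the pod
    # port -> container name; a single port table for the whole pod
    listeners: Dict[int, str] = field(default_factory=dict)

    def listen(self, container: str, port: int) -> None:
        # All containers share one port space, so a second bind on the
        # same port fails, just like two processes on one host.
        if port in self.listeners:
            raise OSError(f"port {port} already in use by {self.listeners[port]}")
        self.listeners[port] = container

    def connect_localhost(self, port: int) -> str:
        # Any container in the pod reaches a sibling's listener via localhost.
        if port not in self.listeners:
            raise ConnectionRefusedError(f"nothing listens on localhost:{port}")
        return self.listeners[port]
```

For example, after `ns.listen("app", 8080)`, a sidecar calling `ns.connect_localhost(8080)` gets `"app"`, while `ns.listen("sidecar", 8080)` raises `OSError`.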

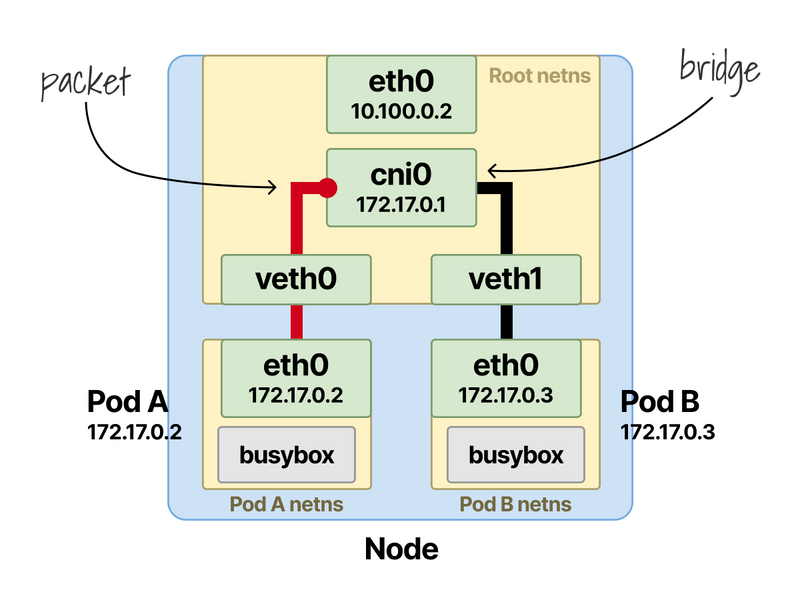

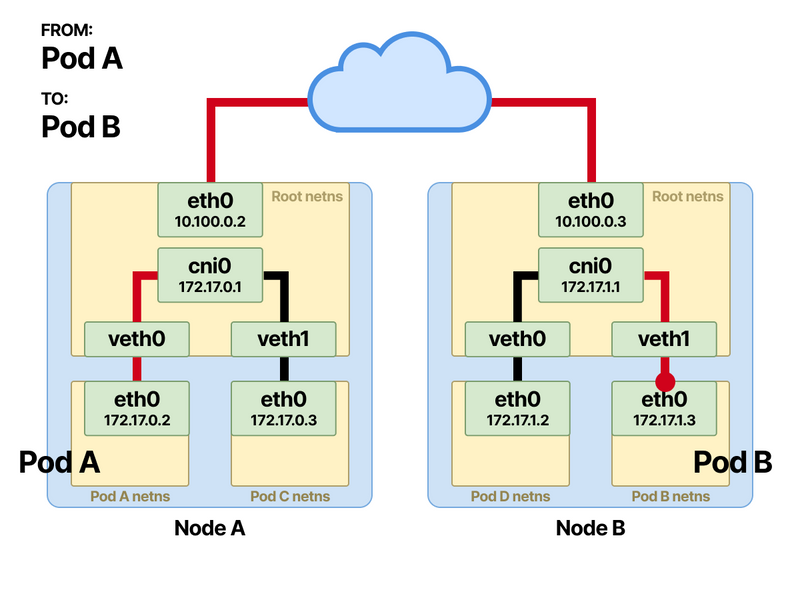

A pod must first have access to the node's root namespace to reach other pods.

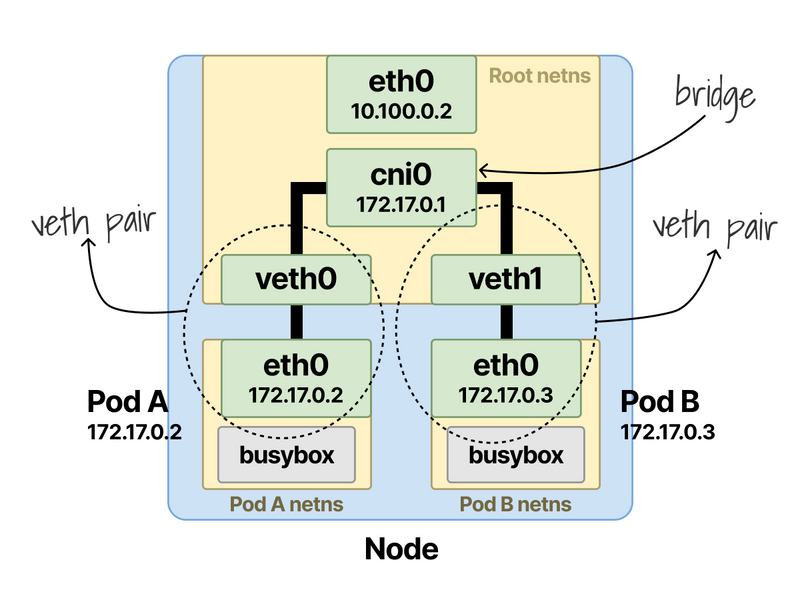

This is achieved using a virtual Ethernet (veth) pair that connects the two namespaces: pod and root.

On the root side, each veth pair is attached to a bridge, which lets traffic flow between the pairs through the common root namespace.
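To make the wiring concrete, the sketch below builds the `ip` (iproute2) commands you could run by hand to reproduce it. It is an approximation under assumptions: in a real cluster the container runtime and CNI plugin do this for you, the names `pod-a`, `veth-a`, `veth-a-pod` and `cni0` are hypothetical, and the functions only return strings (running the commands needs root). Real runtimes usually name the pod end `eth0`; a different name is used here so it does not clash with the node's own `eth0` while it is still in the root namespace.

```python
from typing import List


def bridge_commands(bridge: str) -> List[str]:
    """Commands that create the shared bridge in the root namespace (once per node)."""
    return [
        f"ip link add name {bridge} type bridge",
        f"ip link set {bridge} up",
    ]


def pod_wiring_commands(netns: str, host_veth: str, pod_veth: str,
                        pod_cidr: str, bridge: str) -> List[str]:
    """Commands that give one 'pod' namespace a veth pair attached to the bridge."""
    return [
        # 1. The pod gets its own network namespace.
        f"ip netns add {netns}",
        # 2. Create the veth pair; both ends start in the root namespace.
        f"ip link add {host_veth} type veth peer name {pod_veth}",
        # 3. Move one end into the pod namespace.
        f"ip link set {pod_veth} netns {netns}",
        # 4. Assign the pod IP and bring the pod end up.
        f"ip netns exec {netns} ip addr add {pod_cidr} dev {pod_veth}",
        f"ip netns exec {netns} ip link set {pod_veth} up",
        # 5. Attach the root end to the bridge and bring it up.
        f"ip link set {host_veth} master {bridge}",
        f"ip link set {host_veth} up",
    ]
```

Calling `pod_wiring_commands("pod-a", "veth-a", "veth-a-pod", "10.244.1.2/24", "cni0")` returns the seven commands in the order described above.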

So what happens when Pod-A wants to send a message to Pod-B?

Since the destination isn't one of the containers in its own namespace, Pod-A sends the packet out through its default interface, eth0.

This interface is tied to the veth pair and packets are forwarded to the root namespace.
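The decision Pod-A makes here can be sketched in a few lines of Python. This is a simplification that assumes the pod has only a loopback interface and `eth0`; the function name `pick_interface` and the addresses in the usage note are hypothetical.

```python
import ipaddress


def pick_interface(pod_ip: str, destination: str) -> str:
    """Return the interface a pod would use to reach `destination`."""
    dst = ipaddress.ip_address(destination)
    # Traffic to localhost or to the pod's own IP never leaves the namespace.
    if dst.is_loopback or dst == ipaddress.ip_address(pod_ip):
        return "lo"
    # Everything else leaves through the default interface, which is
    # one end of the veth pair.
    return "eth0"
```

With a pod IP of `10.244.1.2`, a destination of `127.0.0.1` stays on `lo`, while `10.244.1.3` goes out through `eth0`.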

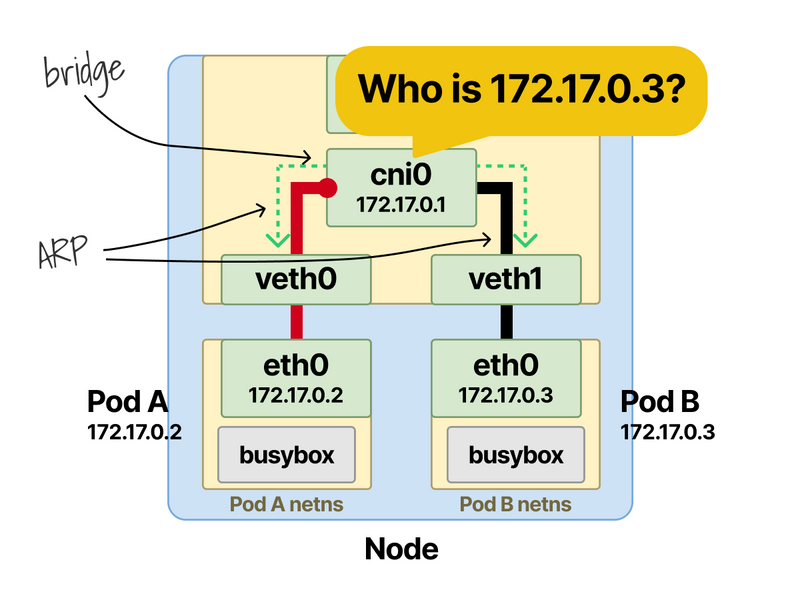

The ethernet bridge, acting as a virtual switch, has to somehow resolve the destination pod IP (Pod-B) to its MAC address.

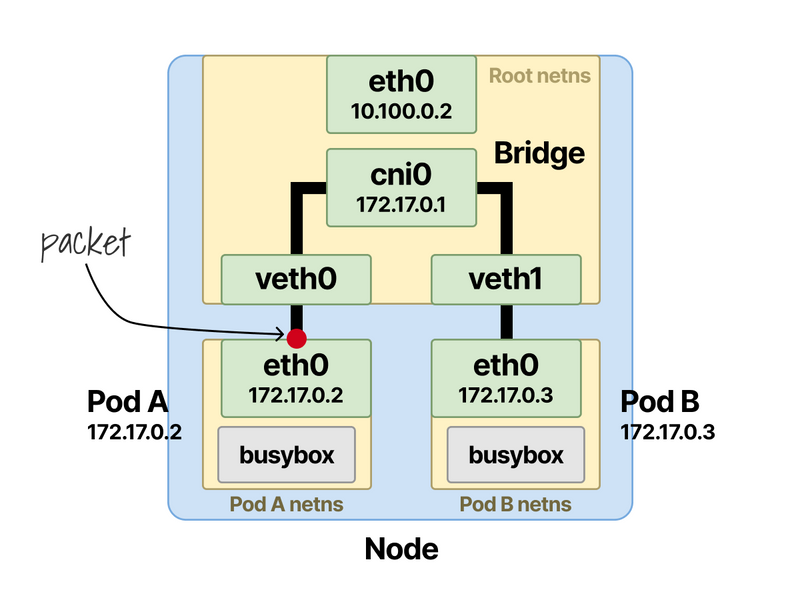

The ARP protocol comes to the rescue.

When the frame reaches the bridge, an ARP broadcast is sent to all connected devices.

The bridge shouts "Who has Pod-B IP address?"

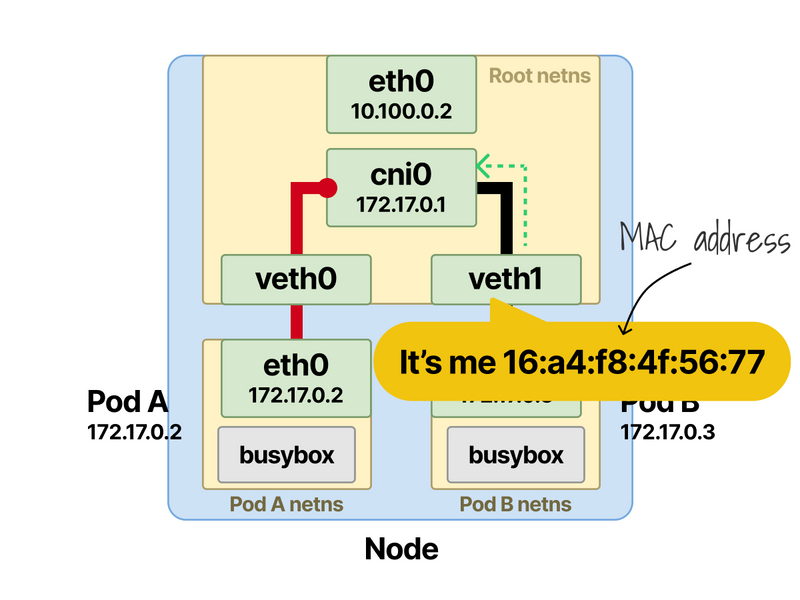

A reply is received with the interface's MAC address that connects Pod-B, which is stored in the bridge ARP cache (lookup table).

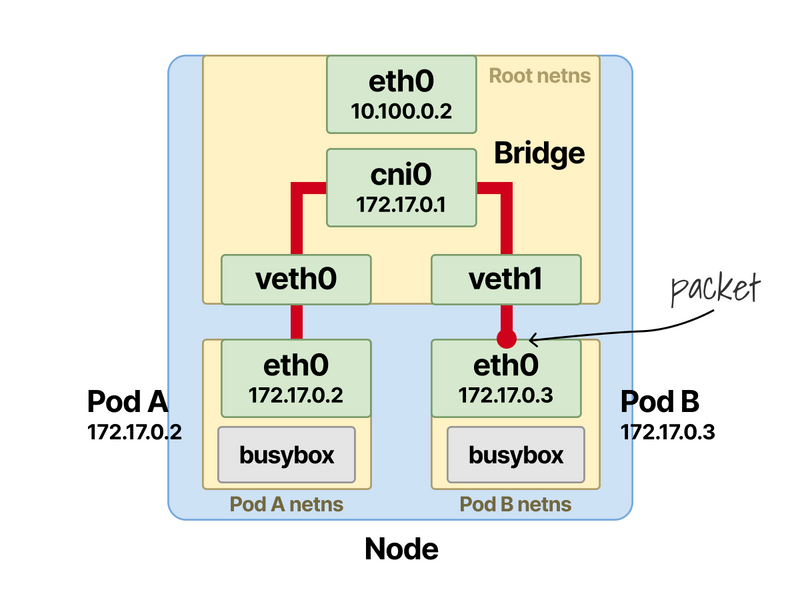

Once the IP and MAC address mapping is stored, the bridge looks up in the table and forwards the packet to the correct endpoint.

The packet reaches Pod-B's veth in the root namespace and, from there, crosses the veth pair to the eth0 interface inside Pod-B's namespace.

With this, the communication between Pod-A and Pod-B has been successful.
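The whole exchange can be modelled with a toy bridge in Python. This is a sketch, not how the kernel is implemented: the `Pod` and `Bridge` classes, port names, IPs and MAC addresses are all invented for illustration. It assumes the usual split where the sender caches the IP-to-MAC mapping and the bridge learns which port each MAC address is behind.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Pod:
    name: str
    ip: str
    mac: str
    # IP -> MAC mappings this pod has resolved via ARP
    arp_cache: Dict[str, str] = field(default_factory=dict)


class Bridge:
    """Toy learning bridge: floods ARP requests and learns MAC -> port."""

    def __init__(self) -> None:
        self.ports: Dict[str, Pod] = {}      # port (root-side veth) -> attached pod
        self.mac_table: Dict[str, str] = {}  # learned MAC -> port

    def attach(self, port: str, pod: Pod) -> None:
        self.ports[port] = pod

    def arp_request(self, in_port: str, target_ip: str) -> Optional[str]:
        sender = self.ports[in_port]
        # The bridge learns where the sender lives from the incoming frame.
        self.mac_table[sender.mac] = in_port
        # The broadcast is flooded to every other port: "Who has target_ip?"
        for port, pod in self.ports.items():
            if port != in_port and pod.ip == target_ip:
                # The owner replies; the bridge learns its port from the reply
                # and the sender caches the IP -> MAC mapping.
                self.mac_table[pod.mac] = port
                sender.arp_cache[target_ip] = pod.mac
                return pod.mac
        return None  # nobody on this bridge owns that IP

    def forward(self, dst_mac: str) -> Optional[str]:
        # Known MAC: return the single port to send the frame out of.
        return self.mac_table.get(dst_mac)
```

After attaching Pod-A on `veth-a` and Pod-B on `veth-b`, `bridge.arp_request("veth-a", pod_b.ip)` returns Pod-B's MAC address, and `bridge.forward(...)` with that MAC returns `"veth-b"`.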

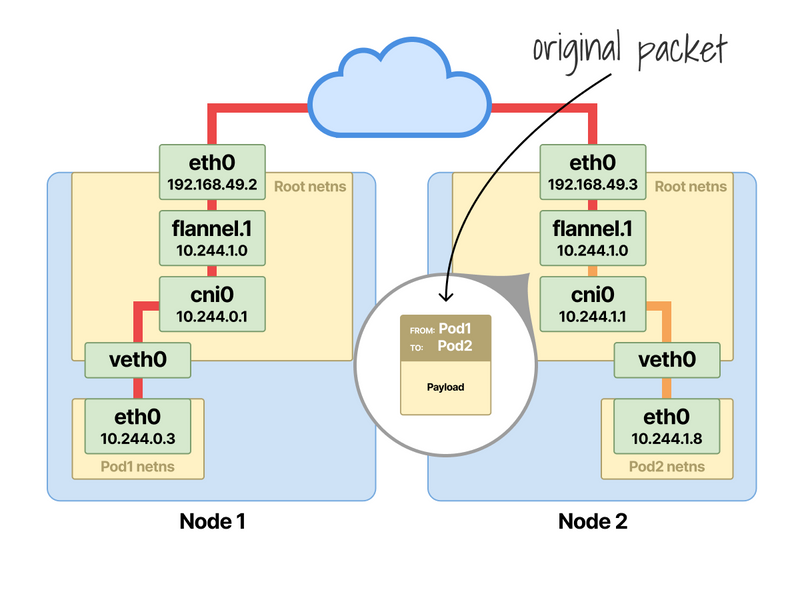

An additional hop is required for pods to communicate across different nodes, as the packets have to travel through the node network to reach their destination.

This is the "plain" networking version.
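To compare the two paths, here is a small Python sketch that lists the hops a packet takes. It assumes each node owns one pod subnet; the node names and CIDRs in the usage note are hypothetical, and the hop labels are descriptive strings rather than real interface names.

```python
import ipaddress
from typing import Dict, List


def trace_hops(src_node: str, dst_pod_ip: str, pod_cidrs: Dict[str, str]) -> List[str]:
    """List the hops from a pod on `src_node` to `dst_pod_ip`.

    `pod_cidrs` maps a node name to the pod subnet assumed to live on it.
    """
    dst = ipaddress.ip_address(dst_pod_ip)
    dst_node = next(
        (node for node, cidr in pod_cidrs.items()
         if dst in ipaddress.ip_network(cidr)),
        None,
    )
    if dst_node is None:
        raise LookupError(f"no node owns {dst_pod_ip}")

    hops = ["pod eth0", "veth pair", f"bridge on {src_node}"]
    if dst_node != src_node:
        # The extra leg: out of the source node, across the node network,
        # and into the destination node's bridge.
        hops += [
            f"eth0 on {src_node}",
            "node network",
            f"eth0 on {dst_node}",
            f"bridge on {dst_node}",
        ]
    hops += ["veth pair", "pod eth0"]
    return hops
```

With `{"node-1": "10.244.1.0/24", "node-2": "10.244.2.0/24"}`, a packet from node-1 to `10.244.1.3` stays on the local bridge, while one to `10.244.2.3` also crosses both nodes' `eth0` and the node network.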

How does this change when you install a CNI plugin that uses an overlay network?

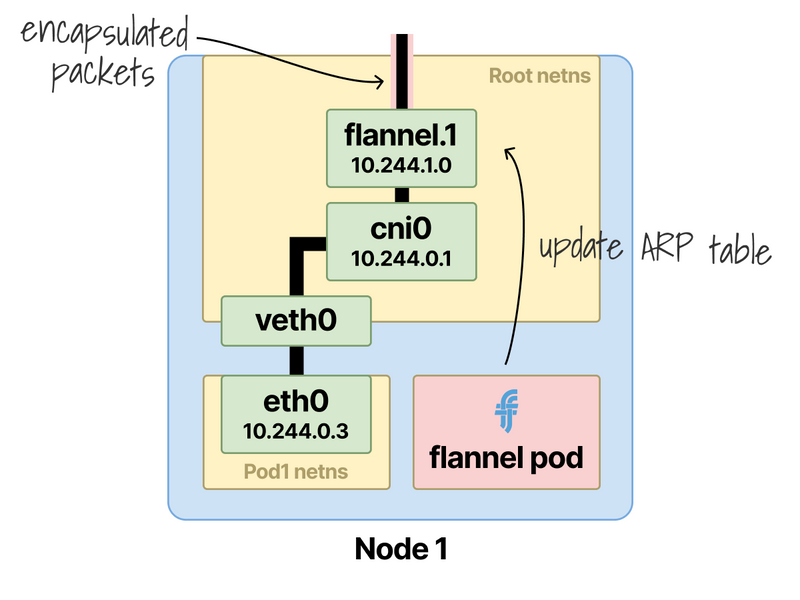

Let's take Flannel as an example.

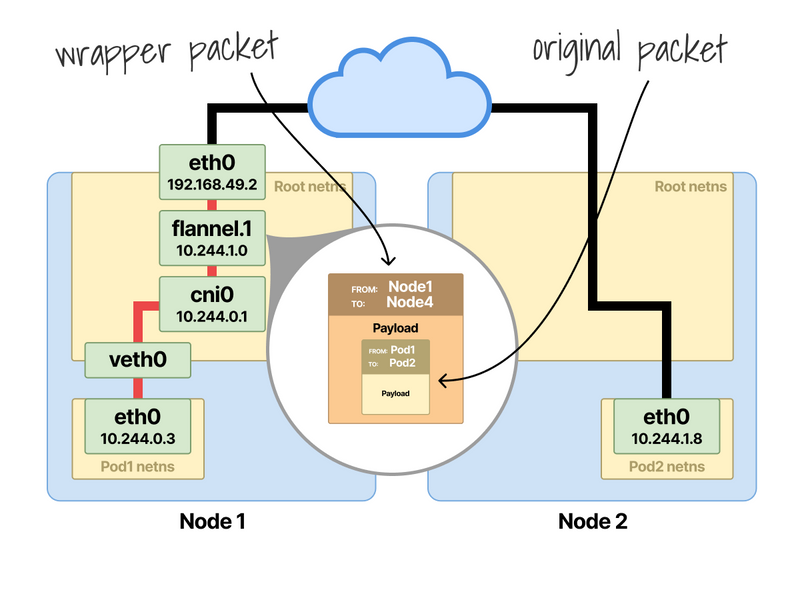

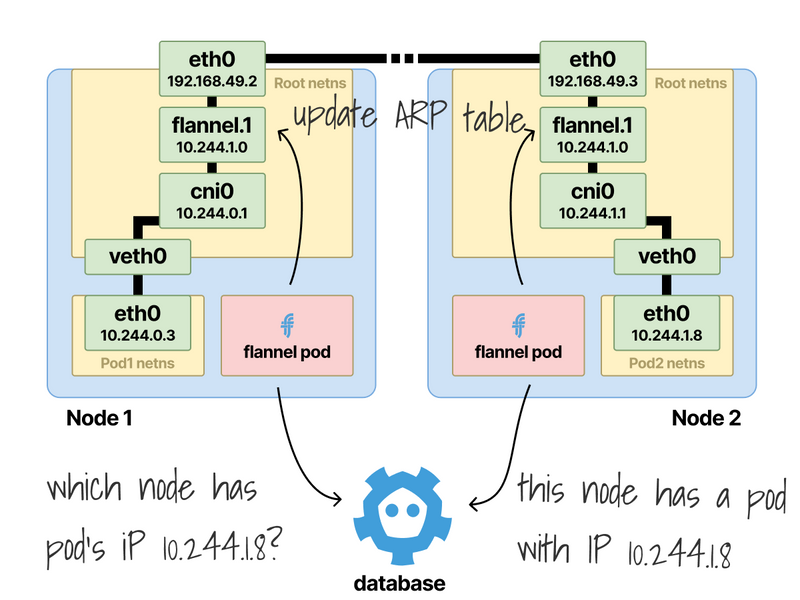

Flannel installs a new interface, flannel.1, between the node's eth0 and the container bridge cni0.

All traffic flowing through this interface is encapsulated (e.g., with VXLAN or WireGuard).

The new packets carry the nodes' IP addresses as source and destination, not the pods'.

So the wrapper packet will exit from the node and travel to the destination node.

Once on the other side, the flannel.1 interface unwraps the packet and lets the original pod-to-pod packet reach its destination.
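The wrap and unwrap steps can be sketched as follows. This is a deliberately simplified model: a real VXLAN frame also carries UDP and VXLAN headers, which are left out here, and the `Packet` class and all addresses in the usage note are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    payload: object  # application data, or another Packet when encapsulated


def encapsulate(inner: Packet, src_node_ip: str, dst_node_ip: str) -> Packet:
    """Wrap a pod-to-pod packet in an outer packet addressed node to node."""
    return Packet(src=src_node_ip, dst=dst_node_ip, payload=inner)


def decapsulate(outer: Packet) -> Packet:
    """Unwrap on the destination node and recover the original packet."""
    if not isinstance(outer.payload, Packet):
        raise ValueError("packet is not encapsulated")
    return outer.payload
```

For a pod packet from `10.244.1.2` to `10.244.2.3`, `encapsulate(inner, "192.168.0.11", "192.168.0.12")` produces an outer packet that only shows the node IPs, and `decapsulate` on the other side returns the untouched inner packet.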

How does Flannel know where all the Pods are located and their IP addresses?

On each node, the Flannel daemon syncs its IP address allocations to a distributed database.

Other instances can query this database to decide where to send those packets.
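As a rough illustration of that lookup, the sketch below uses an in-memory dictionary as a stand-in for the distributed database. The `SubnetRegistry` class, its methods and the addresses are hypothetical and are not Flannel's actual API or data format.

```python
import ipaddress
from typing import Dict, Optional


class SubnetRegistry:
    """In-memory stand-in for the shared database of subnet allocations."""

    def __init__(self) -> None:
        self._leases: Dict[str, str] = {}  # pod subnet (CIDR) -> node IP

    def register(self, pod_subnet: str, node_ip: str) -> None:
        # Each node's daemon publishes the pod subnet it owns.
        ipaddress.ip_network(pod_subnet)  # raises ValueError on a malformed CIDR
        self._leases[pod_subnet] = node_ip

    def node_for(self, pod_ip: str) -> Optional[str]:
        # Other nodes ask: which node should receive traffic for this pod IP?
        addr = ipaddress.ip_address(pod_ip)
        for subnet, node_ip in self._leases.items():
            if addr in ipaddress.ip_network(subnet):
                return node_ip
        return None  # no node has claimed a subnet containing this IP
```

After `registry.register("10.244.2.0/24", "192.168.0.12")`, a call to `registry.node_for("10.244.2.3")` returns `"192.168.0.12"`, which is the address the wrapper packet would be sent to.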

Here are a few links if you want to learn more:

- https://learnk8s.io/kubernetes-network-packets

- https://zhuanlan.zhihu.com/p/340747753

- https://blog.laputa.io/kubernetes-flannel-networking-6a1cb1f8ec7c

- https://www.sobyte.net/post/2022-07/k8s-flannel/

And finally, if you've enjoyed this thread, you might also like:

- The Kubernetes workshops that we run at Learnk8s https://learnk8s.io/training

- This collection of past threads https://twitter.com/danielepolencic/status/1298543151901155330

- The Kubernetes newsletter I publish every week https://learnk8s.io/learn-kubernetes-weekly
