Data engineering is the practice of designing, building, and maintaining systems for collecting, storing, processing, and analyzing data. Data engineers create and manage the pipelines that let organizations handle large volumes of data from many different sources and transform it into formats suited to analysis, reporting, and other data-driven tasks.

One tool that makes these pipelines easier to build and ship is Docker. Let's discuss.

What is Docker?

Docker is a containerization platform. It packages your code, applications, or models together with all of their dependencies into an image, which you can share through a registry such as Docker Hub. A container started from that image should run the same way on any machine with Docker as it did on your computer.

Why Docker?

Run anywhere: Dependency issues no longer stop you from shipping your models. Containers standardize your software environment, which makes it easy to deploy anywhere. Just make sure your application's or model's image includes a complete environment configuration.

Fast deployment: Getting applications or models to run on different platforms is usually tedious. Docker speeds this up because the image packages all of your dependencies, so the same image runs on each platform without reinstalling anything.

Cloud Agnostic: Once your container image is built with your code or models inside it, you can deploy it on any cloud vendor that runs containers. This helps data engineers because they are not tied to a single vendor or host operating system.

Automation and Repeatability: If you have heard of CI/CD (continuous integration and continuous deployment), Docker containers fit naturally into it, because every pipeline run can build and test the same image. Containers are also isolated from each other. If one fails, you can isolate it and fix the problem without affecting the rest, which helps data engineers keep their pipelines up and running.
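The sketch below makes "complete environment configuration" and repeatable builds concrete. It is a minimal example that assumes the `docker` CLI is installed. The base image `python:3.12-slim`, the pinned package versions and the tag `my-pipeline:0.1` are hypothetical placeholders, not recommendations. It renders a Dockerfile that pins every dependency and builds it with `docker build -t TAG -`, which reads the Dockerfile from standard input. The build's exit code is returned so a CI job could fail the pipeline on a non-zero value.

```python
import shutil
import subprocess

# Hypothetical values for illustration only.
BASE_IMAGE = "python:3.12-slim"
PINNED_PACKAGES = ["pandas==2.2.2", "pyarrow==16.1.0"]


def render_dockerfile(base_image: str, packages: list[str]) -> str:
    """Return Dockerfile text that pins the base image and every dependency."""
    lines = [
        f"FROM {base_image}",
        # Exact versions mean every build installs the same packages.
        f"RUN pip install --no-cache-dir {' '.join(packages)}",
        'CMD ["python", "-c", "import pandas; print(pandas.__version__)"]',
    ]
    return "\n".join(lines) + "\n"


def build_image(tag: str, dockerfile: str) -> int:
    """Build an image via `docker build -t TAG -` (Dockerfile read from stdin).

    Returns the exit code so a CI step can fail on a non-zero value.
    """
    if shutil.which("docker") is None:
        raise RuntimeError("docker CLI not found on PATH")
    result = subprocess.run(
        ["docker", "build", "-t", tag, "-"],
        input=dockerfile,
        text=True,
    )
    return result.returncode


if __name__ == "__main__":
    text = render_dockerfile(BASE_IMAGE, PINNED_PACKAGES)
    print(text)
    # Uncomment to build when Docker is installed and running:
    # raise SystemExit(build_image("my-pipeline:0.1", text))
```

Because nothing in the Dockerfile is left to "latest", rebuilding it later should produce the same environment. That is the property CI/CD relies on.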

Setting Up Docker

Step 1: Go to the official Docker website.

Step 2: Follow the installation steps for your operating system. Docker releases new versions regularly, so whenever you read this, check the current version and confirm that it supports your operating system.

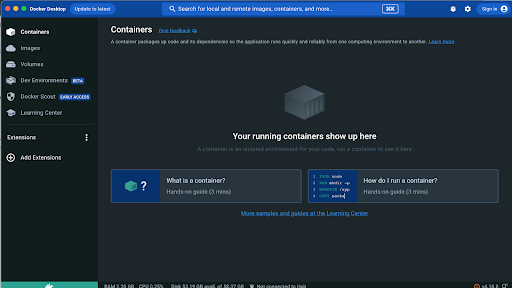
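After installing, you can confirm that the CLI is available. This is a minimal sketch using only the Python standard library. It assumes the executable is named `docker` and is on your PATH. `hello_world_works` is a hypothetical helper wrapping `docker run --rm hello-world`, Docker's test image, which also needs the daemon running and network access to pull the image.

```python
import shutil
import subprocess
from typing import Optional


def docker_version() -> Optional[str]:
    """Return the output of `docker --version`, or None if Docker is not installed."""
    if shutil.which("docker") is None:
        return None
    result = subprocess.run(["docker", "--version"], capture_output=True, text=True)
    return result.stdout.strip() if result.returncode == 0 else None


def hello_world_works() -> bool:
    """Run the `hello-world` image; True means the daemon can pull and run containers."""
    result = subprocess.run(
        ["docker", "run", "--rm", "hello-world"], capture_output=True, text=True
    )
    return result.returncode == 0


if __name__ == "__main__":
    version = docker_version()
    print(version or "Docker not found; revisit the installation steps.")
```

The version check only exercises the client. Once it passes, calling `hello_world_works()` confirms that the Docker daemon itself is running.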


Basic Docker commands

You will work with Docker mostly from the terminal, so it is worth learning the basic commands early. It is almost impossible to use Docker without them.

Here are some of the basic commands you will use regularly:

docker pull - Pull an image from Docker Hub (or another registry)

docker build - Build an image from a Dockerfile

docker container - Manage containers

docker events - Get real-time events from the Docker server

docker image - Manage images

docker images - List images

docker kill - Kill one or more running containers

docker logout - Log out of Docker Hub

docker login - Log in to Docker Hub

docker logs - Fetch the logs of a container

docker port - List the port mappings of a container

docker run - Create and start a new container from an image

docker rm - Remove one or more containers

docker rmi - Remove one or more images

docker tag - Create a tag (a new name) that refers to a source image

docker stop - Stop one or more running containers

These commands are just the tip of the iceberg :) You will pick up many others as you keep working with Docker, and running docker --help lists them all.

Conclusion

Docker is critical in data engineering because it packages and isolates complex data pipelines and applications, keeping environments consistent across development, testing, and production. You no longer have to worry about an application that works on your machine but fails on others. Its support for scalability, efficient resource use, and versioned images makes it simple to manage diverse data processing tools and their dependencies. It also makes collaboration between teams easier, speeding up development cycles and optimizing resource allocation. Together, these make Docker an essential tool for data engineering workflows.
