Installing Karpenter on AWS EKS cluster with Terraform Karpenter module and configuring its Provisioner and AWSNodeTemplate

This is the third part of deploying an AWS Elastic Kubernetes Service cluster with Terraform, in which we will add Karpenter to our cluster. I’ve decided to post this separately because it’s quite a long post. In the next and (hopefully!) final fourth part, we will add the rest: all kinds of controllers.

All parts:

- Terraform: building EKS, part 1 — VPC, Subnets and Endpoints

- Terraform: building EKS, part 2 — an EKS cluster, WorkerNodes, and IAM

- Terraform: building EKS, part 3 — Karpenter installation (this)

- Terraform: building EKS, part 4 — installing controllers

Planning

What we have to do now:

- install Karpenter

- install EKS EBS CSI Addon

- install ExternalDNS

- install AWS Load Balancer Controller

- install SecretStore CSI Driver and ASCP

- install Metrics Server

- install Vertical Pod Autoscaler and Horizontal Pod Autoscaler

- install Subscription Filter to the EKS Cloudwatch Log Group to collect logs in Grafana Loki (see Loki: collecting logs from CloudWatch Logs using Lambda Promtail)

- create a StorageClass with the ReclaimPolicy=Retain for PVCs whose EBS disks should be retained when deleting a Deployment/StatefulSet

Files structure now looks like this:

$ tree .
.
├── backend.tf
├── configs
├── eks.tf
├── main.tf
├── outputs.tf
├── providers.tf
├── terraform.tfvars
├── variables.tf
└── vpc.tf

Terraform providers

In addition to the list of providers that we already have, the AWS and Kubernetes, we have to add two more — Helm and Kubectl.

Helm, obviously, for installing Helm charts, as we will install controllers from Helm charts, and kubectl for deploying resources from our own Kubernetes manifests.

So, add them to our providers.tf file. Authorization is done in the same way as we did for the Kubernetes provider – through the AWS CLI and a profile name passed to the args:

terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.14.0"
    }
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = "~> 2.23.0"
    }
    helm = {
      source  = "hashicorp/helm"
      version = "~> 2.11.0"
    }
    kubectl = {
      source  = "gavinbunney/kubectl"
      version = "~> 1.14.0"
    }
  }
}

...

provider "helm" {
  kubernetes {
    host                   = module.eks.cluster_endpoint
    cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)
    exec {
      api_version = "client.authentication.k8s.io/v1beta1"
      command     = "aws"
      args        = ["--profile", "tf-admin", "eks", "get-token", "--cluster-name", module.eks.cluster_name]
    }
  }
}

provider "kubectl" {
  apply_retry_count      = 5
  host                   = module.eks.cluster_endpoint
  cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)
  load_config_file       = false
  exec {
    api_version = "client.authentication.k8s.io/v1beta1"
    command     = "aws"
    args        = ["--profile", "tf-admin", "eks", "get-token", "--cluster-name", module.eks.cluster_name]
  }
}

Run terraform init to install the providers.

Ways to install Karpenter in AWS EKS with Terraform

There are several options for installing Karpenter with Terraform:

- write everything by yourself: IAM roles, SQS for interruption-handling, updates for the aws-auth ConfigMap, install a Helm-chart with Karpenter, create Provisioner and AWSNodeTemplate

- use ready-made modules:

  - from Anton Babenko: the karpenter submodule of the EKS module

  - the karpenter module from the Amazon EKS Blueprints for Terraform project

I decided to try Anton’s module: since the cluster is created with his EKS module, it makes sense to use it for Karpenter as well.

An example of its creation, the documentation, and more examples can be found in the terraform-aws-eks module repository.

For the Node IAM Role, we will use an existing one because we already have the eks_managed_node_groups, which is created in the EKS module:

...
  eks_managed_node_groups = {
    default = {
      # number, e.g. 2
      min_size = var.eks_managed_node_group_params.default_group.min_size
...

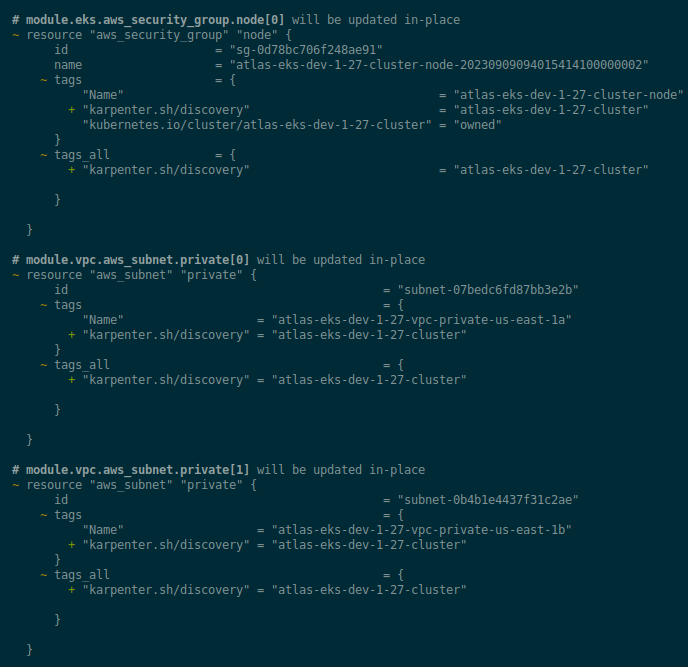

AWS SecurityGroup and Subnet Tags

To work, Karpenter uses the karpenter.sh/discovery tag from the SecurityGroup of our WorkerNodes and Private VPC Subnets to know which SecurityGroups to add to Nodes and in which subnets to run these nodes.

The tag value specifies the name of the cluster to which the SecurityGroup or Subnet belongs.

Add a new local variable eks_cluster_name to the main.tf:

locals {
  # create a name like 'atlas-eks-dev-1-27'
  env_name         = "${var.project_name}-${var.environment}-${replace(var.eks_version, ".", "-")}"
  eks_cluster_name = "${local.env_name}-cluster"
}

And in the eks.tf file, add the node_security_group_tags parameter:

module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 19.0"

  ...

  node_security_group_tags = {
    "karpenter.sh/discovery" = local.eks_cluster_name
  }

  ...
}

In the vpc.tf file, add a tag to the private subnets:

module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 5.1.1"

  ...

  private_subnet_tags = {
    "karpenter.sh/discovery" = local.eks_cluster_name
  }

  ...
}

Check the changes with terraform plan and deploy them with terraform apply.
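Once the tags are deployed, one way to confirm that they are discoverable is a pair of data sources that select resources by the same karpenter.sh/discovery tag. This is only a sketch under assumptions: the data source names and the output names below are hypothetical and are not part of the original setup, and the lookup only returns something useful after the tags have actually been applied.

```hcl
# Hypothetical check: find the private subnets and the security groups
# by the tag that Karpenter will later use for discovery.
data "aws_subnets" "karpenter_discovery" {
  filter {
    name   = "tag:karpenter.sh/discovery"
    values = [local.eks_cluster_name]
  }
}

data "aws_security_groups" "karpenter_discovery" {
  tags = {
    "karpenter.sh/discovery" = local.eks_cluster_name
  }
}

# Temporary outputs, only to inspect what was found.
output "karpenter_discovery_subnet_ids" {
  value = data.aws_subnets.karpenter_discovery.ids
}

output "karpenter_discovery_security_group_ids" {
  value = data.aws_security_groups.karpenter_discovery.ids
}
```

If the lists contain the private subnets and the WorkerNodes SecurityGroup, Karpenter should be able to find them too; the outputs can be removed afterwards.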

The Karpenter module

Let’s move on to the Karpenter module itself.

Let’s put it in a separate file karpenter.tf.

In the iam_role_arn, pass the role from our existing eks_managed_node_groups named “default“, because it has already been created and added to the aws-auth ConfigMap.

I also added the irsa_use_name_prefix here, because I was getting an error that the name for the IAM role was too long:

module "karpenter" {
  source = "terraform-aws-modules/eks/aws//modules/karpenter"

  cluster_name = module.eks.cluster_name

  irsa_oidc_provider_arn          = module.eks.oidc_provider_arn
  irsa_namespace_service_accounts = ["karpenter:karpenter"]

  create_iam_role = false
  iam_role_arn    = module.eks.eks_managed_node_groups["default"].iam_role_arn

  irsa_use_name_prefix = false
}

Under the hood, the module will create the necessary IAM resources, plus an AWS SQS Queue and EventBridge Rules that send EC2-related notifications to this queue. See Node Termination Event Rules and the Amazon documentation, Monitoring AWS Health events with Amazon EventBridge.

Let’s add some outputs:

...

output "karpenter_irsa_arn" {
  value = module.karpenter.irsa_arn
}

output "karpenter_aws_node_instance_profile_name" {
  value = module.karpenter.instance_profile_name
}

output "karpenter_sqs_queue_name" {
  value = module.karpenter.queue_name
}

And then we can move on to Helm to add a chart with Karpenter itself.

In variables.tf, we can set the version of the chart:

...

variable "karpenter_chart_version" {
  description = "Karpenter Helm chart version to be installed"
  type        = string
}

And in the terraform.tfvars add a value with the latest chart release:

...

karpenter_chart_version = "v0.30.0"

For Helm to access the oci://public.ecr.aws repository, we need a token, which we can get from the aws_ecrpublic_authorization_token Terraform data source. There is a nuance: it only works with the us-east-1 region, because ECR Public tokens are issued there. See the “aws_ecrpublic_authorization_token breaks if region != us-east-1” issue.
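Our cluster runs in us-east-1, so the default AWS provider is enough here. For a cluster in another region, a common workaround is a second, aliased AWS provider pinned to us-east-1 and used only for the token. A minimal sketch, assuming the same tf-admin profile; the virginia alias is a hypothetical name, and this data block would be used instead of the plain one, not in addition to it:

```hcl
# Assumption: the main "aws" provider points to some other region,
# so a second provider is pinned to us-east-1 just for ECR Public.
provider "aws" {
  alias   = "virginia" # hypothetical alias name
  region  = "us-east-1"
  profile = "tf-admin"
}

# The token is requested through the aliased provider.
data "aws_ecrpublic_authorization_token" "token" {
  provider = aws.virginia
}
```

The helm_release resource does not need to change in this case, as it only reads the user_name and password attributes of the data source.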

The “cannot unmarshal bool into Go struct field ObjectMeta.metadata.annotations of type string” error

Also, since we are using a VPC Endpoint for the AWS STS, we need to add the eks.amazonaws.com/sts-regional-endpoints=true annotation to the ServiceAccount that will be created for Karpenter.

However, if you add the annotation to the set as:

set {
  name  = "serviceAccount.annotations.eks\\.amazonaws\\.com/sts-regional-endpoints"
  value = "true"
}

Then Terraform responds with the “cannot unmarshal bool into Go struct field ObjectMeta.metadata.annotations of type string” error.

The solution was found in a comment on a GitHub Issue: “just add water type=string”.

The error text says that Terraform cannot “unmarshal bool” (“unpack a value with a bool type”) into the metadata.annotations field, which is of the string type: without an explicit type, the "true" value is passed as a boolean, while Kubernetes annotation values must be strings.

The solution is to specify the value type explicitly using type = "string".

In karpenter.tf, add the aws_ecrpublic_authorization_token data source and a helm_release resource, which will install Karpenter’s Helm chart:

...

data "aws_ecrpublic_authorization_token" "token" {}

resource "helm_release" "karpenter" {
  namespace        = "karpenter"
  create_namespace = true

  name                = "karpenter"
  repository          = "oci://public.ecr.aws/karpenter"
  repository_username = data.aws_ecrpublic_authorization_token.token.user_name
  repository_password = data.aws_ecrpublic_authorization_token.token.password
  chart               = "karpenter"
  version             = var.karpenter_chart_version

  set {
    name  = "settings.aws.clusterName"
    value = local.eks_cluster_name
  }

  set {
    name  = "settings.aws.clusterEndpoint"
    value = module.eks.cluster_endpoint
  }

  set {
    name  = "serviceAccount.annotations.eks\\.amazonaws\\.com/role-arn"
    value = module.karpenter.irsa_arn
  }

  set {
    name  = "serviceAccount.annotations.eks\\.amazonaws\\.com/sts-regional-endpoints"
    value = "true"
    type  = "string"
  }

  set {
    name  = "settings.aws.defaultInstanceProfile"
    value = module.karpenter.instance_profile_name
  }

  set {
    name  = "settings.aws.interruptionQueueName"
    value = module.karpenter.queue_name
  }
}

Karpenter Provisioner and Terraform templatefile()

For now, we’ll have only one Karpenter Provisioner, but we’ll probably add more later, so let’s do it right away through templates.

On the old cluster, our default Provisioner manifest looks like this:

apiVersion: karpenter.sh/v1alpha5
kind: Provisioner
metadata:
  name: default
spec:
  requirements:
    - key: karpenter.k8s.aws/instance-family
      operator: In
      values: [t3]
    - key: karpenter.k8s.aws/instance-size
      operator: In
      values: [small, medium, large]
    - key: topology.kubernetes.io/zone
      operator: In
      values: [us-east-1a, us-east-1b]
  providerRef:
    name: default
  consolidation:
    enabled: true
  ttlSecondsUntilExpired: 2592000

First, add a variable to the variables.tf that will hold the parameters for the Provisioner:

...

variable "karpenter_provisioner" {
  # a map keyed by the Provisioner name, so that for_each can iterate over it
  type = map(object({
    instance-family = list(string)
    instance-size   = list(string)
    topology        = list(string)
    labels          = optional(map(string))
    taints = optional(object({
      key    = string
      value  = string
      effect = string
    }))
  }))
}

And values to the tfvars:

...

karpenter_provisioner = {
  # the key is used as the Provisioner name
  default = {
    instance-family = ["t3"]
    instance-size   = ["small", "medium", "large"]
    topology        = ["us-east-1a", "us-east-1b"]
    labels = {
      created-by = "karpenter"
    }
  }
}

Create the template file itself — first, add a local directory configs where all the templates will be, and add the karpenter-provisioner.yaml.tmpl file in it.

For the parameters where we will pass elements of the list type, add the jsonencode() to turn them into strings:

apiVersion: karpenter.sh/v1alpha5
kind: Provisioner
metadata:
  name: ${name}
spec:
%{ if taints != null ~}
  taints:
    - key: ${taints.key}
      value: ${taints.value}
      effect: ${taints.effect}
%{ endif ~}
%{ if labels != null ~}
  labels:
%{ for k, v in labels ~}
    ${k}: ${v}
%{ endfor ~}
%{ endif ~}
  requirements:
    - key: karpenter.k8s.aws/instance-family
      operator: In
      values: ${jsonencode(instance-family)}
    - key: karpenter.k8s.aws/instance-size
      operator: In
      values: ${jsonencode(instance-size)}
    - key: topology.kubernetes.io/zone
      operator: In
      values: ${jsonencode(topology)}
  providerRef:
    name: default
  consolidation:
    enabled: true
  kubeletConfiguration:
    maxPods: 100
  ttlSecondsUntilExpired: 2592000
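Indentation is easy to break in a YAML template, so it can help to look at what templatefile() renders before the manifest reaches the cluster. A minimal sketch of a temporary output for this, assuming that karpenter_provisioner is a map of objects keyed by the Provisioner name; the output name is hypothetical:

```hcl
# Hypothetical debugging aid: render every Provisioner manifest to a string
# so that the resulting YAML can be inspected in the plan or in the outputs.
output "karpenter_provisioner_rendered" {
  value = {
    for name, params in var.karpenter_provisioner :
    name => templatefile("${path.module}/configs/karpenter-provisioner.yaml.tmpl", {
      name            = name
      instance-family = params.instance-family
      instance-size   = params.instance-size
      topology        = params.topology
      taints          = params.taints
      labels          = params.labels
    })
  }
}
```

The rendered text should have the labels and taints nested under spec; once it looks right, the output can be dropped.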

Next, in the karpenter.tf, add the kubectl_manifest resource with the for_each loop, in which we iterate over all the elements of the karpenter_provisioner map:

resource "kubectl_manifest" "karpenter_provisioner" {
  for_each = var.karpenter_provisioner

  yaml_body = templatefile("${path.module}/configs/karpenter-provisioner.yaml.tmpl", {
    name            = each.key
    instance-family = each.value.instance-family
    instance-size   = each.value.instance-size
    topology        = each.value.topology
    taints          = each.value.taints
    labels = merge(
      each.value.labels,
      {
        component   = var.component
        environment = var.environment
      }
    )
  })

  depends_on = [
    helm_release.karpenter
  ]
}

We specify the depends_on here, because if all this is deployed for the first time, Terraform reports the error “resource [karpenter.sh/v1alpha5/Provisioner] is not valid for cluster, check the APIVersion and Kind fields are valid” because Karpenter itself is not yet in the cluster.
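Since for_each walks over a map, one more Provisioner should only need one more entry in terraform.tfvars. A sketch, assuming the variable is declared as a map of objects keyed by the Provisioner name; the monitoring entry and its taint are hypothetical and serve only as an illustration:

```hcl
# terraform.tfvars (sketch): the "monitoring" entry is a made-up example
karpenter_provisioner = {
  default = {
    instance-family = ["t3"]
    instance-size   = ["small", "medium", "large"]
    topology        = ["us-east-1a", "us-east-1b"]
    labels = {
      created-by = "karpenter"
    }
  }
  monitoring = {
    instance-family = ["t3"]
    instance-size   = ["medium", "large"]
    topology        = ["us-east-1a", "us-east-1b"]
    labels = {
      created-by = "karpenter"
    }
    # rendered into spec.taints by the template
    taints = {
      key    = "dedicated"
      value  = "monitoring"
      effect = "NoSchedule"
    }
  }
}
```

Each key becomes a separate kubectl_manifest instance, so removing an entry later should delete only that Provisioner. Note that the template hardcodes providerRef, so both Provisioners would use the same default AWSNodeTemplate.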

The AWSNodeTemplate is unlikely to change for now, so we can create it with just another kubectl_manifest:

...

resource "kubectl_manifest" "karpenter_node_template" {
  yaml_body = <<-YAML
    apiVersion: karpenter.k8s.aws/v1alpha1
    kind: AWSNodeTemplate
    metadata:
      name: default
    spec:
      subnetSelector:
        karpenter.sh/discovery: ${local.eks_cluster_name}
      securityGroupSelector:
        karpenter.sh/discovery: ${local.eks_cluster_name}
      tags:
        Name: ${local.eks_cluster_name}-node
        environment: ${var.environment}
        created-by: "karpenter"
        karpenter.sh/discovery: ${local.eks_cluster_name}
  YAML

  depends_on = [
    helm_release.karpenter
  ]
}

Run terraform init, deploy, and check the Karpenter Pods:

$ kk -n karpenter get pod
NAME                         READY   STATUS    RESTARTS   AGE
karpenter-556f8d8f6b-f48v7   1/1     Running   0          26s
karpenter-556f8d8f6b-w2pl9   1/1     Running   0          26s

But wait — why did they start up if we have set the taints on our WorkerNodes?

$ kubectl get nodes -o json | jq '.items[].spec.taints'
[
  {
    "effect": "NoSchedule",
    "key": "CriticalAddonsOnly",
    "value": "true"
  },
  {
    "effect": "NoExecute",
    "key": "CriticalAddonsOnly",
    "value": "true"
  }
]
...

Because Karpenter’s Deployment has the appropriate tolerations by default:

$ kk -n karpenter get deploy -o yaml | yq '.items[].spec.template.spec.tolerations'
[
  {
    "key": "CriticalAddonsOnly",
    "operator": "Exists"
  }
]

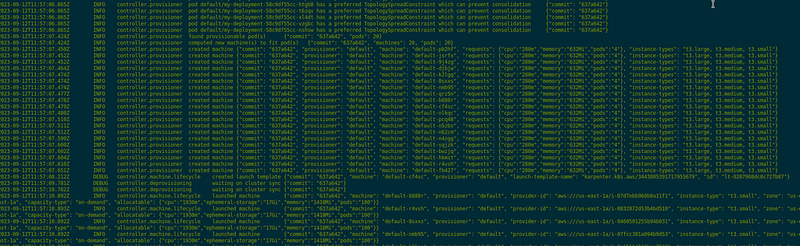

Testing Karpenter

It’s worth checking whether WorkerNodes scaling and de-scaling are working.

Create a Deployment with 20 Pods, request 512 MiB of memory for each Pod, and use topologySpreadConstraints to ask the scheduler to spread them across different Nodes:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-deployment
spec:
  replicas: 20
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app
    spec:
      containers:
        - name: my-container
          image: nginxdemos/hello
          imagePullPolicy: Always
          resources:
            requests:
              memory: "512Mi"
              cpu: "100m"
            limits:
              memory: "512Mi"
              cpu: "100m"
      topologySpreadConstraints:
        - maxSkew: 1
          topologyKey: kubernetes.io/hostname
          whenUnsatisfiable: ScheduleAnyway
          labelSelector:
            matchLabels:
              app: my-app

Deploy it and check the Karpenter logs with kubectl -n karpenter logs -f -l app.kubernetes.io/instance=karpenter.

And we have new WorkerNodes:

$ kk get node
NAME                          STATUS     ROLES    AGE    VERSION
ip-10-1-47-71.ec2.internal    Ready      <none>   2d1h   v1.27.4-eks-8ccc7ba
ip-10-1-48-216.ec2.internal   NotReady   <none>   31s    v1.27.4-eks-8ccc7ba
ip-10-1-48-93.ec2.internal    Unknown    <none>   31s
ip-10-1-49-32.ec2.internal    NotReady   <none>   31s    v1.27.4-eks-8ccc7ba
ip-10-1-50-224.ec2.internal   NotReady   <none>   31s    v1.27.4-eks-8ccc7ba
ip-10-1-51-194.ec2.internal   NotReady   <none>   31s    v1.27.4-eks-8ccc7ba
ip-10-1-52-188.ec2.internal   NotReady   <none>   31s    v1.27.4-eks-8ccc7ba
...

Don’t forget to delete the test Deployment.

The “UnauthorizedOperation: You are not authorized to perform this operation” error

During this setup, I’ve encountered the following error in the Karpenter logs:

2023-09-12T11:17:10.870Z ERROR controller Reconciler error {"commit": "637a642", "controller": "machine.lifecycle", "controllerGroup": "karpenter.sh", "controllerKind": "Machine", "Machine": {"name":"default-h8lrh"}, "namespace": "", "name": "default-h8lrh", "reconcileID": "3e67c553-2cbd-4cf8-87fb-cb675ea3722b", "error": "creating machine, creating instance, with fleet error(s), UnauthorizedOperation: You are not authorized to perform this operation. Encoded authorization failure message: wMYY7lD-rKC1CjJU**qmhcq77M;

...

Read the error itself using aws sts decode-authorization-message:

$ export mess="wMYY7lD-rKC1CjJU**qmhcq77M"

$ aws sts decode-authorization-message --encoded-message $mess | jq

Get the following text:

{

"DecodedMessage": "{\"allowed\":false,\"explicitDeny\":false,\"matchedStatements\":{\"items\":[]},\"failures\":{\"items\":[]},\"context\":{\"principal\":{\"id\":\"ARO ***S4C:1694516210624655866\",\"arn\":\"arn:aws:sts::492*** 148:assumed-role/KarpenterIRSA-atlas-eks-dev-1-27-cluster/1694516210624655866\"},\"action\":\"ec2:RunInstances\",\"resource\":\"arn:aws:ec2:us-east-1:492***148:launch-template/lt-03dbc6b0b60ee0b40\",\"conditions\":{\"items\":[{\"key\":\"492***148:kubernetes.io/cluster/atlas-eks-dev-1-27-cluster\",\"values\":{\"items\":[{\"value\":\"owned\"}]}},{\"key\":\"ec2:ResourceTag/environment\"

...

The arn:aws:sts::492***148:assumed-role/KarpenterIRSA-atlas-eks-dev-1-27-cluster IAM Role could not perform the ec2:RunInstances operation.

Why? Because in the IAM Policy that the Karpenter module creates, it sets a Condition:

...
  },
  {
    "Action": "ec2:RunInstances",
    "Condition": {
      "StringEquals": {
        "ec2:ResourceTag/karpenter.sh/discovery": "atlas-eks-dev-1-27-cluster"
      }
    },
    "Effect": "Allow",
    "Resource": "arn:aws:ec2:*:492***148:launch-template/*"
  },
...

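And in my AWSNodeTemplate, I had removed the "karpenter.sh/discovery: ${local.eks_cluster_name}" tag, so the launch template created by Karpenter did not match this Condition. Returning the tag to the tags of the AWSNodeTemplate, as in the manifest above, fixes the error.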

It can also be useful to look at the errors in CloudTrail — there is more information there.

That’s all for now — we can move on to the controllers.

Originally published at RTFM: Linux, DevOps, and system administration.
