This third part of the series centers on migrating your Terraform state file, which has been stored locally in the project so far, to an AWS S3 bucket so that it can be accessed remotely. This is important if you work in a team, and it also gives you more secure storage for your state file.

The state file is very important: Terraform uses it to manage, configure, and store information about your infrastructure, and without the state Terraform cannot function. We will use server-side encryption to make sure the state file is encrypted, since it can store sensitive information such as passwords. Tampering with this file, which Terraform stores as `terraform.tfstate`, can be a nightmare unless you know exactly what you are doing.
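To see why encryption matters, consider a value marked `sensitive`. Terraform redacts it in `plan` and `apply` output, but the value is still written to `terraform.tfstate` in plain text. A minimal sketch, using a hypothetical `db_password` variable that is not part of this project:

```hcl
# Hypothetical input, for illustration only
variable "db_password" {
  description = "Example database password"
  type        = string
  sensitive   = true # redacted in plan/apply output
}

# Root-module outputs are recorded in the state file. sensitive = true only
# hides the value on screen; it does not keep it out of terraform.tfstate.
output "db_password" {
  value     = var.db_password
  sensitive = true
}
```

After an `apply`, the terminal shows `db_password = <sensitive>`, yet the raw value is stored in the state, which is exactly what the encrypted bucket protects.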

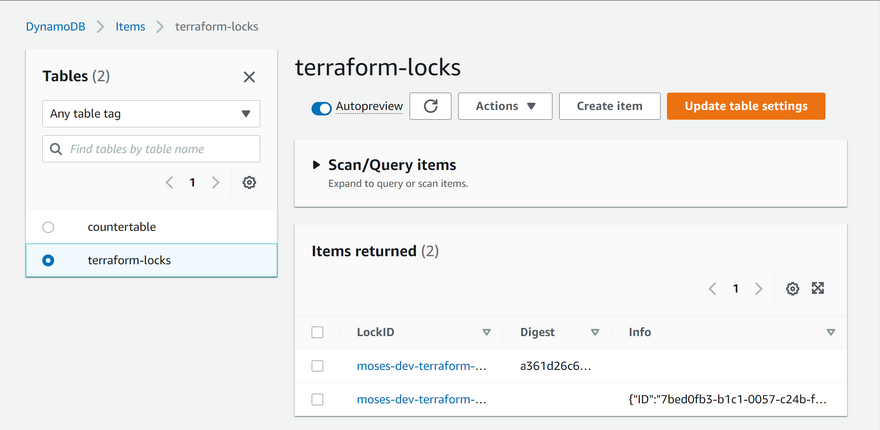

Terraform locks your state for all operations that could write state. This prevents others from acquiring the lock and potentially corrupting your state. In other words, locking ensures that no two Terraform processes try to update the same state at the same time during a deployment. So if you work in a shared dev environment where everyone has access to the code base, no two engineers can run state-modifying Terraform commands at the same time, and nobody can start one while another is already running. We'll implement this using AWS DynamoDB. State locking happens automatically on all operations that could write state, and you won't see any message that it is happening. If state locking fails, Terraform will not continue.

Here is our plan for re-initializing Terraform to use the S3 backend:

- Add the S3 and DynamoDB resource blocks before deleting the local state file
- Update the `terraform` block to introduce the backend and locking
- Re-initialize Terraform
- Delete the local `tfstate` file and check the one in the S3 bucket
- Add outputs
- Run `terraform apply`

Create `backend.tf` and add the following:

```hcl
resource "aws_s3_bucket" "terraform_state" {
  bucket = "moses-dev-terraform-bucket"

  # Enable versioning so we can see the full revision history of our state files
  versioning {
    enabled = true
  }

  # Enable server-side encryption by default
  server_side_encryption_configuration {
    rule {
      apply_server_side_encryption_by_default {
        sse_algorithm = "AES256"
      }
    }
  }
}
```
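In AWS provider v4 and later, the inline `versioning` and `server_side_encryption_configuration` blocks on `aws_s3_bucket` are deprecated in favor of separate resources. If you are on a newer provider (an assumption about your setup), a sketch of the same bucket in that style looks like this; use it instead of the block above, not alongside it, since both declare `aws_s3_bucket.terraform_state`:

```hcl
# Sketch for AWS provider v4+ (assumption: you are on v4 or later)
resource "aws_s3_bucket" "terraform_state" {
  bucket = "moses-dev-terraform-bucket"
}

# Versioning keeps a revision history of every state file written to the bucket
resource "aws_s3_bucket_versioning" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id

  versioning_configuration {
    status = "Enabled"
  }
}

# Encrypt objects at rest with S3-managed keys (SSE-S3, AES256)
resource "aws_s3_bucket_server_side_encryption_configuration" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id

  rule {
    apply_server_side_encryption_by_default {
      sse_algorithm = "AES256"
    }
  }
}
```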

Note: this bucket name may not work for you, because S3 bucket names must be globally unique across all AWS accounts, so you must choose your own unique name. Read more about the S3 bucket naming rules in the AWS documentation.
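One way to get a name that is very likely unique is to append your AWS account ID, looked up with the `aws_caller_identity` data source. This naming scheme and the `state_bucket_name` output are hypothetical, for illustration only. Keep in mind that the `backend "s3"` block only accepts literal values, so you would still copy the final name into it by hand.

```hcl
# Look up the AWS account the provider is authenticated against
data "aws_caller_identity" "current" {}

resource "aws_s3_bucket" "terraform_state" {
  # Hypothetical naming scheme: fixed prefix plus the 12-digit account ID
  bucket = "moses-dev-terraform-bucket-${data.aws_caller_identity.current.account_id}"
}

# Print the final name so it can be pasted into the backend block,
# which cannot reference data sources or variables
output "state_bucket_name" {
  value = aws_s3_bucket.terraform_state.bucket
}
```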

Next, create a DynamoDB table to handle locking. Because DynamoDB is a shared, cloud-hosted database, everyone running Terraform against the same infrastructure uses one central place to record who holds the lock, which prevents several people from running Terraform against the same state at the same time.

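```hcl
resource "aws_dynamodb_table" "terraform_locks" {
  name         = "terraform-locks"
  billing_mode = "PAY_PER_REQUEST" # on-demand capacity, nothing to provision
  hash_key     = "LockID"          # the S3 backend expects a string key named LockID

  attribute {
    name = "LockID"
    type = "S" # S = string
  }
}
```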

Terraform expects both the S3 bucket and the DynamoDB table to exist before we configure the backend, so run `terraform apply` now to provision them.
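Once this bucket holds the state, deleting it by accident (for example with `terraform destroy`) would be painful. As an optional safeguard that is not part of the original setup, you could add a `lifecycle` block with `prevent_destroy` to the existing bucket resource:

```hcl
resource "aws_s3_bucket" "terraform_state" {
  bucket = "moses-dev-terraform-bucket"

  # Terraform rejects any plan that would destroy this bucket
  lifecycle {
    prevent_destroy = true
  }

  # Keep the existing versioning and encryption settings here unchanged
}
```

If you ever need to remove the bucket on purpose, delete the `lifecycle` block first.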

Next, configure the S3 backend:

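```hcl
terraform {
  backend "s3" {
    bucket         = "moses-dev-terraform-bucket"
    key            = "global/s3/terraform.tfstate" # path of the state object in the bucket
    region         = "us-east-1"
    dynamodb_table = "terraform-locks"             # table used for state locking
    encrypt        = true                          # encrypt the state object server-side
  }
}
```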
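For reference, recent Terraform releases (1.10 and later) let the S3 backend store the lock as a small lock file next to the state object via the `use_lockfile` argument, and HashiCorp has since marked the DynamoDB-based `dynamodb_table` option as deprecated. A sketch of that alternative, assuming you run a recent Terraform version; this walkthrough itself sticks with DynamoDB:

```hcl
terraform {
  backend "s3" {
    bucket       = "moses-dev-terraform-bucket"
    key          = "global/s3/terraform.tfstate"
    region       = "us-east-1"
    encrypt      = true
    use_lockfile = true # lock via a lock object in the bucket instead of DynamoDB
  }
}
```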

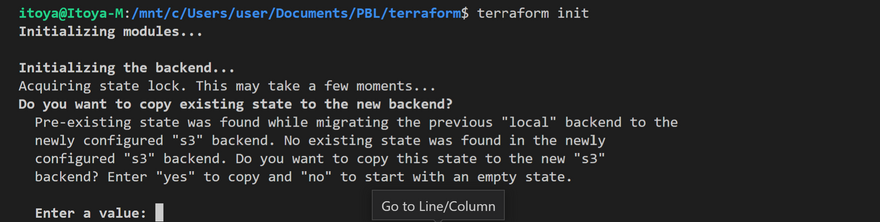

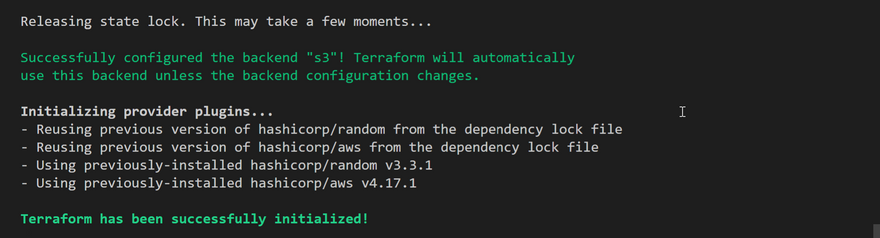

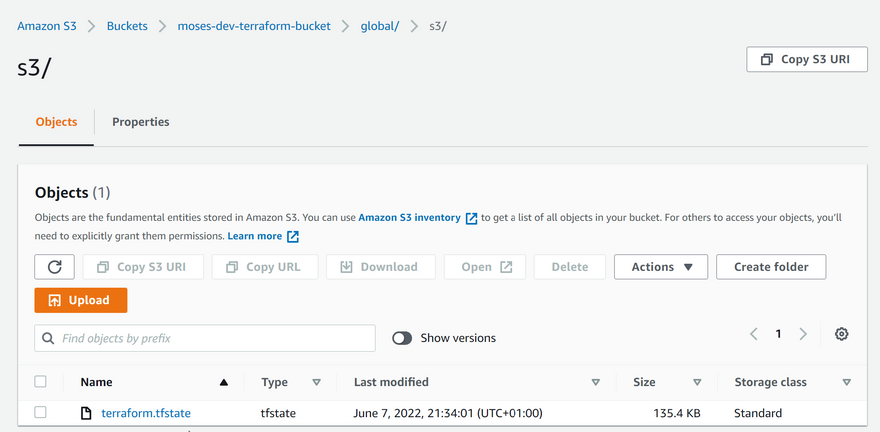

Now run `terraform init` and type `yes` when Terraform asks whether to copy the existing state to the new S3 backend.

Verify the changes in AWS. You should note the following:

- The `tfstate` file is now inside the S3 bucket
- The DynamoDB table we created has an entry for the state file (a `LockID` item holding a digest of the state)
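Because a backend block cannot reference variables, one way to avoid repeating these settings across several configurations is Terraform's partial backend configuration: keep only `key` in the `backend "s3"` block and move the shared settings into a separate file that you pass at init time. A sketch, assuming a hypothetical file named `backend.hcl`:

```hcl
# backend.hcl (hypothetical file name): settings merged into the
# backend "s3" block when running terraform init
bucket         = "moses-dev-terraform-bucket"
region         = "us-east-1"
dynamodb_table = "terraform-locks"
encrypt        = true
```

With `key = "global/s3/terraform.tfstate"` left in the backend block, you would then run `terraform init -backend-config=backend.hcl`.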

Now add Terraform outputs by putting the following in `output.tf`:

```hcl
output "s3_bucket_arn" {
  value       = aws_s3_bucket.terraform_state.arn
  description = "The ARN of the S3 bucket"
}

output "dynamodb_table_name" {
  value       = aws_dynamodb_table.terraform_locks.name
  description = "The name of the DynamoDB table"
}
```

Then run `terraform apply`.

Terraform now automatically reads the latest state from the S3 bucket to determine the current state of the infrastructure, so even if another engineer has applied changes, you always work from an up-to-date state file. In the S3 console, open the `tfstate` object and select the Versions tab to see the different versions of the state file.
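Other Terraform configurations can also read this project's outputs directly from the bucket with the `terraform_remote_state` data source. A minimal sketch, assuming a separate, hypothetical configuration with read access to the bucket; it reads the `s3_bucket_arn` output defined in `output.tf`:

```hcl
# In another Terraform configuration: read outputs from the shared state
data "terraform_remote_state" "global" {
  backend = "s3"

  config = {
    bucket = "moses-dev-terraform-bucket"
    key    = "global/s3/terraform.tfstate"
    region = "us-east-1"
  }
}

# Hypothetical consumer output that re-exports the bucket ARN
output "shared_state_bucket_arn" {
  value = data.terraform_remote_state.global.outputs.s3_bucket_arn
}
```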

In the next part of the series, we will discuss modules and do some more refactoring.

Code from Darey.io
