We recently tackled load testing ahead of a new product launch, and yeah, we did it in production. Read on to learn why we’re not totally crazy, how load testing works, and how you can adapt it to your own use case.

What is a load test?

So what is a load test? It’s an experiment! When we design systems, we can only estimate how services will handle larger volumes of data. A load test is the experiment that shows how our systems actually handle that surge of data.

Why bother?

We want confidence that our system will scale and handle volume as we expect. Are we scaling up correctly? How do our databases handle this surge of data? How is our application latency affected? Or, we want to walk away with learnings about bottlenecks or issues that we may have overlooked in our system.

These are not mutually exclusive. In fact, I would say that the most successful outcome is to achieve both of these goals. Success is success! Even failure can be a success. We should use load tests to find issues before our customers do.

Our use case

Campaigns is the Lob feature that automates direct mail campaigns at scale. Those last two words, “at scale,” are exactly why we wanted to load test the backend service that serves as the brains behind Campaigns:

- It handles the ingestion of an audience file (i.e., some sort of tabular data, currently a CSV, but in the future it could be Parquet files, JSON, etc.). This means reading it efficiently and chopping it up into individual rows that can be processed by our API.

- It handles the creation of mailpieces. This means taking these individual rows and creating a mailpiece from the details of the CSV.

- It handles record keeping. This helps us keep track of mailpieces within the system to surface to our customers as well as help with reconciliation in case something goes wrong.

So…What happens if someone wants to send out millions of postcards? Well, I was challenged to find out. Not gonna lie, it kind of felt like I was getting handed the keys to the Benz.

How do you conduct a load test?

The answer is that it all depends on your service. If you don’t already have a workflow diagram, create one. From there it should be obvious: however your application ingests data, that's your ingress point. One tool we use is k6, an awesome open-source tool that can be used to simulate API request traffic (i.e., simulating traffic for multiple users, making multiple requests per second).
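
To make "multiple users, making multiple requests per second" concrete, here is a minimal Python sketch of the idea. It is not k6 and not what we ran; `fake_request` is a hypothetical stand-in for a real API call, and the user and request counts are made up for illustration.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(user_id, request_id):
    """Hypothetical stand-in for one API call; returns (status, latency_seconds)."""
    started = time.perf_counter()
    time.sleep(0.001)  # pretend the service took about a millisecond
    return 200, time.perf_counter() - started


def run_virtual_user(user_id, requests_per_user, send=fake_request):
    """One simulated user sends its requests back to back."""
    return [send(user_id, n) for n in range(requests_per_user)]


def run_load(virtual_users, requests_per_user, send=fake_request):
    """Run all simulated users at the same time and collect every result."""
    results = []
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        futures = [
            pool.submit(run_virtual_user, user, requests_per_user, send)
            for user in range(virtual_users)
        ]
        for future in futures:
            results.extend(future.result())
    return results
```

Calling `run_load(5, 4)` simulates five users sending four requests each. In a real test, a purpose-built tool handles the ramp-up, pacing, and reporting that this sketch leaves out.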

Considerations

We started with a list of questions (and how we applied them). They will differ for your specific workflow, but they should get the wheels turning as you plan your own load test.

What is the scale/volume of the test?

For Campaigns, a load test meant uploading a massive CSV. In this case, we used a CSV file with millions of rows, which means we would be creating millions of mailpieces.

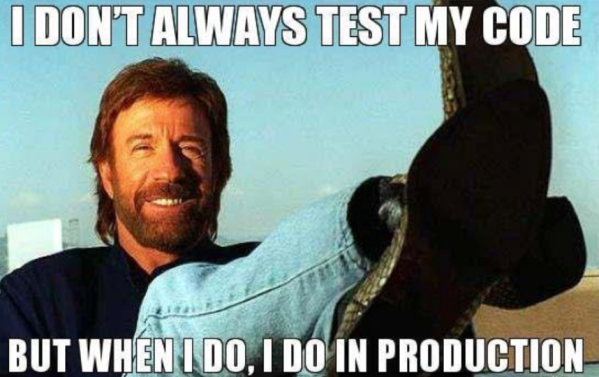
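
If you need a large input file of your own, a short script can generate one. The sketch below is an assumption about what such a file might look like: the column names and values are hypothetical, not Lob's actual audience file format.

```python
import csv
import io

# Hypothetical columns; a real audience file will have its own schema.
HEADER = ["name", "address_line1", "city", "state", "zip"]


def write_audience_csv(out, num_rows):
    """Stream num_rows synthetic rows to a file-like object, one row at a time."""
    writer = csv.writer(out)
    writer.writerow(HEADER)
    for i in range(num_rows):
        writer.writerow(
            [f"Test Recipient {i}", f"{100 + i} Load Test Ave", "San Francisco", "CA", "94107"]
        )
    return num_rows
```

Because rows are written one at a time, memory use stays flat even for millions of rows. To write to disk, open the target file with `newline=""` (as the `csv` module recommends) and pass the handle as `out`.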

Where will you test?

Is your test system a true reflection of production? We ran the load test in production/live mode. Say what? That's right. Lob's API produces physical output: an API request means that data gets routed through our system and to our partners, who then print a mailpiece that is inducted into the USPS mailstream. In other words, an API request typically results in a physical piece of mail arriving in a mailbox. So while we have a “test” system, creating mail in test mode doesn't kick off certain systems that we were hoping to exercise with the load test. Our production system is "live" in the sense that everything happens for real, so I had to dive into every part of our system end to end (p&m API, rendering, routing, partner systems) to ensure that even though we were in production, we didn’t actually create mailpieces.

When will you test?

We identified the best off-peak period to run this load test, and also took into consideration how long it would take.

What are some downstream systems that will get kicked off by this test? How do we make sure the results from those systems are acceptable?

For us, this meant asking, how can we ensure we’re not billed for any API usage from this test?

What are the warning signs I should be looking out for in our execution services X, Y, Z?

For example, we kept an eye on the service that creates the PDF from the digital file.

What are the warning signs I should be looking at in our notifications services X, Y, and Z?

We compared our webhook system's throughput against the throughput we expected, and watched for an elevated rate of 5xx errors (e.g., 503s).
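
As a rough sketch of what "an elevated rate of 5xx errors" can mean in practice, the snippet below computes the share of server errors in a window of response codes. The 1% threshold is an assumption for illustration, not the value we alert on.

```python
def server_error_rate(status_codes):
    """Fraction of responses in the window that are 5xx server errors."""
    if not status_codes:
        return 0.0
    errors = sum(1 for code in status_codes if 500 <= code <= 599)
    return errors / len(status_codes)


def should_alert(status_codes, threshold=0.01):
    """True when the 5xx rate exceeds the (assumed) acceptable threshold."""
    return server_error_rate(status_codes) > threshold
```

In most setups your monitoring stack computes this for you; the point is to decide on the threshold before the test starts.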

How do I monitor the health of our infrastructure to ensure we’re not red-lining any particular resource?

What are your benchmarks/metrics?

Identify your benchmarks and metrics up front so you know what success and failure look like. Some of the key metrics we measured were throughput, latency, and average time to render.
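
Here is a minimal sketch of how throughput and latency could be derived from raw request timings. The `Sample` shape and the choice of the nearest-rank 95th percentile are assumptions for illustration; your tooling may define these differently.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    started_at: float  # seconds since the test began
    duration: float    # seconds the request took


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least pct% of values at or below it."""
    if not values:
        raise ValueError("no values to summarize")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples):
    """Throughput over the whole run, plus average and p95 latency."""
    if not samples:
        raise ValueError("no samples to summarize")
    durations = [s.duration for s in samples]
    start = min(s.started_at for s in samples)
    end = max(s.started_at + s.duration for s in samples)
    elapsed = end - start
    return {
        "throughput_per_s": len(samples) / elapsed if elapsed > 0 else float("inf"),
        "latency_avg_s": sum(durations) / len(durations),
        "latency_p95_s": percentile(durations, 95),
    }
```

Averages hide slow outliers, which is why a high percentile is worth tracking alongside the mean.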

Wrap up

Discovery is half the fun of a load test. And remember, even “failure” is a success. With a list of findings in hand, the team can review and determine exactly where to invest engineering resources to improve the system, i.e., what changes will give you the most bang for your buck?

Two important learnings for us were:

- Our previous expectation was that our API workers in charge of creating mailpieces were the bottleneck—this turned out to be wrong! Our bottleneck ended up being our ingestion system, due to the worker being designed to ingest a CSV serially. Ultimately this led us to redesign this worker to ingest concurrently.

- We also discovered an unbounded transaction within our code; we were able to rework this particular operation to run in batches.
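
The two fixes above can be sketched together. This is not our actual worker: the `mailpieces` table, the `to_record` step, and the batch and pool sizes are all hypothetical. The idea is that per-row work is fanned out to a thread pool instead of running one row at a time, and each batch is committed as its own bounded transaction.

```python
import csv
import io
import sqlite3
from concurrent.futures import ThreadPoolExecutor
from itertools import islice


def batched(rows, size):
    """Yield lists of up to `size` items from any iterable."""
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def to_record(row):
    """Hypothetical per-row work, e.g. validation or a downstream API call."""
    return (row["name"].strip(), row["zip"].strip())


def ingest(csv_file, conn, batch_size=500, workers=4):
    """Read the CSV lazily, process each batch's rows concurrently, commit per batch."""
    reader = csv.DictReader(csv_file)
    total = 0
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for batch in batched(reader, batch_size):
            records = list(pool.map(to_record, batch))
            # `with conn` commits on success and rolls back on an exception,
            # so no single transaction grows beyond one batch.
            with conn:
                conn.executemany(
                    "INSERT INTO mailpieces (name, zip) VALUES (?, ?)", records
                )
            total += len(records)
    return total
```

Threads pay off when the per-row work is I/O-bound, such as network calls. For CPU-heavy work in Python, a process pool would be the closer fit. Database writes stay on the calling thread here because a `sqlite3` connection is not shared across threads by default.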

In addition to meeting the end goals of gaining confidence and identifying opportunities for improvement, teams can learn a lot about internal architecture and workflows—even outside of their own team or area of expertise.

Getting to run this magnitude of a test on our production system was an incredible experience. I previously mentioned doing this load test was like getting handed the keys to the Benz, and I gotta say, it was an awesome ride.

Article adapted from a presentation by Lob Software Engineer Sishaar Rao.
