When designing modern applications in the cloud, there is always the debate – should we base our application on a container engine, or should we go with a fully serverless solution?

In this blog post, we will review some of the pros and cons of each alternative, trying to understand which solution we should consider.

The containers alternative

Containers have been with us for about 10 years.

The Docker engine was released in 2013, and Kubernetes 1.0 was released in 2015.

The concept of packaging an application inside a container image brought many benefits:

- Portability – The ability to run the same code on any system that supports a container engine.

- Scalability – The ability to add or remove container instances according to application load.

- Isolation – The ability to limit the blast radius to a single container, instead of the whole running server (which in many cases used to run multiple applications).

- Resource efficiency – A container image usually contains only the bare minimum of required binaries and libraries (compared to a full operating system).

- Developer experience – The ability to integrate container development processes with developers' IDE, and with CI/CD pipelines.

- Consistency – Once you have completed creating the container image and fully tested it, it will be deployed and run in the same way every time.

- Fast deployment time – It takes a short amount of time to deploy a new container (or to delete a running container when it is no longer needed).
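To make the scalability point above more concrete, here is a minimal sketch of how an orchestrator might decide how many container instances to run. It uses the ratio documented for the Kubernetes Horizontal Pod Autoscaler (current replicas multiplied by current metric over target metric, rounded up). The function name, the default minimum and maximum, and the sample numbers are assumptions for illustration, and the sketch leaves out details such as the autoscaler's tolerance window.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Return how many container instances the observed load calls for.

    Based on the ratio documented for the Kubernetes Horizontal Pod
    Autoscaler: ceil(current_replicas * current_metric / target_metric).
    The min/max defaults are illustrative assumptions.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp the result to the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Hypothetical load: 3 containers at 90% average CPU, target is 60%.
    print(desired_replicas(3, 90.0, 60.0))
```

The same calculation scales in when the load drops, which is what allows containers to be removed once they are no longer needed.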

Containers are not perfect – they have their disadvantages, to name a few:

- Security – The container image is made of binaries, libraries, and code. Each of them may contain vulnerabilities and must be regularly scanned and updated, which is the customer’s responsibility.

- Storage challenges – Containers are stateless by default. They should not hold any persistent data, which forces them to connect to external (usually managed) storage services (such as object storage, managed NFS, managed file storage, etc.).

- Orchestration – When designing a container-based solution, you need to plan the networking side, meaning how to separate the public-facing interface (for receiving inbound traffic from customers) from the private subnets (for deploying containers or Pods, and for the communication between them).

Containers are very popular in many organizations (from small startups to large enterprises), and today organizations have many alternatives for running containers – from Amazon ECS, Azure Container Apps, and Google Cloud Run, to managed Kubernetes services such as Amazon EKS, Azure AKS, and Google GKE.

The Serverless alternative

Serverless, at a high level, is any solution that does not require end-users to deploy or maintain the underlying infrastructure (mostly servers).

There are many services under this category, to name a few:

- Object storage, such as Amazon S3, Azure Blob Storage, and Google Cloud Storage.

- Managed databases, such as Amazon Aurora, Azure SQL, and Google Cloud SQL.

- Message queuing services, such as Amazon SQS, Azure Service Bus, and Google Pub/Sub.

- Functions as a service (FaaS), such as AWS Lambda, Azure Functions, and Google Cloud Functions.

The Serverless alternative usually means using FaaS together with other managed services in the cloud provider's ecosystem (for example, running functions from container images, mounting persistent storage, or connecting to a managed database).

FaaS has been with us for nearly 10 years.

AWS Lambda became available in 2015, Azure Functions became available in 2016, and Google Cloud Functions became available in 2018.

The use of FaaS has advantages, to name a few:

- Infrastructure maintenance – The cloud provider is responsible for maintaining the underlying servers and infrastructure, including resiliency (e.g., deploying functions across multiple availability zones).

- Fast Auto-scaling – The cloud provider is responsible for adding or removing running functions according to the application's load. Customers do not need to take care of scale.

- Fast time to market – Customers can focus on what is important to their business, instead of the burden of provisioning servers.

- Cost – You pay for the time your functions actually run and for the number of times they are invoked (also known as invocations or executions).
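The pay-per-use model described above can be sketched as a small estimate. This is a simplified model under stated assumptions: it bills compute by memory multiplied by duration (GB-seconds), which is how AWS Lambda meters compute, and it takes both prices as parameters because real prices vary by provider and region. The function name and the sample values are hypothetical placeholders, not published prices.

```python
def estimate_faas_cost(invocations: int,
                       avg_duration_ms: float,
                       memory_mb: int,
                       price_per_million_requests: float,
                       price_per_gb_second: float) -> float:
    """Estimate a FaaS bill from invocation count and run time.

    Both prices are inputs on purpose: look them up in your provider's
    current price list rather than relying on the sample values below.
    """
    # Compute is metered as memory (GB) multiplied by run time (seconds).
    gb_seconds = invocations * (avg_duration_ms / 1000.0) * (memory_mb / 1024.0)
    request_cost = invocations / 1_000_000 * price_per_million_requests
    compute_cost = gb_seconds * price_per_gb_second
    return request_cost + compute_cost


if __name__ == "__main__":
    # Placeholder workload and placeholder prices, for illustration only.
    monthly = estimate_faas_cost(
        invocations=2_000_000,
        avg_duration_ms=500,
        memory_mb=512,
        price_per_million_requests=0.20,
        price_per_gb_second=0.00001,
    )
    print(f"Estimated monthly cost: {monthly:.2f}")
```

Because the bill is zero when nothing is invoked, this model favors spiky or infrequent workloads.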

FaaS is not perfect – it has its disadvantages, to name a few:

- Vendor lock-in – Each cloud provider has its own implementation of FaaS, which makes it hard to migrate functions between cloud providers without rewriting code and integrations.

- Maximum execution time – Functions have hard limits in terms of maximum execution time – AWS Lambda is limited to 15 minutes, Azure Functions (in the Consumption plan) are limited to 10 minutes, and Google Cloud Functions (1st gen HTTP functions) are limited to 9 minutes.

- Cold starts – A function that has not been invoked recently must be loaded before it can run, which can add seconds to the time it takes to respond.

- Security – Each cloud provider implements isolation between different functions running for different customers. Customers have no visibility into how each deployed function is protected by the cloud provider at the infrastructure level.

- Observability – Troubleshooting a running function in real time is challenging in a distributed architecture that runs on an environment fully managed by the cloud provider.

- Cost – Workloads with a steady, predictable load, or bugs in the function’s code that result in an endless loop, may generate high costs when running on FaaS.

How do we know what to choose?

The answer to this question is not black or white; it depends on the use case.

Common use cases for choosing containers or Kubernetes:

- Legacy application modernization – The ability to package legacy applications inside containers, and run them inside a managed infrastructure at scale.

- Environment consistency – The ability to run containers consistently across different environments, from Dev and Test to Prod.

- Hybrid and Multi-cloud – The ability to deploy the same containers across hybrid or multi-cloud environments (with adjustments such as connectivity to different storage or database services).

Common use cases for choosing Functions as a Service:

- Event-driven architectures – The ability to trigger functions by events, such as file upload, database change, etc.

- API backends – The ability to use functions to handle individual API requests and scale automatically based on demand.

- Data processing – Functions are suitable for data processing tasks such as batch processing, stream processing, ETL operations, and more because you can spawn thousands of them in a short time.

- Automation tasks – Functions are perfect for tasks such as log processing, scheduled maintenance tasks (such as initiating backups), etc.
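As an illustration of the event-driven use case above, here is a minimal sketch of a function triggered by a file upload. It follows the shape of an AWS Lambda Python handler receiving an S3 event notification. The bucket name, the object key, and the returned summary are hypothetical, and a real function would do actual work (such as parsing or resizing the file) where the comment indicates.

```python
from urllib.parse import unquote_plus


def handler(event, context):
    """Entry point in the style of an AWS Lambda Python handler.

    Collects the location of every uploaded object found in the event.
    """
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        # Real processing of the uploaded file would go here.
        uploaded.append(f"s3://{bucket}/{key}")
    return {"processed": len(uploaded), "objects": uploaded}


if __name__ == "__main__":
    # Hypothetical event, trimmed to the fields the handler reads.
    sample_event = {
        "Records": [
            {"s3": {"bucket": {"name": "uploads"},
                    "object": {"key": "reports/q1+summary.csv"}}}
        ]
    }
    print(handler(sample_event, None))
```

The function holds no state between invocations, so the provider can run as many copies in parallel as there are incoming events.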

One of the benefits of using a microservices architecture is the ability to choose a different solution for each microservice.

Customers can mix containers and FaaS in the same architecture.

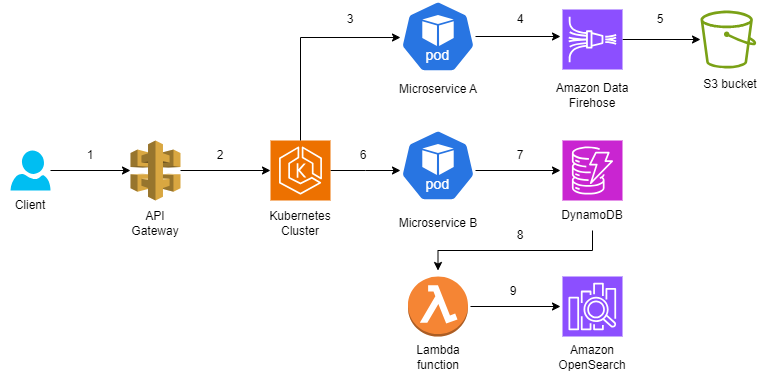

The diagram above shows a sample microservice architecture, which works as follows:

- A customer logs into an application using an API gateway.

- API calls are sent from the API gateway to a Kubernetes cluster (deployed with 3 Pods).

- User access logs are sent from the Kubernetes cluster to Microservice A.

- Microservice A sends the logs to Amazon Data Firehose.

- The Amazon Data Firehose converts the logs to JSON format and stores them in an S3 bucket.

- The Kubernetes cluster sends an API call to Microservice B.

- Microservice B sends a query for information from DynamoDB.

- A Lambda function pulls information from DynamoDB tables.

- The Lambda function sends the information from the DynamoDB tables to OpenSearch for full-text search, where it is later used to respond to customers' queries.

Note: Although the architecture above mentions AWS services, the same architecture can be implemented on top of Azure or GCP.
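To illustrate the last two steps of the flow, here is a minimal sketch of the transformation the Lambda function might apply between DynamoDB and OpenSearch. It assumes the function reads items in the typed attribute-value format returned by the low-level DynamoDB API (for example `{"S": "text"}` or `{"N": "42"}`) and flattens them into plain documents ready for indexing. The helper names and the sample item are hypothetical, and the actual read and index calls are intentionally left out.

```python
from decimal import Decimal


def from_dynamodb(value: dict):
    """Convert one typed DynamoDB attribute value to plain Python.

    Handles S, N, BOOL, NULL, L, and M. Binary and set types are not
    covered by this sketch and raise ValueError.
    """
    (type_tag, raw), = value.items()
    if type_tag == "S":
        return raw
    if type_tag == "N":
        # Numbers are transported as strings to preserve precision.
        number = Decimal(raw)
        return int(number) if number == number.to_integral_value() else float(number)
    if type_tag == "BOOL":
        return raw
    if type_tag == "NULL":
        return None
    if type_tag == "L":
        return [from_dynamodb(item) for item in raw]
    if type_tag == "M":
        return {name: from_dynamodb(item) for name, item in raw.items()}
    raise ValueError(f"unsupported DynamoDB type: {type_tag}")


def to_search_document(item: dict) -> dict:
    """Flatten a whole DynamoDB item into a document for a search index."""
    return {name: from_dynamodb(value) for name, value in item.items()}


if __name__ == "__main__":
    # Hypothetical item; the attribute names are made up for illustration.
    item = {
        "order_id": {"S": "o-1"},
        "total": {"N": "19.5"},
        "tags": {"L": [{"S": "gift"}]},
    }
    print(to_search_document(item))
```

Keeping this step as a pure function makes it easy to test locally, without access to either managed service.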

Summary

In this blog post, we have reviewed the pros and cons of using containers and Serverless.

Some use cases are more suitable for choosing containers (such as modernization of legacy applications), while others are more suitable for choosing serverless (such as event-driven architecture).

Before designing an application using containers or serverless, understand what you are trying to achieve, which services will allow you to accomplish your goal, and what those services' capabilities, limitations, and pricing are.

The public cloud allows you to achieve similar goals using different methods, based on different services – never stop questioning your architecture decisions over time, and if needed, adjust to gain better results (in terms of performance, cost, etc.).

About the authors

Efi Merdler-Kravitz is an AWS Serverless Hero and the author of 'Learning Serverless in Hebrew'. With over 15 years of experience, he brings extensive expertise in cloud technologies, encompassing both hands-on development and leadership of R&D teams.

You can connect with him on social media (https://linktr.ee/efimk).

Eyal Estrin is a cloud and information security architect, and the author of the books Cloud Security Handbook and Security for Cloud Native Applications, with more than 20 years in the IT industry.

You can connect with him on social media (https://linktr.ee/eyalestrin).

Opinions are his own and not the views of his employer.
