When designing modern applications in the cloud, one of the topics we need to consider is how to configure scalability policies for adding or removing resources according to the load on our application.

In this blog post, I will review resource-based and request-based scaling and try to explain the advantages and disadvantages of both alternatives.

What is resource-based scaling?

Using resource-based scaling, resources are allocated and scaled based on how much CPU or memory is being used by an application or a service.

The system monitors metrics like CPU utilization (e.g., percentage used) or memory usage. When these metrics exceed predefined thresholds, the service scales up (adds more resources); when they fall below thresholds, it scales down.

This method is effective for applications where resource usage directly correlates with workload, such as compute-intensive or memory-heavy tasks.

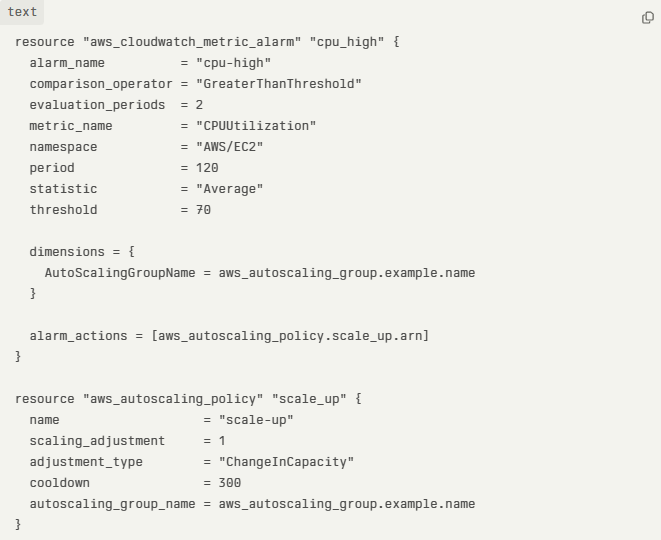

In the sample Terraform code below, a CloudWatch alarm is triggered once average CPU utilization rises above 70%:

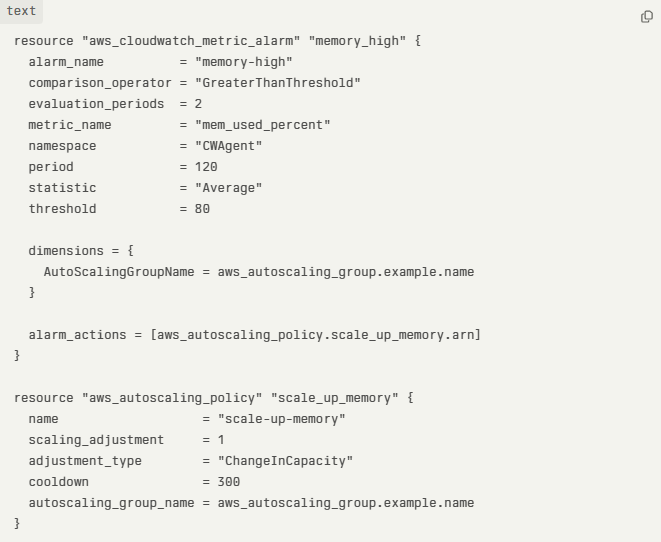
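For readers who want a text version to adapt, here is a minimal sketch of what such an alarm could look like. It is not necessarily the code shown in the image: the variable `asg_name`, the resource names, the 300-second period, and the one-instance scaling step are illustrative assumptions, and the AWS provider is assumed to be configured elsewhere.

```hcl
# Assumption: an EC2 Auto Scaling group already exists; its name is passed in.
variable "asg_name" {
  type        = string
  description = "Name of an existing EC2 Auto Scaling group"
}

# Simple scaling policy that adds one instance each time it is invoked.
resource "aws_autoscaling_policy" "scale_out" {
  name                   = "cpu-scale-out"
  autoscaling_group_name = var.asg_name
  policy_type            = "SimpleScaling"
  adjustment_type        = "ChangeInCapacity"
  scaling_adjustment     = 1   # illustrative step size
  cooldown               = 300 # seconds to wait before another scaling action
}

# Alarm that fires when average CPU across the group stays above 70%.
resource "aws_cloudwatch_metric_alarm" "cpu_high" {
  alarm_name          = "asg-cpu-above-70"
  alarm_description   = "Average CPU utilization above 70%"
  namespace           = "AWS/EC2"
  metric_name         = "CPUUtilization"
  statistic           = "Average"
  comparison_operator = "GreaterThanThreshold"
  threshold           = 70
  period              = 300 # seconds per evaluation window (illustrative)
  evaluation_periods  = 2   # two consecutive breaches before alarming

  dimensions = {
    AutoScalingGroupName = var.asg_name
  }

  # Invoke the scale-out policy when the alarm enters the ALARM state.
  alarm_actions = [aws_autoscaling_policy.scale_out.arn]
}
```

A matching scale-in policy and a low-CPU alarm would normally accompany this pair so that capacity is removed again when load drops.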

In the same way, a CloudWatch alarm can be defined in Terraform to trigger once average memory usage rises above 80%.
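A minimal sketch of a memory-based alarm follows. It assumes the workload runs as an Amazon ECS service, because ECS publishes a `MemoryUtilization` metric out of the box, whereas plain EC2 instances report memory only when the CloudWatch agent is installed. The three variables and the resource name are hypothetical placeholders.

```hcl
# Assumptions: an ECS cluster and service already exist, and a scaling
# policy ARN is supplied from elsewhere in the configuration.
variable "ecs_cluster_name" {
  type = string
}

variable "ecs_service_name" {
  type = string
}

variable "scale_out_policy_arn" {
  type        = string
  description = "ARN of the scaling policy to invoke (hypothetical input)"
}

# Alarm that fires when average memory utilization stays above 80%.
resource "aws_cloudwatch_metric_alarm" "memory_high" {
  alarm_name          = "ecs-memory-above-80"
  alarm_description   = "Average memory utilization above 80%"
  namespace           = "AWS/ECS"
  metric_name         = "MemoryUtilization"
  statistic           = "Average"
  comparison_operator = "GreaterThanThreshold"
  threshold           = 80
  period              = 60 # seconds per evaluation window (illustrative)
  evaluation_periods  = 3  # three consecutive breaches before alarming

  dimensions = {
    ClusterName = var.ecs_cluster_name
    ServiceName = var.ecs_service_name
  }

  alarm_actions = [var.scale_out_policy_arn]
}
```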

Advantages of using resource-based scaling

- Automatically adjust resources based on the required CPU or memory, which decreases the chance of over-provisioning (wasting money) or under-provisioning (potential performance issues).

- Decrease the chance of an outage due to a lack of CPU or memory resources.

- Once a policy is configured, there is no need to manually adjust the required resources.

Disadvantages of using resource-based scaling

- Autoscaling may respond slowly in case of a sudden spike in resource requirements.

- When the autoscaling policy is not properly tuned, it may lead to over-provisioning (higher resource cost) or under-provisioning (degraded performance).

- Creating an effective autoscaling policy may be complex and requires planning, monitoring, and expertise.

What is request-based scaling?

Using request-based scaling, resources are allocated and scaled based on the actual number of incoming requests (such as HTTP requests to a website or API calls to an API gateway).

In this method, the system counts the number of incoming requests. When the number of requests crosses a pre-defined threshold, it triggers a scaling action (i.e., adding or removing resources).

This method is suited for applications where each request is relatively uniform in resource consumption, or where user experience is tightly coupled to request volume (e.g., web APIs, serverless functions).
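As one possible illustration of request-based scaling for a web tier, the sketch below attaches a target tracking policy to an Auto Scaling group so that capacity follows the request count per target reported by an Application Load Balancer. The variables `asg_name` and `alb_resource_label`, the policy name, and the target value of 1000 are assumptions made for this example, not values from the article.

```hcl
# Assumption: an Auto Scaling group behind an Application Load Balancer exists.
variable "asg_name" {
  type        = string
  description = "Name of an existing EC2 Auto Scaling group"
}

variable "alb_resource_label" {
  type        = string
  description = "Label in the form app/<lb-name>/<lb-id>/targetgroup/<tg-name>/<tg-id>"
}

# Target tracking policy: AWS adds or removes instances to keep the
# request count per target close to the chosen target value.
resource "aws_autoscaling_policy" "requests_per_target" {
  name                   = "alb-requests-per-target"
  autoscaling_group_name = var.asg_name
  policy_type            = "TargetTrackingScaling"

  target_tracking_configuration {
    predefined_metric_specification {
      predefined_metric_type = "ALBRequestCountPerTarget"
      resource_label         = var.alb_resource_label
    }

    target_value = 1000 # illustrative; tune to what one instance can serve
  }
}
```

With target tracking, no separate alarm needs to be written by hand: the service creates and manages the underlying CloudWatch alarms for the policy.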

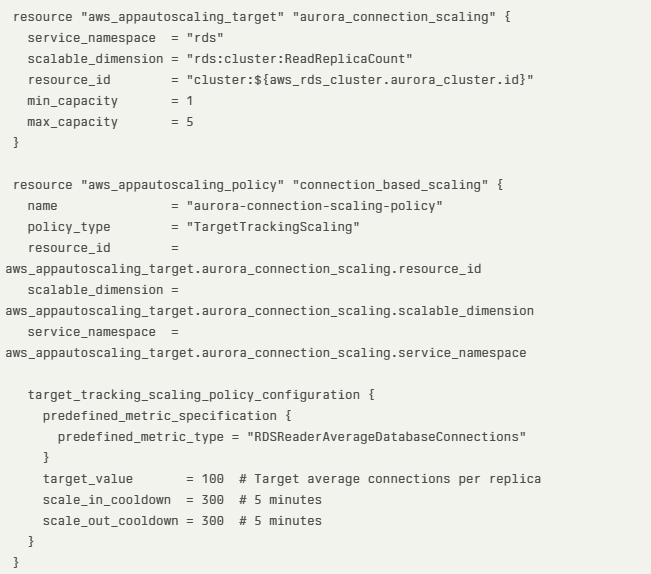

For example, Amazon Aurora supports request-based scaling that uses the average number of database connections as the scaling metric, and it can be configured with Terraform.
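A minimal sketch of Aurora replica auto scaling driven by average database connections is shown here. The variable `aurora_cluster_id`, the resource names, the replica limits, and the target of 100 connections are illustrative assumptions; the original code from the article may differ.

```hcl
# Assumption: an Aurora cluster already exists; its identifier is passed in.
variable "aurora_cluster_id" {
  type        = string
  description = "Identifier of an existing Aurora DB cluster"
}

# Register the cluster's read replica count as a scalable target.
resource "aws_appautoscaling_target" "aurora_replicas" {
  service_namespace  = "rds"
  scalable_dimension = "rds:cluster:ReadReplicaCount"
  resource_id        = "cluster:${var.aurora_cluster_id}"
  min_capacity       = 1 # illustrative lower bound
  max_capacity       = 5 # illustrative upper bound
}

# Target tracking policy on the average number of connections per reader.
resource "aws_appautoscaling_policy" "aurora_connections" {
  name               = "aurora-avg-connections"
  policy_type        = "TargetTrackingScaling"
  service_namespace  = aws_appautoscaling_target.aurora_replicas.service_namespace
  scalable_dimension = aws_appautoscaling_target.aurora_replicas.scalable_dimension
  resource_id        = aws_appautoscaling_target.aurora_replicas.resource_id

  target_tracking_scaling_policy_configuration {
    predefined_metric_specification {
      predefined_metric_type = "RDSReaderAverageDatabaseConnections"
    }

    target_value       = 100 # illustrative connections per replica
    scale_in_cooldown  = 300 # seconds
    scale_out_cooldown = 300 # seconds
  }
}
```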

Advantages of using request-based scaling

- Immediate or predictable scaling in response to user demand.

- Align resource allocation to user activity, which increases application responsiveness.

- Align cost to resource allocation, which is useful in SaaS applications when we want to charge customers back according to their actual usage (such as HTTP requests, API calls, etc.).

Disadvantages of using request-based scaling

- Potentially high cost during peak periods, because resources are allocated rapidly as demand grows.

- To gain the full benefit of request-based scaling, applications should be designed to be stateless.

- Creating a request-based scaling policy may be a complex task due to the need to manage multiple nodes or instances (including load-balancing, synchronization, and monitoring).

- During spikes, there may be temporary performance degradation due to the time it takes to allocate the required resources.

How do we decide on the right scaling alternative?

When designing modern (or SaaS) applications, no one solution fits all.

It depends on factors such as:

- Application’s architecture or services in use – Resource-based scaling is more suitable for architectures based on VMs or containers, while request-based scaling is more suitable for architectures using serverless functions (such as Lambda functions) or APIs.

- Workload patterns – Resource-based scaling fits workloads that rely heavily on compute resources (such as back-end services or batch jobs), while request-based scaling fits workloads designed for real-time data processing based on APIs (such as event-driven applications processing messages or HTTP requests).

- Business requirements – Resource-based scaling is more suitable for predictable or steady workloads (where scaling based on CPU/memory is more predictable) and for legacy or monolithic applications. Request-based scaling is more suitable for applications experiencing frequent spikes or for scenarios where the business would like to optimize cost per customer request (also known as pay-for-use).

- Organization/workload maturity – When designing early-stage SaaS, it is recommended to begin with resource-based scaling due to the ease of implementation. Once the SaaS matures and adds front-end or API services, it is time to begin using request-based scaling (for services that support this capability). For mature SaaS applications using microservices architecture, it is recommended to implement advanced monitoring and dynamically adjust scaling policies according to the target service (front-end/API vs. back-end/heavy compute resources).

Summary

Choosing the most suitable scaling alternative requires careful design of your workloads, an understanding of their demands (customer-facing/API calls vs. heavy back-end compute), and careful monitoring (to create the most suitable policies). It also requires understanding how important responsiveness to customer behavior is, from adjusting the required resources to the ability to charge customers back according to their actual resource usage.

I encourage you to learn about the different workload patterns, to be able to design highly scalable modern applications in the cloud, to meet customers' demand, and to be as cost-efficient as possible.

About the author

Eyal Estrin is a cloud and information security architect, an AWS Community Builder, and the author of the books Cloud Security Handbook and Security for Cloud Native Applications, with more than 20 years in the IT industry.

You can connect with him on social media (https://linktr.ee/eyalestrin).

Opinions are his own and not the views of his employer.

Top comments

That’s an important discussion. Configuring scalability policies is essential for ensuring performance and cost efficiency in cloud applications. Your review of resource-based and request-based scaling provides valuable insights for developers to make informed decisions based on their application’s specific needs.

Really solid overview! One thing I’d add is that observability (via tools like Prometheus/Grafana or AWS X-Ray) can help fine-tune scaling policies. We’ve avoided a few outages just by catching misconfigured alarms early.
