For more than a decade, organizations have been using machine learning for use cases such as prediction and decision support.

Because machine learning and deep learning demand high computational resources, and in many cases expensive hardware, the public cloud has become one of the more practical places to run them.

Terminology

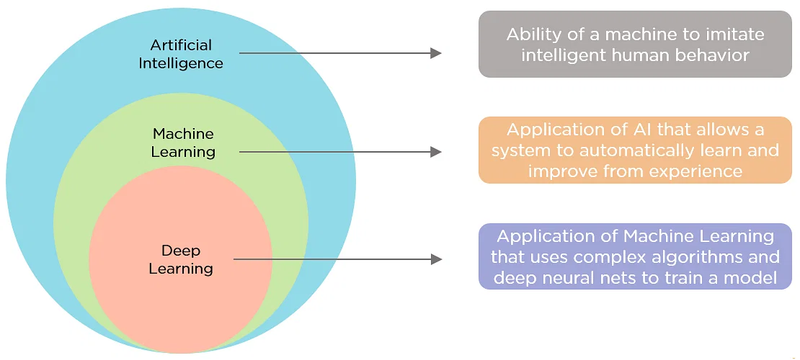

Before we dive into the topic of this post, let us begin with some terminology:

- Artificial Intelligence – "The ability of a computer program or a machine to think and learn", Wikipedia

- Machine Learning – "The task of making computers more intelligent without explicitly teaching them how to behave", Bill Brock, VP of Engineering at Very

- Deep Learning – "A branch of machine learning that uses neural networks with many layers. A deep neural network analyzes data with learned representations like the way a person would look at a problem", Bill Brock, VP of Engineering at Very

Public use cases of deep learning

- Disney makes its archive accessible using deep learning built on AWS

- NBA accelerates modern app time to market to ramp up fans’ excitement

- Satair: Enhancing customer service with Lilly, a smart online assistant built on Google Cloud

In this blog post, I will focus on deep learning and on the hardware available in the cloud for running it.

Deep Learning workflow

The deep learning process consists of the following steps:

- Prepare – Store data in a repository (such as object storage or a database)

- Build – Choose a machine learning framework (such as TensorFlow, PyTorch, Apache MXNet, etc.)

- Train – Choose hardware (compute, network, storage) to train the model you have built (the model "learns" from the data and is optimized)

- Inference – Use the trained model (at large scale) to make predictions

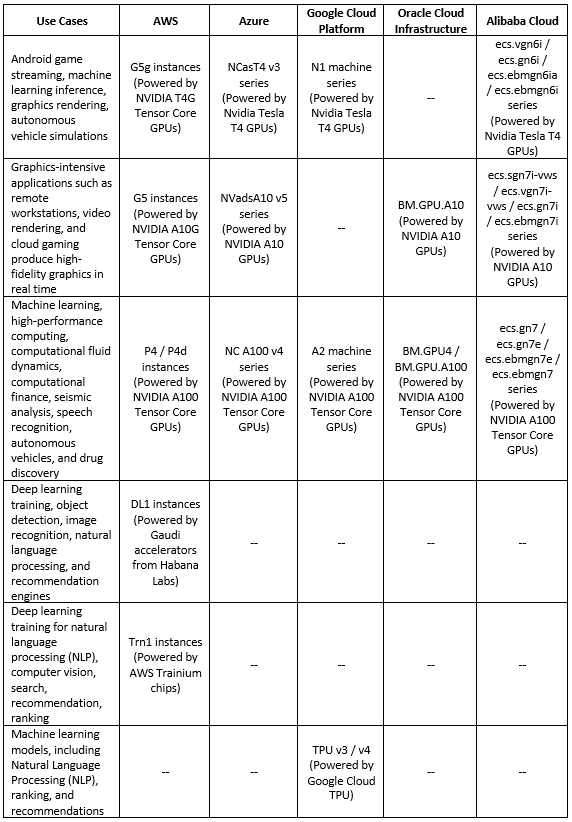
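
As a rough illustration of the four steps above, the following sketch walks through them in plain Python. It is a toy under stated assumptions: a single sigmoid neuron stands in for a deep network, a synthetic in-memory dataset stands in for object storage or a database, and the function names (`prepare`, `build`, `train`, `infer`) are hypothetical. In a real project, a framework such as TensorFlow or PyTorch would handle the build and train steps on accelerated hardware.

```python
import math
import random


def prepare(n_samples=200, seed=0):
    """Prepare: return (features, label) pairs. Synthetic stand-in for stored data."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        x = [rng.random(), rng.random()]
        # Label is 1 when the two features sum to more than 1 (made-up rule).
        samples.append((x, 1 if x[0] + x[1] > 1.0 else 0))
    return samples


def build(n_features):
    """Build: define the model. Here, one sigmoid neuron with zeroed weights."""
    return {"w": [0.0] * n_features, "b": 0.0}


def forward(model, x):
    """Compute the neuron's output probability for one sample."""
    z = sum(w * xi for w, xi in zip(model["w"], x)) + model["b"]
    return 1.0 / (1.0 + math.exp(-z))


def train(model, samples, epochs=50, lr=0.5):
    """Train: fit the weights with stochastic gradient descent on log loss."""
    for _ in range(epochs):
        for x, y in samples:
            error = forward(model, x) - y  # gradient of the loss w.r.t. z
            model["w"] = [w - lr * error * xi for w, xi in zip(model["w"], x)]
            model["b"] -= lr * error
    return model


def infer(model, x):
    """Inference: use the trained model to predict a label for new data."""
    return 1 if forward(model, x) >= 0.5 else 0


data = prepare()
model = train(build(n_features=2), data)
print(infer(model, [0.9, 0.9]), infer(model, [0.1, 0.1]))
```

The train step dominates the cost here, as it does at real scale, which is why the hardware choice matters most in that phase.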

Deep Learning processors comparison (Training phase)

Below is a comparison table for the various processors available in the public cloud, dedicated to the deep learning training phase:

Additional References

- Amazon EC2 - Accelerated Computing

- AWS EC2 Instances Powered by Gaudi Accelerators for Training Deep Learning Models

- AWS Trainium

- Azure - GPU-optimized virtual machine sizes

- Google Cloud - GPU platforms

- Google Cloud - Introduction to Cloud TPU

- Oracle Cloud Infrastructure - Compute Shapes - GPU Shapes

- Alibaba Cloud GPU-accelerated compute-optimized and vGPU-accelerated instance families

- NVIDIA T4 Tensor Core GPU

- NVIDIA A10 Tensor Core GPU

- NVIDIA A100 Tensor Core GPU

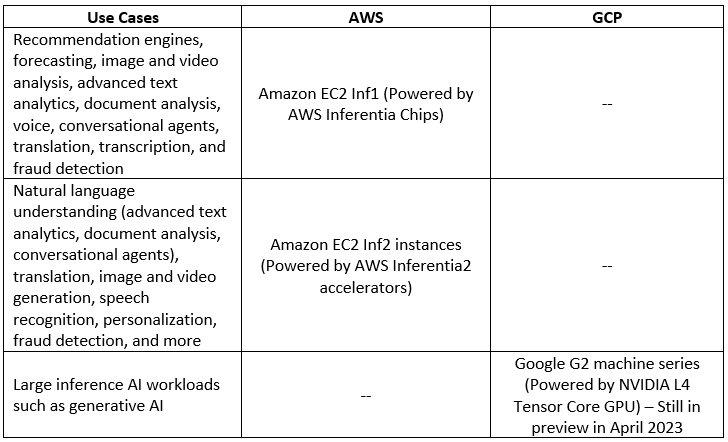
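
When narrowing down training hardware, a back-of-the-envelope memory estimate can help. The sketch below is not taken from any vendor tool. It assumes FP32 training with the Adam optimizer (roughly 16 bytes per parameter for weights, gradients, and two optimizer buffers), it ignores activation memory except for a hypothetical `headroom` fraction, and it uses the per-GPU memory sizes NVIDIA publishes for the T4, A10, and A100. The function names are made up for illustration.

```python
# Assumption: FP32 training with Adam needs about
# 4 bytes (weights) + 4 bytes (gradients) + 8 bytes (two Adam buffers) per parameter.
BYTES_PER_PARAM_FP32_ADAM = 16

# Memory per GPU in GB, as listed in NVIDIA's public specifications.
GPU_MEMORY_GB = {"T4": 16, "A10": 24, "A100 40GB": 40, "A100 80GB": 80}


def training_state_gb(num_params):
    """Estimate memory (GB, 1 GB = 1e9 bytes) for weights, gradients, and optimizer state."""
    return num_params * BYTES_PER_PARAM_FP32_ADAM / 1e9


def gpus_that_fit(num_params, headroom=0.5):
    """List single GPUs that hold the training state while keeping a
    `headroom` fraction of memory free for activations (hypothetical default)."""
    needed = training_state_gb(num_params)
    return [name for name, gb in GPU_MEMORY_GB.items() if needed <= gb * (1 - headroom)]


# Example: a model with one billion parameters.
print(training_state_gb(1_000_000_000))
print(gpus_that_fit(1_000_000_000))
```

Real requirements depend heavily on batch size, precision (mixed precision lowers the footprint), and whether the model is sharded across several accelerators, so treat the result as a first filter only.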

Deep Learning processors comparison (Inference phase)

Below is a comparison table for the various processors available in the public cloud, dedicated to the deep learning inference phase:

Summary

In this blog post, I have shared information about the hardware alternatives available in the public cloud for running deep learning workloads.

I recommend that you keep reading and expand your knowledge of both machine learning and deep learning, of the services available in the cloud, and of the use cases where deep learning delivers results.

Additional References

- AWS Machine Learning Infrastructure

- AWS - Select the right ML instance for your training and inference jobs

- AWS - Accelerate deep learning and innovate faster with AWS Trainium

- Azure AI Infrastructure

- Google Cloud Platform - AI Infrastructure

- Oracle Cloud - Machine Learning Services

- Alibaba Cloud - Machine Learning Platform for AI

About the Author

Eyal Estrin is a cloud and information security architect, the owner of the blog Security & Cloud 24/7 and the author of the book Cloud Security Handbook, with more than 20 years in the IT industry.

Eyal has been an AWS Community Builder since 2020.

You can connect with him on Twitter and LinkedIn.
