Amazon SageMaker HyperPod flexible training plans

Amazon SageMaker HyperPod announces flexible training plans, a new capability that allows you to train generative AI models within your timelines and budgets. Gain predictable model training timelines and run training workloads within your budget requirements, while continuing to benefit from features of SageMaker HyperPod such as resiliency, performance-optimized distributed training, and enhanced observability and monitoring.

For more details:

https://aws.amazon.com/about-aws/whats-new/2024/12/amazon-sagemaker-hyperpod-flexible-training-plans/

https://aws.amazon.com/blogs/aws/meet-your-training-timelines-and-budgets-with-new-amazon-sagemaker-hyperpod-flexible-training-plans/

https://docs.aws.amazon.com/sagemaker/latest/dg/reserve-capacity-with-training-plans.html

Amazon SageMaker HyperPod task governance

Amazon SageMaker HyperPod now provides you with centralized governance across all generative AI development tasks, such as training and inference. You have full visibility and control over compute resource allocation, ensuring the most critical tasks are prioritized and maximizing compute resource utilization, reducing model development costs by up to 40%.

For more details:

https://aws.amazon.com/about-aws/whats-new/2024/12/task-governance-amazon-sagemaker-hyperpod/

https://aws.amazon.com/blogs/aws/maximize-accelerator-utilization-for-model-development-with-new-amazon-sagemaker-hyperpod-task-governance/

AI apps from AWS partners now available in Amazon SageMaker

Amazon SageMaker partner AI apps is a new capability that lets customers discover, deploy, and use best-in-class machine learning (ML) and generative AI (GenAI) development applications from leading app providers privately and securely, without leaving Amazon SageMaker AI, so they can develop performant AI models faster.

For more details:

https://aws.amazon.com/about-aws/whats-new/2024/12/amazon-sagemaker-partner-ai-apps/

https://docs.aws.amazon.com/sagemaker/latest/dg/partner-apps.html

poolside in Amazon Bedrock (coming soon)

poolside's generative AI Assistant puts the power of poolside's malibu and point models right inside of your developers' Integrated Development Environment (IDE). poolside models are fine-tuned on your team's interactions, code base, practices, libraries, and knowledge bases.

For more details:

https://aws.amazon.com/bedrock/poolside/

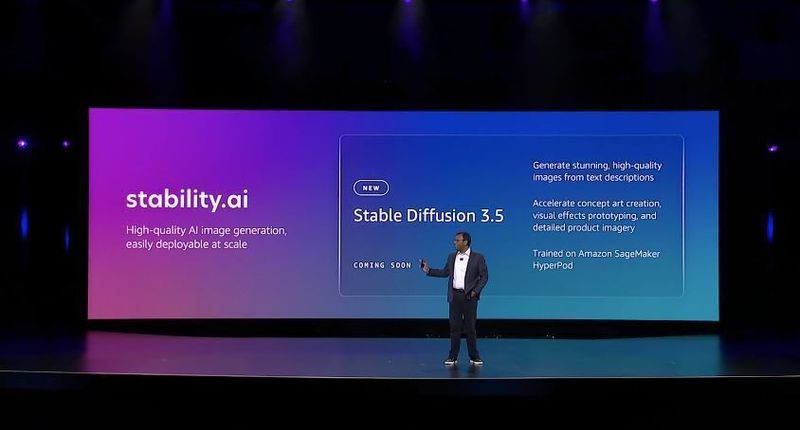

Stability AI in Amazon Bedrock (coming soon)

Three of Stability AI's newest cutting-edge text-to-image models are now available in Amazon Bedrock, providing high-speed, scalable, AI-powered visual content creation capabilities.

For more details:

https://aws.amazon.com/bedrock/stability-ai/

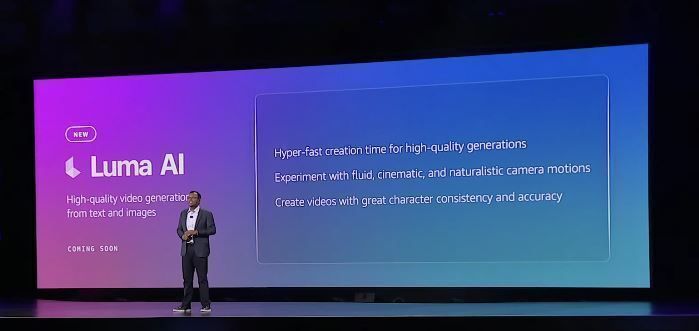

Luma AI in Amazon Bedrock (coming soon)

Luma AI is a startup building multimodal foundation models (FMs) and software products with the goal of helping consumers and professionals across industries creatively grow, ideate, and build. Luma AI’s goal is to create an “imagination engine” where users can play with and build new worlds without limiting the imagination.

For more details:

https://aws.amazon.com/bedrock/luma-ai/

Amazon Bedrock Marketplace

Amazon Bedrock Marketplace provides generative AI developers access to over 100 publicly available and proprietary foundation models (FMs), in addition to Amazon Bedrock’s industry-leading, serverless models. Customers deploy these models onto SageMaker endpoints where they can select their desired number of instances and instance types. Amazon Bedrock Marketplace models can be accessed through Bedrock’s unified APIs, and models which are compatible with Bedrock’s Converse APIs can be used with Amazon Bedrock’s tools such as Agents, Knowledge Bases, and Guardrails.

For more details:

https://aws.amazon.com/about-aws/whats-new/2024/12/amazon-bedrock-marketplace-100-models-bedrock/

https://aws.amazon.com/blogs/aws/amazon-bedrock-marketplace-access-over-100-foundation-models-in-one-place/

https://docs.aws.amazon.com/bedrock/latest/userguide/amazon-bedrock-marketplace.html

Amazon Bedrock supports prompt caching (Preview)

Prompt caching is a new capability that can reduce costs by up to 90% and latency by up to 85% for supported models by caching frequently used prompts across multiple API calls. It allows you to cache repetitive inputs and avoid reprocessing context, such as long system prompts and common examples that help guide the model’s response. When the cache is used, fewer computing resources are needed to generate output. As a result, Amazon Bedrock can process your request faster and pass along the cost savings from using fewer resources.
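
As a rough illustration of where a cache checkpoint might sit in a request, the sketch below builds a payload in the shape of the Bedrock Converse API, with a `cachePoint` block placed after a long system prompt. The helper name `build_cached_request`, the model ID placeholder, and the prompt text are assumptions for illustration; check the prompt caching documentation for the models and fields supported during the preview.

```python
from typing import Any


def build_cached_request(model_id: str, system_prompt: str, user_text: str) -> dict[str, Any]:
    """Build Converse-style request arguments with a cache checkpoint.

    Everything before the cachePoint block (here, the long system prompt)
    is the prefix that is eligible for caching across calls.
    """
    return {
        "modelId": model_id,
        "system": [
            {"text": system_prompt},
            {"cachePoint": {"type": "default"}},
        ],
        "messages": [
            {"role": "user", "content": [{"text": user_text}]},
        ],
    }


# Hypothetical values; replace with a model that supports prompt caching.
request = build_cached_request(
    model_id="<model-id-with-caching-support>",
    system_prompt="You are a support assistant. " + "Policy text. " * 200,
    user_text="How do I reset my password?",
)

# With boto3 and AWS credentials configured, the call would look like:
#   import boto3
#   client = boto3.client("bedrock-runtime")
#   response = client.converse(**request)
print(list(request))
```

Only the user message changes between calls in this sketch, so the long system prompt is the part that benefits from being cached.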

For more details:

https://aws.amazon.com/about-aws/whats-new/2024/12/amazon-bedrock-preview-prompt-caching/

https://aws.amazon.com/blogs/aws/reduce-costs-and-latency-with-amazon-bedrock-intelligent-prompt-routing-and-prompt-caching-preview/

https://docs.aws.amazon.com/bedrock/latest/userguide/prompt-caching.html

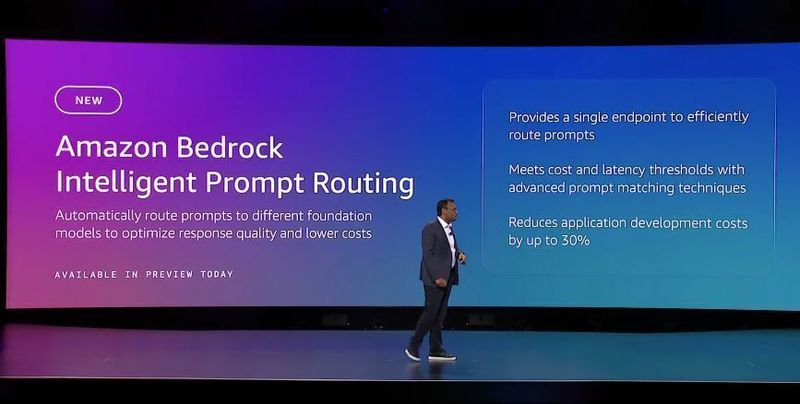

Amazon Bedrock Intelligent Prompt Routing (Preview)

Amazon Bedrock Intelligent Prompt Routing routes prompts to different foundation models within a model family, helping you optimize for quality of responses and cost. Using advanced prompt matching and model understanding techniques, Intelligent Prompt Routing predicts the performance of each model for each request and dynamically routes each request to the model that it predicts is most likely to give the desired response at the lowest cost. Customers can choose from two prompt routers in preview that route requests either between Claude 3.5 Sonnet and Claude Haiku, or between Llama 3.1 8B and Llama 3.1 70B.
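
The routing idea can be pictured with a toy sketch: given a predicted quality score and a price for each model, pick the cheapest model whose predicted quality clears a threshold. This is not how Bedrock implements routing; the `Candidate` class, the scores, the prices, and the threshold are all made-up values for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    name: str
    predicted_quality: float  # hypothetical score in [0, 1] for this prompt
    cost_per_1k_tokens: float  # hypothetical price, arbitrary units


def route(candidates: list[Candidate], min_quality: float) -> Candidate:
    """Return the cheapest candidate that meets the quality bar.

    If none meets it, fall back to the highest-quality candidate.
    """
    good_enough = [c for c in candidates if c.predicted_quality >= min_quality]
    if good_enough:
        return min(good_enough, key=lambda c: c.cost_per_1k_tokens)
    return max(candidates, key=lambda c: c.predicted_quality)


small = Candidate("small-model", predicted_quality=0.82, cost_per_1k_tokens=0.2)
large = Candidate("large-model", predicted_quality=0.95, cost_per_1k_tokens=1.0)

# An easy prompt: the small model is predicted to be good enough.
print(route([small, large], min_quality=0.8).name)  # small-model
# A higher bar: only the large model clears it.
print(route([small, large], min_quality=0.9).name)  # large-model
```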

For more details:

https://aws.amazon.com/about-aws/whats-new/2024/12/amazon-bedrock-intelligent-prompt-routing-preview/

https://aws.amazon.com/blogs/aws/reduce-costs-and-latency-with-amazon-bedrock-intelligent-prompt-routing-and-prompt-caching-preview/

https://docs.aws.amazon.com/bedrock/latest/userguide/prompt-routing.html

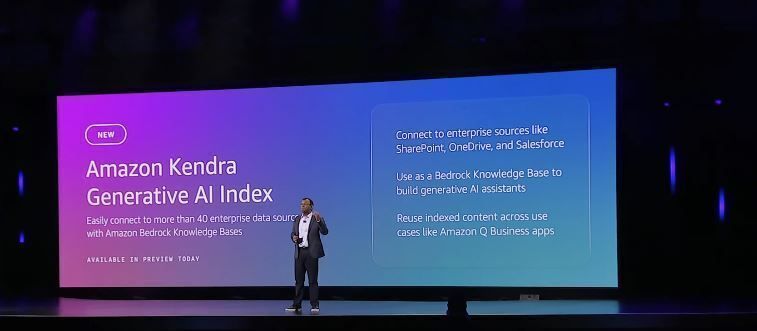

Amazon Kendra Generative AI Index (Preview)

Amazon Kendra is an AI-powered search service enabling organizations to build intelligent search experiences and retrieval augmented generation (RAG) systems to power generative AI applications. Starting today, AWS customers can use a new index type, the GenAI Index, for RAG and intelligent search. With the Kendra GenAI Index, customers get high out-of-the-box search accuracy powered by the latest information retrieval technologies and semantic models.

For more details:

https://aws.amazon.com/about-aws/whats-new/2024/12/genai-index-amazon-kendra/

https://aws.amazon.com/blogs/machine-learning/introducing-amazon-kendra-genai-index-enhanced-semantic-search-and-retrieval-capabilities/

https://docs.aws.amazon.com/kendra/latest/dg/hiw-index-types.html

Amazon Bedrock Knowledge Bases supports structured data retrieval (Preview)

Amazon Bedrock Knowledge Bases now supports natural language querying to retrieve structured data from your data sources. With this launch, Bedrock Knowledge Bases offers an end-to-end managed workflow for customers to build custom generative AI applications that can access and incorporate contextual information from a variety of structured and unstructured data sources. Using advanced natural language processing, Bedrock Knowledge Bases can transform natural language queries into SQL queries, allowing users to retrieve data directly from the source without the need to move or preprocess the data.
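
To make the flow concrete, here is a toy sketch of the two steps involved: a natural language question is turned into SQL, and the SQL runs against the data where it lives. The translation step is a hard-coded stand-in (`translate_to_sql` is a hypothetical helper, not a Bedrock API), and an in-memory SQLite table plays the role of the structured data source.

```python
import sqlite3

# Stand-in for the managed translation step; a real system would generate
# the SQL from the question and the table schema.
KNOWN_QUESTIONS = {
    "what were total sales by region?":
        "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region",
}


def translate_to_sql(question: str) -> str:
    """Hypothetical helper: map a question to a SQL query."""
    return KNOWN_QUESTIONS[question.strip().lower()]


def answer(conn: sqlite3.Connection, question: str) -> list[tuple]:
    """Translate the question, then query the data in place."""
    return conn.execute(translate_to_sql(question)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("EMEA", 120.0), ("APAC", 80.0), ("EMEA", 30.0)],
)

print(answer(conn, "What were total sales by region?"))
# [('APAC', 80.0), ('EMEA', 150.0)]
```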

For more details:

https://aws.amazon.com/about-aws/whats-new/2024/12/amazon-bedrock-knowledge-bases-structured-data-retrieval/

https://docs.aws.amazon.com/bedrock/latest/userguide/knowledge-base-supported.html

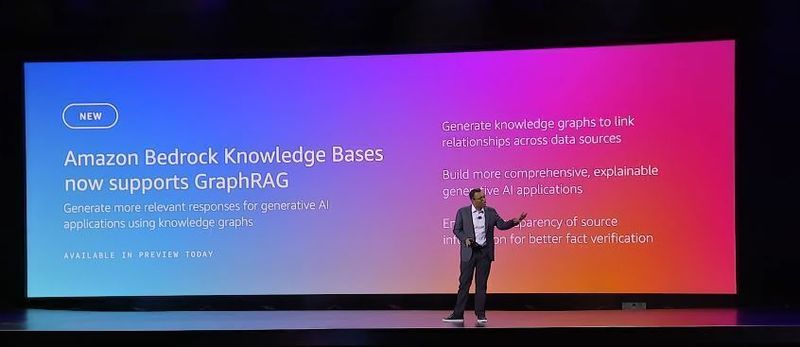

Amazon Bedrock Knowledge Bases now supports GraphRAG (Preview)

GraphRAG enhances generative AI applications by providing more accurate and comprehensive responses to end users by using RAG techniques combined with graphs.
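
One way to picture the idea is a toy sketch in which documents are linked through the entities they mention, and retrieval follows those links to pull in related context that a direct match would miss. This is an illustration only, not the Bedrock Knowledge Bases implementation; the documents, entity links, and the `graph_retrieve` helper are invented for the example.

```python
# Each document lists the entities it mentions (hypothetical data).
DOCS = {
    "doc1": {"text": "Acme acquired Bolt in 2023.", "entities": {"Acme", "Bolt"}},
    "doc2": {"text": "Bolt makes battery packs.", "entities": {"Bolt"}},
    "doc3": {"text": "Cirrus sells weather data.", "entities": {"Cirrus"}},
}


def graph_retrieve(query_entity: str, hops: int = 1) -> list[str]:
    """Return IDs of documents reachable from query_entity.

    Hop 0 matches documents that mention the entity directly; each further
    hop adds documents that share an entity with the ones already found.
    """
    entities = {query_entity}
    found: set[str] = set()
    for _ in range(hops + 1):
        for doc_id, doc in DOCS.items():
            if doc["entities"] & entities:
                found.add(doc_id)
        # Expand the frontier with every entity seen in the found documents.
        for doc_id in found:
            entities |= DOCS[doc_id]["entities"]
    return sorted(found)


print(graph_retrieve("Acme", hops=0))  # ['doc1']
print(graph_retrieve("Acme", hops=1))  # ['doc1', 'doc2']
```

With one hop, a question about Acme also surfaces the document about Bolt's products, because the two documents are connected through the shared entity.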

For more details:

https://aws.amazon.com/blogs/aws/new-amazon-bedrock-capabilities-enhance-data-processing-and-retrieval/

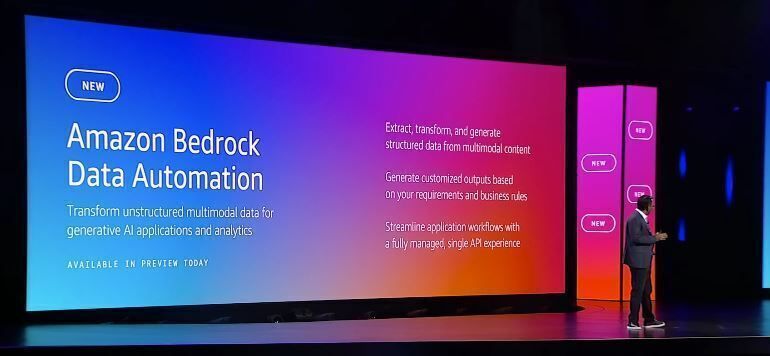

Amazon Bedrock Data Automation (Preview)

Amazon Bedrock Data Automation (BDA) is a new feature of Amazon Bedrock that enables developers to automate the generation of valuable insights from unstructured multimodal content such as documents, images, video, and audio to build GenAI-based applications. These insights include video summaries of key moments, detection of inappropriate image content, automated analysis of complex documents, and much more. Developers can also customize BDA’s output to generate specific insights in consistent formats required by their systems and applications.

For more details:

https://aws.amazon.com/about-aws/whats-new/2024/12/amazon-bedrock-data-automation-available-preview/

https://aws.amazon.com/bedrock/bda/

Amazon Bedrock Guardrails multimodal toxicity detection (Preview)

Amazon Bedrock Guardrails now supports multimodal toxicity detection for image content, enabling organizations to apply content filters to images. This new capability with Guardrails, now in public preview, removes the heavy lifting required by customers to build their own safeguards for image data or spend cycles with manual evaluation that can be error-prone and tedious.

For more details:

https://aws.amazon.com/about-aws/whats-new/2024/12/amazon-bedrock-guardrails-multimodal-toxicity-detection-image-content-preview/

https://aws.amazon.com/blogs/aws/amazon-bedrock-guardrails-now-supports-multimodal-toxicity-detection-with-image-support/

https://docs.aws.amazon.com/bedrock/latest/userguide/guardrails-mmfilter.html

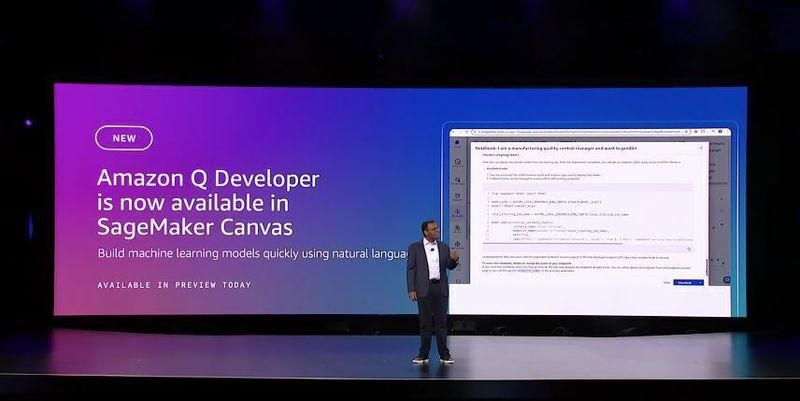

Amazon Q Developer is now available in SageMaker Canvas (Preview)

Starting today, you can build ML models using natural language with Amazon Q Developer, now available in Amazon SageMaker Canvas in preview. You can now get generative AI-powered assistance through the ML lifecycle, from data preparation to model deployment. With Amazon Q Developer, users of all skill levels can use natural language to access expert guidance to build high-quality ML models, accelerating innovation and time to market.

For more details:

https://aws.amazon.com/about-aws/whats-new/2024/12/amazon-q-developer-guide-sagemaker-canvas-users-ml-development/

https://aws.amazon.com/blogs/aws/use-amazon-q-developer-to-build-ml-models-in-amazon-sagemaker-canvas/

https://docs.aws.amazon.com/sagemaker/latest/dg/canvas-q.html

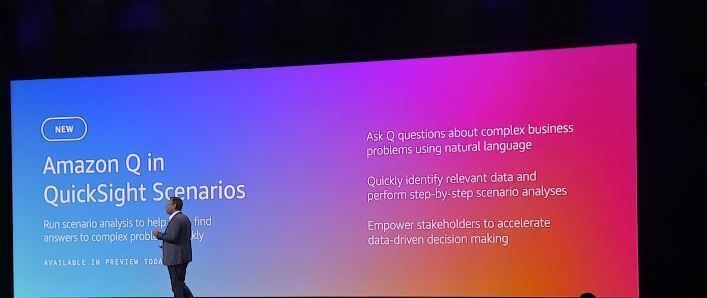

Amazon Q in QuickSight Scenarios (Preview)

A new scenario analysis capability of Amazon Q in QuickSight is now available in preview. This new capability provides an AI-assisted data analysis experience that helps you make better decisions, faster. Amazon Q in QuickSight simplifies in-depth analysis with step-by-step guidance, saving hours of manual data manipulation and unlocking data-driven decision-making across your organization.

For more details:

https://aws.amazon.com/about-aws/whats-new/2024/12/scenario-analysis-capability-amazon-q-quicksight-preview/

https://aws.amazon.com/blogs/aws/solve-complex-problems-with-new-scenario-analysis-capability-in-amazon-q-in-quicksight/

https://docs.aws.amazon.com/quicksight/latest/user/working-with-scenarios.html

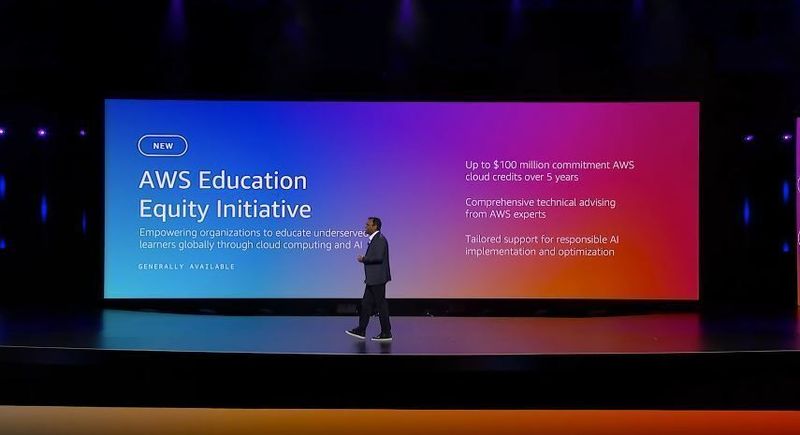

AWS Education Equity Initiative

Amazon announces a five-year commitment of cloud technology and technical support for organizations creating digital learning solutions that expand access for underserved learners worldwide through the AWS Education Equity Initiative. While the use of educational technologies continues to rise, many organizations lack access to cloud computing and AI resources needed to accelerate and scale their work to reach more learners in need.

For more details:

https://aws.amazon.com/about-aws/whats-new/2024/12/aws-education-equity-boost-education-underserved-learners/

https://aws.amazon.com/about-aws/our-impact/education-equity-initiative/

About the author

Eyal Estrin is a cloud and information security architect, an AWS Community Builder, and the author of the books Cloud Security Handbook and Security for Cloud Native Applications, with more than 20 years in the IT industry.

You can connect with him on social media (https://linktr.ee/eyalestrin).

Opinions are his own and not the views of his employer.
