Cloud providers are expensive, and spending on infrastructure can be painful. But you know what's more painful? Spending on unused infrastructure. Infrastructure that you don't even remember choosing in the first place.

In the current era of microservices, with almost everything on the cloud, it is extremely important to spend on infrastructure wisely. During the initial phase of designing and architecting, many of us tend to get carried away by scalability and availability. We go overboard and design a system that is over-engineered and a bit too ready for traffic it won't even see in the next five to ten years. I'm not saying that we shouldn't be future-ready. We definitely need to design systems that are robust and scalable enough to withstand harsh conditions. But what we need to avoid is building bulky systems that come with never-ending maintenance costs. It's like a broadband plan, or any other plan you opt for. When you know that the basic plan meets your needs, why pay for a premium plan you won't even use (especially when you know that you can switch to premium at any moment)?

Figuring out the boo-boo

In the last project I worked on, we faced a similar situation. The infrastructure at hand was over-engineered, resulting in numerous repositories, huge maintenance costs and unhappy developers. Carrying out daily tasks was getting difficult because there was so much to deal with. Our major pain points were:

Managing the cloud instances

We use AWS as our cloud provider, and we overused their compute services. We had bulky EC2 instances and a rather complex auto-scaling and monitoring strategy. We later realised that we didn't even need the EC2 instances and that they could easily be replaced by ECS and Docker containers.

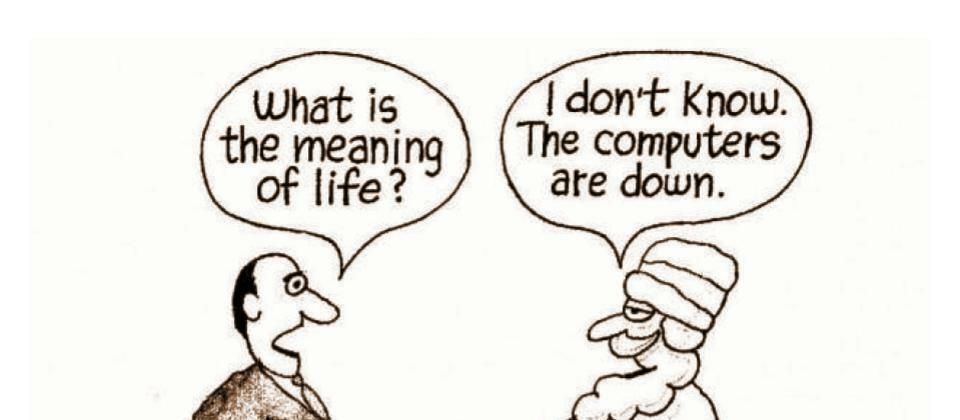

Getting lost in the world of AWS while debugging issues

Whenever there was a new bug in production, it made our hearts skip a beat (not in a good way). We literally used to get lost in the world of AWS and sometimes even forget why we landed on the page in the first place.

The repository maze

We dreaded looking at our repositories to fix a bug, mainly because there were too many to start with, and we ended up going through all of them for every single bug. No form of graph traversal could save us.

Varnish aches

We used Varnish for stitching our pages and, fun fact, there isn't much documentation on troubleshooting Varnish. Even the much-beloved Stack Overflow seemed powerless sometimes.

So here we had an infrastructure that was extremely difficult to use and maintain. Within a couple of months we figured out that this wouldn't fly. We acted immediately and ran a lot of spikes, and we came up with an alternative solution that was far more maintainable and scalable.

Minimal impact on the current services

Our alternative was to use ECS instead of EC2, mainly because ECS met our needs and we didn't want to pay for servers we wouldn't need. We also replaced Varnish with Kong. Kong, which is a fancy Nginx (in my opinion), had a slight learning curve, decent documentation and good plugin support.

The only thing left was implementing it and going live. Being a platform team, we help other teams go live by staying alive. It is supremely important that we don't go down, and we knew that the transition needed to be done carefully. We ran thorough rounds of testing, and only when we were finally confident in the results did we go live.

Projecting the gains

Personally, I feel this was the most difficult task. You have redesigned your system and have taken a couple of months to do so. You say that the current solution is better. It has made maintenance easier and helps you fix bugs quickly. But if you have spent far more than what you have gained, or would gain in the coming years, then was it even worth it? How do you justify your decision? And more importantly, what are the metrics to measure the success of your decision?

We answered these concerns by tracking how often we got bugs before the redesign versus after it. We also made use of AWS Cost Explorer, and found that within six months we had saved as much as the development cost of the redesign. Also note that we have since rolled out three new services and, on the whole, we are still saving costs, i.e. our clients got three new services without paying a buck extra for them; instead, they are gaining every day.
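To make the break-even reasoning concrete, here is a minimal sketch of the arithmetic. The figures are made up for illustration and are not our actual bills, and `payback_month` is a hypothetical helper, not something Cost Explorer provides. It assumes you have exported monthly totals for the old and new setups and know roughly what the redesign cost in development time.

```python
from itertools import accumulate


def payback_month(redesign_cost, old_monthly, new_monthly):
    """Return the 1-based month in which cumulative savings first cover
    the redesign cost, or None if that never happens in the given data."""
    savings = [old - new for old, new in zip(old_monthly, new_monthly)]
    for month, total_saved in enumerate(accumulate(savings), start=1):
        if total_saved >= redesign_cost:
            return month
    return None


# Hypothetical numbers: monthly bill before and after, and the one-off
# development cost of the redesign (all in the same currency).
old_bill = [10_000] * 12
new_bill = [6_000] * 12
redesign_cost = 24_000

print(payback_month(redesign_cost, old_bill, new_bill))  # 6 with these numbers
```

If the function returns `None`, the savings in the period you looked at have not yet paid for the redesign, which is a useful thing to know before you claim victory.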

The silver lining

After going live, we got zero bugs. Just kidding. We did get bugs, but this time we had more confidence and fewer repositories to traverse. Our faith in Stack Overflow was restored. We could find our way around the AWS console too. We could finally sleep with fewer nightmares.

If you are pondering whether you need to redesign your system or not, you have already begun your journey. The key here is to actually think, reason and finally accept. If, after careful consideration, you still believe your system is decent, congrats. But if you feel that you have made a boo-boo, like we did, congrats again. You have identified the shortcoming and are about to embark on a whole new adventure of redesigning your architecture.

Top comments (5)

This was a really fun summary of your process and outcomes, @ujjavala - thanks for sharing! I do have a bunch of questions:

Did you need to project these gains ahead of time, and were those the same metrics that you ended up using after implementation? Were there any surprises at all, or did everything work as expected and optimize as expected?

Thanks @ellativity. Glad that you liked it.

To answer your question, the entire redesign and refactoring was done to get a better grip on what we had developed. As mentioned in the blog, with the earlier design it was becoming difficult to fix bugs, and sometimes when we had production bugs we spent nights fixing them. It got to the point where we just couldn't go on with the code. On top of that, with the huge infrastructure, we were losing a lot of money since most of it was not even being used. The entire redesign took around 2-3 months (most of that time went into getting approvals) and IMO it helped us a lot.

To measure it, we used RED metrics (rate, errors, duration) and, of course, the cost gains that we had.
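For anyone unfamiliar with RED, here is a rough sketch of what the three numbers mean for a single service over a time window. This is not our actual monitoring setup; the `Request` record, the `red_metrics` helper and the sample data are all hypothetical, and counting only 5xx responses as errors is an assumption.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Request:
    duration_ms: float  # how long the request took
    status: int         # HTTP status code returned


def red_metrics(requests, window_seconds):
    """Compute Rate, Errors and Duration for requests seen in one window."""
    total = len(requests)
    errors = sum(1 for r in requests if r.status >= 500)
    return {
        "rate_per_s": total / window_seconds,
        "error_ratio": errors / total if total else 0.0,
        "median_ms": median(r.duration_ms for r in requests) if total else None,
    }


# Hypothetical sample: four requests observed in a two-second window.
sample = [
    Request(10, 200),
    Request(20, 200),
    Request(30, 500),
    Request(40, 200),
]
print(red_metrics(sample, window_seconds=2.0))
```

Comparing these three numbers per service before and after a change gives a quick read on whether things got better or worse.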

As for surprises, I reckon we didn't have many. Yes, we had bugs in the beginning, but we could fix them easily as we now had a smaller codebase and better logs.

Thanks for responding and explaining in more detail. So, although projecting gains was important, what was more important was that the existing codebase was unmanageable full-stop? I think I understand!

Ain't that the truth 90% of the time, tho!

Exactly! Since ours was a platform team, any downtime affected around 10-15 other teams (micro-services). So basically we have to stay alive so that others are alive, and this is only possible when our MTTR is minimal.

Whatever it takes!