Terraform: EKS and Karpenter — upgrade the module version from 19.21 to 20.0

Updating the version of a Terraform module looks like a routine task, but version 20.0 of terraform-aws-modules/eks brought some major breaking changes.

The changes relate to authentication and authorization in AWS IAM and AWS EKS, which I analyzed in the post AWS: Kubernetes and Access Management API, the new authentication in EKS.

There, we did everything manually to see how the new mechanism works in general; now let’s do it with Terraform.

In addition, the Karpenter module has changed how it handles IRSA (IAM Roles for ServiceAccounts). I described the new scheme for working with ServiceAccounts in the post AWS: EKS Pod Identities — a replacement for IRSA? Simplifying IAM access management, and in this post we’ll look at the update specifically in the Karpenter module.

I will be upgrading the AWS EKS version and the Terraform module by creating a new cluster, so I don't have to bother with a “live update” myself. Still, I want to make sure that the upgrade can be applied to a live cluster without losing access to it or breaking a Production environment.

However, keep in mind that everything described in this post was done on a test cluster (because my main cluster is shared by Dev, Staging, and Production). So don't upgrade Production right away; test on a Dev environment first.

I wrote about the cluster setup in more detail in Terraform: Building EKS, part 2 — an EKS cluster, WorkerNodes, and IAM, and the code examples in this post come from there. The code is already slightly different from what was described there, though, because I moved the creation of EKS into a dedicated module to make it easier to manage different cluster environments.

In general, there seem to be very few changes — but the post turned out to be long because I tried to show everything in detail and with real examples.

What has changed?

The full description is available on GitHub: see v20.0.0 and Upgrade from v19.x to v20.x.

What exactly are we interested in (again, specifically in my case):

EKS:

- aws-auth: moved to a separate module and no longer among the parameters of the terraform-aws-modules/eks module itself; the manage_aws_auth_configmap, create_aws_auth_configmap, aws_auth_roles, aws_auth_users, and aws_auth_accounts parameters were removed from terraform-aws-modules/eks
- authentication_mode: a new value, API_AND_CONFIG_MAP, was added
- bootstrap_cluster_creator_admin_permissions: hard-coded to false, but you can pass enable_cluster_creator_admin_permissions with a value of true, although it seems that you then need to add an Access Entry
- create_instance_profile: the default value changed from true to false to match the changes in Karpenter v0.32 (but I already have Karpenter 0.32 and everything works, so there shouldn't be any changes here)

Karpenter:

- irsa: variable names containing "irsa" were removed; there is a bunch of renames, and a few names were dropped
- create_instance_profile: the default value changed from true to false
- enable_karpenter_instance_profile_creation: removed
- iam_role_arn: became node_iam_role_arn
- irsa_use_name_prefix: became iam_role_name_prefix

The upgrade documentation describes quite a few more changes to Karpenter. However, Karpenter itself is now at version 0.32 in my setup (see Karpenter: the Beta version — an overview of changes, and upgrade from v0.30.0 to v0.32.1), and the Terraform module terraform-aws-modules/eks/aws//modules/karpenter is at 19.21.0. Upgrading the EKS module from 19 to 20 did not affect Karpenter's operation in any way, so you can update them separately: first EKS, then Karpenter.

The upgrade plan

What we will do:

- EKS: update the module version from 19.21 to 20.0 with API_AND_CONFIG_MAP
- add aws-auth as a separate module
- Karpenter: update the module version from 19.21 to 20.0

EKS: upgrade 19.21 to 20.0

What do we need:

- delete everything related to aws_auth; in my case, it is manage_aws_auth_configmap, aws_auth_users, and aws_auth_roles
- add authentication_mode with the value API_AND_CONFIG_MAP (later, for v21, you will need to replace it with API)
- add a new module for aws-auth and move aws_auth_users and aws_auth_roles there

Regarding the bootstrap_cluster_creator_admin_permissions and enable_cluster_creator_admin_permissions, since this cluster was created in 19.21, the root user is already there, and it will be added to Access Entries along with the WorkerNodes IAM Role, so you don't need to do anything here.
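For a new cluster, where no Access Entry exists for the creator yet, the enable_cluster_creator_admin_permissions input mentioned above can be set explicitly. The following is only a minimal sketch with all other arguments omitted; it is not needed for the cluster in this post, where the creator already has access:

```hcl
module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 20.0"

  # cluster_name, cluster_version, vpc_id, subnet_ids, etc. are omitted in this sketch

  authentication_mode = "API_AND_CONFIG_MAP"

  # Adds the identity that runs Terraform as a cluster administrator via an Access Entry
  enable_cluster_creator_admin_permissions = true
}
```

On an existing cluster where such an entry is already present, enabling this may conflict with it, so check the plan output first.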

How to transfer our users and roles to access_entries we will probably see in the next post; for now, we will only update the version of the module while keeping the aws-auth ConfigMap.
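For orientation only, moving a single aws-auth role to the Access Entries API could look roughly like the sketch below. It uses the aws_eks_access_entry and aws_eks_access_policy_association resources from the AWS provider. The resource names (masters, masters_admin) and the choice of the AmazonEKSClusterAdminPolicy access policy are assumptions for illustration, and the IAM Role is the masters role from my iam.tf; this is not the setup applied in this post:

```hcl
# Hypothetical sketch: an Access Entry for the existing "masters" IAM Role
resource "aws_eks_access_entry" "masters" {
  cluster_name  = module.eks.cluster_name
  principal_arn = aws_iam_role.eks_masters_access_role.arn
  type          = "STANDARD"
}

# Associates a cluster-wide admin access policy with that entry
resource "aws_eks_access_policy_association" "masters_admin" {
  cluster_name  = module.eks.cluster_name
  principal_arn = aws_eks_access_entry.masters.principal_arn
  policy_arn    = "arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy"

  access_scope {
    type = "cluster"
  }
}
```

With API_AND_CONFIG_MAP, such entries can coexist with the aws-auth ConfigMap, which is what makes a gradual migration possible.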

For the Karpenter test, I created a test Deployment with a single Kubernetes Pod that triggers the creation of a WorkerNode, plus an Ingress/ALB in front of it, which is pinged constantly to make sure that everything keeps working without downtime.

NodeClaims now:

$ kk get nodeclaim

NAME TYPE ZONE NODE READY AGE

default-7hjz7 t3.small us-east-1a ip-10-0-45-183.ec2.internal True 53s

Okay, let’s update EKS.

Current Terraform code and module structure for EKS

To make the later step of removing the aws_auth resource from the Terraform state easier to follow, here is my current file and module structure:

$ tree .
.
|-- Makefile
|-- backend.hcl
|-- backend.tf
|-- envs
|   `-- test-1-28
|       |-- VERSIONS.md
|       |-- backend.tf
|       |-- main.tf
|       |-- outputs.tf
|       |-- providers.tf
|       |-- test-1-28.tfvars
|       `-- variables.tf
|-- modules
|   `-- atlas-eks
|       |-- configs
|       |   `-- karpenter-nodepool.yaml.tmpl
|       |-- controllers.tf
|       |-- data.tf
|       |-- eks.tf
|       |-- iam.tf
|       |-- karpenter.tf
|       |-- outputs.tf
|       |-- providers.tf
|       `-- variables.tf
|-- outputs.tf
|-- providers.tf
|-- variables.tf
`-- versions.tf

Here:

- envs/test-1-28/main.tf calls the modules/atlas-eks module with the required parameters; we will run terraform state rm in the envs/test-1-28 directory
- modules/atlas-eks/eks.tf calls the terraform-aws-modules/eks/aws module with the required version; this is where we will make changes to the code

The call to the atlas-eks module in the envs/test-1-28/main.tf file looks like this:

module "atlas_eks" {

source = "../../modules/atlas-eks"

# 'devops'

component = var.component

# 'ops'

aws_environment = var.aws_environment

# 'test'

eks_environment = var.eks_environment

env_name = local.env_name

# '1.28'

eks_version = var.eks_version

# 'endpoint_public_access', 'enabled_log_types'

eks_params = var.eks_params

# 'coredns = v1.10.1-eksbuild.6', 'kube_proxy = v1.28.4-eksbuild.1', etc

eks_addon_versions = var.eks_addon_versions

# AWS IAM Roles to be added to EKS aws-auth as 'masters'

eks_aws_auth_users = var.eks_aws_auth_users

# GitHub IAM Roles used in Workflows

eks_github_auth_roles = var.eks_github_auth_roles

# 'vpc-0fbaffe234c0d81ea'

vpc_id = var.vpc_id

helm_release_versions = var.helm_release_versions

# override default 'false' in the module's variables

# will trigger a dedicated module like 'eks_blueprints_addons_external_dns_test'

# with a domainFilters == variable.external_dns_zones.test == 'test.example.co'

external_dns_zone_test_enabled = true

# 'instance-family', 'instance-size', 'topology'

karpenter_nodepool = var.karpenter_nodepool

}

And the code of the atlas-eks module itself, in modules/atlas-eks/eks.tf, looks like this:

module "eks" {

source = "terraform-aws-modules/eks/aws"

version = "~> 19.21.0"

# is set in `locals` per env

# '${var.project_name}-${var.eks_environment}-${local.eks_version}-cluster'

# 'atlas-eks-test-1-28-cluster'

# passed from the root module

cluster_name = "${var.env_name}-cluster"

# passed from the root module

cluster_version = var.eks_version

# 'eks_params' passed from the root module

cluster_endpoint_public_access = var.eks_params.cluster_endpoint_public_access

# 'eks_params' passed from the root module

cluster_enabled_log_types = var.eks_params.cluster_enabled_log_types

# 'eks_addons_version' passed from the root module

cluster_addons = {

coredns = {

addon_version = var.eks_addon_versions.coredns

configuration_values = jsonencode({

replicaCount = 1

resources = {

requests = {

cpu = "50m"

memory = "50Mi"

}

}

})

}

kube-proxy = {

addon_version = var.eks_addon_versions.kube_proxy

configuration_values = jsonencode({

resources = {

requests = {

cpu = "20m"

memory = "50Mi"

}

}

})

}

vpc-cni = {

# old: eks_addons_version

# new: eks_addon_versions

addon_version = var.eks_addon_versions.vpc_cni

configuration_values = jsonencode({

env = {

ENABLE_PREFIX_DELEGATION = "true"

WARM_PREFIX_TARGET = "1"

AWS_VPC_K8S_CNI_EXTERNALSNAT = "true"

}

})

}

aws-ebs-csi-driver = {

addon_version = var.eks_addon_versions.aws_ebs_csi_driver

# iam.tf

service_account_role_arn = module.ebs_csi_irsa_role.iam_role_arn

}

}

# make as one complex var?

# passed from the root module

vpc_id = var.vpc_id

# for WorkerNodes

# passed from the root module

subnet_ids = data.aws_subnets.private.ids

# for the Control Plane

# passed from the root module

control_plane_subnet_ids = data.aws_subnets.intra.ids

manage_aws_auth_configmap = true

# `env_name` make too long name causing issues with IAM Role (?) names

# thus, use a dedicated `env_name_short` var

eks_managed_node_groups = {

# eks-default-dev-1-28

"${local.env_name_short}-default" = {

# `eks_managed_node_group_params` from defaults here

# number, e.g. 2

min_size = var.eks_managed_node_group_params.default_group.min_size

# number, e.g. 6

max_size = var.eks_managed_node_group_params.default_group.max_size

# number, e.g. 2

desired_size = var.eks_managed_node_group_params.default_group.desired_size

# list, e.g. ["t3.medium"]

instance_types = var.eks_managed_node_group_params.default_group.instance_types

# string, e.g. "ON_DEMAND"

capacity_type = var.eks_managed_node_group_params.default_group.capacity_type

# allow SSM

iam_role_additional_policies = {

AmazonSSMManagedInstanceCore = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"

}

taints = var.eks_managed_node_group_params.default_group.taints

update_config = {

max_unavailable_percentage = var.eks_managed_node_group_params.default_group.max_unavailable_percentage

}

}

}

# 'atlas-eks-test-1-28-node-sg'

node_security_group_name = "${var.env_name}-node-sg"

# 'atlas-eks-test-1-28-cluster-sg'

cluster_security_group_name = "${var.env_name}-cluster-sg"

# to use with EC2 Instance Connect

node_security_group_additional_rules = {

ingress_ssh_vpc = {

description = "SSH from VPC"

protocol = "tcp"

from_port = 22

to_port = 22

cidr_blocks = [data.aws_vpc.eks_vpc.cidr_block]

type = "ingress"

}

}

# 'atlas-eks-test-1-28'

node_security_group_tags = {

"karpenter.sh/discovery" = var.env_name

}

cluster_identity_providers = {

sts = {

client_id = "sts.amazonaws.com"

}

}

# passed from the root module

aws_auth_users = var.eks_aws_auth_users

# locals flatten() 'eks_masters_access_role' + 'eks_github_auth_roles'

aws_auth_roles = local.aws_auth_roles

}

For more information about Terraform modules, see Terraform: Modules, Outputs and Variables and Terraform: Creating a Module for Collecting AWS ALB Logs in Grafana Loki.

Roles and users for aws-auth are built in locals from variables defined in variables.tf and envs/test-1-28/test-1-28.tfvars. All of them get system:masters, as we haven't implemented RBAC yet:

locals {
  # create a short name for node names in the 'eks_managed_node_groups'
  # 'test-1-28'
  env_name_short = "${var.eks_environment}-${replace(var.eks_version, ".", "-")}"

  # 'eks_github_auth_roles' passed from the root module
  github_roles = [for role in var.eks_github_auth_roles : {
    rolearn  = role
    username = role
    groups   = ["system:masters"]
  }]

  # 'eks_masters_access_role' + 'eks_github_auth_roles'
  # 'eks_github_auth_roles' from the root module
  # 'aws_iam_role.eks_masters_access' from the iam.tf here
  aws_auth_roles = flatten([
    {
      rolearn  = aws_iam_role.eks_masters_access_role.arn
      username = aws_iam_role.eks_masters_access_role.arn
      groups   = ["system:masters"]
    },
    local.github_roles
  ])
}
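The variable declarations that feed these locals are not shown in this post. A minimal sketch of how they could be declared in variables.tf, with the types inferred from how the values are used in the locals and in the aws-auth ConfigMap (the exact declarations, descriptions, and defaults are assumptions):

```hcl
# Assumed shape: a list of IAM Role ARNs, mapped to 'system:masters' in locals
variable "eks_github_auth_roles" {
  type        = list(string)
  description = "GitHub IAM Roles used in Workflows"
  default     = []
}

# Assumed shape: entries in the format expected by 'aws_auth_users'
variable "eks_aws_auth_users" {
  type = list(object({
    userarn  = string
    username = string
    groups   = list(string)
  }))
  description = "IAM Users to be added to the aws-auth ConfigMap"
  default     = []
}
```

The per-environment values would then be set in envs/test-1-28/test-1-28.tfvars.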

Anton Babenko has made a cool Diff of Before (v19.21) vs After (v20.0) in the documentation on the changes, so let’s try it.

Changes on the authentication_mode and aws_auth

In the EKS module, the most significant change is switching authentication_mode to API_AND_CONFIG_MAP.

The testing cluster already exists, created with version 19.21.0.

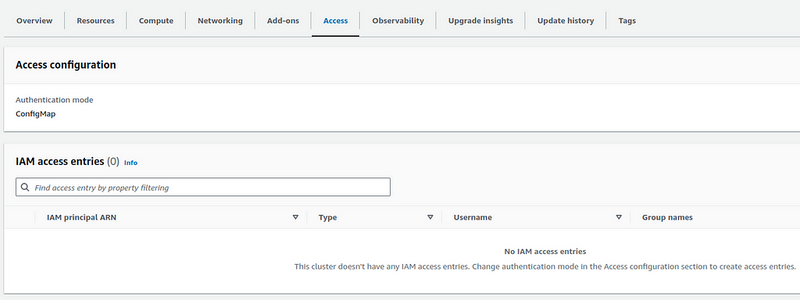

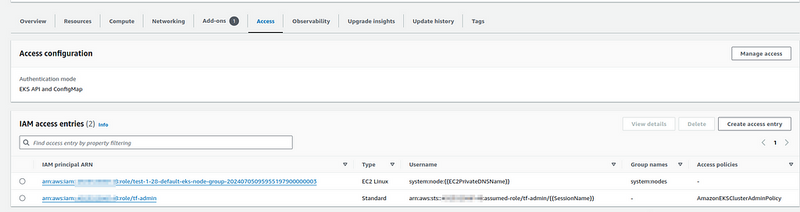

The Access tab now looks like this:

Access configuration == ConfigMap, and the IAM access entries are empty.

Now make changes in the code of the modules/atlas-eks/eks.tf file:

- change the version to v20.0

- remove everything related to aws_auth
- add authentication_mode with the value API_AND_CONFIG_MAP

The changes so far look like this:

module "eks" {
  source = "terraform-aws-modules/eks/aws"
  #version = "~> 19.21.0"
  version = "~> v20.0"
  ...
  # removing for API_AND_CONFIG_MAP
  #manage_aws_auth_configmap = true

  # adding for API_AND_CONFIG_MAP
  authentication_mode = "API_AND_CONFIG_MAP"
  ...
  # removing for API_AND_CONFIG_MAP
  # passed from the root module
  #aws_auth_users = var.eks_aws_auth_users

  # removing for API_AND_CONFIG_MAP
  # locals flatten() 'eks_masters_access_role' + 'eks_github_auth_roles'
  #aws_auth_roles = local.aws_auth_roles
}

Run terraform init to update the EKS module:

...

Initializing modules...

Downloading registry.terraform.io/terraform-aws-modules/eks/aws 20.0.1 for atlas_eks.eks...

- atlas_eks.eks in .terraform/modules/atlas_eks.eks

- atlas_eks.eks.eks_managed_node_group in .terraform/modules/atlas_eks.eks/modules/eks-managed-node-group

- atlas_eks.eks.eks_managed_node_group.user_data in .terraform/modules/atlas_eks.eks/modules/_user_data

- atlas_eks.eks.fargate_profile in .terraform/modules/atlas_eks.eks/modules/fargate-profile

...

Let’s check the current aws-auth ConfigMap, which also gives us a backup of it in YAML:

$ kk -n kube-system get cm aws-auth -o yaml

apiVersion: v1

data:

mapAccounts: |

[]

mapRoles: |

- "groups":

- "system:bootstrappers"

- "system:nodes"

"rolearn": "arn:aws:iam::492***148:role/test-1-28-default-eks-node-group-20240705095955197900000003"

"username": "system:node:{{EC2PrivateDNSName}}"

- "groups":

- "system:masters"

"rolearn": "arn:aws:iam::492***148:role/atlas-eks-test-1-28-masters-access-role"

"username": "arn:aws:iam::492***148:role/atlas-eks-test-1-28-masters-access-role"

- "groups":

- "system:masters"

"rolearn": "arn:aws:iam::492***148:role/atlas-test-ops-1-28-github-access-role"

"username": "arn:aws:iam::492***148:role/atlas-test-ops-1-28-github-access-role"

...

mapUsers: |

- "groups":

- "system:masters"

"userarn": "arn:aws:iam::492***148:user/arseny"

"username": "arseny"

...

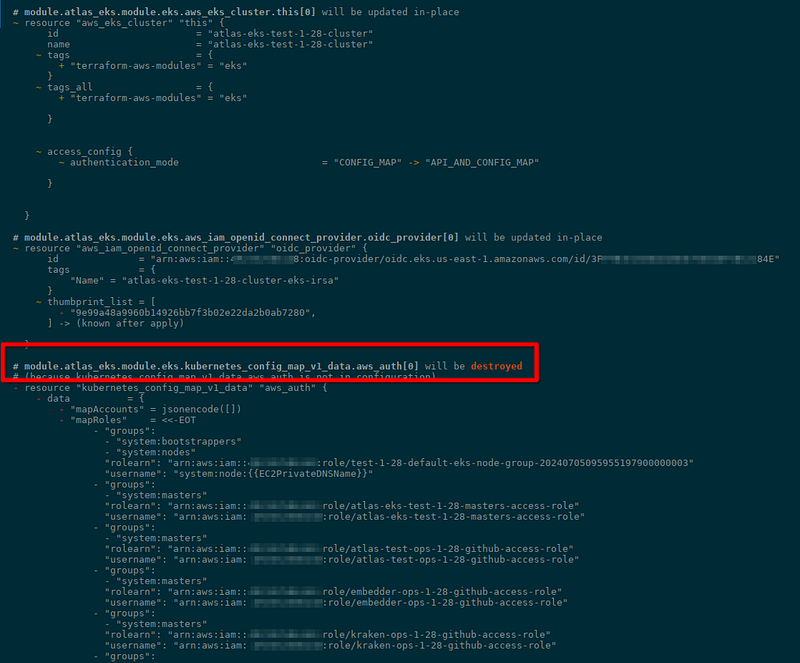

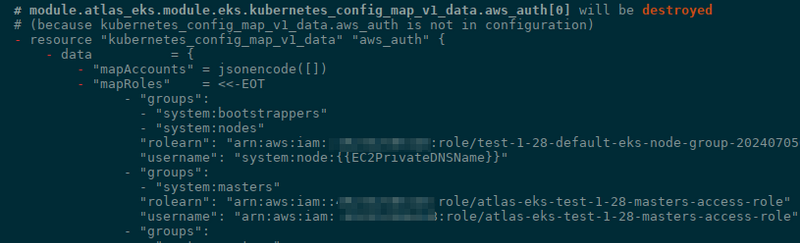

Run terraform plan, and we see that aws-auth indeed will be removed:

So, if we want to keep it, we need to add a new module from the terraform-aws-modules/eks/aws//modules/aws-auth.

The example in the documentation looks like this:

module "eks" {

source = "terraform-aws-modules/eks/aws//modules/aws-auth"

version = "~> 20.0"

manage_aws_auth_configmap = true

aws_auth_roles = [

{

rolearn = "arn:aws:iam::66666666666:role/role1"

username = "role1"

groups = ["custom-role-group"]

},

]

aws_auth_users = [

{

userarn = "arn:aws:iam::66666666666:user/user1"

username = "user1"

groups = ["custom-users-group"]

},

]

}

In my case, aws_auth_roles takes its value from locals and aws_auth_users from a variable:

module "aws_auth" {
  source  = "terraform-aws-modules/eks/aws//modules/aws-auth"
  version = "~> 20.0"

  manage_aws_auth_configmap = true

  aws_auth_roles = local.aws_auth_roles
  aws_auth_users = var.eks_aws_auth_users
}

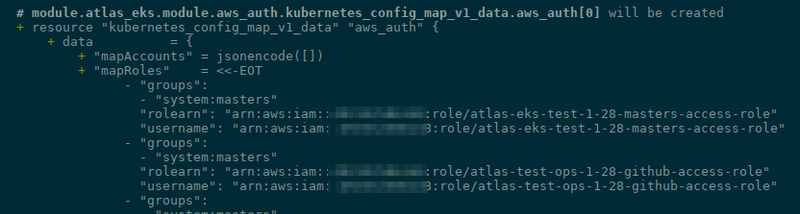

Run terraform init, do terraform plan again, and now we have a new resource for the aws-auth:

And the old one will still be deleted. That is, Terraform will first delete it and then create it again, just with a new name and ID in the state:

To prevent Terraform from removing the aws-auth resource from the cluster, we need to remove the old resource from the state file. Then Terraform will know nothing about this ConfigMap, and when it creates "aws_auth" from our new module, it will just add an entry to its state file without doing anything in Kubernetes.

Important: just in case, make a backup of the corresponding state file, because we are about to run terraform state rm.

Removing aws_auth from Terraform State

In my case, we need to go to the environment directory, envs/test-1-28, and perform the state operations from there.

Note: I’m clarifying this because I accidentally removed the aws-auth ConfigMap from the Terraform state of the Production cluster. However, I simply re-ran terraform apply on it, and everything was restored without any problems.

Find the name of the module as it is written in the state:

$ cd envs/test-1-28/

$ terraform state list | grep aws_auth

module.atlas_eks.module.eks.kubernetes_config_map_v1_data.aws_auth[0]

You can check with the terraform plan output, which we did above, to make sure that it is the one we are deleting:

...

module.atlas_eks.module.eks.kubernetes_config_map_v1_data.aws_auth[0]: Refreshing state... [id=kube-system/aws-auth]

...

Here, the name module.atlas_eks matches the name of the module block that calls my EKS module in the envs/test-1-28/main.tf file:

module "atlas_eks" {

source = "../../modules/atlas-eks"

...

Delete the resource record with the terraform state rm:

$ terraform state rm 'module.atlas_eks.module.eks.kubernetes_config_map_v1_data.aws_auth[0]'

Acquiring state lock. This may take a few moments...

Removed module.atlas_eks.module.eks.kubernetes_config_map_v1_data.aws_auth[0]

Successfully removed 1 resource instance(s).

Releasing state lock. This may take a few moments...

Run terraform plan again, and now there is no "destroy", only the creation of module.atlas_eks.module.aws_auth.kubernetes_config_map_v1_data.aws_auth[0]:

# module.atlas_eks.module.aws_auth.kubernetes_config_map_v1_data.aws_auth[0] will be created

+ resource "kubernetes_config_map_v1_data" "aws_auth" {

...

Plan: 1 to add, 10 to change, 0 to destroy.

Run terraform apply:

...

module.atlas_eks.module.eks.aws_eks_cluster.this[0]: Still modifying... [id=atlas-eks-test-1-28-cluster, 50s elapsed]

module.atlas_eks.module.eks.aws_eks_cluster.this[0]: Modifications complete after 52s [id=atlas-eks-test-1-28-cluster]

...

module.atlas_eks.module.aws_auth.kubernetes_config_map_v1_data.aws_auth[0]: Creation complete after 6s [id=kube-system/aws-auth]

...

Check the ConfigMap now — no changes:

$ kk -n kube-system get cm aws-auth -o yaml

apiVersion: v1

data:

mapAccounts: |

[]

mapRoles: |

- "groups":

- "system:masters"

"rolearn": "arn:aws:iam::492***148:role/atlas-eks-test-1-28-masters-access-role"

"username": "arn:aws:iam::492***148:role/atlas-eks-test-1-28-masters-access-role"

...

And let’s see what has changed in AWS Console > EKS > ClusterName > Access:

Now the access configuration is EKS API and ConfigMap, and there are two Access Entries: one for the WorkerNodes and one for the root user.

Check NodeClaims: is Karpenter still working?

You can scale the Deployment to make sure:

$ kk -n test-fastapi-app-ns scale deploy fastapi-app --replicas=20

deployment.apps/fastapi-app scaled

Karpenter’s logs are fine:

...

karpenter-8444499996-9njx6:controller {"level":"INFO","time":"2024-07-05T12:27:41.527Z","logger":"controller.provisioner","message":"created nodeclaim","commit":"a70b39e","nodepool":"default","nodeclaim":"default-59ms4","requests":{"cpu":"1170m","memory":"942Mi","pods":"8"},"instance-types":"c5.large, r5.large, t3.large, t3.medium, t3.small"}

...

And new NodeClaims were created:

$ kk get nodeclaim

NAME TYPE ZONE NODE READY AGE

default-59ms4 t3.small us-east-1a ip-10-0-46-72.ec2.internal True 59s

default-5fc2p t3.small us-east-1a ip-10-0-39-114.ec2.internal True 7m6s

That’s basically it — we can move on to upgrading the Karpenter’s module version.

Karpenter: upgrade 19.21 to 20.0

There is also a Karpenter Diff of Before (v19.21) vs After (v20.0), but I had to change some things manually.

Here I went with the “poke method”: run terraform plan, see what's wrong in the output, fix it, and plan again. It went without serious problems, although there were a few errors along the way, so let's look at them.

Current Terraform code for Karpenter

The code is in the modules/atlas-eks/karpenter.tf file:

module "karpenter" {

source = "terraform-aws-modules/eks/aws//modules/karpenter"

version = "19.21.0"

cluster_name = module.eks.cluster_name

irsa_oidc_provider_arn = module.eks.oidc_provider_arn

irsa_namespace_service_accounts = ["karpenter:karpenter"]

create_iam_role = false

iam_role_arn = module.eks.eks_managed_node_groups["${local.env_name_short}-default"].iam_role_arn

irsa_use_name_prefix = false

# In v0.32.0/v1beta1, Karpenter now creates the IAM instance profile

# so we disable the Terraform creation and add the necessary permissions for Karpenter IRSA

enable_karpenter_instance_profile_creation = true

}

Some outputs from the Karpenter module are used in the helm_release - the module.karpenter.irsa_arn:

resource "helm_release" "karpenter" {

namespace = "karpenter"

create_namespace = true

name = "karpenter"

repository = "oci://public.ecr.aws/karpenter"

repository_username = data.aws_ecrpublic_authorization_token.token.user_name

repository_password = data.aws_ecrpublic_authorization_token.token.password

chart = "karpenter"

version = var.helm_release_versions.karpenter

values = [

<<-EOT

replicas: 1

settings:

clusterName: ${module.eks.cluster_name}

clusterEndpoint: ${module.eks.cluster_endpoint}

interruptionQueueName: ${module.karpenter.queue_name}

featureGates:

drift: true

serviceAccount:

annotations:

eks.amazonaws.com/role-arn: ${module.karpenter.irsa_arn}

EOT

]

depends_on = [

helm_release.karpenter_crd

]

}

Karpenter’s module version upgrade

First, change the version to 20.0:

module "karpenter" {

source = "terraform-aws-modules/eks/aws//modules/karpenter"

#version = "19.21.0"

version = "20.0"

...

Do terraform init and terraform plan, and look for errors:

...

An argument named "iam_role_arn" is not expected here.

...

│ An argument named "irsa_use_name_prefix" is not expected here.

...

│ An argument named "enable_karpenter_instance_profile_creation" is not expected here.

Rename the parameters and, following the Karpenter Diff of Before (v19.21) vs After (v20.0), add the parameters that create the resources for IRSA (IAM Roles for ServiceAccounts), because the current setup uses it:

- iam_role_arn => node_iam_role_arn
- irsa_use_name_prefix: I'm a little confused here, because according to the documentation it became iam_role_name_prefix, but iam_role_name_prefix is not among the inputs at all, so I just commented it out
- iam_role_name_prefix: I didn't understand this one either; according to the documentation it became node_iam_role_name_prefix, but again, there is no such input, so I commented it out as well
- enable_karpenter_instance_profile_creation: removed

Now the code looks like this:

module "karpenter" {

source = "terraform-aws-modules/eks/aws//modules/karpenter"

#version = "19.21.0"

version = "20.0"

cluster_name = module.eks.cluster_name

irsa_oidc_provider_arn = module.eks.oidc_provider_arn

irsa_namespace_service_accounts = ["karpenter:karpenter"]

create_iam_role = false

# 19 > 20

#iam_role_arn = module.eks.eks_managed_node_groups["${local.env_name_short}-default"].iam_role_arn

node_iam_role_arn = module.eks.eks_managed_node_groups["${local.env_name_short}-default"].iam_role_arn

# 19 > 20

#irsa_use_name_prefix = false

#iam_role_name_prefix = false

#node_iam_role_name_prefix = false

# 19 > 20

#enable_karpenter_instance_profile_creation = true

# 19 > 20

enable_irsa = true

create_instance_profile = true

# 19 > 20

# To avoid any resource re-creation

iam_role_name = "KarpenterIRSA-${module.eks.cluster_name}"

iam_role_description = "Karpenter IAM role for service account"

iam_policy_name = "KarpenterIRSA-${module.eks.cluster_name}"

iam_policy_description = "Karpenter IAM role for service account"

}

Do terraform plan again, and now we have another error, this time in the helm_release:

...

This object does not have an attribute named "irsa_arn".

...

That is because the irsa_arn output has been renamed to iam_role_arn, so change it in the helm_release too:

...

serviceAccount:

annotations:

eks.amazonaws.com/role-arn: ${module.karpenter.iam_role_arn}

EOT

...

Once again, run terraform plan, and now we have another problem with the length of the role name:

...

Plan: 1 to add, 3 to change, 0 to destroy.

╷

│ Error: expected length of name_prefix to be in the range (1 - 38), got KarpenterIRSA-atlas-eks-test-1-28-cluster-

│

│ with module.atlas_eks.module.karpenter.aws_iam_role.controller[0],

│ on .terraform/modules/atlas_eks.karpenter/modules/karpenter/main.tf line 69, in resource "aws_iam_role" "controller":

│ 69: name_prefix = var.iam_role_use_name_prefix ? "${var.iam_role_name}-" : null

...

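The generated prefix, KarpenterIRSA-atlas-eks-test-1-28-cluster-, is 42 characters long, which is above the limit of 38. To fix that, I set iam_role_use_name_prefix = false. I also replaced create_iam_role = false with create_node_iam_role = false: in v20, create_iam_role controls the Karpenter controller role, while the node role is controlled by create_node_iam_role. Now the updated code looks like this: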

module "karpenter" {

source = "terraform-aws-modules/eks/aws//modules/karpenter"

#version = "19.21.0"

version = "20.0"

cluster_name = module.eks.cluster_name

irsa_oidc_provider_arn = module.eks.oidc_provider_arn

irsa_namespace_service_accounts = ["karpenter:karpenter"]

# 19 > 20

#create_iam_role = false

create_node_iam_role = false

# 19 > 20

#iam_role_arn = module.eks.eks_managed_node_groups["${local.env_name_short}-default"].iam_role_arn

node_iam_role_arn = module.eks.eks_managed_node_groups["${local.env_name_short}-default"].iam_role_arn

# 19 > 20

#irsa_use_name_prefix = false

#iam_role_name_prefix = false

#node_iam_role_name_prefix = false

# 19 > 20

#enable_karpenter_instance_profile_creation = true

# 19 > 20

enable_irsa = true

create_instance_profile = true

# To avoid any resource re-creation

iam_role_name = "KarpenterIRSA-${module.eks.cluster_name}"

iam_role_description = "Karpenter IAM role for service account"

iam_policy_name = "KarpenterIRSA-${module.eks.cluster_name}"

iam_policy_description = "Karpenter IAM role for service account"

# expected length of name_prefix to be in the range (1 - 38), got KarpenterIRSA-atlas-eks-test-1-28-cluster-

iam_role_use_name_prefix = false

}

...

resource "helm_release" "karpenter" {

...

serviceAccount:

annotations:

eks.amazonaws.com/role-arn: ${module.karpenter.iam_role_arn}

EOT

]

...

}

Run terraform plan - nothing should be deleted:

...

Plan: 1 to add, 5 to change, 0 to destroy.

The plan contains:

- module.atlas_eks.module.karpenter.aws_eks_access_entry.node will be added; we will have to disable it a bit later, and we'll see why shortly
- module.atlas_eks.module.karpenter.aws_iam_policy.controller: the policy will be updated, and everything seems to be OK here
- module.atlas_eks.module.karpenter.aws_iam_role.controller: an Allow rule for pods.eks.amazonaws.com will be added to work with EKS Pod Identities
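For reference, a trust policy statement that lets EKS Pod Identity assume a role typically looks like the sketch below. It is not copied from the module's source: the data source name pod_identity_trust is hypothetical, while the pods.eks.amazonaws.com principal and the two STS actions follow the AWS documentation for Pod Identity.

```hcl
# Hypothetical illustration of the kind of statement added to the controller role's trust policy
data "aws_iam_policy_document" "pod_identity_trust" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole", "sts:TagSession"]

    principals {
      type        = "Service"
      identifiers = ["pods.eks.amazonaws.com"]
    }
  }
}
```

Since the role keeps its existing IRSA trust statement as well, the Karpenter ServiceAccount annotation continues to work after the change.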

Everything seems to be OK, so let’s deploy and test.

The Karpenter logs are being tailed, a NodeClaim already exists, and the ping to the test Ingress/ALB is running.

EKS: CreateAccessEntry — access entry resource is already in use on this cluster

Do terraform apply, and - what a surprise! - we got an error:

...

│ Error: creating EKS Access Entry (atlas-eks-test-1-28-cluster:arn:aws:iam::492***148:role/test-1-28-default-eks-node-group-20240710092944387500000003): operation error EKS: CreateAccessEntry, https response error StatusCode: 409, RequestID: 004e014d-ebbb-4c60-919b-fb79629bf1ff, ResourceInUseException: The specified access entry resource is already in use on this cluster.

This happens because “EKS automatically adds access entries for the roles used by EKS managed node groups and Fargate profiles” (see authentication_mode = “API_AND_CONFIG_MAP”).

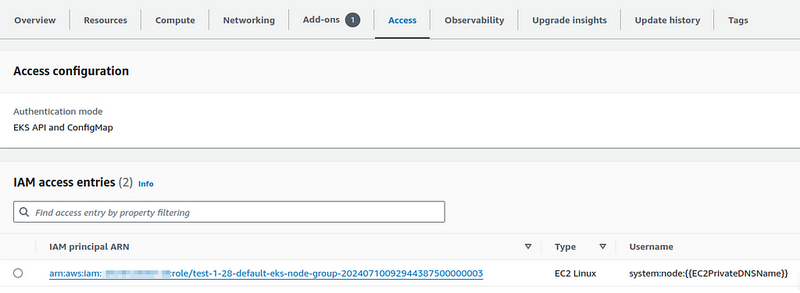

And we have already seen the Access Entry for WorkerNodes when we upgraded the version of the EKS module:

For self-managed NodeGroups, a create_access_entry variable with a default value of true has been added to terraform-aws-eks/modules/self-managed-node-group/variables.tf.

The Karpenter module also has a new create_access_entry variable, and its default value is true as well.

Set it to false, because in my case the nodes created by Karpenter use the same IAM Role as the nodes from module.eks.eks_managed_node_groups, and EKS has already created an Access Entry for that role:

...

# To avoid any resource re-creation

iam_role_name = "KarpenterIRSA-${module.eks.cluster_name}"

iam_role_description = "Karpenter IAM role for service account"

iam_policy_name = "KarpenterIRSA-${module.eks.cluster_name}"

iam_policy_description = "Karpenter IAM role for service account"

# expected length of name_prefix to be in the range (1 - 38), got KarpenterIRSA-atlas-eks-test-1-28-cluster-

iam_role_use_name_prefix = false

# Error: creating EKS Access Entry ResourceInUseException: The specified access entry resource is already in use on this cluster.

create_access_entry = false

}

...

Run terraform apply again, and this time everything went off without issues.

There’s nothing new in the Karpenter logs at all, meaning that its Kubernetes Pod was not restarted, and pings to the test app continue to go through.

You can scale the Deployment once again to trigger the creation of new Karpenter’s NodeClaims:

$ kk -n test-fastapi-app-ns scale deploy fastapi-app --replicas=10

deployment.apps/fastapi-app scaled

And they were created without any problems:

...

karpenter-649945c6c5-lj2xh:controller {"level":"INFO","time":"2024-07-10T11:33:34.507Z","logger":"controller.nodeclaim.lifecycle","message":"launched nodeclaim","commit":"a70b39e","nodeclaim":"default-pn64t","provider-id":"aws:///us-east-1a/i-0316a99cd2d6e172c","instance-type":"t3.small","zone":"us-east-1a","capacity-type":"spot","allocatable":{"cpu":"1930m","ephemeral-storage":"17Gi","memory":"1418Mi","pods":"110"}}

Everything seems to be working.

Final Terraform code for EKS and Karpenter

As a result of all the changes, the code will look like this.

For EKS:

module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> v20.0"

  # is set in `locals` per env
  # '${var.project_name}-${var.eks_environment}-${local.eks_version}-cluster'
  # 'atlas-eks-test-1-28-cluster'
  # passed from a root module
  cluster_name = "${var.env_name}-cluster"

  # passed from a root module
  cluster_version = var.eks_version

  # 'eks_params' passed from a root module
  cluster_endpoint_public_access = var.eks_params.cluster_endpoint_public_access

  # 'eks_params' passed from a root module
  cluster_enabled_log_types = var.eks_params.cluster_enabled_log_types

  # 'eks_addons_version' passed from a root module
  cluster_addons = {
    coredns = {
      addon_version = var.eks_addon_versions.coredns
      configuration_values = jsonencode({
        replicaCount = 1
        resources = {
          requests = {
            cpu    = "50m"
            memory = "50Mi"
          }
        }
      })
    }
    kube-proxy = {
      addon_version = var.eks_addon_versions.kube_proxy
      configuration_values = jsonencode({
        resources = {
          requests = {
            cpu    = "20m"
            memory = "50Mi"
          }
        }
      })
    }
    vpc-cni = {
      # old: eks_addons_version
      # new: eks_addon_versions
      addon_version = var.eks_addon_versions.vpc_cni
      configuration_values = jsonencode({
        env = {
          ENABLE_PREFIX_DELEGATION     = "true"
          WARM_PREFIX_TARGET           = "1"
          AWS_VPC_K8S_CNI_EXTERNALSNAT = "true"
        }
      })
    }
    aws-ebs-csi-driver = {
      addon_version = var.eks_addon_versions.aws_ebs_csi_driver
      # iam.tf
      service_account_role_arn = module.ebs_csi_irsa_role.iam_role_arn
    }
  }

  # make as one complex var?
  # passed from a root module
  vpc_id = var.vpc_id

  # for WorkerNodes
  # passed from a root module
  subnet_ids = data.aws_subnets.private.ids

  # for the ControlPlane
  # passed from a root module
  control_plane_subnet_ids = data.aws_subnets.intra.ids

  # adding for API_AND_CONFIG_MAP
  # TODO: change to the "API" only after adding aws_eks_access_entry && aws_eks_access_policy_association
  authentication_mode = "API_AND_CONFIG_MAP"

  # `env_name` make too long name causing issues with IAM Role (?) names
  # thus, use a dedicated `env_name_short` var
  eks_managed_node_groups = {
    # eks-default-dev-1-28
    "${local.env_name_short}-default" = {
      # `eks_managed_node_group_params` from defaults here
      # number, e.g. 2
      min_size = var.eks_managed_node_group_params.default_group.min_size
      # number, e.g. 6
      max_size = var.eks_managed_node_group_params.default_group.max_size
      # number, e.g. 2
      desired_size = var.eks_managed_node_group_params.default_group.desired_size
      # list, e.g. ["t3.medium"]
      instance_types = var.eks_managed_node_group_params.default_group.instance_types
      # string, e.g. "ON_DEMAND"
      capacity_type = var.eks_managed_node_group_params.default_group.capacity_type

      # allow SSM
      iam_role_additional_policies = {
        AmazonSSMManagedInstanceCore = "arn:aws:iam::aws:policy/AmazonSSMManagedInstanceCore"
      }

      taints = var.eks_managed_node_group_params.default_group.taints

      update_config = {
        max_unavailable_percentage = var.eks_managed_node_group_params.default_group.max_unavailable_percentage
      }
    }
  }

  # 'atlas-eks-test-1-28-node-sg'
  node_security_group_name = "${var.env_name}-node-sg"

  # 'atlas-eks-test-1-28-cluster-sg'
  cluster_security_group_name = "${var.env_name}-cluster-sg"

  # to use with EC2 Instance Connect
  node_security_group_additional_rules = {
    ingress_ssh_vpc = {
      description = "SSH from VPC"
      protocol    = "tcp"
      from_port   = 22
      to_port     = 22
      cidr_blocks = [data.aws_vpc.eks_vpc.cidr_block]
      type        = "ingress"
    }
  }

  # 'atlas-eks-test-1-28'
  node_security_group_tags = {
    "karpenter.sh/discovery" = var.env_name
  }

  cluster_identity_providers = {
    sts = {
      client_id = "sts.amazonaws.com"
    }
  }
}
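The module above reads its settings from several variables passed in from the root module. Their declarations are not shown in this post, so the following is only a sketch of what they could look like, with the types inferred from the comments in the code ("number, e.g. 2", "list, e.g. ["t3.medium"]", and so on). The shape of `taints` in particular is an assumption.

```hcl
# Assumed shape: only the two attributes used in the module call above
variable "eks_params" {
  description = "Cluster-level settings passed from a root module"
  type = object({
    cluster_endpoint_public_access = bool
    cluster_enabled_log_types      = list(string)
  })
}

# Assumed shape: one version string per EKS add-on used above
variable "eks_addon_versions" {
  description = "Versions of the EKS add-ons"
  type = object({
    coredns            = string
    kube_proxy         = string
    vpc_cni            = string
    aws_ebs_csi_driver = string
  })
}

# Assumed shape: one map item per NodeGroup, e.g. "default_group"
variable "eks_managed_node_group_params" {
  description = "Settings for the EKS Managed NodeGroups"
  type = map(object({
    min_size                   = number       # e.g. 2
    max_size                   = number       # e.g. 6
    desired_size               = number       # e.g. 2
    instance_types             = list(string) # e.g. ["t3.medium"]
    capacity_type              = string       # e.g. "ON_DEMAND"
    max_unavailable_percentage = number
    # assumption: a list of taints with the key/value/effect attributes
    taints = list(object({
      key    = string
      value  = string
      effect = string
    }))
  }))
}
```

With a `map(object(...))` type, `var.eks_managed_node_group_params.default_group.min_size` resolves as long as the map has a `default_group` key.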

For Karpenter:

module "karpenter" {
  source  = "terraform-aws-modules/eks/aws//modules/karpenter"
  version = "20.0"

  cluster_name = module.eks.cluster_name

  irsa_oidc_provider_arn          = module.eks.oidc_provider_arn
  irsa_namespace_service_accounts = ["karpenter:karpenter"]

  create_node_iam_role = false
  node_iam_role_arn    = module.eks.eks_managed_node_groups["${local.env_name_short}-default"].iam_role_arn

  enable_irsa             = true
  create_instance_profile = true

  # backward compatibility with 19.21.0
  # see https://github.com/terraform-aws-modules/terraform-aws-eks/blob/master/docs/UPGRADE-20.0.md#karpenter-diff-of-before-v1921-vs-after-v200
  iam_role_name            = "KarpenterIRSA-${module.eks.cluster_name}"
  iam_role_description     = "Karpenter IAM role for service account"
  iam_policy_name          = "KarpenterIRSA-${module.eks.cluster_name}"
  iam_policy_description   = "Karpenter IAM role for service account"
  iam_role_use_name_prefix = false

  # already created during EKS 19 > 20 upgrade with 'authentication_mode = "API_AND_CONFIG_MAP"'
  create_access_entry = false
}

...

resource "helm_release" "karpenter" {
  namespace        = "karpenter"
  create_namespace = true

  name                = "karpenter"
  repository          = "oci://public.ecr.aws/karpenter"
  repository_username = data.aws_ecrpublic_authorization_token.token.user_name
  repository_password = data.aws_ecrpublic_authorization_token.token.password
  chart               = "karpenter"
  version             = var.helm_release_versions.karpenter

  values = [
    <<-EOT
    replicas: 1
    settings:
      clusterName: ${module.eks.cluster_name}
      clusterEndpoint: ${module.eks.cluster_endpoint}
      interruptionQueueName: ${module.karpenter.queue_name}
      featureGates:
        drift: true
    serviceAccount:
      annotations:
        eks.amazonaws.com/role-arn: ${module.karpenter.iam_role_arn}
    EOT
  ]

  depends_on = [
    helm_release.karpenter_crd
  ]
}
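The `helm_release` above refers to `data.aws_ecrpublic_authorization_token.token` and `helm_release.karpenter_crd`, which are not shown in this listing. A minimal sketch of what they might look like is below. The provider alias `virginia` is an assumption (the ECR Public token can only be requested in us-east-1), and reusing the same version variable for the CRD chart is an assumption too.

```hcl
# Assumption: a provider alias for us-east-1, where ECR Public auth is available
provider "aws" {
  alias  = "virginia"
  region = "us-east-1"
}

# Token used as the Helm repository credentials for public.ecr.aws
data "aws_ecrpublic_authorization_token" "token" {
  provider = aws.virginia
}

# Karpenter CRDs are shipped as a separate chart, installed before Karpenter itself
resource "helm_release" "karpenter_crd" {
  namespace        = "karpenter"
  create_namespace = true

  name                = "karpenter-crd"
  repository          = "oci://public.ecr.aws/karpenter"
  repository_username = data.aws_ecrpublic_authorization_token.token.user_name
  repository_password = data.aws_ecrpublic_authorization_token.token.password
  chart               = "karpenter-crd"
  # assumption: the CRD chart is pinned to the same version as Karpenter
  version = var.helm_release_versions.karpenter
}
```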

Karpenter itself will also need to be upgraded, from 0.32 to 0.37.

Preparing to upgrade from v20.0 to v21.0

See Upcoming Changes Planned in v21.0.

What will change?

The main change concerns aws-auth: the aws-auth sub-module will be removed, so you should switch to authentication_mode = "API". To do that, all our users and roles have to be moved from the aws-auth ConfigMap to EKS Access Entries.
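A minimal sketch of what moving a single aws-auth record to an Access Entry could look like in Terraform. The variable `admin_role_arn` and the choice of the `AmazonEKSClusterAdminPolicy` access policy are assumptions for illustration. Pick the policy and scope that match what the principal had in aws-auth.

```hcl
# Hypothetical: ARN of an IAM Role that is currently mapped in the aws-auth ConfigMap
variable "admin_role_arn" {
  description = "IAM Role to grant access to the EKS cluster"
  type        = string
}

# Registers the IAM principal in the cluster
resource "aws_eks_access_entry" "admin" {
  cluster_name  = module.eks.cluster_name
  principal_arn = var.admin_role_arn
  type          = "STANDARD"
}

# Attaches an EKS access policy to that principal, cluster-wide
resource "aws_eks_access_policy_association" "admin" {
  cluster_name  = module.eks.cluster_name
  principal_arn = aws_eks_access_entry.admin.principal_arn
  policy_arn    = "arn:aws:eks::aws:cluster-access-policy/AmazonEKSClusterAdminPolicy"

  access_scope {
    type = "cluster"
  }
}
```

Taking `principal_arn` from the access entry resource gives Terraform an implicit dependency, so the entry is created before the policy association.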

In addition, it is worth switching to a new system for working with ServiceAccounts — EKS Pod Identities.

So it turns out that there will be two changes:

- aws-auth contains records for IAM Roles and IAM Users: they need to be created as EKS Access Entries
  - in Terraform, we have new resources for this: `aws_eks_access_entry` and `aws_eks_access_policy_association`
- separately, we have several IAM Roles that are used in ServiceAccounts: they need to be moved to Pod Identity associations
  - in Terraform, we have a new resource for this: `aws_eks_pod_identity_association`
  - OIDC seems to be not needed at all
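For the ServiceAccounts part, a rough sketch of a Pod Identity association is below. The role name, namespace, and ServiceAccount name are hypothetical placeholders. The sketch also assumes that the `eks-pod-identity-agent` add-on is already running in the cluster, since associations have no effect without it.

```hcl
# Trust policy: Pod Identity roles are assumed by the EKS Pods service principal,
# not through the cluster's OIDC provider as with IRSA
data "aws_iam_policy_document" "pod_identity_trust" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole", "sts:TagSession"]

    principals {
      type        = "Service"
      identifiers = ["pods.eks.amazonaws.com"]
    }
  }
}

# Hypothetical IAM Role for an application; attach its permission policies separately
resource "aws_iam_role" "example_app" {
  name               = "example-app-pod-identity"
  assume_role_policy = data.aws_iam_policy_document.pod_identity_trust.json
}

# Binds the role to a ServiceAccount in a namespace of the cluster
resource "aws_eks_pod_identity_association" "example_app" {
  cluster_name    = module.eks.cluster_name
  namespace       = "example-ns" # hypothetical
  service_account = "example-sa" # hypothetical
  role_arn        = aws_iam_role.example_app.arn
}
```

Unlike IRSA, the ServiceAccount needs no `eks.amazonaws.com/role-arn` annotation here, because the binding lives on the EKS side.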

But I will do all this in a new project in a separate repository that will deal with IAM and access to EKS and RDS. I will describe it in the next post (already in drafts).

Originally published at RTFM: Linux, DevOps, and system administration.
