Creating an AWS EKS cluster with Terraform: the Terraform EKS module, a default WorkerNode Group, and IAM authentication settings

We continue the topic of deploying an AWS Elastic Kubernetes Service cluster using Terraform.

In the first part, we prepared an AWS VPC.

In this part, we’ll deploy the EKS cluster itself and configure IAM for it, and in the next part, we’ll install Karpenter and the rest of the controllers.

All parts:

- Terraform: building EKS, part 1 - VPC, Subnets and Endpoints

- Terraform: building EKS, part 2 – an EKS cluster, WorkerNodes, and IAM (this)

- Terraform: building EKS, part 3 – Karpenter installation

- Terraform: building EKS, part 4 – installing controllers

Planning

In general, the TODO list currently looks like this:

- create a default NodeGroup with Taints CriticalAddonsOnly=true (see Kubernetes: Pods and WorkerNodes – control the placement of the Pods on the Nodes)

- create a StorageClass with the ReclaimPolicy=Retain – for PVCs whose disks should be retained when deleting a Deployment/StatefulSet

- create an IAM “masters_access_role” with the eks:DescribeCluster policy, to use aws eks update-kubeconfig for adding users later

- add this “masters_access_role” and my personal IAM User as admins to the aws-auth ConfigMap – let’s not complicate things with RBAC for now, as we are only at the beginning of the EKS journey here

- create an OIDC Provider for the cluster

- add a Subscription Filter to the EKS CloudWatch Log Group to collect logs in Grafana Loki (see Loki: collecting logs from CloudWatch Logs using Lambda Promtail)

- install Karpenter

- install EKS EBS CSI Add-on

- install ExternalDNS controller

- install AWS Load Balancer Controller

- add SecretStore CSI Driver and ASCP

- install Metrics Server

- add Vertical Pod Autoscaler and Horizontal Pod Autoscaler

We will use a module for the cluster as well, again from Anton Babenko: the Terraform EKS module. There are other modules available, for example, terraform-aws-eks by Cookpad. I also used it a bit and it worked well, but I won’t compare them here.

Just like the VPC module, the Terraform EKS module also has an example of a cluster and related resources — examples/complete/main.tf.

Terraform Kubernetes provider

For the module to manage the aws-auth ConfigMap, you will need to add another provider: kubernetes.

Add it to the file providers.tf:

```hcl
...

provider "kubernetes" {
  host                   = module.eks.cluster_endpoint
  cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

  exec {
    api_version = "client.authentication.k8s.io/v1beta1"
    command     = "aws"
    args        = ["--profile", "tf-admin", "eks", "get-token", "--cluster-name", module.eks.cluster_name]
  }
}
```

Note that an AWS CLI profile is passed in args, because Terraform creates the cluster itself on behalf of an IAM Role:

```hcl
...

provider "aws" {
  region = "us-east-1"

  assume_role {
    role_arn = "arn:aws:iam::492***148:role/tf-admin"
  }
  ...
```

And the AWS CLI profile tf-admin in ~/.aws/config assumes the same IAM Role:

```ini
...
[profile work]
region = us-east-1
output = json

[profile tf-admin]
role_arn = arn:aws:iam::492***148:role/tf-admin
source_profile = work
...
```

Error: The configmap “aws-auth” does not exist

A fairly common error (at least I’ve encountered it many times) is when terraform apply ends with this message and aws-auth is not created in the cluster.

As a result, firstly, the default WorkerNode Group does not join the cluster, and secondly, we cannot access the cluster with kubectl: although aws eks update-kubeconfig creates a new context in the local ~/.kube/config, kubectl itself returns an authorization error.

I found the cause by enabling the Terraform debug log with the TF_LOG=INFO variable, which showed the provider authentication error:

```
...
[DEBUG] provider.terraform-provider-kubernetes_v2.23.0_x5: "kind": "Status",
[DEBUG] provider.terraform-provider-kubernetes_v2.23.0_x5: "apiVersion": "v1",
[DEBUG] provider.terraform-provider-kubernetes_v2.23.0_x5: "metadata": {},
[DEBUG] provider.terraform-provider-kubernetes_v2.23.0_x5: "status": "Failure",
[DEBUG] provider.terraform-provider-kubernetes_v2.23.0_x5: "message": "Unauthorized",
[DEBUG] provider.terraform-provider-kubernetes_v2.23.0_x5: "reason": "Unauthorized",
[DEBUG] provider.terraform-provider-kubernetes_v2.23.0_x5: "code": 401
[DEBUG] provider.terraform-provider-kubernetes_v2.23.0_x5: }
...
```

The error occurred precisely because the provider’s args did not set the correct local AWS profile.

There is another option for authentication — via a token, see this comment in GitHub Issues.

But I had problems with it when creating a cluster from scratch, because Terraform could not read the data "aws_eks_cluster_auth". I’ll have to try it again, because in general I like the idea of a token more than using the AWS CLI. On the other hand, we will also need the kubectl and helm providers, and I’m not sure they can authenticate with a token (although they most likely can, it just needs some digging).
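For comparison, a minimal sketch of the token-based variant, assuming the aws_eks_cluster_auth data source of the AWS provider. The token is obtained through the aws provider, i.e. with the tf-admin role it already assumes, so no AWS CLI profile is needed in the kubernetes provider. The data source name "this" is an arbitrary choice. Keep in mind that this is the same data source that caused the problems described above on a cluster created from scratch, and that the token is short-lived.

```hcl
# Obtain a short-lived authentication token through the aws provider
# (assumption: the tf-admin role assumed by the aws provider has access to the cluster)
data "aws_eks_cluster_auth" "this" {
  name = module.eks.cluster_name
}

provider "kubernetes" {
  host                   = module.eks.cluster_endpoint
  cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

  # The token replaces the exec block that calls `aws eks get-token`
  token = data.aws_eks_cluster_auth.this.token
}
```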

Terraform EKS module

Okay, we’ve figured out the provider — let’s add the module itself.

First, we will create the cluster itself with one NodeGroup, and then we will add the controllers.

EKS NodeGroups Types

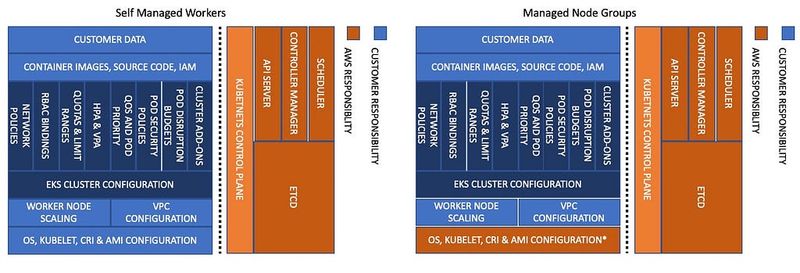

AWS has two types of NodeGroups — the Self-Managed and Amazon Managed, see Amazon EKS nodes.

The main advantage of the Amazon Managed type, in my opinion, is that you don’t have to worry about updates: everything related to the operating system and the Kubernetes components is taken care of by Amazon.

However, if you create Self-managed Nodes from an Amazon Linux AMI provided by AWS, everything will already be configured there as well, and for updates it is enough to reboot or recreate an EC2 instance, so that it runs with the AMI with the latest patches.

Fargate is worth mentioning here too (see AWS: Fargate – capabilities, comparison with Lambda/EC2 and usage with AWS EKS), but I don’t see much sense in it, especially since Fargate doesn’t support DaemonSets, so we couldn’t run, for example, Promtail for logs.

Also, Managed NodeGroups do not need to be separately configured in the aws-auth ConfigMap – EKS will add the necessary entries.

Anyway, to make our lives easier, we will use Amazon Managed Nodes. Only the controllers, the “Critical Addons”, will live on these nodes, while the nodes for other workloads will be managed by Karpenter.

Terraform EKS variables

First, we need to add variables.

In general, it’s good to go through all the inputs of the module, and see what you can customize for yourself.

For a minimal configuration, we need:

- cluster_endpoint_public_access – bool

- cluster_enabled_log_types – list

- eks_managed_node_groups:

  - min_size, max_size and desired_size – number

  - instance_types – list

  - capacity_type – string

  - max_unavailable_percentage – number

- aws_auth_roles – list of maps

- aws_auth_users – list of maps

Let’s divide the variables into three groups — one for EKS itself, the second with parameters for NodeGroups, and the third for IAM Users.

Let’s describe the first variable — with parameters for the EKS:

```hcl
...

variable "eks_params" {
  description = "EKS cluster parameters"
  type = object({
    cluster_endpoint_public_access = bool
    cluster_enabled_log_types      = list(string)
  })
}
```

And the values in terraform.tfvars. For now, we will enable all log types, and later keep only the ones we really need:

```hcl
...

eks_params = {
  cluster_endpoint_public_access = true
  cluster_enabled_log_types      = ["audit", "api", "authenticator", "controllerManager", "scheduler"]
}
```

Next, the NodeGroup parameters. Let’s create a variable of the map type, where each element holds the configuration of one group. The elements themselves are objects, because their parameters have different types:

```hcl
...

variable "eks_managed_node_group_params" {
  description = "EKS Managed NodeGroups setting, one item in the map() per each dedicated NodeGroup"
  type = map(object({
    min_size                   = number
    max_size                   = number
    desired_size               = number
    instance_types             = list(string)
    capacity_type              = string
    taints                     = set(map(string))
    max_unavailable_percentage = number
  }))
}
```
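Optionally, the variable can reject obviously wrong values already at plan time. A sketch, assuming Terraform 0.14 or newer (for alltrue()): this is the same variable with two validation blocks added, so it would replace the definition above rather than sit next to it. The allowed effects are the taint effects the EKS API accepts for Managed NodeGroups.

```hcl
variable "eks_managed_node_group_params" {
  description = "EKS Managed NodeGroups setting, one item in the map() per each dedicated NodeGroup"
  type = map(object({
    min_size                   = number
    max_size                   = number
    desired_size               = number
    instance_types             = list(string)
    capacity_type              = string
    taints                     = set(map(string))
    max_unavailable_percentage = number
  }))

  # Every NodeGroup must satisfy min_size <= desired_size <= max_size
  validation {
    condition = alltrue([
      for group in values(var.eks_managed_node_group_params) : group.min_size <= group.desired_size && group.desired_size <= group.max_size
    ])
    error_message = "Each NodeGroup must have min_size <= desired_size <= max_size."
  }

  # Every taint in every NodeGroup must use an effect supported by EKS
  validation {
    condition = alltrue(flatten([
      for group in values(var.eks_managed_node_group_params) : [
        for taint in group.taints : contains(["NO_SCHEDULE", "NO_EXECUTE", "PREFER_NO_SCHEDULE"], taint.effect)
      ]
    ]))
    error_message = "Taint effect must be one of NO_SCHEDULE, NO_EXECUTE, PREFER_NO_SCHEDULE."
  }
}
```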

An example of adding Taints can be found here>>>, so let’s describe them and the other parameters in tfvars:

```hcl
...

eks_managed_node_group_params = {
  default_group = {
    min_size       = 2
    max_size       = 6
    desired_size   = 2
    instance_types = ["t3.medium"]
    capacity_type  = "ON_DEMAND"
    taints = [
      {
        key    = "CriticalAddonsOnly"
        value  = "true"
        effect = "NO_SCHEDULE"
      },
      {
        key    = "CriticalAddonsOnly"
        value  = "true"
        effect = "NO_EXECUTE"
      }
    ]
    max_unavailable_percentage = 50
  }
}
```

The third group is a list of IAM users that will be added to the aws-auth ConfigMap to access the cluster. Here, we use the set type, again with an object, because for each user we need to pass a list of RBAC groups:

```hcl
...

variable "eks_aws_auth_users" {
  description = "IAM Users to be added to the aws-auth ConfigMap, one item in the set() per each IAM User"
  type = set(object({
    userarn  = string
    username = string
    groups   = list(string)
  }))
}
```

Add values to the tfvars:

```hcl
...

eks_aws_auth_users = [
  {
    userarn  = "arn:aws:iam::492***148:user/arseny"
    username = "arseny"
    groups   = ["system:masters"]
  }
]
```

Just like with the NodeGroups parameters, here we can define multiple users, and all of them will then be passed to the aws_auth_users of the EKS module.

Creating a cluster

Create the eks.tf file, add the module:

```hcl
module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 19.0"

  cluster_name    = "${local.env_name}-cluster"
  cluster_version = var.eks_version

  cluster_endpoint_public_access = var.eks_params.cluster_endpoint_public_access
  cluster_enabled_log_types      = var.eks_params.cluster_enabled_log_types

  cluster_addons = {
    coredns = {
      most_recent = true
    }
    kube-proxy = {
      most_recent = true
    }
    vpc-cni = {
      most_recent = true
    }
  }

  vpc_id                   = module.vpc.vpc_id
  subnet_ids               = module.vpc.private_subnets
  control_plane_subnet_ids = module.vpc.intra_subnets

  manage_aws_auth_configmap = true

  eks_managed_node_groups = {
    default = {
      min_size       = var.eks_managed_node_group_params.default_group.min_size
      max_size       = var.eks_managed_node_group_params.default_group.max_size
      desired_size   = var.eks_managed_node_group_params.default_group.desired_size
      instance_types = var.eks_managed_node_group_params.default_group.instance_types
      capacity_type  = var.eks_managed_node_group_params.default_group.capacity_type
      taints         = var.eks_managed_node_group_params.default_group.taints

      update_config = {
        max_unavailable_percentage = var.eks_managed_node_group_params.default_group.max_unavailable_percentage
      }
    }
  }

  cluster_identity_providers = {
    sts = {
      client_id = "sts.amazonaws.com"
    }
  }

  aws_auth_users = var.eks_aws_auth_users
  #aws_auth_roles = TODO
}
```
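The module call above reads only the default_group element, although the variable is a map meant to hold several NodeGroups. A possible sketch that builds eks_managed_node_groups from every element of the map with a for expression; the other module inputs are omitted here. Note the assumption: the NodeGroup name then comes from the map key (default_group instead of default), so on an already deployed cluster Terraform would replace the existing NodeGroup unless the key in tfvars is renamed to default.

```hcl
module "eks" {
  # ... source, version, cluster and aws-auth inputs as above ...

  # One EKS Managed NodeGroup per element of the map, named after the map key
  eks_managed_node_groups = {
    for name, params in var.eks_managed_node_group_params : name => {
      min_size       = params.min_size
      max_size       = params.max_size
      desired_size   = params.desired_size
      instance_types = params.instance_types
      capacity_type  = params.capacity_type
      taints         = params.taints

      update_config = {
        max_unavailable_percentage = params.max_unavailable_percentage
      }
    }
  }
}
```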

If you need to pass parameters to Add-ons, you can do it with configuration_values, see an example here>>>.
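As an illustration only, this is roughly how configuration_values could look for the vpc-cni Add-on inside cluster_addons. The value is a JSON string, hence jsonencode(), and its keys must match the configuration schema of the particular Add-on version (aws eks describe-addon-configuration shows it). ENABLE_PREFIX_DELEGATION is just an example setting, not something this cluster requires.

```hcl
  cluster_addons = {
    # coredns and kube-proxy stay as above

    vpc-cni = {
      most_recent = true

      # Passed to the EKS Add-on API as a JSON document
      configuration_values = jsonencode({
        env = {
          # Example setting only: assign IP prefixes to ENIs instead of individual IPs
          ENABLE_PREFIX_DELEGATION = "true"
        }
      })
    }
  }
```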

Let’s add some outputs:

```hcl
...

output "eks_cloudwatch_log_group_arn" {
  value = module.eks.cloudwatch_log_group_arn
}

output "eks_cluster_arn" {
  value = module.eks.cluster_arn
}

output "eks_cluster_endpoint" {
  value = module.eks.cluster_endpoint
}

output "eks_cluster_iam_role_arn" {
  value = module.eks.cluster_iam_role_arn
}

output "eks_cluster_oidc_issuer_url" {
  value = module.eks.cluster_oidc_issuer_url
}

output "eks_oidc_provider" {
  value = module.eks.oidc_provider
}

output "eks_oidc_provider_arn" {
  value = module.eks.oidc_provider_arn
}
```

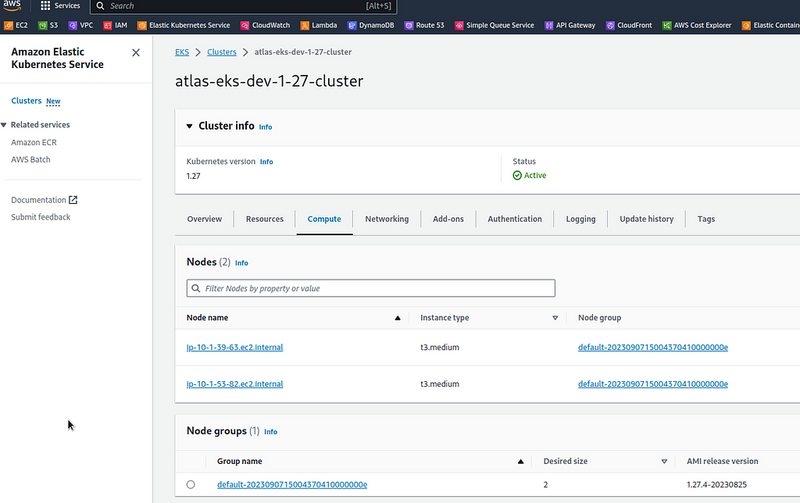

Check with terraform plan, deploy, and then verify the cluster itself.

Create a kubectl context in ~/.kube/config:

```
$ aws --profile work --region us-east-1 eks update-kubeconfig --name atlas-eks-dev-1-27-cluster --alias atlas-eks-dev-1-27-work-profile
Updated context atlas-eks-dev-1-27-work-profile in /home/setevoy/.kube/config
```

And check access with can-i:

```
$ kubectl auth can-i get pod
yes
```

Additional IAM Role

Create an IAM Role with the eks:DescribeCluster policy and map it in the cluster to the system:masters group: other users will be able to authenticate to the cluster by assuming this role.

In the role’s trust policy, we need to pass the AWS Account ID in the Principal, so that only users of this account can perform the AssumeRole operation.

In order not to add the Account ID as a separate variable in variables.tf, add the data source "aws_caller_identity" to eks.tf:

```hcl
...

data "aws_caller_identity" "current" {}
```

Then describe the role itself: the assume_role_policy defines who is allowed to assume this role, and the inline_policy grants permission for the eks:DescribeCluster AWS API call:

```hcl
...

resource "aws_iam_role" "eks_masters_access_role" {
  name = "${local.env_name}-masters-access-role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = "sts:AssumeRole"
        Effect = "Allow"
        Sid    = ""
        Principal = {
          AWS = "arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"
        }
      }
    ]
  })

  inline_policy {
    name = "${local.env_name}-masters-access-policy"

    policy = jsonencode({
      Version = "2012-10-17"
      Statement = [
        {
          Action   = ["eks:DescribeCluster*"]
          Effect   = "Allow"
          Resource = "*"
        },
      ]
    })
  }

  tags = {
    Name = "${local.env_name}-access-role"
  }
}
```
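The same trust policy can also be written with the aws_iam_policy_document data source instead of jsonencode(). A sketch, where the data source name eks_masters_assume_role is hypothetical: the rendered JSON is equivalent, but the HCL form is a bit easier to extend later, for example with a condition block.

```hcl
# Trust policy: IAM identities of this AWS account may call sts:AssumeRole,
# provided their own IAM policies allow it
data "aws_iam_policy_document" "eks_masters_assume_role" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRole"]

    principals {
      type        = "AWS"
      identifiers = ["arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"]
    }
  }
}

resource "aws_iam_role" "eks_masters_access_role" {
  name               = "${local.env_name}-masters-access-role"
  assume_role_policy = data.aws_iam_policy_document.eks_masters_assume_role.json

  # inline_policy and tags stay as above
}
```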

Return to the module "eks", and add the mapping of this role to the aws_auth_roles input:

```hcl
  ...
  aws_auth_users = var.eks_aws_auth_users
  aws_auth_roles = [
    {
      rolearn  = aws_iam_role.eks_masters_access_role.arn
      username = aws_iam_role.eks_masters_access_role.arn
      groups   = ["system:masters"]
    }
  ]
  ...
```

Add an output:

```hcl
...

output "eks_masters_access_role" {
  value = aws_iam_role.eks_masters_access_role.arn
}
```

Let’s deploy the changes:

```
$ terraform apply
...
Outputs:
...
eks_masters_access_role = "arn:aws:iam::492***148:role/atlas-eks-dev-1-27-masters-access-role"
...
```

Check the aws-auth ConfigMap itself:

```
$ kk -n kube-system get cm aws-auth -o yaml
apiVersion: v1
data:
  ...
  mapRoles: |
    - "groups":
      - "system:bootstrappers"
      - "system:nodes"
      "rolearn": "arn:aws:iam::492***148:role/default-eks-node-group-20230907145056376500000001"
      "username": "system:node:"
    - "groups":
      - "system:masters"
      "rolearn": "arn:aws:iam::492***148:role/atlas-eks-dev-1-27-masters-access-role"
      "username": "arn:aws:iam::492***148:role/atlas-eks-dev-1-27-masters-access-role"
  mapUsers: |
    - "groups":
      - "system:masters"
      "userarn": "arn:aws:iam::492***148:user/arseny"
      "username": "arseny"
...
```

Add a new profile to ~/.aws/config:

```ini
...
[profile work]
region = us-east-1
output = json

[profile eks-1-27-masters-role]
role_arn = arn:aws:iam::492***148:role/atlas-eks-dev-1-27-masters-access-role
source_profile = work
```

Add a new context for kubectl:

```
$ aws --profile eks-1-27-masters-role --region us-east-1 eks update-kubeconfig --name atlas-eks-dev-1-27-cluster --alias eks-1-27-masters-role
Updated context eks-1-27-masters-role in /home/setevoy/.kube/config
```

And check the access:

```
$ kubectl auth can-i get pod
yes

$ kubectl get pod -A
NAMESPACE     NAME             READY   STATUS    RESTARTS   AGE
kube-system   aws-node-99gg6   2/2     Running   0          41h
kube-system   aws-node-bllg2   2/2     Running   0          41h
...
```

In the following part, we will install the rest — Karpenter and various Controllers.

Get “http://localhost/api/v1/namespaces/kube-system/configmaps/aws-auth”: dial tcp: lookup localhost on 10.0.0.1:53: no such host

During the tests, I recreated the cluster to make sure that all the code described above is working.

And when deleting the cluster, Terraform gave an error:

```
...
Plan: 0 to add, 0 to change, 34 to destroy.
...
╷
│ Error: Get "http://localhost/api/v1/namespaces/kube-system/configmaps/aws-auth": dial tcp: lookup localhost on 10.0.0.1:53: no such host
│
...
```

The solution is to remove the aws-auth from the state file:

```
$ terraform state rm 'module.eks.kubernetes_config_map_v1_data.aws_auth[0]'
```

Of course, this should be done only for the test cluster, not for Production.

Originally published at RTFM: Linux, DevOps, and system administration.
