I wrote about launching Nexus in the Nexus: launch in Kubernetes, and PyPI caching repository configuration post. Now I want to add Docker image caching next to the PyPI cache, especially since Docker Hub introduces new limits starting April 1, 2025 (see Docker Hub usage and limits).

We’ll do it as usual: first run it manually on a local work laptop to see how it works in general, then add a config to the Helm chart values, deploy it to Kubernetes, and see how to configure ContainerD to use this mirror.

Content

- Running Sonatype Nexus locally with Docker

- Creating a Docker cache repository

- Enabling the Docker Security Realm

- Checking the Docker mirror

- ContainerD and registry mirrors

- Nexus Helm chart values and Kubernetes

- Ingress/ALB for Nexus Docker cache

- Configuring ContainerD mirror on Kubernetes WorkerNodes

- Karpenter and the config for ContainerD

- Useful links

Running Sonatype Nexus locally with Docker

Create a local directory for Nexus data so that the data survives container restarts, and change its owner to 200:200, the user Nexus runs as inside the sonatype/nexus3 image, because otherwise you’ll get errors like these:

...

mkdir: cannot create directory '../sonatype-work/nexus3/log': Permission denied

mkdir: cannot create directory '../sonatype-work/nexus3/tmp': Permission denied

...

Run:

$ mkdir /home/setevoy/Temp/nexus3-data/

$ sudo chown -R 200:200 /home/setevoy/Temp/nexus3-data/

Start Nexus with this directory mounted as /nexus-data, and publish ports for the Nexus web UI (8081 inside the container, 8080 on the host) and for the Docker registry connector that we will create later (8092):

$ docker run -p 8080:8081 -p 8092:8092 --name nexus3 --restart=always -v /home/setevoy/Temp/nexus3-data:/nexus-data sonatype/nexus3

Wait for everything to start, and get the admin password:

$ docker exec -ti nexus3 cat /nexus-data/admin.password

d549658c-f57a-4339-a589-1f244d4dd21b

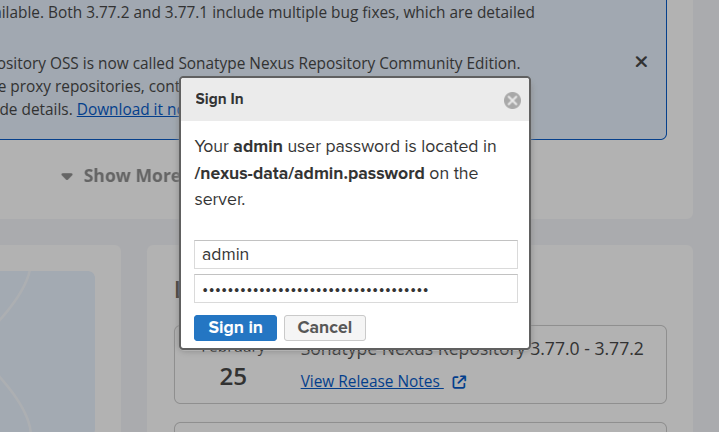

Open http://localhost:8080 in a browser and log in:

You can skip the Setup wizard, or quickly click “Next” and set a new admin password.

Creating a Docker cache repository

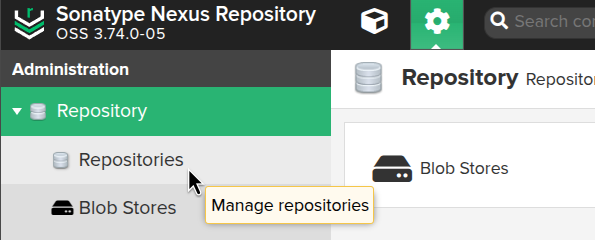

Go to Administration > Repository > Repositories:

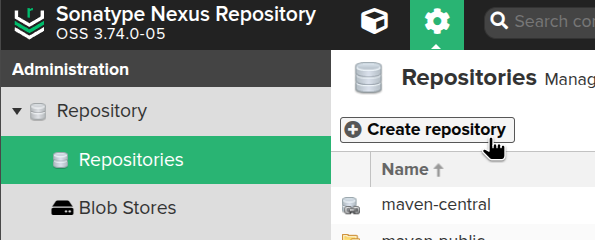

Click Create repository:

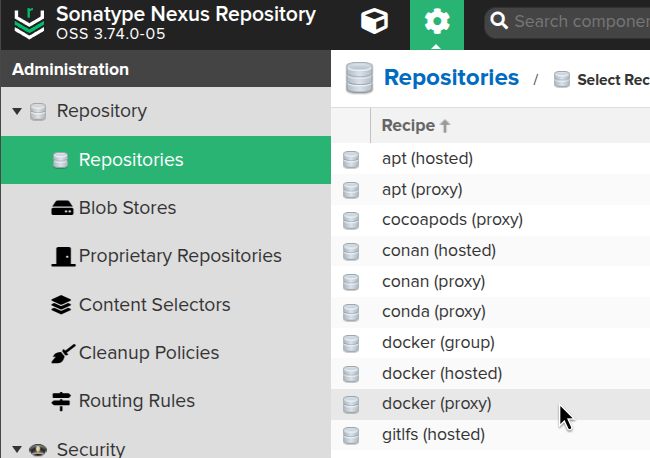

Select the docker (proxy) type:

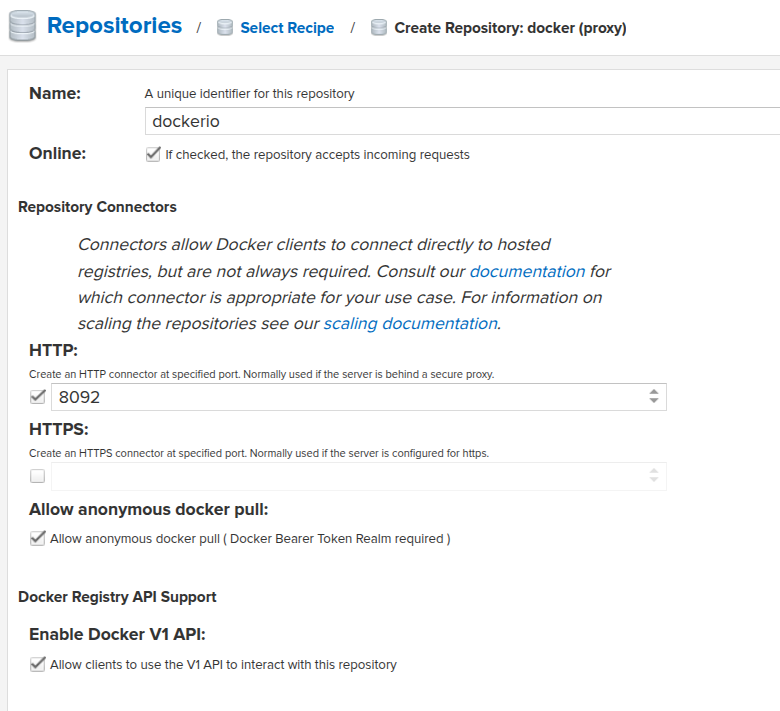

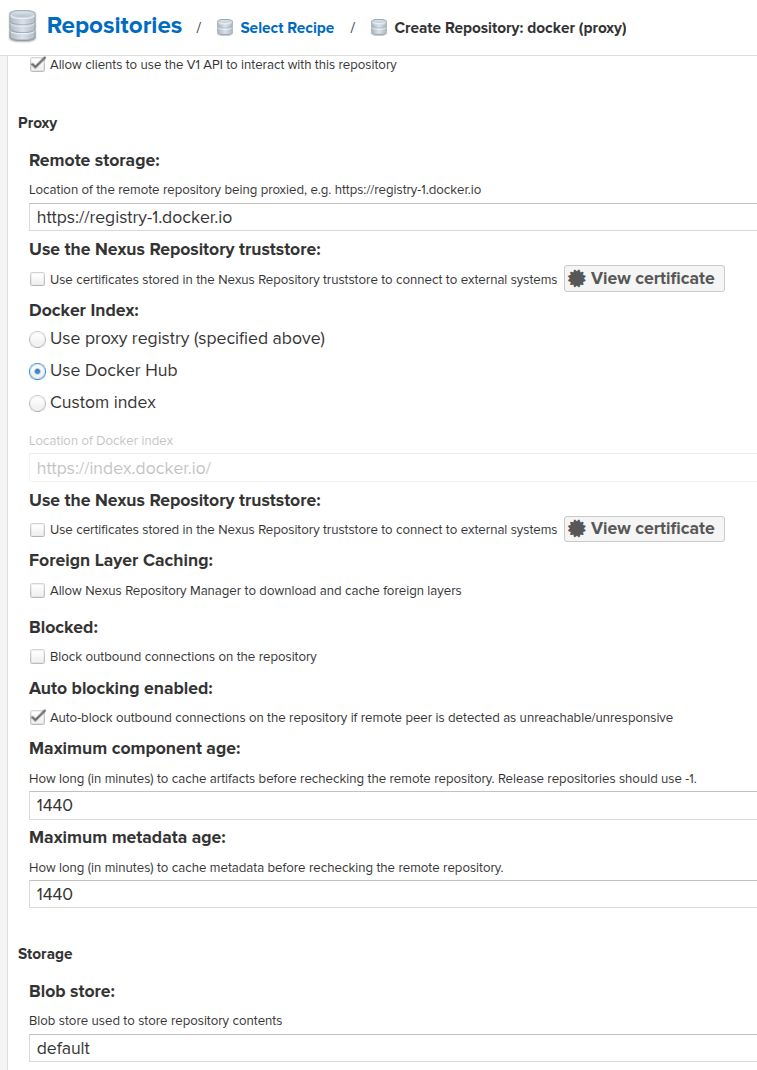

Specify a name and the HTTP port (8092 here) on which the repository’s connector will accept connections from the Docker daemon, and enable anonymous Docker pulls:

Next, set the upstream address to pull the original images from, https://registry-1.docker.io; the rest of the parameters can be left unchanged for now:

You can also create a docker (group) repository, which exposes several Nexus repositories through a single connector. I don’t need it yet, although I might in the future.

See Grouping Docker Repositories and Using Nexus OSS as a proxy/cache for Docker images.
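The same repository can also be created without the UI, through the Nexus REST API endpoint POST /service/rest/v1/repositories/docker/proxy. Below is a minimal sketch, assuming the local instance on localhost:8080 and the admin password set in the wizard; the helper names are hypothetical, and the payload uses only a subset of the fields, so check it against the API reference of your Nexus version before relying on it.

```shell
#!/bin/sh
# Sketch: create a docker (proxy) repository through the Nexus REST API.
# The field set mirrors the UI form; verify it for your Nexus version.

# Print the JSON body for a docker (proxy) repository.
# $1 = repository name, $2 = connector HTTP port, $3 = upstream registry URL
docker_proxy_payload() {
  cat <<EOF
{
  "name": "$1",
  "online": true,
  "storage": {"blobStoreName": "default", "strictContentTypeValidation": true},
  "proxy": {"remoteUrl": "$3", "contentMaxAge": 1440, "metadataMaxAge": 1440},
  "negativeCache": {"enabled": true, "timeToLive": 1440},
  "httpClient": {"blocked": false, "autoBlock": true},
  "docker": {"v1Enabled": false, "forceBasicAuth": false, "httpPort": $2},
  "dockerProxy": {"indexType": "REGISTRY"}
}
EOF
}

# POST the payload to Nexus (reads the body from stdin with --data @-).
# $1 = Nexus base URL, $2 = "user:password", $3 = repo name, $4 = port
create_docker_proxy() {
  docker_proxy_payload "$3" "$4" https://registry-1.docker.io |
    curl -fsS -u "$2" -H 'Content-Type: application/json' \
      -X POST --data @- "$1/service/rest/v1/repositories/docker/proxy"
}
```

For example, `create_docker_proxy http://localhost:8080 "admin:YOUR_PASSWORD" docker-cache 8092` should create a repository equivalent to the one configured in the UI (docker-cache is the name used later in the Helm values). "forceBasicAuth": false is meant to correspond to allowing anonymous pulls.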

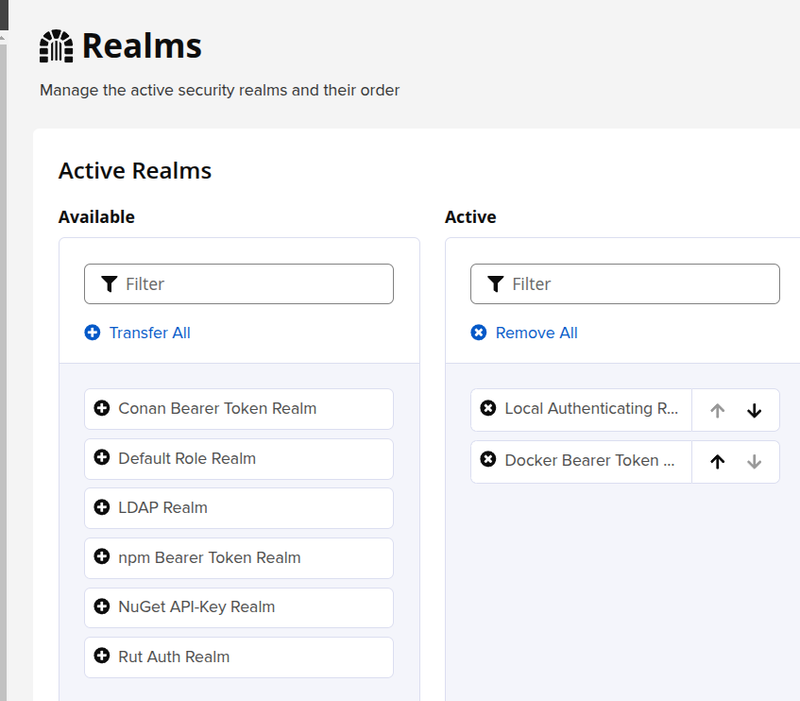

Enabling the Docker Security Realm

Even though we don’t use authentication, the Docker Bearer Token Realm must be enabled; otherwise, anonymous pulls fail with an unauthorized error.

Go to Security > Realms and add the Docker Bearer Token Realm to the active realms:
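Realms can be switched on through the REST API as well: PUT /service/rest/v1/security/realms/active takes a JSON array of realm IDs, and the IDs used here are the ones from the Helm values later in this post. A sketch with hypothetical helper names; note that PUT replaces the whole active list, so include every realm that is already active (a GET on the same endpoint returns them).

```shell
#!/bin/sh
# Build a JSON array of realm IDs from the arguments, e.g. ["A","B"].
# The function body runs in a subshell, so its variables do not leak.
realms_json() (
  out="["
  sep=""
  for realm in "$@"; do
    out="$out$sep\"$realm\""
    sep=","
  done
  printf '%s]\n' "$out"
)

# Replace the list of active realms.
# $1 = Nexus base URL, $2 = "user:password", remaining args = realm IDs
set_active_realms() (
  base=$1
  creds=$2
  shift 2
  realms_json "$@" |
    curl -fsS -u "$creds" -H 'Content-Type: application/json' \
      -X PUT --data @- "$base/service/rest/v1/security/realms/active"
)
```

Usage: `set_active_realms http://localhost:8080 "admin:YOUR_PASSWORD" NexusAuthenticatingRealm DockerToken`.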

Checking the Docker mirror

Find the IP address of the container running Nexus:

$ docker inspect nexus3

...

"NetworkSettings": {

...

"Networks": {

...

"IPAddress": "172.17.0.2",

...

Previously, we’ve set the port for Docker cache in Nexus to 8092.

On the workstation, edit /etc/docker/daemon.json and set registry-mirrors, plus insecure-registries because the connector serves plain HTTP without TLS:

{

"insecure-registries": ["http://172.17.0.2:8092"],

"registry-mirrors": ["http://172.17.0.2:8092"]

}
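Instead of editing the file by hand, it can be rendered by a small helper. A sketch assuming the mirror is reachable at 172.17.0.2:8092 as above; render_daemon_json is a hypothetical name, and its output replaces the whole file, so merge it manually if your daemon.json already has other settings.

```shell
#!/bin/sh
# Render daemon.json for a plain-HTTP registry mirror.
# $1 = mirror host:port, $2 = "true" to also enable debug logging (optional)
render_daemon_json() (
  mirror=$1
  debug=${2:-false}
  printf '{\n'
  printf '  "insecure-registries": ["http://%s"],\n' "$mirror"
  if [ "$debug" = "true" ]; then
    printf '  "registry-mirrors": ["http://%s"],\n' "$mirror"
    printf '  "debug": true\n'
  else
    # last key: no trailing comma, to keep the JSON valid
    printf '  "registry-mirrors": ["http://%s"]\n' "$mirror"
  fi
  printf '}\n'
)
```

For example, `render_daemon_json 172.17.0.2:8092 | sudo tee /etc/docker/daemon.json`, or pass true as the second argument to get the debug variant used further down.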

Restart the local Docker service:

$ sudo systemctl restart docker

Run docker info to verify that the changes have been applied:

$ docker info

...

Insecure Registries:

172.17.0.2:8092

::1/128

127.0.0.0/8

Registry Mirrors:

http://172.17.0.2:8092/
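To check this from a script rather than by eye, the Registry Mirrors section of the docker info output can be parsed. A sketch with a hypothetical helper that assumes the layout shown above, where each mirror is printed as a URL on its own line right under Registry Mirrors:

```shell
#!/bin/sh
# Succeed if `docker info` output (read on stdin) lists $1 under
# "Registry Mirrors:". A trailing "/" is ignored on both sides.
has_registry_mirror() {
  awk -v want="$1" '
    BEGIN { sub(/\/$/, "", want) }
    /Registry Mirrors:/ { in_mirrors = 1; next }
    in_mirrors {
      # mirror entries are URLs; any other line ends the section
      if ($0 !~ /^[ \t]*https?:\/\//) { in_mirrors = 0; next }
      entry = $0
      gsub(/^[ \t]+|[ \t]+$/, "", entry)
      sub(/\/$/, "", entry)
      if (entry == want) found = 1
    }
    END { exit (found ? 0 : 1) }
  '
}
```

Usage: `docker info 2>/dev/null | has_registry_mirror http://172.17.0.2:8092 && echo "mirror configured"`.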

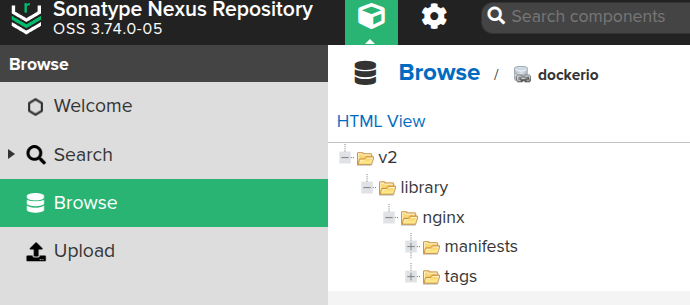

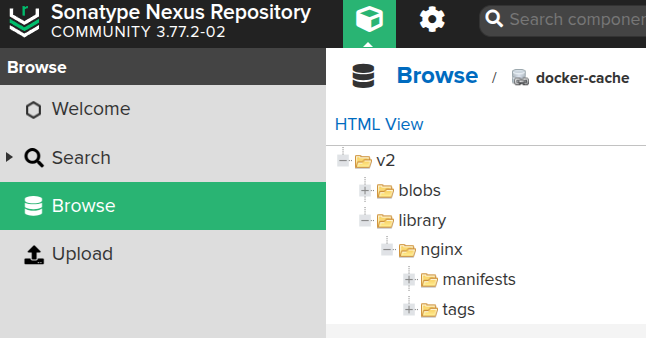

Run docker pull nginx. The request should go through Nexus, and the image data should be cached there:

If the data does not appear, there is most likely a problem with authentication.

To check, enable debug logging by adding "debug": true to /etc/docker/daemon.json:

{

"insecure-registries": ["http://172.17.0.2:8092"],

"registry-mirrors": ["http://172.17.0.2:8092"],

"debug": true

}

Restart the local Docker, run docker pull again, and look at the logs with journalctl -u docker:

$ sudo journalctl -u docker --no-pager -f

...

level=debug msg="Trying to pull rabbitmq from http://172.17.0.2:8092/"

level=info msg="Attempting next endpoint for pull after error: Head "http://172.17.0.2:8092/v2/library/rabbitmq/manifests/latest": unauthorized: "

level=debug msg="Trying to pull rabbitmq from https://registry-1.docker.io"

...

The first time, I had forgotten to enable the Docker Bearer Token Realm.

The second time, I had a token for https://index.docker.io saved in my local ~/.docker/config.json, and Docker tried to use it. In this case, you can simply delete or move config.json and pull again.
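The log shows the exact Registry HTTP API v2 path the daemon requested: /v2/<name>/manifests/<tag>, where Docker Hub official images get the library/ prefix. A hypothetical helper that builds that URL for an image reference can be used to search the journal or to query the mirror directly with curl, bypassing the Docker daemon and its ~/.docker/config.json. It is a sketch: digest references (@sha256:...) are not handled.

```shell
#!/bin/sh
# Build the Registry HTTP API v2 manifest URL for a Docker Hub image.
# $1 = mirror base URL, $2 = image reference such as nginx, nginx:1.27,
# bitnami/redis:7.2 or docker.io/library/nginx (no digest support here)
manifest_url() (
  base=${1%/}
  ref=${2#docker.io/}
  case "$ref" in
    *:*) name=${ref%%:*}; tag=${ref#*:} ;;
    *)   name=$ref; tag=latest ;;
  esac
  # official images live under the "library/" namespace on Docker Hub
  case "$name" in
    */*) ;;
    *) name="library/$name" ;;
  esac
  printf '%s/v2/%s/manifests/%s\n' "$base" "$name" "$tag"
)
```

For example, `curl -sS -I -o /dev/null -w '%{http_code}\n' "$(manifest_url http://172.17.0.2:8092 rabbitmq)"`. Which status code an unauthenticated request gets depends on the realm and anonymous-access settings, so treat it as a reachability check, not as a substitute for a real pull.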

Okay.

What about ContainerD? After all, we don’t have Docker in AWS Elastic Kubernetes Service.

ContainerD and registry mirrors

To be honest, containerd was more painful than Nexus: TOML configuration, different versions of containerd itself and of its config format, and deprecated parameters.

This format makes my eyes hurt:

[plugins]

[plugins.'io.containerd.cri.v1.runtime']

[plugins.'io.containerd.cri.v1.runtime'.containerd]

[plugins.'io.containerd.cri.v1.runtime'.containerd.runtimes]

[plugins.'io.containerd.cri.v1.runtime'.containerd.runtimes.runc]

[plugins.'io.containerd.cri.v1.runtime'.containerd.runtimes.runc.options]

Anyway, it worked, so here we go.

Let’s do it locally first, and then we’ll set it up in Kubernetes.

On Arch Linux, install containerd and crictl with pacman:

$ sudo pacman -S containerd crictl

Generate a default configuration for containerd:

$ sudo mkdir /etc/containerd/

$ containerd config default | sudo tee /etc/containerd/config.toml

In the /etc/containerd/config.toml file, add the necessary parameters for mirrors:

...

[plugins]

[plugins.'io.containerd.cri.v1.images']

...

[plugins.'io.containerd.cri.v1.images'.registry]

[plugins.'io.containerd.cri.v1.images'.registry.mirrors."docker.io"]

endpoint = ["http://172.17.0.2:8092"]

...

Here:

- plugins.'io.containerd.cri.v1.images': parameters for the image service (image management)

- registry: registry settings for the image service

- mirrors."docker.io": mirrors for docker.io

- endpoint: where to go when an image needs to be pulled from docker.io
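The mirror table can also be added by a script, which is handy when the same change has to be repeated. A minimal sketch for the containerd 2.x (config version 3) layout used above; add_docker_io_mirror_v3 is a hypothetical helper, and it appends a new table only if no docker.io mirror table exists yet, because TOML does not allow the same table to be defined twice.

```shell
#!/bin/sh
# Append a docker.io mirror table to a containerd 2.x config.toml,
# unless a docker.io mirror table (single- or double-quoted) is present.
# $1 = path to config.toml, $2 = mirror endpoint URL
add_docker_io_mirror_v3() (
  cfg=$1
  endpoint=$2
  header="[plugins.'io.containerd.cri.v1.images'.registry.mirrors.'docker.io']"
  if grep -qE "registry\\.mirrors\\.['\"]docker\\.io['\"]" "$cfg" 2>/dev/null; then
    echo "a docker.io mirror is already configured in $cfg" >&2
    exit 0
  fi
  printf '\n%s\n  endpoint = [%s]\n' "$header" "'$endpoint'" >> "$cfg"
)
```

Run it as root, for example `add_docker_io_mirror_v3 /etc/containerd/config.toml http://172.17.0.2:8092`, then restart containerd.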

Restart containerd:

$ sudo systemctl restart containerd

Check that the new settings have been applied:

$ containerd config dump | grep -A 1 mirrors

[plugins.'io.containerd.cri.v1.images'.registry.mirrors]

[plugins.'io.containerd.cri.v1.images'.registry.mirrors.'docker.io']

endpoint = ['http://172.17.0.2:8092']
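The same check can be scripted. A sketch of a hypothetical helper that reads containerd config dump output and prints the endpoint line of the docker.io mirror table, failing if there is none; it matches both the 'docker.io' and "docker.io" quoting styles and assumes the endpoint line sits under the table header, as in the dump above.

```shell
#!/bin/sh
# Print the endpoint line(s) of the docker.io mirror table from
# `containerd config dump` output on stdin; exit 1 if none is found.
docker_io_endpoints() {
  awk '
    # "." stands for either quote character around docker.io
    /registry\.mirrors\..docker\.io/ { in_section = 1; next }
    # the next table header ends the section
    in_section && /^[ \t]*\[/ { in_section = 0 }
    in_section && /endpoint/ {
      line = $0
      sub(/^[ \t]+/, "", line)
      print line
      found = 1
    }
    END { exit (found ? 0 : 1) }
  '
}
```

Usage: `sudo containerd config dump | docker_io_endpoints`.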

Run crictl pull:

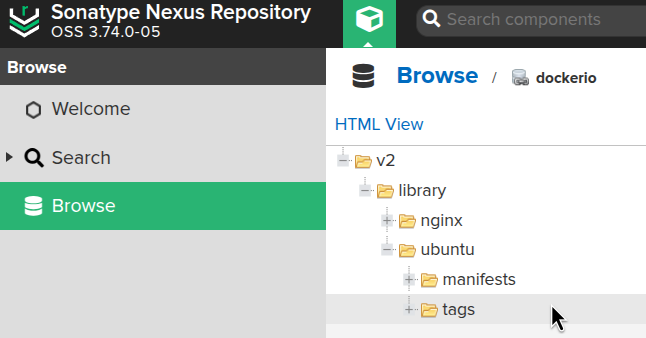

$ sudo crictl pull ubuntu

And check the Nexus:

The Ubuntu image data appears.

Everything seems to be working here, so let’s try to configure the whole thing in Kubernetes.

Nexus Helm chart values and Kubernetes

In the Adding a repository to Nexus using Helm chart values part of my previous post, I wrote a bit about which values I added to the Nexus Helm chart to run the PyPI cache in Kubernetes.

Let’s update them a bit:

- add a separate blob store: attach a dedicated persistentVolume, because the default one is only 8 gigabytes; that is more or less enough for the PyPI cache, but it will not be enough for Docker images

- add additionalPorts: the port on which the Docker cache will serve requests

- enable Ingress

All available values are in the chart’s values.yaml.

I deploy from Terraform when setting up a Kubernetes cluster.

All together, including the PyPI cache, it looks like this:

resource "helm_release" "nexus" {
  namespace        = "ops-nexus-ns"
  create_namespace = true
  name             = "nexus3"
  repository       = "https://stevehipwell.github.io/helm-charts/"
  #repository_username = data.aws_ecrpublic_authorization_token.token.user_name
  #repository_password = data.aws_ecrpublic_authorization_token.token.password
  chart            = "nexus3"
  version          = "5.7.2"

  # also:
  # Environment:
  #   INSTALL4J_ADD_VM_PARAMS: -Djava.util.prefs.userRoot=${NEXUS_DATA}/javaprefs -Xms1024m -Xmx1024m -XX:MaxDirectMemorySize=2048m

  values = [
    <<-EOT
      # use existing Kubernetes Secret with admin's password
      rootPassword:
        secret: nexus-root-password
        key: password
      # enable storage
      persistence:
        enabled: true
        storageClass: gp2-retain
      # create additional PersistentVolume to store Docker cached data
      extraVolumes:
        - name: nexus-docker-volume
          persistentVolumeClaim:
            claimName: nexus-docker-pvc
      # mount the PersistentVolume into the Nexus' Pod
      extraVolumeMounts:
        - name: nexus-docker-volume
          mountPath: /data/nexus/docker-cache
      resources:
        requests:
          cpu: 100m
          memory: 2000Mi
        limits:
          cpu: 500m
          memory: 3000Mi
      # enable collecting Nexus metrics into VictoriaMetrics/Prometheus
      metrics:
        enabled: true
        serviceMonitor:
          enabled: true
      # use dedicated ServiceAccount
      # still, EKS Pod Identity isn't working (yet?)
      serviceAccount:
        create: true
        name: nexus3
        automountToken: true
      # add additional TCP port for the Docker caching listener
      service:
        additionalPorts:
          - port: 8082
            name: docker-proxy
            containerPort: 8082
            hosts:
              - nexus-docker.ops.example.co
      # to be able to connect from Kubernetes WorkerNodes, we have to have a dedicated AWS LoadBalancer, not only Kubernetes Service with ClusterIP
      ingress:
        enabled: true
        annotations:
          alb.ingress.kubernetes.io/group.name: ops-1-30-internal-alb
          alb.ingress.kubernetes.io/target-type: ip
        ingressClassName: alb
        hosts:
          - nexus.ops.example.co
      # define the Nexus configuration
      config:
        enabled: true
        anonymous:
          enabled: true
        blobStores:
          # local EBS storage; 8 GB total default size ('persistence' config above)
          # is attached to a repository in the 'repos.pip-cache' below
          - name: default
            type: file
            path: /nexus-data/blobs/default
            softQuota:
              type: spaceRemainingQuota
              limit: 500
          # dedicated storage for PyPI caching
          - name: PyPILocalStore
            type: file
            path: /nexus-data/blobs/pypi
            softQuota:
              type: spaceRemainingQuota
              limit: 500
          # dedicated storage for Docker caching
          - name: DockerCacheLocalStore
            type: file
            path: /data/nexus/docker-cache
            softQuota:
              type: spaceRemainingQuota
              limit: 500
        # enable Docker Bearer Token Realm
        realms:
          enabled: true
          values:
            - NexusAuthenticatingRealm
            - DockerToken
        # cleanup policies for Blob Storages
        # are attached to the repositories below
        cleanup:
          - name: CleanupAll
            notes: "Cleanup content that hasn't been updated in 14 days and hasn't been downloaded in 28 days."
            format: ALL_FORMATS
            mode: delete
            criteria:
              isPrerelease:
              lastBlobUpdated: "1209600"
              lastDownloaded: "2419200"
        repos:
          - name: pip-cache
            format: pypi
            type: proxy
            online: true
            negativeCache:
              enabled: true
              timeToLive: 1440
            proxy:
              remoteUrl: https://pypi.org
              metadataMaxAge: 1440
              contentMaxAge: 1440
            httpClient:
              blocked: false
              autoBlock: true
              connection:
                retries: 0
                useTrustStore: false
            storage:
              blobStoreName: default
              strictContentTypeValidation: false
            cleanup:
              policyNames:
                - CleanupAll
          - name: docker-cache
            format: docker
            type: proxy
            online: true
            negativeCache:
              enabled: true
              timeToLive: 1440
            proxy:
              remoteUrl: https://registry-1.docker.io
              metadataMaxAge: 1440
              contentMaxAge: 1440
            httpClient:
              blocked: false
              autoBlock: true
              connection:
                retries: 0
                useTrustStore: false
            storage:
              blobStoreName: DockerCacheLocalStore
              strictContentTypeValidation: false
            cleanup:
              policyNames:
                - CleanupAll
            docker:
              v1Enabled: false
              forceBasicAuth: false
              httpPort: 8082
            dockerProxy:
              indexType: "REGISTRY"
              cacheForeignLayers: "true"
    EOT
  ]
}
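The cleanup criteria in the values above are given in seconds: 1209600 is 14 days and 2419200 is 28 days, at 86400 seconds per day, which matches the policy notes. A tiny sketch for computing or double-checking such values; the helper names are hypothetical:

```shell
#!/bin/sh
# Convert whole days to seconds (1 day = 86400 seconds).
days_to_seconds() {
  echo $(( $1 * 86400 ))
}

# Convert seconds back to whole days (integer division truncates).
seconds_to_days() {
  echo $(( $1 / 86400 ))
}
```

For example, `days_to_seconds 30` prints 2592000.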

Deploy, and open a port-forward to the Service (kk is an alias for kubectl):

$ kk -n ops-nexus-ns port-forward svc/nexus3 8081

Check the Realm:

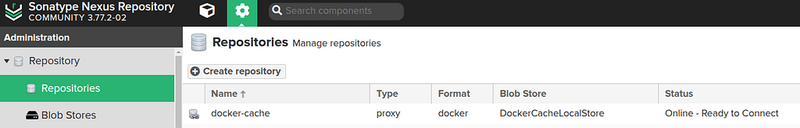

Check the Docker repository itself:

Good.

Ingress/ALB for Nexus Docker cache

Since ContainerD runs directly on the EC2 instance rather than in a Pod, it uses the node’s DNS resolver instead of the cluster DNS, so it cannot resolve nexus3.ops-nexus-ns.svc.cluster.local and pull images from its port 8082. That’s why the docker-proxy port needs to be exposed through an AWS LoadBalancer.

In the Helm chart, it is possible to create a separate Ingress and set all the parameters:

...
# to be able to connect from Kubernetes WorkerNodes, we have to have a dedicated AWS LoadBalancer, not only Kubernetes Service with ClusterIP
ingress:
  enabled: true
  annotations:
    alb.ingress.kubernetes.io/group.name: ops-1-30-internal-alb
    alb.ingress.kubernetes.io/target-type: ip
  ingressClassName: alb
  hosts:
    - nexus.ops.example.co
...

I use the alb.ingress.kubernetes.io/group.name annotation to combine multiple Kubernetes Ingresses through a single AWS LoadBalancer, see Kubernetes: a single AWS Load Balancer for different Kubernetes Ingresses.

An important note here: in the Ingress parameters, you do not need to add ports and hosts that are specified in the Service.

That is, for this Service config:

...
# add additional TCP port for the Docker caching listener
service:
  additionalPorts:
    - port: 8082
      name: docker-proxy
      containerPort: 8082
      hosts:
        - nexus-docker.ops.example.co
...

The Helm chart automatically adds a matching rule to the Ingress:

$ kk -n ops-nexus-ns get ingress nexus3 -o yaml
...
spec:
  ingressClassName: alb
  rules:
  - host: nexus.ops.example.co
    http:
      paths:
      - backend:
          service:
            name: nexus3
            port:
              name: http
        path: /
        pathType: Prefix
  - host: nexus-docker.ops.example.co
    http:
      paths:
      - backend:
          service:
            name: nexus3
            port:
              name: docker-proxy
        path: /
        pathType: Prefix

See ingress.yaml.

Next.

Configuring ContainerD mirror on Kubernetes WorkerNodes

We have already done this locally, and in an AWS EKS cluster it’s basically the same.

The only difference is that locally we have containerd v2.0.3, while AWS EKS runs 1.7.25, so the configuration format is slightly different.

On AWS EKS WorkerNode/EC2, check the /etc/containerd/config.toml file:

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = "/etc/containerd/certs.d:/etc/docker/certs.d"

...

For now, let’s add a new mirror here manually. This is where the configuration differs from what we did locally; see the examples in Configure Registry Credentials Example (GCR with Service Account Key Authentication).

That is, containerd 1.x uses configuration version 2, and containerd 2.x uses configuration version 3…? Okay, man…
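When a script has to support both versions, it can pick the config layout from the containerd version. A sketch under the mapping stated above (containerd 1.x generates version = 2, 2.x generates version = 3, as with the 1.7.25 and 2.0.3 releases used here); config_version_for is a hypothetical helper that accepts either a bare version or a line of containerd --version output.

```shell
#!/bin/sh
# Map a containerd release to the config.toml "version" it generates
# by default: 1.x -> 2, 2.x -> 3. Exits 1 for anything else.
config_version_for() (
  # take the first X.Y.Z number from the argument
  ver=$(printf '%s\n' "$1" | grep -oE '[0-9]+\.[0-9]+\.[0-9]+' | head -n 1)
  case "$ver" in
    1.*) echo 2 ;;
    2.*) echo 3 ;;
    *) echo "unsupported or unknown containerd version: $1" >&2; exit 1 ;;
  esac
)
```

Usage: `config_version_for "$(containerd --version)"`; the result can be compared with the version line at the top of /etc/containerd/config.toml.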

On an EC2, the config will look like this:

[plugins."io.containerd.grpc.v1.cri".registry]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors]

[plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]

endpoint = ["http://nexus-docker.ops.example.co"]

We don’t specify the port: the AWS ALB accepts the request on its HTTP listener (port 80) and routes it by hostname to the target group that points at the Nexus docker-proxy port.

Restart the service:

[root@ip-10-0-32-218 ec2-user]# systemctl restart containerd

Perform a pull of some image:

[root@ip-10-0-46-186 ec2-user]# crictl pull nginx

Let’s check the Nexus:

Everything is there.

What remains is to add the ContainerD settings when Karpenter creates a new node.

Karpenter and the config for ContainerD

My EC2NodeClasses for Karpenter are created in Terraform, see Terraform: building EKS, part 3 — Karpenter installation.

Of course, it is better to do all of this in a test environment first, or to create a separate NodeClass and NodePool.

In my case, the ~ec2-user/.ssh/authorized_keys file for SSH is already configured there via AWS EC2 UserData (see AWS: Karpenter and SSH for Kubernetes WorkerNodes), so we can also create the ContainerD mirror file there.

As we saw above, by default containerd checks the following directories:

...

[plugins."io.containerd.grpc.v1.cri".registry]

config_path = "/etc/containerd/certs.d:/etc/docker/certs.d"

...

So, we can add a new file there.

But do you remember that joke — “Now forget everything you learned at university”?

Well, forget everything we did with containerd configurations above, because it's already deprecated. Now it's cool and trendy to work with the Registry Host Namespace.

The idea is that for each registry you create a directory in /etc/containerd/certs.d with a hosts.toml file, and describe the registry settings in that file.

In our case, it will look like this:

[root@ip-10-0-45-117 ec2-user]# tree /etc/containerd/certs.d/
/etc/containerd/certs.d/
└── docker.io
    └── hosts.toml

And the hosts.toml file's content:

server = "https://docker.io"

[host."http://nexus-docker.ops.example.co"]

capabilities = ["pull", "resolve"]
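The layout above can be produced by a small helper, which keeps a provisioning script shorter and can be reused for other registries. A sketch assuming the certs.d directory from config_path; write_hosts_toml is a hypothetical name. According to the containerd registry documentation, the [host] entries are tried in the listed order before falling back to server.

```shell
#!/bin/sh
# Create <certs_dir>/<registry>/hosts.toml that points <registry>
# at a pull-through mirror (containerd registry host namespace layout).
# $1 = certs.d directory, $2 = registry namespace (e.g. docker.io),
# $3 = upstream server URL, $4 = mirror URL
write_hosts_toml() (
  dir="$1/$2"
  mkdir -p "$dir" || exit 1
  cat > "$dir/hosts.toml" <<EOF
server = "$3"

[host."$4"]
  capabilities = ["pull", "resolve"]
EOF
)
```

Usage (as root): `write_hosts_toml /etc/containerd/certs.d docker.io https://docker.io http://nexus-docker.ops.example.co`.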

Ok. We describe this whole thing in the UserData of our testing EC2NodeClass.

So here is our “favorite” YAML. I’ll give you the entire configuration to avoid problems with indents, because they gave me a bit of a headache:

resource "kubectl_manifest" "karpenter_node_class_test_latest" {
  yaml_body = <<-YAML
    apiVersion: karpenter.k8s.aws/v1
    kind: EC2NodeClass
    metadata:
      name: class-test-latest
    spec:
      kubelet:
        maxPods: 110
      blockDeviceMappings:
        - deviceName: /dev/xvda
          ebs:
            volumeSize: 40Gi
            volumeType: gp3
      amiSelectorTerms:
        - alias: al2@latest
      role: ${module.eks.eks_managed_node_groups["${local.env_name_short}-default"].iam_role_name}
      subnetSelectorTerms:
        - tags:
            karpenter.sh/discovery: "atlas-vpc-${var.aws_environment}-private"
      securityGroupSelectorTerms:
        - tags:
            karpenter.sh/discovery: ${var.env_name}
      tags:
        Name: ${local.env_name_short}-karpenter
        nodeclass: test
        environment: ${var.eks_environment}
        created-by: "karpenter"
        karpenter.sh/discovery: ${module.eks.cluster_name}
      userData: |
        #!/bin/bash
        set -e
        mkdir -p ~ec2-user/.ssh/
        touch ~ec2-user/.ssh/authorized_keys
        echo "${var.karpenter_nodeclass_ssh}" >> ~ec2-user/.ssh/authorized_keys
        chmod 600 ~ec2-user/.ssh/authorized_keys
        chown -R ec2-user:ec2-user ~ec2-user/.ssh/
        mkdir -p /etc/containerd/certs.d/docker.io
        cat <<EOF | tee /etc/containerd/certs.d/docker.io/hosts.toml
        server = "https://docker.io"
        [host."http://nexus-docker.ops.example.co"]
        capabilities = ["pull", "resolve"]
        EOF
        systemctl restart containerd
  YAML

  depends_on = [
    helm_release.karpenter
  ]
}

To trigger the creation of an EC2 instance, I have a dedicated testing Pod with tolerations and nodeAffinity (see Kubernetes: Pods and WorkerNodes – control the placement of the Pods on the Nodes), for which Karpenter should create an EC2 with the “class-test-latest” EC2NodeClass:

apiVersion: v1
kind: Pod
metadata:
  name: test-pod
spec:
  containers:
    - name: nginx
      image: nginx
      command: ['sleep', '36000']
  restartPolicy: Never
  tolerations:
    - key: "TestOnly"
      effect: "NoSchedule"
      operator: "Exists"
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: component
                operator: In
                values:
                  - test

Deploy the changes, deploy the Pod, log in to EC2 and check the config:

[root@ip-10-0-45-117 ec2-user]# cat /etc/containerd/certs.d/docker.io/hosts.toml

server = "https://docker.io"

[host."http://nexus-docker.ops.example.co"]

capabilities = ["pull", "resolve"]

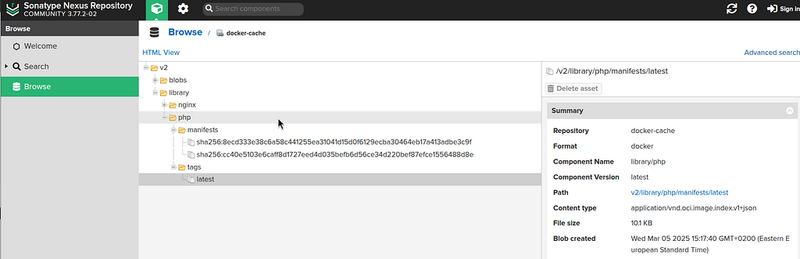

Once again, pull some image, a PHP one this time:

[root@ip-10-0-43-82 ec2-user]# crictl pull php

And check the Nexus:

Done.

Useful links

- Proxy Repository for Docker

- Using Nexus OSS as a proxy/cache for Docker images

- Configuring Docker private registry and Docker proxy on Nexus Artifactory

- Nexus Proxy Docker Repository with Nginx and Traefik

- How to overwrite the Docker Hub default registry in the ContainerD Runtime

- containerd Registry Configuration

- Registry Configuration — Introduction

- Container Runtime Interface (CRI) CLI

- Configure Image Registry

Originally published at RTFM: Linux, DevOps, and system administration.
