So, we have a deployed Kubernetes cluster — see the Terraform: Creating EKS, Part 1 — VPCs, Subnets, and Endpoints series.

And we have a GitHub Actions workflow to deploy it — see GitHub Actions: Deploying Dev/Prod environments with Terraform.

It’s time to start deploying our backend to Kubernetes.

Here we will use GitHub Actions again: we will build a Docker image with our Backend API service, store it in AWS Elastic Container Registry (ECR), and then deploy a Helm chart, passing the new Docker tag in its values.

For now, we have only one working environment, Dev, but later we will add Staging and Production. In addition, we need to be able to deploy a feature environment to the same Dev EKS, but with custom values for some variables.

For now, though, we will do everything in a test repository, “atlas-test”, with a test Helm chart.

- Release flow planning
- Setup AWS
  - Terraform: creating an IAM Role
- Workflow: Deploy Dev manually
  - Triggers
  - Environments and Variables
  - Job: Docker Build
  - Job: Helm deploy
    - Passing variables between GitHub Action Jobs
  - Job: creating Git Tag
    - Git commit message format
- Workflow: Deploy Feature Environment
  - Conditions for running Jobs with `if` and `github.event` context
    - Job: Deploy when creating a Pull Request with the “deploy” label
    - Job: Destroy on closing a Pull Request with the “deploy” label
  - Creating Feature Environment
- Bonus: GitHub Deployments

### Release flow planning

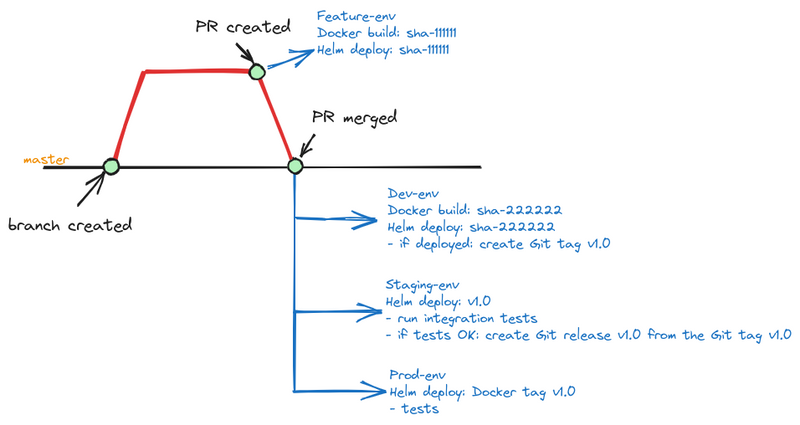

How will we release it? So far, we have settled on the following flow:

- a developer creates a branch, writes code, and tests locally in Docker Compose
- after completing work on the feature, they create a Pull Request with the “deploy” label
- **workflow: Deploy Feature Env**
  - trigger: a Pull Request is created with the “deploy” label
  - builds a Docker image and tags it with the short Git commit SHA (`git rev-parse --short HEAD`)
  - pushes it to ECR
  - creates a feature environment in GitHub
  - deploys to Kubernetes Dev in a feature-env namespace, where the developer can additionally test changes in conditions close to real ones
- after the PR is merged to the master branch:
  - **workflow: Deploy Dev**
    - trigger: a push to master, or a manual run
    - builds a Docker image and tags it with the short Git commit SHA
    - deploys to Dev
    - if the deployment was successful (Helm reported no errors, Pods started, i.e. readiness and liveness Probes passed), creates a Git Tag
    - tags the existing Docker image with this Git Tag
  - **workflow: Deploy Stage**
    - trigger: a Git Tag is created
    - deploys the existing Docker image with this tag
    - runs integration tests (mobile, web)
    - if the tests pass, creates a GitHub Release (changelog, etc.)
  - **workflow: Deploy Prod**
    - trigger: a GitHub Release is created
    - deploys the existing Docker image with the tag of this release
    - runs tests
- manual deploy
  - from any existing image to Dev or Staging

Today, we’ll create two workflows — Deploy Dev and Deploy and Destroy Feature-env.

### Setup AWS

First, we need an ECR repository and an IAM Role.

ECR will store the images to be deployed, and GitHub Actions will use the IAM Role to access ECR and to log in to EKS during the deployment.

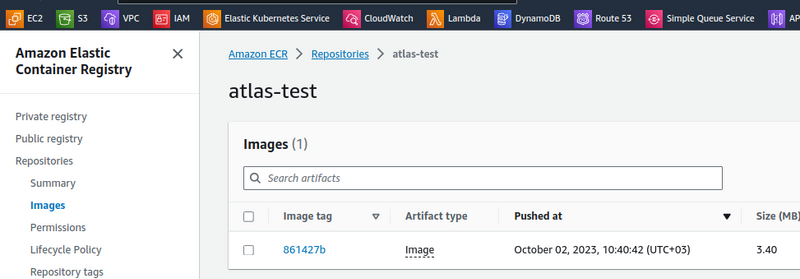

We already have a repository in ECR, also called “atlas-test”, created manually for now — later we will transfer ECR management to Terraform.

The AWS IAM roles for the GitHub projects that will deploy to Kubernetes, however, can be created right away, together with the EKS cluster.

#### Terraform: creating an IAM Role

For deploying from GitHub to AWS, we use OpenID Connect. An authenticated GitHub identity (in our case, a GitHub Actions Runner) can come to AWS and call AssumeRole there. The policies of that role then determine what it is allowed to do in AWS.

To deploy from GitHub to EKS, we need policies on:

- `eks:DescribeCluster` and `eks:ListClusters`: to authorize in the EKS cluster
- `ecr`: to push and read images from the ECR repository

In addition, for this role, we will set a restriction on which GitHub repository the AssumeRole can be executed from.

In the EKS project, add a `github_projects` variable of type `list`, which will contain all the GitHub projects that we will allow to deploy to this cluster:

```hcl
...
variable "github_projects" {
  type = list(string)
  default = [
    "atlas-test"
  ]
  description = "GitHub repositories to allow access to the cluster"
}
```

Describe the role itself, iterating over all the elements of the `github_projects` list with `for_each`. Since `for_each` accepts only a map or a set of strings, the list is converted with `toset()`:

```hcl
data "aws_caller_identity" "current" {}

resource "aws_iam_role" "eks_github_access_role" {
  # for_each needs a map or a set of strings, so convert the list
  for_each = toset(var.github_projects)

  name = "${local.env_name}-github-${each.value}-access-role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Action = "sts:AssumeRoleWithWebIdentity"
        Effect = "Allow"
        Sid    = ""
        Principal = {
          Federated : "arn:aws:iam::${data.aws_caller_identity.current.account_id}:oidc-provider/token.actions.githubusercontent.com"
        }
        Condition : {
          StringLike : {
            "token.actions.githubusercontent.com:sub" : "repo:GitHubOrgName/${each.value}:*"
          },
          StringEquals : {
            "token.actions.githubusercontent.com:aud" : "sts.amazonaws.com"
          }
        }
      }
    ]
  })

  inline_policy {
    name = "${local.env_name}-github-${each.value}-access-policy"

    policy = jsonencode({
      Version = "2012-10-17"
      Statement = [
        {
          Action   = ["eks:DescribeCluster*"]
          Effect   = "Allow"
          Resource = module.eks.cluster_arn
        },
        {
          # ListClusters does not support resource-level permissions, so it needs "*"
          Action   = ["eks:ListClusters"]
          Effect   = "Allow"
          Resource = "*"
        },
        {
          Action = [
            "ecr:GetAuthorizationToken",
            "ecr:BatchGetImage",
            "ecr:BatchCheckLayerAvailability",
            "ecr:CompleteLayerUpload",
            "ecr:GetDownloadUrlForLayer",
            "ecr:InitiateLayerUpload",
            "ecr:PutImage",
            "ecr:UploadLayerPart"
          ]
          Effect   = "Allow"
          Resource = "*"
        },
      ]
    })
  }

  tags = {
    Name = "${local.env_name}-github-${each.value}-access-policy"
  }
}
```

Here:

- in the `assume_role_policy`, we allow `AssumeRoleWithWebIdentity` on this role for the `token.actions.githubusercontent.com` Identity Provider in AWS IAM, and only from the `repo:GitHubOrgName/atlas-test` repository
- in the `inline_policy`, we allow:
  - `eks:DescribeCluster` on the cluster we will deploy to
  - `eks:ListClusters` on all clusters
  - operations in ECR on all repositories

For ECR, it would be better to limit access to specific repositories. However, this is still a test setup, and the repository naming is not settled yet.

See Pushing an image, AWS managed policies for Amazon Elastic Container Registry, and Amazon EKS identity-based policy examples.
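The `sub` condition is what restricts the role to one repository. GitHub puts the repository into the token's `sub` claim, together with the ref, or with the environment name when a job sets `environment:`. IAM then matches that claim against the `StringLike` pattern.

Below is a minimal Python sketch of that matching. It assumes `fnmatch.fnmatchcase` as a stand-in for IAM's `StringLike`. Both treat `*` and `?` as wildcards, but `fnmatch` also understands `[...]` character classes, which IAM does not. `GitHubOrgName` is the same placeholder as in the Terraform code, and the sample `sub` values are illustrative.

```python
from fnmatch import fnmatchcase


def sub_pattern(org: str, repo: str) -> str:
    """The StringLike pattern from the trust policy: repo:<org>/<repo>:*"""
    return f"repo:{org}/{repo}:*"


def trust_allows(sub_claim: str, org: str, repos: list[str]) -> bool:
    """Return True if the token's `sub` claim matches any allowed repository.

    fnmatchcase only approximates IAM StringLike: `*` matches any run of
    characters (including `/` and `:`), `?` matches a single character.
    """
    return any(fnmatchcase(sub_claim, sub_pattern(org, repo)) for repo in repos)


allowed = ["atlas-test"]
for sub in (
    "repo:GitHubOrgName/atlas-test:ref:refs/heads/master",    # push to master
    "repo:GitHubOrgName/atlas-test:environment:dev",          # job with `environment: dev`
    "repo:GitHubOrgName/atlas-test-2:ref:refs/heads/master",  # similar name, other repo
):
    print(sub, trust_allows(sub, "GitHubOrgName", allowed))
```

The third case shows why the `:` before the `*` matters. A pattern ending in `atlas-test*` would also match `atlas-test-2`.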

Next, add the created roles to `aws_auth_roles`, where we already have one Role:

```hcl
...
  aws_auth_roles = [
    {
      rolearn  = aws_iam_role.eks_masters_access_role.arn
      username = aws_iam_role.eks_masters_access_role.arn
      groups   = ["system:masters"]
    }
  ]
...
```

In `locals`, build a new `list(map(any))` called `github_roles`.

Then, in `aws_auth_roles`, use the `flatten()` function to create a new list that includes the `eks_masters_access_role` and the roles from `github_roles`:

```hcl
...
locals {
  vpc_out = data.terraform_remote_state.vpc.outputs

  github_roles = [for role in aws_iam_role.eks_github_access_role : {
    rolearn  = role.arn
    username = role.arn
    groups   = ["system:masters"]
  }]

  aws_auth_roles = flatten([
    {
      rolearn  = aws_iam_role.eks_masters_access_role.arn
      username = aws_iam_role.eks_masters_access_role.arn
      groups   = ["system:masters"]
    },
    local.github_roles
  ])
}
...
```
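For readers less used to HCL, here is a rough Python equivalent of what `flatten()` does in `aws_auth_roles`. It recursively replaces nested lists with their elements, so the single masters-role map and the `github_roles` list end up as one flat list of maps. The function names and the ARNs below are hypothetical placeholders, not values from the cluster.

```python
from typing import Any


def tf_flatten(items: list[Any]) -> list[Any]:
    """Mimic Terraform's flatten(): nested lists are expanded recursively,
    every other element (here, role maps) is kept as-is."""
    result: list[Any] = []
    for item in items:
        if isinstance(item, list):
            result.extend(tf_flatten(item))
        else:
            result.append(item)
    return result


def role_entry(arn: str) -> dict[str, Any]:
    # Same shape as the objects in aws_auth_roles
    return {"rolearn": arn, "username": arn, "groups": ["system:masters"]}


# Hypothetical ARNs for illustration only
masters = role_entry("arn:aws:iam::123456789012:role/dev-eks-masters-access-role")
github_roles = [role_entry("arn:aws:iam::123456789012:role/dev-github-atlas-test-access-role")]

aws_auth_roles = tf_flatten([masters, github_roles])
for entry in aws_auth_roles:
    print(entry["rolearn"])
```

Without `flatten()`, the second element would be a nested list rather than another role map, so the result would not be the flat list of role mappings that ends up in `mapRoles`.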

For now, we’re using the system:masters group here because it's still in development and I'm not setting up RBAC yet. But see User-defined cluster role binding should not include system:masters group as subject.

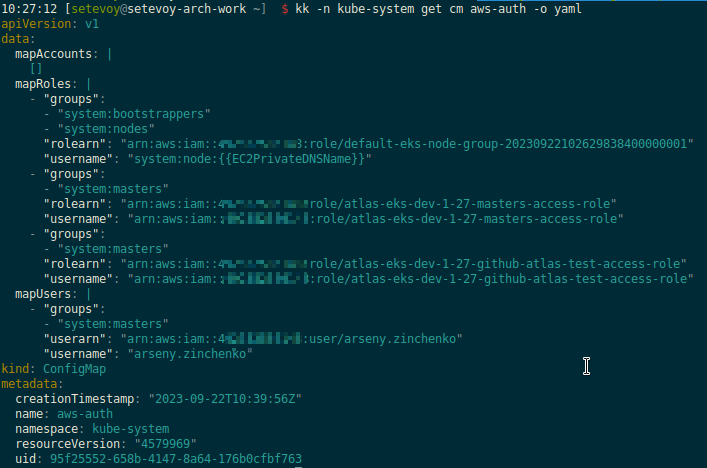

Deploy, and check the aws-auth ConfigMap, where we now have a new object in the mapRoles:

### Workflow: Deploy Dev manually

Now that we have the roles, we can create the Workflows.

We'll start with a manual deployment to Dev because it's the easiest. Then, once we have a working build and processes, we will do the rest.

#### Triggers

What are the conditions to launch a build?

- `workflow_dispatch`: from any branch or tag
- on `push` to master: in the Backend repository, the master branch accepts pushes only from Pull Requests, so there will be no other pushes here

You can also do an additional check in jobs, for example:

```yaml
- name: Build
  if: github.event_name == 'pull_request' && github.event.action == 'closed' && github.event.pull_request.merged == true
```

See Trigger workflow only on pull request MERGE.
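The extra `merged == true` check is needed because GitHub sends `action: closed` both when a Pull Request is merged and when it is closed without merging. The `pull_request.merged` field tells the two cases apart.

Here is a minimal Python sketch of that `if:` expression, evaluated against hand-written, trimmed-down event payloads. The dictionaries are illustrative, not real webhook dumps.

```python
def should_build(event_name: str, event: dict) -> bool:
    """Python version of the `if:` expression above."""
    return (
        event_name == "pull_request"
        and event.get("action") == "closed"
        and event.get("pull_request", {}).get("merged") is True
    )


# Illustrative payloads, reduced to the fields the condition reads
merged = {"action": "closed", "pull_request": {"merged": True}}
closed_unmerged = {"action": "closed", "pull_request": {"merged": False}}
opened = {"action": "opened", "pull_request": {"merged": False}}

for name, payload in [("merged", merged), ("closed_unmerged", closed_unmerged), ("opened", opened)]:
    print(name, should_build("pull_request", payload))
```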

#### Environments and Variables

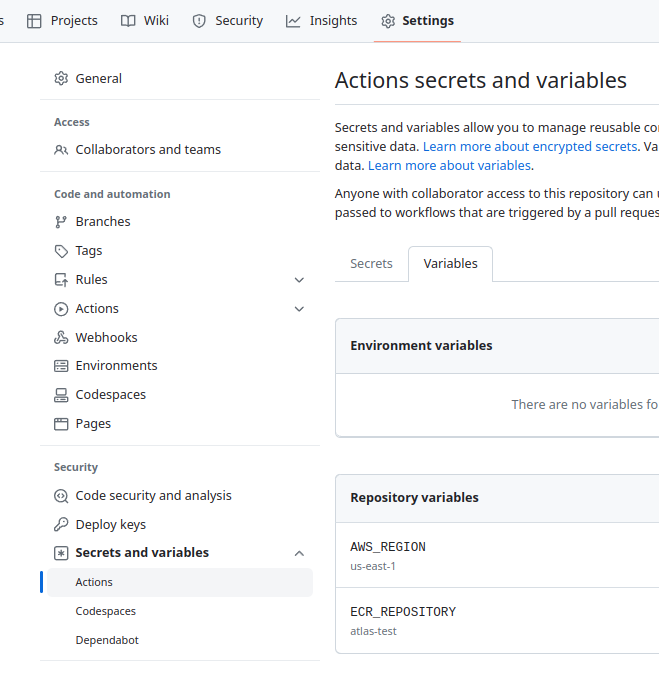

At the repository level, add variables in Settings > Secrets and variables > Actions:

- `ECR_REPOSITORY`
- `AWS_REGION`

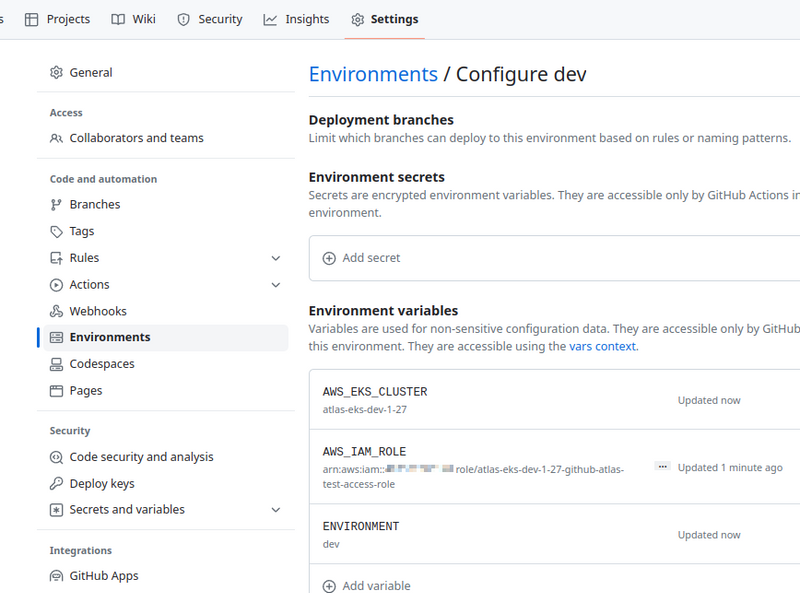

Create a new GitHub Environment “dev” and add its variables:

- `AWS_IAM_ROLE`: the role ARN, taken from the outputs of the EKS deployment with Terraform
- `AWS_EKS_CLUSTER`: the cluster name, taken from the outputs of the EKS deployment with Terraform
- `ENVIRONMENT`: "dev"

#### Job: Docker Build

We will test with a minimal Dockerfile — create it in the root of the repository:

```dockerfile
FROM alpine
```

Create a `.github/workflows` directory:

```
$ mkdir -p .github/workflows
```

And a file in it, `.github/workflows/deploy-dev.yml`:

```yaml
name: Deploy to EKS

on:
  workflow_dispatch:
  push:
    branches: [master]

permissions:
  id-token: write
  contents: read

jobs:
  build-docker:
    name: Build Docker image
    runs-on: ubuntu-latest
    environment: dev
    steps:
      - name: "Setup: checkout code"
        uses: actions/checkout@v3

      - name: "Setup: Configure AWS credentials"
        uses: aws-actions/configure-aws-credentials@v2
        with:
          role-to-assume: ${{ vars.AWS_IAM_ROLE }}
          role-session-name: github-actions-test
          aws-region: ${{ vars.AWS_REGION }}

      - name: "Setup: Login to Amazon ECR"
        id: login-ecr
        uses: aws-actions/amazon-ecr-login@v1
        with:
          mask-password: 'true'

      - name: "Setup: create commit_sha"
        id: set_sha
        run: echo "sha_short=$(git rev-parse --short HEAD)" >> $GITHUB_OUTPUT

      - name: "Build: create image, set tag, push to Amazon ECR"
        env:
          ECR_REGISTRY: ${{ steps.login-ecr.outputs.registry }}
          ECR_REPOSITORY: ${{ vars.ECR_REPOSITORY }}
          IMAGE_TAG: ${{ steps.set_sha.outputs.sha_short }}
        run: |
          docker build -t $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG -f Dockerfile .
          docker push $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG
```

Here, we have a job that runs in the `dev` GitHub Environment, and:

- with `actions/checkout`, checks out the code on the GitHub Runner
- with `aws-actions/configure-aws-credentials`, logs in to AWS by executing AssumeRole on the role we created earlier
- with `aws-actions/amazon-ecr-login`, logs in to AWS ECR (I was wondering how it works, but it's 54,000 lines of JS code!)
- generates the `sha_short` output, which contains the short Commit ID
- and performs `docker build` and `docker push`
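How does `sha_short` get from the `echo ... >> $GITHUB_OUTPUT` line to `steps.set_sha.outputs.sha_short`? The runner gives each step a file path in `$GITHUB_OUTPUT`. The step appends `name=value` lines to it, and after the step finishes, the runner reads them back as that step's outputs.

Below is a simplified Python sketch of that round trip and of how the image reference is assembled from the three `env` values. It handles only single-line `name=value` entries; the real runner also supports a multiline delimiter syntax. The registry host and the SHA are made-up placeholders.

```python
import os
import tempfile


def read_step_outputs(path: str) -> dict[str, str]:
    """Parse single-line `name=value` entries, as written by `echo ... >> $GITHUB_OUTPUT`."""
    outputs: dict[str, str] = {}
    with open(path, encoding="utf-8") as fh:
        for line in fh:
            line = line.rstrip("\n")
            if "=" in line:
                name, value = line.split("=", 1)  # the value itself may contain "="
                outputs[name] = value
    return outputs


def image_ref(registry: str, repository: str, tag: str) -> str:
    # Same shape as $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG in the workflow
    return f"{registry}/{repository}:{tag}"


with tempfile.TemporaryDirectory() as tmp:
    github_output = os.path.join(tmp, "github_output")
    # What the "Setup: create commit_sha" step effectively appends (placeholder SHA)
    with open(github_output, "a", encoding="utf-8") as fh:
        fh.write("sha_short=1a2b3c4\n")
    outputs = read_step_outputs(github_output)

registry = "123456789012.dkr.ecr.us-east-1.amazonaws.com"  # placeholder account ID
print(image_ref(registry, "atlas-test", outputs["sha_short"]))
```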

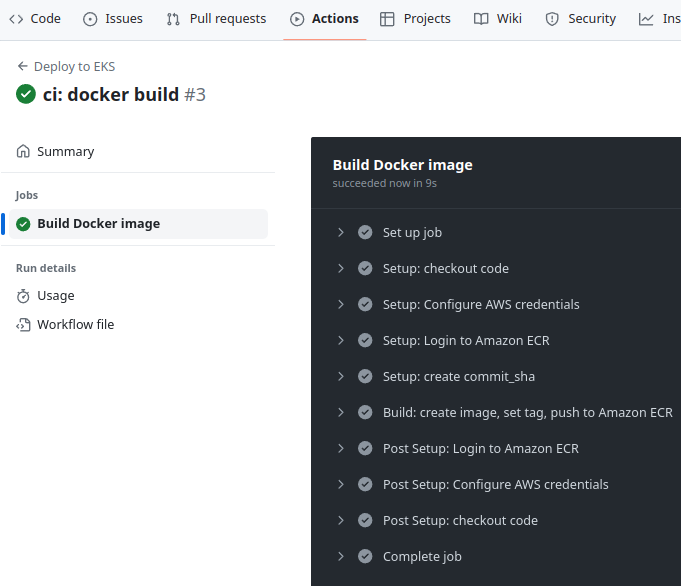

Push it to the repository, merge it into master, and run the build:

Check the image in ECR:

#### Job: Helm deploy

The next step is to deploy the Helm chart to EKS. Let's quickly make a test chart:

```
$ mkdir -p helm/templates
```

In the `helm` directory, create a `Chart.yaml` file:

```yaml
apiVersion: v2
name: test-chart
description: A Helm chart
type: application
version: 0.1.0
appVersion: "1.16.0"
```

And `templates/deployment.yaml`:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-app
  template:
    metadata:
      labels:
        app: test-app
    spec:
      containers:
        - name: test-container
          image: {{ .Values.image.repo }}:{{ .Values.image.tag }}
          imagePullPolicy: Always
```

And the `values.yaml` file:

```yaml
image:
  repo: 492***148.dkr.ecr.us-east-1.amazonaws.com/atlas-test
  tag: latest
```

#### Passing variables between GitHub Action Jobs

Next, there is a question about the Workflow itself: how do we pass the Docker tag that we created in the `build-docker` job?

We could run the Helm deployment in the same job and reuse the same `IMAGE_TAG` variable.

Or we can create a separate job and pass the tag value between jobs.

Splitting this into two jobs isn't strictly necessary. But first, the workflow structure looks more logical that way, and second, I want to try all sorts of things in GitHub Actions, so let's pass the variable value between the jobs.

To do this, add `outputs` to the first job:

```yaml
...
jobs:
  build-docker:
    name: Build Docker image
    runs-on: ubuntu-latest
    environment: dev
    outputs:
      image_tag: ${{ steps.set_sha.outputs.sha_short }}
...
```

Then we'll use it in a new Helm job. The `needs: build-docker` line makes this job wait for the build and gives it access to the first job's outputs through the `needs` context, so we pass `needs.build-docker.outputs.image_tag` in `values` as `image.tag`:

```yaml
...
  deploy-helm:
    name: Deploy Helm chart
    runs-on: ubuntu-latest
    environment: dev
    needs: build-docker
    steps:
      - name: "Setup: checkout code"
        uses: actions/checkout@v3

      - name: "Setup: Configure AWS credentials"
        uses: aws-actions/configure-aws-credentials@v2
        with:
          role-to-assume: ${{ vars.AWS_IAM_ROLE }}
          role-session-name: github-actions-test
          aws-region: ${{ vars.AWS_REGION }}

      - name: "Setup: Login to Amazon ECR"
        id: login-ecr
        uses: aws-actions/amazon-ecr-login@v1
        with:
          mask-password: 'true'

      - name: Deploy Helm
        uses: bitovi/github-actions-deploy-eks-helm@v1.2.6
        with:
          cluster-name: ${{ vars.AWS_EKS_CLUSTER }}
          namespace: ${{ vars.ENVIRONMENT }}-testing-ns
          name: test-release
          # may enable roll-back on fail
          #atomic: true
          values: image.tag=${{ needs.build-docker.outputs.image_tag }}
          timeout: 60s
          helm-extra-args: --debug
```
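The `values: image.tag=...` input is a comma-separated list of `key=value` overrides, which, as I understand it, the action hands to Helm as `--set`-style values. The dotted key addresses a nested field in `values.yaml`.

Below is a simplified Python sketch of that merge. It assumes only plain `a.b=c` pairs; real `--set` also handles lists, escaping and type conversion, none of which is modeled here. `apply_set` is a hypothetical helper, not part of Helm or the action.

```python
import copy
from typing import Any


def apply_set(values: dict[str, Any], overrides: str) -> dict[str, Any]:
    """Apply 'a.b=c,d=e' style overrides to a copy of a nested values dict."""
    merged = copy.deepcopy(values)  # keep the chart defaults untouched
    for pair in filter(None, overrides.split(",")):
        key, value = pair.split("=", 1)
        *parents, leaf = key.split(".")
        node = merged
        for part in parents:
            node = node.setdefault(part, {})
        node[leaf] = value
    return merged


# Defaults from helm/values.yaml (account ID masked as in the chart)
defaults = {"image": {"repo": "492***148.dkr.ecr.us-east-1.amazonaws.com/atlas-test", "tag": "latest"}}

# What the workflow passes, with a placeholder short SHA
values = apply_set(defaults, "image.tag=1a2b3c4")

# Roughly what `{{ .Values.image.repo }}:{{ .Values.image.tag }}` renders to
print(f"{values['image']['repo']}:{values['image']['tag']}")
```

Since `repo` is not overridden, the Pod gets the repository from `values.yaml` with the freshly built tag.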

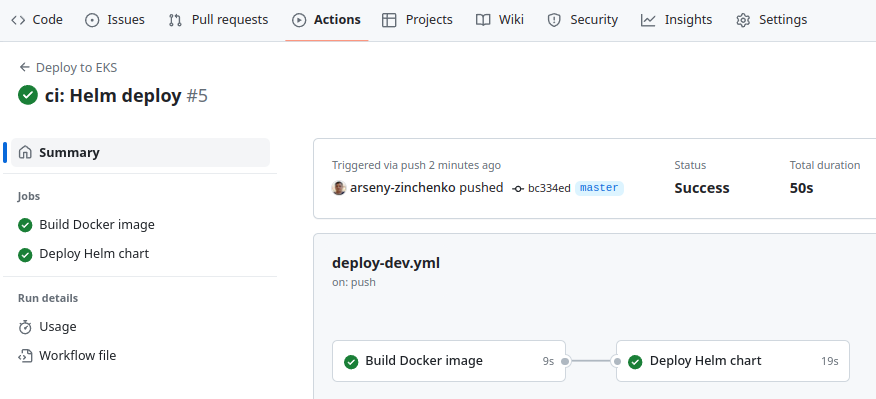

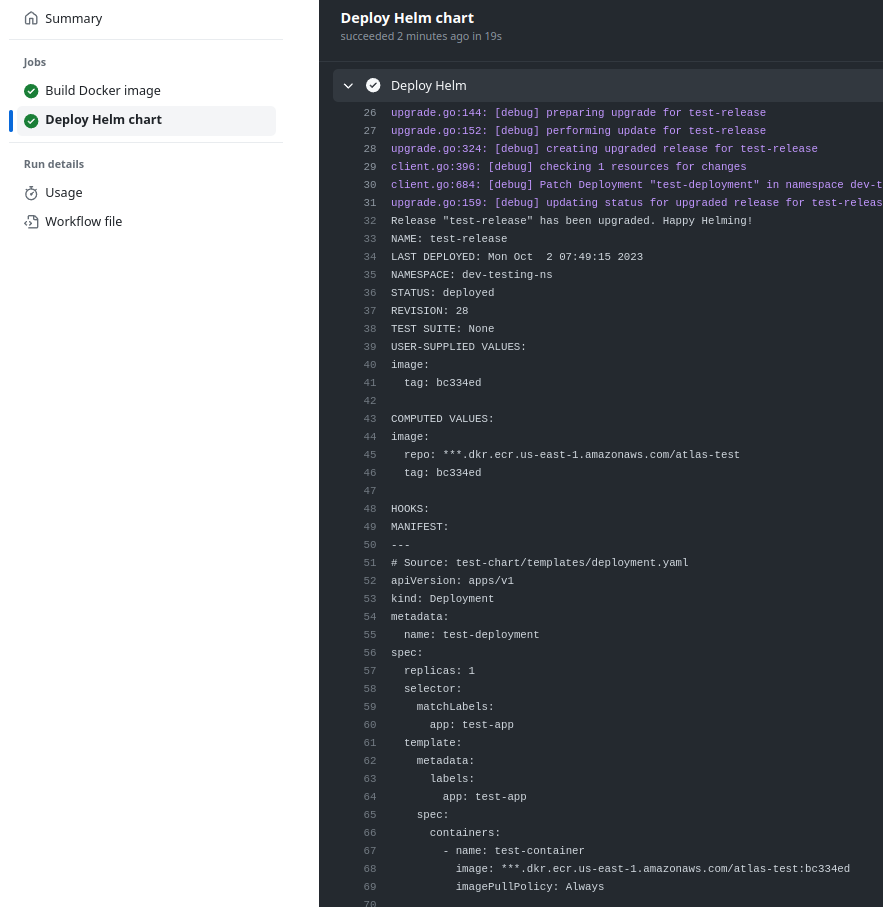

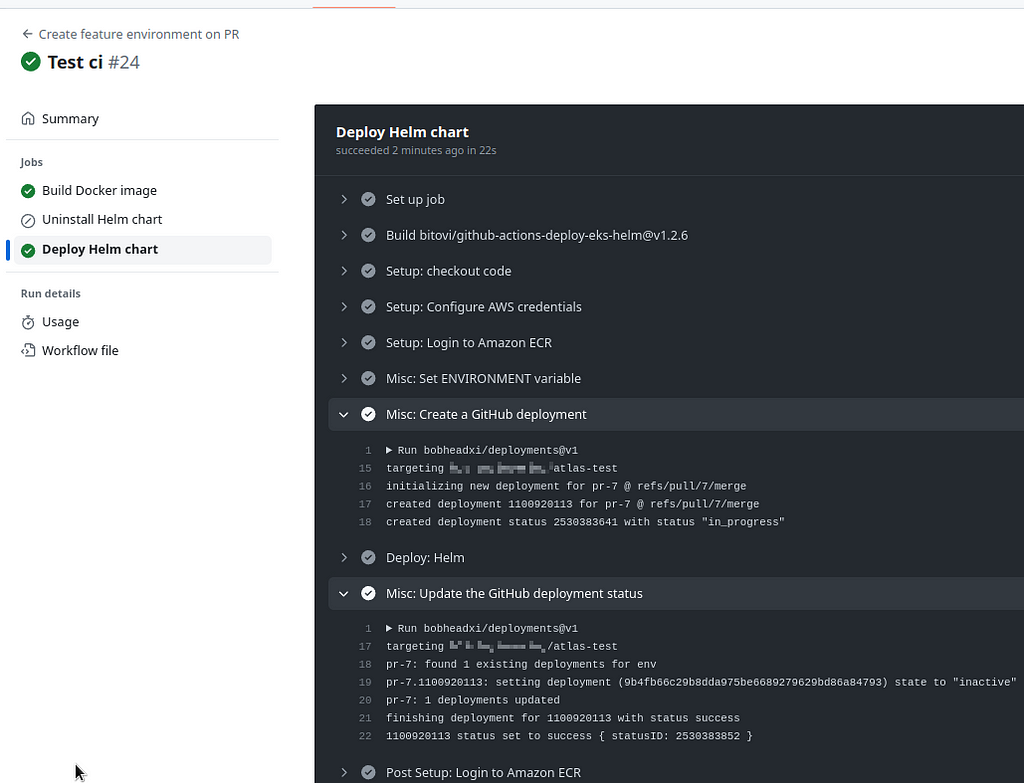

We push the changes and check the deploy:

#### Job: creating Git Tag

The next step is to create a Git Tag. Here we can use the [github-tag-action](https://github.com/mathieudutour/github-tag-action) Action, which under the hood checks the commit header and, depending on it, increments the _major_, _minor,_ or _patch_ version of the tag.

So let’s take a look at the Commit Message Format first, although this is actually a topic that could be covered in a separate post.

#### Git commit message format

See [Understanding Semantic Commit Messages Using Git and Angular](https://nitayneeman.com/posts/understanding-semantic-commit-messages-using-git-and-angular/).

In short, the header is written in the format “_type(scope): subject_”, for example: `git commit -m "ci(actions): add new workflow"`.

The `type` can be roughly divided into _development_ and _production_. That is, changes that relate to development and developers, and changes that relate to the production environment and end-users:

- **development**:
  - `build` (formerly `chore`): changes that are related to the build and packages (`npm build`, `npm install`, etc.)
  - `ci`: CI/CD changes (workflow files, `terraform apply`, etc.)
  - `docs`: changes in project documentation
  - `refactor`: code refactoring, such as new variable names or code simplification
  - `style`: code changes in indentation, commas, quotation marks, etc.
  - `test`: changes in the tests on the code (unit tests, integration tests, etc.)
- **production**:
  - `feat`: new features, functionality
  - `fix`: bug fixes
  - `perf`: changes related to performance
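To see how these types can turn into version numbers, here is a rough Python sketch of the kind of rule `github-tag-action` applies. It assumes the Angular-style defaults I'd expect:

- `feat` bumps the minor version
- a `BREAKING CHANGE` footer bumps the major version
- anything else (including `fix`, `perf`, and `ci`) falls back to a patch bump

The real action is configurable (for example, its `default_bump` and custom release rules), so treat this as an illustration, not its exact algorithm. The `eks-` prefix matches the `tag_prefix` used in the job.

```python
import re

# type(scope): subject, with the scope optional
HEADER_RE = re.compile(r"^(?P<type>[a-z]+)(?:\((?P<scope>[^)]*)\))?: (?P<subject>.+)$")


def bump_for(message: str) -> str:
    """Return 'major', 'minor', or 'patch' for a commit message."""
    header, _, body = message.partition("\n")
    if "BREAKING CHANGE" in body:
        return "major"
    match = HEADER_RE.match(header)
    if match and match.group("type") == "feat":
        return "minor"
    return "patch"  # fix, perf, and (assumed default) every other type


def next_tag(last_tag: str, message: str, prefix: str = "eks-") -> str:
    major, minor, patch = (int(n) for n in last_tag.removeprefix(prefix).split("."))
    bump = bump_for(message)
    if bump == "major":
        major, minor, patch = major + 1, 0, 0
    elif bump == "minor":
        minor, patch = minor + 1, 0
    else:
        patch += 1
    return f"{prefix}{major}.{minor}.{patch}"


print(next_tag("eks-0.1.0", "ci(actions): add git tag job"))
print(next_tag("eks-0.1.0", "feat(api): add /health endpoint"))
print(next_tag("eks-0.1.0", "fix(api): handle empty body\n\nBREAKING CHANGE: the response format changed"))
```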

So add a new `job`:

```yaml
...
  create_tag:
    name: "Create Git version tag"
    runs-on: ubuntu-latest
    timeout-minutes: 5
    needs: deploy-helm
    permissions:
      contents: write
    outputs:
      new_tag: ${{ steps.tag_version.outputs.new_tag }}
    steps:
      - name: "Checkout"
        uses: actions/checkout@v3

      - name: "Misc: Bump version and push tag"
        id: tag_version
        uses: mathieudutour/github-tag-action@v6.1
        with:
          github_token: ${{ secrets.GITHUB_TOKEN }}
          tag_prefix: eks-
```

In the job:

- `needs` makes it run only after the Helm deploy.
- `permissions` allows `github-tag-action` to push tags to the repository.
- `tag_prefix` is set because the Backend repository, where all this will work later, already has standard tags with the "v" prefix.

The `secrets.GITHUB_TOKEN` token is created automatically by GitHub Actions for each workflow run, so there is nothing to configure for it.

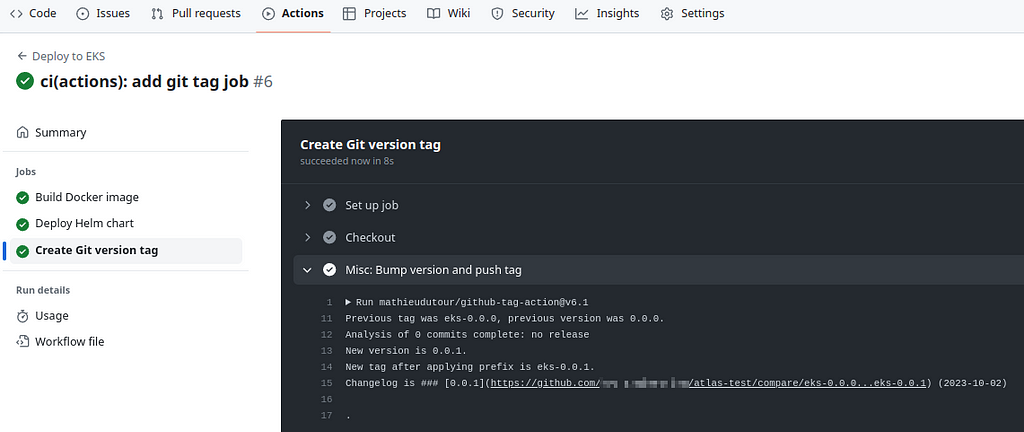

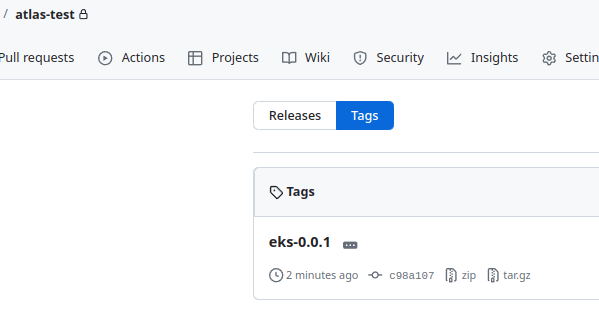

Push with the `git add -A && git commit -m "ci(actions): add git tag job" && git push`, and we have a new tag:

### Workflow: Deploy Feature Environment

Okay — we have a Docker build, and we have a Helm-chart deployment. Everything is deployed to the Dev environment, and everything works.

Let’s add one more workflow that also deploys to the Dev EKS cluster, but instead of the Dev environment, it deploys to a temporary Kubernetes Namespace, so that developers can test their features independently of the dev environment.

To do this, we need:

- trigger a workflow when creating a Pull Request with the “deploy” label

- create a custom name for the new Namespace, using the Pull Request number

- build the Docker image

- deploy the Helm chart in a new Namespace

Create a new file, `create-feature-env-on-pr.yml`.

There will be three jobs in it:

- Docker build

- Deploy feature-env

- Destroy feature-env

#### Conditions for running Jobs with `if` and `github.event` context

The “Docker build” and “Deploy” jobs should run when a Pull Request is created and labeled “deploy”, and the “Destroy” job should run when a Pull Request labeled “deploy” is closed.

For the workflow trigger, we set the condition `on.pull_request`. Then we will have a [PullRequestEvent](https://docs.github.com/en/rest/overview/github-event-types?apiVersion=2022-11-28#event-payload-object-for-pullrequestevent) with a set of fields that we can check.

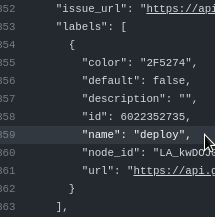

For some reason, the documentation does not list the `label` field, which [was discussed back in 2017](https://github.com/google/go-github/issues/521) (!). In fact, though, the field is there.

An additional `step` that prints the entire payload helps a lot here.

Create a branch for tests, and add the first job in the file `create-feature-env-on-pr.yml`:

```yaml
name: "Create feature environment on PR"

on:
  pull_request:
    types: [opened, edited, closed, reopened, labeled, unlabeled, synchronize]

permissions:
  id-token: write
  contents: read

concurrency:
  group: deploy-${{ github.event.number }}
  cancel-in-progress: false

jobs:
  print-event:
    name: Print event
    runs-on: ubuntu-latest
    steps:
      - name: Dump GitHub context
        env:
          GITHUB_CONTEXT: ${{ toJson(github.event) }}
        run: |
          echo "$GITHUB_CONTEXT"
```

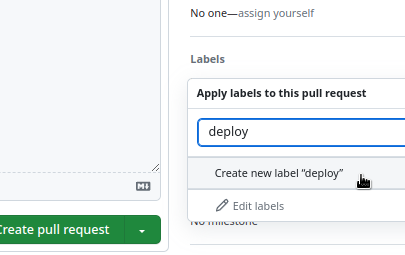

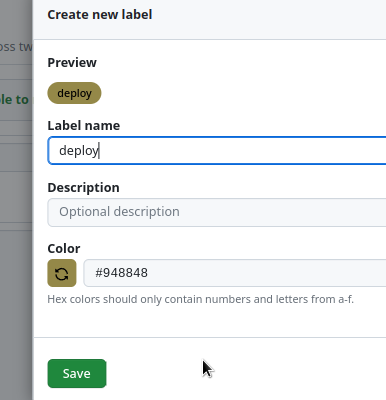

Push to the repository, open a Pull Request, and create a new label:

And our workflow started:

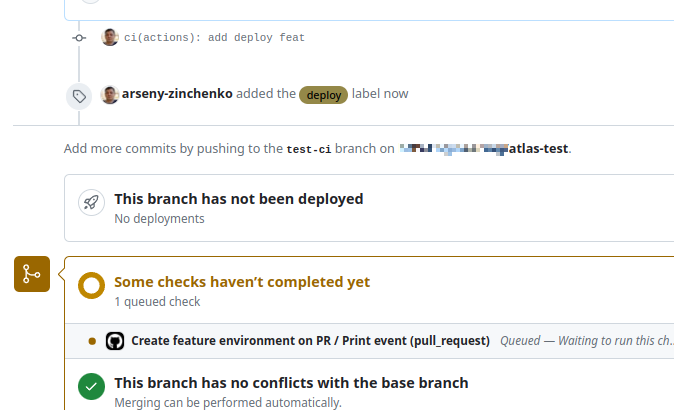

And it gave us all the data from the `event`:

Now that we have the data, we can think about how we will check the conditions for the jobs:

- Create an Environment if a Pull Request is `opened`, `edited`, `reopened`, or `synchronize`d (a new commit is added to the source branch):
  - but a Pull Request can be without the label; then we don't need to deploy it
  - or the label can be added to an existing Pull Request; then the event will be `labeled`, and we need to deploy
- Delete an Environment if a Pull Request is `closed`:
  - but it can be without the label; then we don't need to run the uninstall job
  - or the “deploy” label can be removed from an existing Pull Request; then the event will be `unlabeled`, and we need to remove the Environment

The trigger conditions for the Workflow now look like this:

```yaml
on:
  pull_request:
    types: [opened, edited, closed, reopened, labeled, unlabeled, synchronize]
```

#### Job: Deploy when creating a Pull Request with the “deploy” label

We have already seen above that when a Pull Request is created with the “deploy” label, the event has `"action": "opened"` and `pull_request.labels[].name: "deploy"`.

Then we can check the condition as:

```yaml
if: contains(github.event.pull_request.labels.*.name, 'deploy')
```

See [`contains`](https://docs.github.com/en/actions/learn-github-actions/expressions#contains).

But when a Pull Request is closed, it still has the “deploy” label, so the close event would also trigger our job.

Therefore, add one more condition:

```yaml
if: contains(github.event.pull_request.labels.*.name, 'deploy') && github.event.action != 'closed'
```

So a test job that will run the “deploy” may look like this:

```yaml
...
jobs:
  print-event:
    name: Print event
    runs-on: ubuntu-latest
    steps:
      - name: Dump GitHub context
        env:
          GITHUB_CONTEXT: ${{ toJson(github.event) }}
        run: |
          echo "$GITHUB_CONTEXT"

  deploy:
    name: Deploy
    if: contains(github.event.pull_request.labels.*.name, 'deploy') && github.event.action != 'closed'
    runs-on: ubuntu-latest
    steps:
      - name: Run deploy
        run: echo "This is deploy"
```

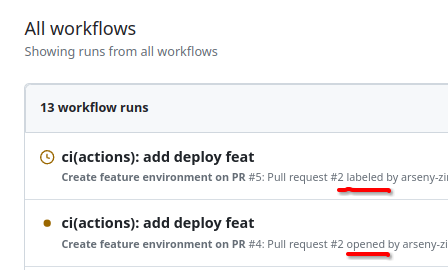

Push it, create a PR, check it, and see that the job has launched twice:

This is because creating a PR with a label produces two events: the creation of the PR itself (the “`opened`” event) and the addition of the label (the “`labeled`” event).

It looks a bit weird to me, but apparently GitHub can't handle this case with a single event.

Therefore, we can simply remove `opened` from the `on.pull_request` triggers. Then, when a PR is created with a label, the job will be started only by the “`labeled`” event.

Another thing looks odd, although we don't use it here:

- to check for a _string_ with `contains()`, we use the form `contains(github.event.pull_request.labels.*.name, 'deploy')`: first the object to search in, _then the string_ we are looking for
- but to check for one of several _strings_, the format is `contains(fromJSON('["labeled", "closed"]'), github.event.action)`: _first the list of strings_, then the value we are looking for among them

In fact, the signature is `contains(search, item)` in both cases. It only looks reversed because here the list is what we search in.

Note the `fromJSON()`. Without it, `'["labeled", "closed"]'` is just a string, and `contains()` does a case-insensitive substring check instead of an element check.
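Here is a small Python model of `contains(search, item)`, written from the behavior described in the GitHub expressions documentation:

- if `search` is an array, it checks for an element equal to `item`
- if `search` is a string, it does a substring check
- comparisons ignore case

This is an approximation that skips the documented type-casting rules, and `gh_contains` is a made-up name.

```python
import json
from typing import Any


def gh_contains(search: Any, item: Any) -> bool:
    """Approximate GitHub Actions' contains(search, item), case-insensitively."""
    needle = str(item).lower()
    if isinstance(search, list):
        # Array: true if any element equals the item
        return any(str(element).lower() == needle for element in search)
    # String: true if the item is a substring
    return needle in str(search).lower()


labels = ["deploy", "bug"]
print(gh_contains(labels, "deploy"))                              # array membership
print(gh_contains('["labeled", "closed"]', "label"))              # plain string: substring match
print(gh_contains(json.loads('["labeled", "closed"]'), "label"))  # like fromJSON(): element match
```

The middle call is the pitfall: without `fromJSON()`, `'label'` counts as a match only because it is a substring of the literal text.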

Okay, anyway, let’s move on. There is one more launch condition: the “deploy” label is added to an existing PR that was created without it and therefore did not trigger our job. In that case, we need to launch the job.

We can check this with the following condition:

```
github.event.action == 'labeled' && github.event.label.name == 'deploy'
```

To run the job when either the first condition or this one is true, we use the [“or”](https://docs.github.com/en/actions/learn-github-actions/expressions#operators) operator, `||`, so our `if` will look like this:

```yaml
if: |
  (contains(github.event.pull_request.labels.*.name, 'deploy') && github.event.action != 'closed') ||
  (github.event.action == 'labeled' && github.event.label.name == 'deploy')
```

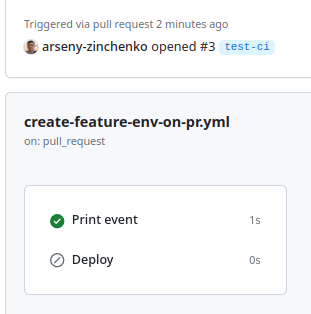

To test it, create a PR without a label, and only the “Print event” job runs:

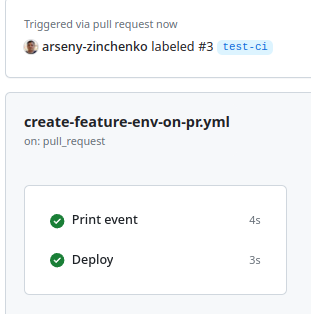

Then add the “deploy” label, and both jobs run together:

#### Job: Destroy on closing a Pull Request with the “deploy” label

The only thing left to do is to make a `job` that deletes the feature-env when a Pull Request with the “deploy” label is closed.

The conditions here are similar to those we used for the deployments:

```yaml
...
  destroy:
    name: Destroy
    if: |
      (contains(github.event.pull_request.labels.*.name, 'deploy') && github.event.action == 'closed') ||
      (github.event.action == 'unlabeled' && github.event.label.name == 'deploy')
    runs-on: ubuntu-latest
    steps:
      - name: Run destroy
        run: echo "This is destroy"
```

That is:

- run the job if `action == 'closed'` and `labels.*.name` contains `'deploy'`, **OR**
- run the job if `action == 'unlabeled'` and `event.label.name == 'deploy'`
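Because these expressions are easy to get subtly wrong, it can help to model them outside GitHub. Below is a minimal Python sketch that mirrors the `deploy` and `destroy` `if:` conditions and runs them against a few hand-made `pull_request` events.

The events are trimmed down, not real payloads, but they use the same fields as above: `action`, `label.name`, and `pull_request.labels[].name`. All helper names are hypothetical.

```python
from typing import Optional


def labels_of(event: dict) -> list[str]:
    """Names of all labels currently on the Pull Request."""
    return [label["name"] for label in event["pull_request"]["labels"]]


def should_deploy(event: dict) -> bool:
    """Mirror of the `deploy` job's if: (case-sensitive here, unlike GitHub)."""
    action = event["action"]
    return ("deploy" in labels_of(event) and action != "closed") or (
        action == "labeled" and event.get("label", {}).get("name") == "deploy"
    )


def should_destroy(event: dict) -> bool:
    """Mirror of the `destroy` job's if:."""
    action = event["action"]
    return ("deploy" in labels_of(event) and action == "closed") or (
        action == "unlabeled" and event.get("label", {}).get("name") == "deploy"
    )


def pr_event(action: str, labels: list[str], label: Optional[str] = None) -> dict:
    """Build a trimmed-down event; `label` is the label just added or removed."""
    event = {"action": action, "pull_request": {"labels": [{"name": n} for n in labels]}}
    if label is not None:
        event["label"] = {"name": label}
    return event


# Creating a PR with the label emits both `opened` and `labeled`, then the PR is merged
scenario = [
    pr_event("opened", ["deploy"]),
    pr_event("labeled", ["deploy"], label="deploy"),
    pr_event("closed", ["deploy"]),
]
for event in scenario:
    print(event["action"], should_deploy(event), should_destroy(event))
```

The first two rows both report `True` for deploy, which is the double launch seen above.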

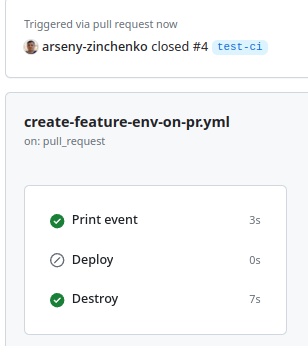

Let’s check: create a PR with the “deploy” label, and the Deploy job is launched. Merge this PR, and the Destroy job runs:

So the full workflow file now looks like this:

```yaml
name: "Create feature environment on PR"

on:
  pull_request:
    types: [opened, edited, closed, reopened, labeled, unlabeled, synchronize]

permissions:
  id-token: write
  contents: read

concurrency:
  group: deploy-${{ github.event.number }}
  cancel-in-progress: false

jobs:
  print-event:
    name: Print event
    runs-on: ubuntu-latest
    steps:
      - name: Dump GitHub context
        env:
          GITHUB_CONTEXT: ${{ toJson(github.event) }}
        run: |
          echo "$GITHUB_CONTEXT"

  deploy:
    name: Deploy
    if: |
      (contains(github.event.pull_request.labels.*.name, 'deploy') && github.event.action != 'closed') ||
      (github.event.action == 'labeled' && github.event.label.name == 'deploy')
    runs-on: ubuntu-latest
    steps:
      - name: Run deploy
        run: echo "This is deploy"

  destroy:
    name: Destroy
    if: |
      (contains(github.event.pull_request.labels.*.name, 'deploy') && github.event.action == 'closed') ||
      (github.event.action == 'unlabeled' && github.event.label.name == 'deploy')
    runs-on: ubuntu-latest
    steps:
      - name: Run destroy
        run: echo "This is destroy"
```

Well, everything seems to be working.

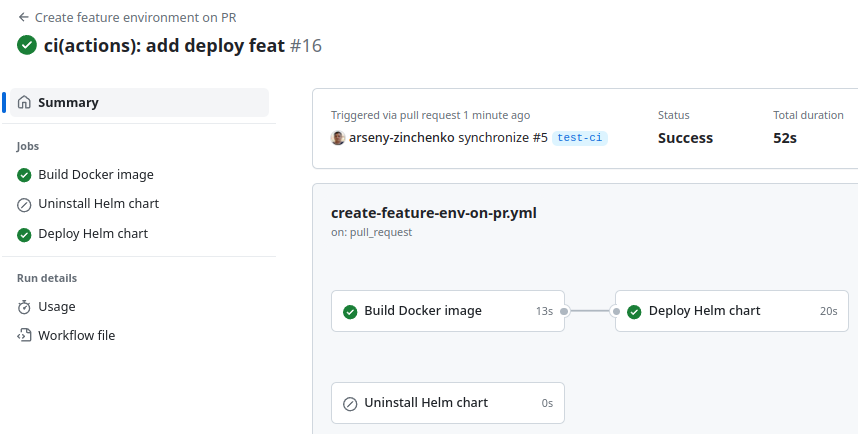

#### Creating Feature Environment

Everything here is already familiar: we'll reuse the jobs that we made for the Deploy Dev Workflow, changing just a couple of parameters.

Describe the jobs in the same `create-feature-env-on-pr.yml` file.

The first one, the Docker build, stays the same; just add the `if`:

```yaml
...
  build-docker:
    name: Build Docker image
    if: |
      (contains(github.event.pull_request.labels.*.name, 'deploy') && github.event.action != 'closed') ||
      (github.event.action == 'labeled' && github.event.label.name == 'deploy')
    runs-on: ubuntu-latest
    timeout-minutes: 30
    environment: dev
    outputs:
      image_tag: ${{ steps.set_sha.outputs.sha_short }}
    steps:
      - name: "Setup: checkout code"
        uses: actions/checkout@v3

      - name: "Setup: Configure AWS credentials"
        uses: aws-actions/configure-aws-credentials@v2
        with:
          role-to-assume: ${{ vars.AWS_IAM_ROLE }}
          role-session-name: github-actions-test
          aws-region: ${{ vars.AWS_REGION }}

      - name: "Setup: Login to Amazon ECR"
        id: login-ecr
        uses: aws-actions/amazon-ecr-login@v1
        with:
          mask-password: 'true'

      - name: "Setup: create commit_sha"
        id: set_sha
        run: echo "sha_short=$(git rev-parse --short HEAD)" >> $GITHUB_OUTPUT

      - name: "Build: create image, set tag, push to Amazon ECR"
        env:
          ECR_REGISTRY: ${{ steps.login-ecr.outputs.registry }}
          ECR_REPOSITORY: ${{ vars.ECR_REPOSITORY }}
          IMAGE_TAG: ${{ steps.set_sha.outputs.sha_short }}
        run: |
          docker build -t $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG -f Dockerfile .
          docker push $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG
```

In the Helm deploy job, we also add an `if` and a new `step`, “_Misc: Set ENVIRONMENT variable_”. It builds a new value for the `ENVIRONMENT` variable from `github.event.number`, the Pull Request number. Writing it to `$GITHUB_ENV` makes it available as `env.ENVIRONMENT` in the following steps of the job. We then use it in the name of the Kubernetes Namespace where the chart will be deployed:

```yaml
...
  deploy-helm:
    name: Deploy Helm chart
    if: |
      (contains(github.event.pull_request.labels.*.name, 'deploy') && github.event.action != 'closed') ||
      (github.event.action == 'labeled' && github.event.label.name == 'deploy')
    runs-on: ubuntu-latest
    timeout-minutes: 15
    environment: dev
    needs: build-docker
    steps:
      - name: "Setup: checkout code"
        uses: actions/checkout@v3

      - name: "Setup: Configure AWS credentials"
        uses: aws-actions/configure-aws-credentials@v2
        with:
          role-to-assume: ${{ vars.AWS_IAM_ROLE }}
          role-session-name: github-actions-test
          aws-region: ${{ vars.AWS_REGION }}

      - name: "Setup: Login to Amazon ECR"
        id: login-ecr
        uses: aws-actions/amazon-ecr-login@v1
        with:
          mask-password: 'true'

      - name: "Misc: Set ENVIRONMENT variable"
        id: set_stage
        run: echo "ENVIRONMENT=pr-${{ github.event.number }}" >> $GITHUB_ENV

      - name: "Deploy: Helm"
        uses: bitovi/github-actions-deploy-eks-helm@v1.2.6
        with:
          cluster-name: ${{ vars.AWS_EKS_CLUSTER }}
          namespace: ${{ env.ENVIRONMENT }}-testing-ns
          name: test-release
          #atomic: true
          values: image.tag=${{ needs.build-docker.outputs.image_tag }}
          timeout: 60s
          helm-extra-args: --debug
```
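The `ENVIRONMENT` value ends up in the Namespace name, and Kubernetes Namespace names must be valid RFC 1123 DNS labels:

- at most 63 characters
- only lowercase alphanumerics or `-`
- starting and ending with an alphanumeric

A PR number always produces a short, valid name. If you later build the name from something else, such as branch names, a check like the following Python sketch would catch invalid values before Helm does. The helper names are hypothetical.

```python
import re

# RFC 1123 label, as required for Kubernetes Namespace names
DNS_LABEL_RE = re.compile(r"^[a-z0-9]([-a-z0-9]*[a-z0-9])?$")


def feature_namespace(pr_number: int, suffix: str = "testing-ns") -> str:
    """Same name as `${{ env.ENVIRONMENT }}-testing-ns` with ENVIRONMENT=pr-<number>."""
    return f"pr-{pr_number}-{suffix}"


def is_valid_namespace(name: str) -> bool:
    return len(name) <= 63 and DNS_LABEL_RE.fullmatch(name) is not None


print(feature_namespace(5), is_valid_namespace(feature_namespace(5)))
print(is_valid_namespace("feature/JIRA-123-testing-ns"))  # "/" and uppercase are not allowed
```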

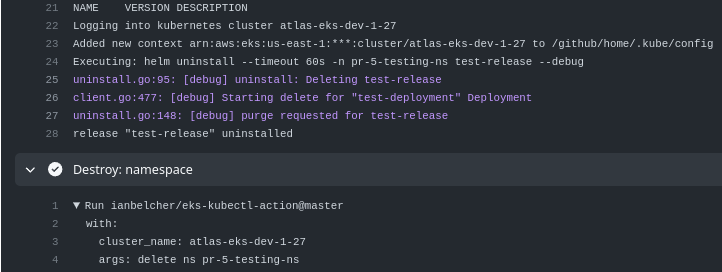

Lastly, we add a new job that uninstalls the Helm release. It also needs a separate step that removes the Namespace, because Helm itself cannot do this during `uninstall` (see [Add option to delete the namespace created during install](https://github.com/helm/helm/issues/1464)). For the Namespace deletion, we use [`ianbelcher/eks-kubectl-action`](https://github.com/ianbelcher/eks-kubectl-action):

```yaml
...
  destroy-helm:
    name: Uninstall Helm chart
    if: |
      (contains(github.event.pull_request.labels.*.name, 'deploy') && github.event.action == 'closed') ||
      (github.event.action == 'unlabeled' && github.event.label.name == 'deploy')
    runs-on: ubuntu-latest
    timeout-minutes: 15
    environment: dev
    steps:
      - name: "Setup: checkout code"
        uses: actions/checkout@v3

      - name: "Setup: Configure AWS credentials"
        uses: aws-actions/configure-aws-credentials@v2
        with:
          role-to-assume: ${{ vars.AWS_IAM_ROLE }}
          role-session-name: github-actions-test
          aws-region: ${{ vars.AWS_REGION }}

      - name: "Misc: Set ENVIRONMENT variable"
        id: set_stage
        run: echo "ENVIRONMENT=pr-${{ github.event.number }}" >> $GITHUB_ENV

      - name: "Destroy: Helm"
        uses: bitovi/github-actions-deploy-eks-helm@v1.2.6
        with:
          cluster-name: ${{ vars.AWS_EKS_CLUSTER }}
          namespace: ${{ env.ENVIRONMENT }}-testing-ns
          action: uninstall
          name: test-release
          #atomic: true
          timeout: 60s
          helm-extra-args: --debug

      - name: "Destroy: namespace"
        uses: ianbelcher/eks-kubectl-action@master
        with:
          cluster_name: ${{ vars.AWS_EKS_CLUSTER }}
          args: delete ns ${{ env.ENVIRONMENT }}-testing-ns
```

Let’s push, create a Pull Request, and we have a new deploy:

Check the Namespace:

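```
$ kk get ns pr-5-testing-ns
NAME              STATUS   AGE
pr-5-testing-ns   Active   95s

$ kk -n pr-5-testing-ns get pod
NAME                               READY   STATUS             RESTARTS      AGE
test-deployment-77f6dcbd95-cg2gf   0/1     CrashLoopBackOff   3 (35s ago)   78s
```

The `CrashLoopBackOff` is expected here. The test image is a bare `alpine` with no long-running process, so the container exits right after it starts and Kubernetes keeps restarting it.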

Merge the Pull Request, and the Helm release and the Namespace are deleted:

Check it:

```
$ kk get ns pr-5-testing-ns
Error from server (NotFound): namespaces "pr-5-testing-ns" not found
```

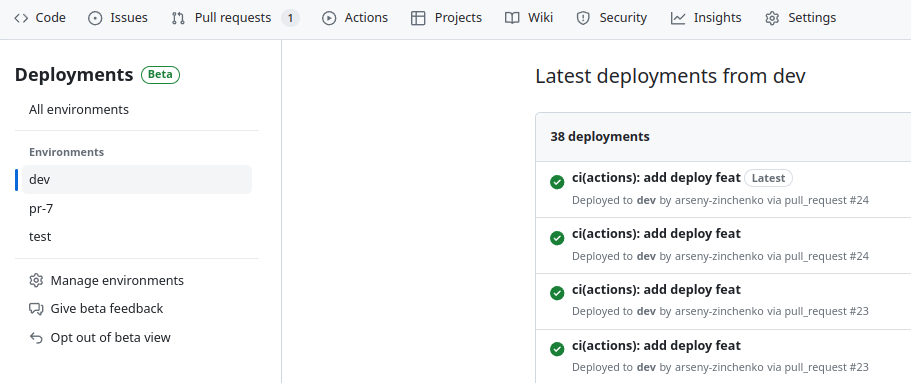

### Bonus: GitHub Deployments

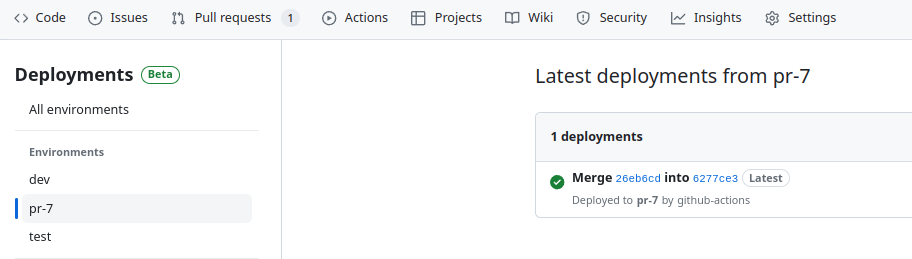

GitHub has a new feature called [Deployments](https://docs.github.com/en/free-pro-team@latest/rest/deployments/deployments?apiVersion=2022-11-28), which is still in Beta, but you can already use it:

The idea behind Deployments is that for each GitHub Environment, you can see a list of all deployments to it, with statuses and commits:

To work with Deployments, we’ll use the [`bobheadxi/deployments`](https://github.com/bobheadxi/deployments) Action, which accepts an `input` called `env`. If we pass an Environment in `env` that does not exist in the repository's Settings, it will be created.

In addition, the Action has a set of _steps_: `start`, `finish`, `deactivate-env`, and `delete-env`.

During the deployment, we call `start` and pass the environment name. When the deployment is completed, we call `finish` to pass the deployment status. And when deleting the environment, we call `delete-env`.
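These steps map onto the GitHub Deployments REST API:

- a deployment is created with `POST /repos/{owner}/{repo}/deployments`
- its status is updated with `POST /repos/{owner}/{repo}/deployments/{deployment_id}/statuses`

I haven't checked exactly which fields `bobheadxi/deployments` sends. The Python sketch below therefore only builds illustrative request payloads and does not send them. The owner, repository, ref, and deployment ID are placeholders, and the mapping from `job.status` to a deployment `state` is my own assumption.

```python
from typing import Any

Request = tuple[str, str, dict[str, Any]]

# Assumption: only success/failure map directly; anything else is reported as "error"
JOB_STATUS_TO_STATE = {"success": "success", "failure": "failure"}


def start_request(owner: str, repo: str, ref: str, env: str) -> Request:
    """`start`: create a deployment of `ref` to environment `env`."""
    body = {
        "ref": ref,
        "environment": env,
        "transient_environment": True,  # feature environments are short-lived
        "auto_merge": False,            # don't merge the default branch into `ref`
        "required_contexts": [],        # don't wait for commit status checks
    }
    return "POST", f"/repos/{owner}/{repo}/deployments", body


def finish_request(owner: str, repo: str, deployment_id: int, env: str, job_status: str) -> Request:
    """`finish`: report the job's outcome as a deployment status."""
    state = JOB_STATUS_TO_STATE.get(job_status, "error")
    body = {"state": state, "environment": env}
    return "POST", f"/repos/{owner}/{repo}/deployments/{deployment_id}/statuses", body


# Placeholder values; in the workflow they come from the repo, the PR, and the `start` step
print(start_request("GitHubOrgName", "atlas-test", "feature-branch", "pr-7"))
print(finish_request("GitHubOrgName", "atlas-test", 42, "pr-7", "success"))
```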

In the `create-feature-env-on-pr.yml` workflow, add the `deployments: write` permission, and add new steps to the `deploy-helm` job:

- “_Misc: Create a GitHub deployment_” before “_Deploy: Helm_”
- “_Misc: Update the GitHub deployment status_” after the Helm install

```yaml
...
permissions:
  id-token: write
  contents: read
  deployments: write
...
  deploy-helm:
    name: Deploy Helm chart
    if: |
      (contains(github.event.pull_request.labels.*.name, 'deploy') && github.event.action != 'closed') ||
      (github.event.action == 'labeled' && github.event.label.name == 'deploy')
    runs-on: ubuntu-latest
    timeout-minutes: 15
    needs: build-docker
    ...
      - name: "Misc: Set ENVIRONMENT variable"
        id: set_stage
        run: echo "ENVIRONMENT=pr-${{ github.event.number }}" >> $GITHUB_ENV

      - name: "Misc: Create a GitHub deployment"
        uses: bobheadxi/deployments@v1
        id: deployment
        with:
          step: start
          token: ${{ secrets.GITHUB_TOKEN }}
          env: ${{ env.ENVIRONMENT }}

      - name: "Deploy: Helm"
        uses: bitovi/github-actions-deploy-eks-helm@v1.2.6
        with:
          cluster-name: ${{ vars.AWS_EKS_CLUSTER }}
          namespace: ${{ env.ENVIRONMENT }}-testing-ns
          name: test-release
          #atomic: true
          values: image.tag=${{ needs.build-docker.outputs.image_tag }}
          timeout: 60s
          helm-extra-args: --debug

      - name: "Misc: Update the GitHub deployment status"
        uses: bobheadxi/deployments@v1
        if: always()
        with:
          step: finish
          token: ${{ secrets.GITHUB_TOKEN }}
          status: ${{ job.status }}
          env: ${{ steps.deployment.outputs.env }}
          deployment_id: ${{ steps.deployment.outputs.deployment_id }}
...
```

Push the changes to the repository, create a PR, and we have a new environment “`pr-7`":

And that’s all for now.

* * *

_Originally published at [RTFM: Linux, DevOps, and system administration](https://rtfm.co.ua/en/github-actions-docker-build-to-aws-ecr-and-helm-chart-deployment-to-aws-eks/)_
