AWS: CDK and Python - configure an IAM OIDC Provider, and install Kubernetes Controllers

So we have an AWS EKS cluster built with AWS CDK and Python (see "AWS: CDK and Python - building an EKS cluster, and general impressions of CDK"), and we have an idea of how IRSA works (see "AWS: EKS, OpenID Connect, and ServiceAccounts").

The next step after deploying the cluster is to configure the OIDC Identity Provider in AWS IAM and to add two controllers — ExternalDNS to work with Route53, and AWS ALB Controller to create AWS Load Balancers when creating Ingress in an EKS cluster.

For authentication in AWS, both controllers will use the IRSA model (IAM Roles for ServiceAccounts): the Kubernetes Pod running a controller gets a ServiceAccount that is allowed to assume an IAM Role, and that role carries the IAM Policies with the necessary permissions.

The WorkerNodes autoscaling controller will be added later: previously, I’ve used the Cluster AutoScaler, but this time I want to try Karpenter, so I’ll make a separate post for that.

We continue to "eat the cactus", that is, to use the AWS CDK with Python. It will be used to create IAM resources and deploy Helm charts with controllers directly from the CloudFormation stack of the cluster.

I tried to deploy the controllers as a separate stack and spent an hour or so trying to figure out how to get the AWS CDK to pass values from one stack to another via CloudFormation Exports and Outputs, but finally gave up and did it all in one stack class. Maybe I will try again another time.

EKS cluster, VPC, and IAM

Creating a cluster is described in one of the previous posts — AWS: CDK and Python — building an EKS cluster, and general impressions of CDK.

What do we have now?

A class to create a stack:

...

class AtlasEksStack(Stack):

    def __init__(self, scope: Construct, construct_id: str, stage: str, region: str, **kwargs) -> None:
        super().__init__(scope, construct_id, **kwargs)

        # get AWS_ACCOUNT
        aws_account = kwargs['env'].account

        # get AZs from the $region
        availability_zones = ['us-east-1a', 'us-east-1b']

...

The aws_account is passed from the app.py when creating the AtlasEksStack() class object:

...

AWS_ACCOUNT = os.environ["AWS_ACCOUNT"]

...

eks_stack = AtlasEksStack(app, f'eks-{EKS_STAGE}-1-26',
    env=cdk.Environment(account=AWS_ACCOUNT, region=AWS_REGION),
    stage=EKS_STAGE,
    region=AWS_REGION
)

...

And we will continue to use it for the AWS IAM configuration.

We also have a separate VPC:

...

vpc = ec2.Vpc(self, 'Vpc',

ip_addresses=ec2.IpAddresses.cidr("10.0.0.0/16"),

vpc_name=f'eks-{stage}-1-26-vpc',

enable_dns_hostnames=True,

enable_dns_support=True,

availability_zones=availability_zones,

...

)

...

And the EKS cluster itself:

...

print(cluster_name)

cluster = eks.Cluster(

self, 'EKS-Cluster',

cluster_name=cluster_name,

version=eks.KubernetesVersion.V1_26,

vpc=vpc,

...

)

...

Next, we need to add the creation of OIDC in IAM, and the deployment of Helm charts with controllers.

OIDC Provider configuration in AWS IAM

We’ll use boto3 (this is one of the things that I don't really like about AWS CDK - that a lot of things have to be done not with the methods/constructs of the CDK itself, but with "crutches" in the form of boto3 or other modules/libraries).

We need to get the OIDC Issuer URL, and get its thumbprint — then we can use the create_open_id_connect_provider.

OIDC Provider URL can be obtained using boto3.client('eks'):

...

import boto3

...

############

### OIDC ###

############

eks_client = boto3.client('eks')

# Retrieve the cluster's OIDC provider details

response = eks_client.describe_cluster(name=cluster_name)

# https://oidc.eks.us-east-1.amazonaws.com/id/2DC***124

oidc_provider_url = response['cluster']['identity']['oidc']['issuer']

...

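Next, with the help of the ssl and hashlib libraries, we get the thumbprint of the certificate served by the oidc.eks.us-east-1.amazonaws.com endpoint. Note that ssl.get_server_certificate() returns the server's own (leaf) certificate, while the IAM documentation describes the thumbprint as that of the top intermediate CA in the chain, so treat this as a working shortcut rather than the documented procedure: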

...

import ssl

import hashlib

...

# AWS EKS OIDC root URL

eks_oidc_url = "oidc.eks.us-east-1.amazonaws.com"

# Retrieve the SSL certificate from the URL

cert = ssl.get_server_certificate((eks_oidc_url, 443))

der_cert = ssl.PEM_cert_to_DER_cert(cert)

# Calculate the thumbprint for the create_open_id_connect_provider()

oidc_provider_thumbprint = hashlib.sha1(der_cert).hexdigest()

...
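The hostname above is hard-coded for us-east-1. As a hedged sketch, it could instead be derived from the issuer URL returned by describe_cluster(), with the fingerprint calculation wrapped in a small function. The helper names issuer_host(), sha1_thumbprint(), and fetch_thumbprint() are made up for this illustration; only the ssl, hashlib, and urllib.parse calls come from the standard library.

```python
import hashlib
import ssl
from urllib.parse import urlparse


def issuer_host(issuer_url: str) -> str:
    """Return the hostname part of an OIDC issuer URL."""
    host = urlparse(issuer_url).hostname
    if not host:
        raise ValueError(f"cannot extract a hostname from {issuer_url!r}")
    return host


def sha1_thumbprint(pem_cert: str) -> str:
    """Return the hex SHA-1 fingerprint of a PEM-encoded certificate."""
    der_cert = ssl.PEM_cert_to_DER_cert(pem_cert)
    return hashlib.sha1(der_cert).hexdigest()


def fetch_thumbprint(issuer_url: str, port: int = 443) -> str:
    """Fetch the endpoint's certificate over the network and fingerprint it."""
    pem_cert = ssl.get_server_certificate((issuer_host(issuer_url), port))
    return sha1_thumbprint(pem_cert)
```

In the stack, this would presumably be called as fetch_thumbprint(oidc_provider_url), so the region no longer has to be repeated in a separate variable.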

And now with boto3.client('iam') and create_open_id_connect_provider() we can create the IAM OIDC Identity Provider:

...

from botocore.exceptions import ClientError

...

# Create IAM Identity Provider
iam_client = boto3.client('iam')

# to catch the "(EntityAlreadyExists) when calling the CreateOpenIDConnectProvider operation"
try:
    response = iam_client.create_open_id_connect_provider(
        Url=oidc_provider_url,
        ThumbprintList=[oidc_provider_thumbprint],
        ClientIDList=["sts.amazonaws.com"]
    )
except ClientError as e:
    print(f"\n{e}")

...

Here, we wrap the call in try/except, because on later updates of the stack the Provider already exists, and the create_open_id_connect_provider() call fails with an EntityAlreadyExists error.
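Catching every ClientError also hides unrelated failures, such as missing IAM permissions. A slightly stricter variant, sketched here on the assumption that the client exposes its modeled exceptions as iam_client.exceptions (as boto3 clients do), ignores only the "already exists" case and lets everything else propagate. The function name ensure_oidc_provider() is hypothetical.

```python
def ensure_oidc_provider(iam_client, url: str, thumbprint: str) -> bool:
    """Create the IAM OIDC provider; return False if it already existed.

    `iam_client` is expected to behave like boto3.client('iam').
    """
    try:
        iam_client.create_open_id_connect_provider(
            Url=url,
            ThumbprintList=[thumbprint],
            ClientIDList=["sts.amazonaws.com"],
        )
    except iam_client.exceptions.EntityAlreadyExistsException:
        # Expected on every deploy after the first one
        return False
    return True
```

Any other error (for example AccessDenied) still stops the deployment instead of being printed and forgotten.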

Installing ExternalDNS

Let’s add the ExternalDNS first — it has a fairly simple IAM Policy, so we’ll test how CDK works with Helm charts.

IRSA for ExternalDNS

Here, the first step is to create an IAM Role that the ExternalDNS ServiceAccount can assume, and which will allow ExternalDNS to perform actions on the domain zone in Route53. Without such a role, ExternalDNS has a ServiceAccount but fails with an error:

msg="records retrieval failed: failed to list hosted zones: WebIdentityErr: failed to retrieve credentials\ncaused by: AccessDenied: Not authorized to perform sts:AssumeRoleWithWebIdentity\n\tstatus code: 403

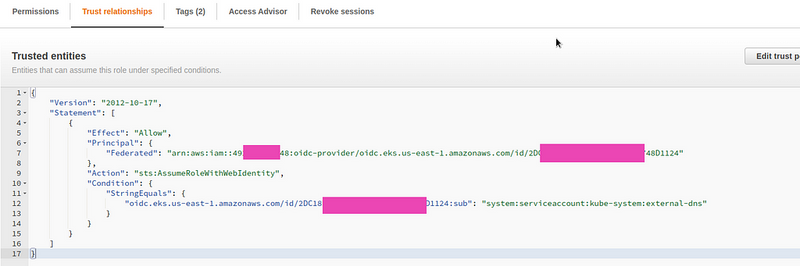

Trust relationships

In the Trust relationships of this role, we must specify a Principal in the form of the ARN of the created OIDC Provider, sts:AssumeRoleWithWebIdentity as the Action, and a Condition that matches only requests coming from the ServiceAccount that will be created by the ExternalDNS Helm chart.

Let’s create a couple of variables:

...

# arn:aws:iam::492***148:oidc-provider/oidc.eks.us-east-1.amazonaws.com/id/2DC***124

oidc_provider_arn = f'arn:aws:iam::{aws_account}:oidc-provider/{oidc_provider_url.replace("https://", "")}'

# deploy ExternalDNS to a namespace

controllers_namespace = 'kube-system'

...

The oidc_provider_arn is formed from the variable oidc_provider_url obtained earlier in response = eks_client.describe_cluster(name=cluster_name).
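The same string manipulation can be written as a tiny helper that fails loudly if the issuer URL is not what we expect. This is only a sketch; the function name and the placeholder account and cluster IDs are invented for the example.

```python
def oidc_provider_arn_from_issuer(account_id: str, issuer_url: str) -> str:
    """Build the IAM OIDC provider ARN for an EKS issuer URL."""
    prefix = "https://"
    if not issuer_url.startswith(prefix):
        raise ValueError(f"expected an https:// issuer URL, got {issuer_url!r}")
    # The ARN uses the issuer without its scheme
    issuer_path = issuer_url[len(prefix):]
    return f"arn:aws:iam::{account_id}:oidc-provider/{issuer_path}"


# Example with placeholder values (not a real account or cluster ID)
print(oidc_provider_arn_from_issuer(
    "111122223333",
    "https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE",
))
```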

Describe the creation of a role using iam.Role():

...

# Create an IAM Role to be assumed by ExternalDNS

external_dns_role = iam.Role(

self,

'EksExternalDnsRole',

# for Role's Trust relationships

assumed_by=iam.FederatedPrincipal(

federated=oidc_provider_arn,

conditions={

'StringEquals': {

f'{oidc_provider_url.replace("https://", "")}:sub': f'system:serviceaccount:{controllers_namespace}:external-dns'

}

},

assume_role_action='sts:AssumeRoleWithWebIdentity'

)

)

...

As a result, we should get a role with the following Trust relationships:
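In JSON terms, the trust policy of such a role should look roughly like the document built below. This is an illustrative sketch with placeholder account and issuer values, not output copied from the real stack, and build_irsa_trust_policy() is a hypothetical helper.

```python
import json


def build_irsa_trust_policy(provider_arn: str, issuer_path: str,
                            namespace: str, service_account: str) -> dict:
    """Return an IAM trust policy that lets one ServiceAccount assume a role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Federated": provider_arn},
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # "<issuer without https://>:sub" must match the ServiceAccount
                        f"{issuer_path}:sub":
                            f"system:serviceaccount:{namespace}:{service_account}"
                    }
                },
            }
        ],
    }


issuer_path = "oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE"  # placeholder
policy = build_irsa_trust_policy(
    f"arn:aws:iam::111122223333:oidc-provider/{issuer_path}",
    issuer_path, "kube-system", "external-dns",
)
print(json.dumps(policy, indent=2))
```

Comparing this shape with what the IAM console shows for the role is a quick way to spot a wrong namespace or ServiceAccount name.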

The next step is an IAM Policy.

IAM Policy for ExternalDNS

If you deploy the stack now, ExternalDNS will get a permissions error:

msg="records retrieval failed: failed to list hosted zones: AccessDenied: User: arn:aws:sts::492***148:assumed-role/eks-dev-1-26-EksExternalDnsRoleB9A571AF-7WM5HPF5CUYM/1689063807720305270 is not authorized to perform: route53:ListHostedZones because no identity-based policy allows the route53:ListHostedZones action\n\tstatus code: 403

So we need to describe two iam.PolicyStatement() objects: one for working with the domain zone, and a second one for the route53:ListHostedZones API call.

We make them separate because route53:ChangeResourceRecordSets should be restricted in resources to one specific zone, while for route53:ListHostedZones the resources must be "*", i.e. "all":

...

# A Zone ID to create records in by ExternalDNS

zone_id = "Z04***FJG"

# to be used in domainFilters

zone_name = "example.co"

# Attach IAM Policy statements to that Role so ExternalDNS can perform Route53 actions

external_dns_policy = iam.PolicyStatement(

actions=[

'route53:ChangeResourceRecordSets',

'route53:ListResourceRecordSets'

],

resources=[

f'arn:aws:route53:::hostedzone/{zone_id}',

]

)

list_hosted_zones_policy = iam.PolicyStatement(

actions=[

'route53:ListHostedZones'

],

resources=['*']

)

external_dns_role.add_to_policy(external_dns_policy)

external_dns_role.add_to_policy(list_hosted_zones_policy)

...
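For comparison with what ends up in IAM, the two statements correspond to a policy document of roughly the following shape. This is a hand-written sketch with a placeholder zone ID; the JSON that CDK actually generates may differ in ordering or extra fields, and external_dns_policy_document() is a hypothetical helper.

```python
import json


def external_dns_policy_document(zone_id: str) -> dict:
    """Return an IAM policy document equivalent to the two statements."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Record changes are limited to one hosted zone
                "Effect": "Allow",
                "Action": [
                    "route53:ChangeResourceRecordSets",
                    "route53:ListResourceRecordSets",
                ],
                "Resource": [f"arn:aws:route53:::hostedzone/{zone_id}"],
            },
            {
                # Listing zones is allowed on all resources
                "Effect": "Allow",
                "Action": ["route53:ListHostedZones"],
                "Resource": ["*"],
            },
        ],
    }


print(json.dumps(external_dns_policy_document("ZEXAMPLE"), indent=2))
```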

Now we can add the ExternalDNS Helm chart itself.

AWS CDK and ExternalDNS Helm chart

Here we use the add_helm_chart() method of the aws_cdk.aws_eks Cluster construct.

In the values, enable the serviceAccount and pass 'eks.amazonaws.com/role-arn': external_dns_role.role_arn in its annotations:

...

# Install ExternalDNS Helm chart

external_dns_chart = cluster.add_helm_chart('ExternalDNS',

chart='external-dns',

repository='https://charts.bitnami.com/bitnami',

namespace=controllers_namespace,

release='external-dns',

values={

'provider': 'aws',

'aws': {

'region': region

},

'serviceAccount': {

'create': True,

'annotations': {

'eks.amazonaws.com/role-arn': external_dns_role.role_arn

}

},

'domainFilters': [

f"{zone_name}"

],

'policy': 'upsert-only'

}

)

...

Let’s deploy and look at the ExternalDNS Pod: we can see both our domain-filter and the environment variables used by IRSA:

$ kubectl -n kube-system describe pod external-dns-85587d4b76-hdjj6
…
    Args:
      --metrics-address=:7979
      --log-level=info
      --log-format=text
      --domain-filter=test.example.co
      --policy=upsert-only
      --provider=aws
…
    Environment:
      AWS_DEFAULT_REGION:           us-east-1
      AWS_STS_REGIONAL_ENDPOINTS:   regional
      AWS_ROLE_ARN:                 arn:aws:iam::492***148:role/eks-dev-1-26-EksExternalDnsRoleB9A571AF-7WM5HPF5CUYM
      AWS_WEB_IDENTITY_TOKEN_FILE:  /var/run/secrets/eks.amazonaws.com/serviceaccount/token
…

Check the logs:

…

time="2023-07-11T10:28:28Z" level=info msg="Applying provider record filter for domains: [example.co. .example.co.]"
time="2023-07-11T10:28:28Z" level=info msg="All records are already up to date"

…

And let’s test if it’s working.

Testing ExternalDNS

To check, let’s create a simple Service with the LoadBalancer type and add the external-dns.alpha.kubernetes.io/hostname annotation to trigger ExternalDNS to create a DNS record in Route53:

---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  annotations:
    external-dns.alpha.kubernetes.io/hostname: "nginx.test.example.co"
spec:
  type: LoadBalancer
  selector:
    app: nginx
  ports:
    - name: nginx-http-svc-port
      protocol: TCP
      port: 80
      targetPort: nginx-http
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginxdemos/hello
      ports:
        - containerPort: 80
          name: nginx-http

Check ExternalDNS logs:

…

time="2023-07-11T10:30:29Z" level=info msg="Applying provider record filter for domains: [example.co. .example.co.]"
time="2023-07-11T10:30:29Z" level=info msg="Desired change: CREATE cname-nginx.test.example.co TXT [Id: /hostedzone/Z04***FJG]"
time="2023-07-11T10:30:29Z" level=info msg="Desired change: CREATE nginx.test.example.co A [Id: /hostedzone/Z04***FJG]"
time="2023-07-11T10:30:29Z" level=info msg="Desired change: CREATE nginx.test.example.co TXT [Id: /hostedzone/Z04***FJG]"
time="2023-07-11T10:30:29Z" level=info msg="3 record(s) in zone example.co. [Id: /hostedzone/Z04***FJG] were successfully updated"

…

And check the domain itself:

$ curl -I nginx.test.example.co

HTTP/1.1 200 OK

“It works!” ©

All code for OIDC and ExternalDNS

All the code together now looks like this:

...

############

### OIDC ###

############

eks_client = boto3.client('eks')

# Retrieve the cluster's OIDC provider details

response = eks_client.describe_cluster(name=cluster_name)

# https://oidc.eks.us-east-1.amazonaws.com/id/2DC***124

oidc_provider_url = response['cluster']['identity']['oidc']['issuer']

# AWS EKS OIDC root URL

eks_oidc_url = "oidc.eks.us-east-1.amazonaws.com"

# Retrieve the SSL certificate from the URL

cert = ssl.get_server_certificate((eks_oidc_url, 443))

der_cert = ssl.PEM_cert_to_DER_cert(cert)

# Calculate the thumbprint for the create_open_id_connect_provider()

oidc_provider_thumbprint = hashlib.sha1(der_cert).hexdigest()

# Create IAM Identity Provider
iam_client = boto3.client('iam')

# to catch the "(EntityAlreadyExists) when calling the CreateOpenIDConnectProvider operation"
try:
    response = iam_client.create_open_id_connect_provider(
        Url=oidc_provider_url,
        ThumbprintList=[oidc_provider_thumbprint],
        ClientIDList=["sts.amazonaws.com"]
    )
except ClientError as e:
    print(f"\n{e}")

###################

### Controllers ###

###################

### ExternalDNS ###

# arn:aws:iam::492***148:oidc-provider/oidc.eks.us-east-1.amazonaws.com/id/2DC***124

oidc_provider_arn = f'arn:aws:iam::{aws_account}:oidc-provider/{oidc_provider_url.replace("https://", "")}'

# deploy ExternalDNS to a namespace

controllers_namespace = 'kube-system'

# Create an IAM Role to be assumed by ExternalDNS

external_dns_role = iam.Role(

self,

'EksExternalDnsRole',

# for Role's Trust relationships

assumed_by=iam.FederatedPrincipal(

federated=oidc_provider_arn,

conditions={

'StringEquals': {

f'{oidc_provider_url.replace("https://", "")}:sub': f'system:serviceaccount:{controllers_namespace}:external-dns'

}

},

assume_role_action='sts:AssumeRoleWithWebIdentity'

)

)

# A Zone ID to create records in by ExternalDNS

zone_id = "Z04***FJG"

# to be used in domainFilters

zone_name = "example.co"

# Attach an IAM Policies to that Role so ExternalDNS can perform Route53 actions

external_dns_policy = iam.PolicyStatement(

actions=[

'route53:ChangeResourceRecordSets',

'route53:ListResourceRecordSets'

],

resources=[

f'arn:aws:route53:::hostedzone/{zone_id}',

]

)

list_hosted_zones_policy = iam.PolicyStatement(

actions=[

'route53:ListHostedZones'

],

resources=['*']

)

external_dns_role.add_to_policy(external_dns_policy)

external_dns_role.add_to_policy(list_hosted_zones_policy)

# Install ExternalDNS Helm chart

external_dns_chart = cluster.add_helm_chart('ExternalDNS',

chart='external-dns',

repository='https://charts.bitnami.com/bitnami',

namespace=controllers_namespace,

release='external-dns',

values={

'provider': 'aws',

'aws': {

'region': region

},

'serviceAccount': {

'create': True,

'annotations': {

'eks.amazonaws.com/role-arn': external_dns_role.role_arn

}

},

'domainFilters': [

zone_name

],

'policy': 'upsert-only'

}

)

...

Let’s go to the ALB Controller.

Installing AWS ALB Controller

In general, everything is the same here. The only thing I had to mess with was the IAM Policy: ExternalDNS needs only a couple of permissions, which we can describe directly when creating the Policy, but the policy for the ALB Controller is quite large, so it has to be taken from GitHub.

IAM Policy from a GitHub URL

Here we use requests (crutches again):

...

import requests

...

alb_controller_version = "v2.5.3"

url = f"https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/{alb_controller_version}/docs/install/iam_policy.json"

response = requests.get(url)

response.raise_for_status() # Check for any download errors

# format as JSON

policy_document = response.json()

document = iam.PolicyDocument.from_json(policy_document)

...

Here, we download the policy file, parse it as JSON, and then build the policy document itself from this JSON.
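Since the policy is downloaded every time the stack is synthesized, a basic sanity check before handing it to iam.PolicyDocument.from_json() can catch a wrong URL or an unexpected payload early. The check below is a minimal sketch: check_policy_document() is a hypothetical helper, and the rules it enforces (a Version string and a non-empty Statement list whose entries have an Effect) are an assumed reasonable minimum, not a full IAM validator.

```python
def check_policy_document(doc: object) -> dict:
    """Raise ValueError unless `doc` looks like an IAM policy document."""
    if not isinstance(doc, dict):
        raise ValueError("policy document must be a JSON object")
    if not isinstance(doc.get("Version"), str):
        raise ValueError("policy document has no 'Version' string")
    statements = doc.get("Statement")
    if not isinstance(statements, list) or not statements:
        raise ValueError("policy document has no 'Statement' list")
    for index, statement in enumerate(statements):
        if not isinstance(statement, dict) or statement.get("Effect") not in ("Allow", "Deny"):
            raise ValueError(f"statement {index} has no valid 'Effect'")
    return doc
```

In the code above it would be used as policy_document = check_policy_document(response.json()).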

IAM Role for the ALB Controller

Next, we create an IAM Role with Trust relationships similar to those of the ExternalDNS role, changing only the conditions to specify the ServiceAccount that will be created for the AWS ALB Controller:

...

alb_controller_role = iam.Role(

self,

'AwsAlbControllerRole',

# for Role's Trust relationships

assumed_by=iam.FederatedPrincipal(

federated=oidc_provider_arn,

conditions={

'StringEquals': {

f'{oidc_provider_url.replace("https://", "")}:sub': f'system:serviceaccount:{controllers_namespace}:aws-load-balancer-controller'

}

},

assume_role_action='sts:AssumeRoleWithWebIdentity'

)

)

alb_controller_role.attach_inline_policy(iam.Policy(self, "AwsAlbControllerPolicy", document=document))

...

AWS CDK and AWS ALB Controller Helm chart

Now, install the Helm chart itself with the necessary values: enable a ServiceAccount, specify the ARN of the role created above in its annotations, and set the clusterName:

...

# Install AWS ALB Controller Helm chart

alb_controller_chart = cluster.add_helm_chart('AwsAlbController',

chart='aws-load-balancer-controller',

repository='https://aws.github.io/eks-charts',

namespace=controllers_namespace,

release='aws-load-balancer-controller',

values={

'image': {

'tag': alb_controller_version

},

'serviceAccount': {

'name': 'aws-load-balancer-controller',

'create': True,

'annotations': {

'eks.amazonaws.com/role-arn': alb_controller_role.role_arn

},

'automountServiceAccountToken': True

},

'clusterName': cluster_name,

'replicaCount': 1

}

)

...

Testing AWS ALB Controller

Let’s create a simple Pod, a Service, and an Ingress which must trigger the ALB Controller to create an AWS ALB LoadBalancer:

---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
  annotations:
    kubernetes.io/ingress.class: alb
spec:
  rules:
    - http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                # must match metadata.name of the Service below
                name: nginx-service
                port:
                  number: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  annotations:
    external-dns.alpha.kubernetes.io/hostname: "nginx.test.example.co"
spec:
  selector:
    app: nginx
  ports:
    - name: nginx-http-svc-port
      protocol: TCP
      port: 80
      targetPort: nginx-http
---
apiVersion: v1
kind: Pod
metadata:
  name: nginx-pod
  labels:
    app: nginx
spec:
  containers:
    - name: nginx
      image: nginxdemos/hello
      ports:
        - containerPort: 80
          name: nginx-http

Deploy and check the Ingress resource:

$ kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

nginx-ingress <none> * internal-k8s-default-nginxing- ***-***.us-east-1.elb.amazonaws.com 80 34m

The only thing here that didn’t work the first time was attaching the aws-iam-token to the Pod: that's why I've set 'automountServiceAccountToken': True in the values, although its default value is already true.

But after several redeploys with cdk deploy, the token was created and connected to the Pod:

...

- name: AWS_ROLE_ARN

value: arn:aws:iam::492***148:role/eks-dev-1-26-AwsAlbControllerRole4AC4054B-1QYCGEG2RZUD7

- name: AWS_WEB_IDENTITY_TOKEN_FILE

value: /var/run/secrets/eks.amazonaws.com/serviceaccount/token

...

In general, that’s all.

As usual with CDK it’s a pain and suffering due to the lack of proper documentation and examples, but with the help of ChatGPT and the tutorials it did work.

Also, it would probably be good to move the creation of resources at least into separate functions instead of doing everything in AtlasEksStack.__init__(), but that can be done later.

The next step is to launch VictoriaMetrics in Kubernetes, and then we will start working on Karpenter.

Originally published at RTFM: Linux, DevOps, and system administration.
