We distilled 160 conversations with tech leaders across enterprises, startups, NASDAQ companies, and legacy organizations, from team leaders to CTOs and VP R&Ds. Here are their Top 5 list of data engineering trends that will likely come to life in 2023.

1. Data Contracts.

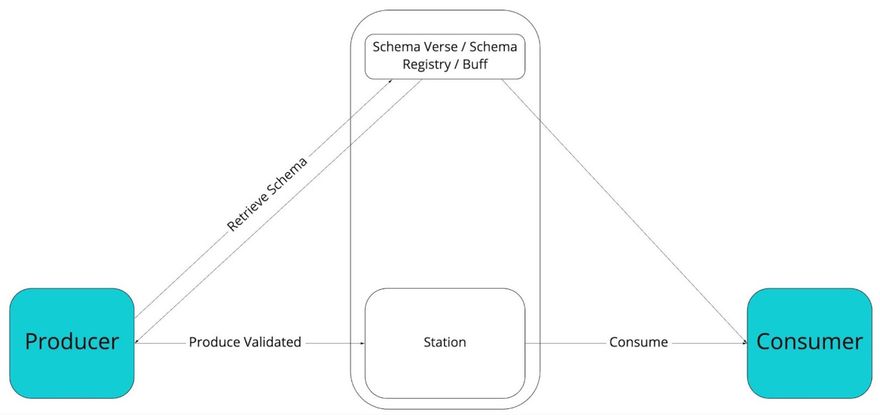

“Data Contracts are API-based agreements between Software Engineers who own services and Data Consumers that understand how the business works in order to generate well-modeled, high-quality, trusted data.”If you take a good look at it, you have at least ten different data producers and multiple consumers, written in different languages, interacting with various databases, SQL, No-SQL, and the holy grail data models. It's a mess.Data contracts are still a management or operational concept, but we are starting to see more and more traction and conversation around it (Chad Sanderson covers the subject in depth in his newsletter).The end goals of data contracts are to a) increase the quality of produced data. b) Easier maintenance. c) Apply governance and standardization over a federated data platform

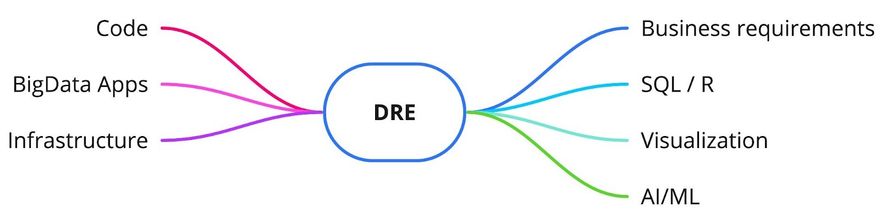

2. A new role - Data Reliability Engineer (DRE).

One of the most common challenges that leaders have raised is how to narrow the technological gap between the different data stakeholders -

Engineers to Analysts, BIs, and Scientists.

This gap is not only the source of over-complicated architectures but also one of the significant cost generators. The BIs, analysts, and scientists each have a stack with dedicated languages like SQL and R. Besides technical differences, there are also different interests and sort of a bubble-like environment that is much different than any other group of teams that assemble one unit with one clear goal, like the famous triangle - IT, DevOps, and Devs. Due to the growing complexity of data and the increase in in-house investments in making data much more cost-effective, accessible, and a real growth engine, a new position must be filled. Just as the SRE (Site Reliability Engineer) narrowed the gap between the developers and DevOps engineers, so will the DRE, by having a swiss army knife of capabilities starting with business understanding and requirements, on to data structures and SQL, to theory concepts in ML an AI, and lastly in how to create a straight to the point pipelines that will gather the needed data to fulfill the other layers.

3. Streaming and Real-time.

Data is growing too fast to process as a whole. That’s a simple truth.

These days we can find super intelligent and efficient algorithms that will process output in milliseconds, but in order to bring the data in, each pull will take minutes and hours. That example demonstrates that if the entire process could be refactored and generate results per a single event or in small batches, the output would take a reasonable amount of time. Not hours.

It is one example of many more, but not all use cases can happen in real time, and refactoring is hard. The mindset should be real-time from the get-go.

4. Tracking stream lineage.

The troubleshooting barriers must be lowered to enable the “streaming” growth and increase usability. Most of the respondents said that they are using a message broker to enable real-time pipelines; for them, a message broker is a black box. Something comes in, something comes out, and at the end of the pipeline some events are dropped, and some get ingested. The lack of a pattern for failures with debugging experience that requires an assembly of different teams and engineers keeps architects from deepening in real-time.To overcome this barriers, engineers needs better observability, context-based, that can display the full evolution of a single event all the way from the first producer (stage in a pipeline), to the very last consumer. Several products and projects started to address this challenge including Memphis.dev with embedded event’s journey, Confluent, OpenLineage, monte carlo and more.

5.Event sourcing is coming back.

How do you struct or emphsize a user’s journey?

Let’s take for example a journey of some users in an ecommerce store.

- They entered the store

- They searched

- They found something / They didnt

- They purchase something within their 2 minutes of entrance / They arrived to the checkout and went out

Eventually, you can describe it in 10X different SQL tables, or in one No-SQL document, do some joins / aggregations and eventually, after the user already gone far away from your store, perform some actions. Well, there is a better approach for store and action and its called event sourcing. In simple words, it means that there is a queue and into that queue you push every event that certain user has made instead into a database. Until now, pretty straightforward, but we want to perform some real-time actions derived from their behavior pattern while the user is in our store. Btw, We at memphis.dev are pushing to also enable this use case.

To conclude, even though it seems we hear it all the time, data keeps growing, arriving from multiple sources in different shapes and sizes.

Mastering the streams and lake can benefit any organization by reducing costs, increasing sales, becoming more efficient, and, most importantly, understanding the customer on the other side.

Article by Yaniv Ben Hemo Co-Founder & CEO Memphis.dev

Join 4500+ others and sign up for our data engineering newsletter.

Top comments (3)

Γεια, η κατανόηση του event sourcing και η διαχείριση μεγάλων δεδομένων σε πραγματικό χρόνο είναι θεμελιώδη για την ανάπτυξη και την αποτελεσματικότητα κάθε σύγχρονης ψηφιακής πλατφόρμας. Ένας φίλος μου πρότεινε το vegashero με εξαιρετικά προνόμια στην Greece και η νίκη μου στο Gates of Olympus ήταν η ιδανική εκτόνωση μετά από μια μέρα γεμάτη ανάλυση αρχιτεκτονικής δεδομένων. Ήταν ο καλύτερος τρόπος να κλείσει η μέρα μου με θετική ενέργεια και κέρδη.

Nice article @avital_trifsik and @yanivbh1 (or is it @yaniv_benhemo_d4d5b0c5ad? Happy to merge these two accounts for you if you can email social@ops.io to confirm some details with us!).

@avital_trifsik I've made you an Admin of the memphis.dev organization so you can reassign posts to their original authors at the top of the post.

To publish under a different author's name as an org admin:

Nice work on reassigning author name, @avital_trifsik 🙌 The Memphis{dev} organization page is looking great!