As a “top pick” in the direct mail automation space, Lob prints and sends millions of mailpieces each year. As such, it's been Lob's commitment from the get-go to minimize our footprint and build a more sustainable approach to direct mail. As a part of this initiative, in addition to ensuring we use responsibly sourced raw materials, we plant two trees for every one that we use in the production of our mailpieces, through our partnership with Eden Reforestation Projects. (For more on our partnership check out this blog post/video: “The Power of Trees: Partnering for Positive Environmental and Community Impact.”)

As awesome as that is, this is not a sustainability story, it’s about the technology we use to accomplish this and why we shifted (excuse the pun) tools.

During a hackathon in 2017, our Engineering team developed automation that runs every month. This involves a Postgres query that pulls how much mail (letters, checks, postcards, etc.) Lob sent the previous month, a calculation the equivalent in paper, which is then translated into a number of trees. (Then we offset our carbon footprint by sponsoring Eden Reforestation to plant double that number of trees.)

Snippet of query:

The result is a summary communication sent via Slack to the community and stakeholders.

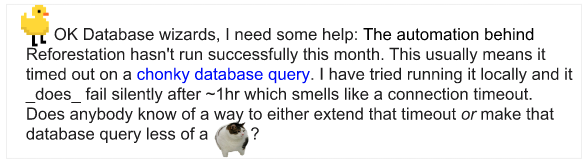

Unfortunately, as of late, the automation was pretty flaky: often the query would time out requiring an engineer to run it again in off-peak hours when the Postgres database was under less load; this is not a scalable solution and was yielding less and less success.

Since the query looked fine (accurate), an initial idea was to break it out into separate queries and then combine the results. But this and some other brainstorms just seemed like a hack; sure they would fix the current problem but eventually, those would get big enough to timeout too. So, Platform Engineer Elijah Voigt turned to the rubber ducky channel.

Enter Jack Meussdorfer from the Data team to the rescue.

His keen sense of smell indicated this was a too much/big data problem. He suggested instead of running the query against PostgreSQL, we run it instead against Amazon Redshift, which the Data team already uses for similar data pipelines.

In short, Postgres is a transactional db, whereas Redshift is specifically designed for large-scale data storage and analysis. Because Redshift stores data in a different way—it uses column stores instead of row stores—there is less overhead on queries spanning a large selection of data. (This also means differences in how constraints and indexes are implemented.) Redshift also has a higher capability of processing large amounts of data because it runs massively parallel processing (MPP). If you want a deep dive check out this article Redshift vs. Postgres: Detailed Comparison of Performance and Functionality.)

Is that really the right tool for the task?

Since the necessary data already exists in Redshift, Jack suggested we:

- Update the query to be compatible with Redshift (alter the query syntax and add the proper schema/tables) and then

- Point the Reforestation query at Redshift.

Boom!** Lifting and shifting the data source from Postgres to Redshift solved the problem immediately: the query went from timing out after 1 hour to literally taking 40 seconds.**

The takeaway? Rubber ducky rules. And, it’s important to evaluate whether you are using the right tool for the job at hand. Postgres was the right tool for the job in 2017, but our data changed—which means our problem changed. While we are big fans of Postgres, our automation needed to scale to our new needs, so for this important query, it’s Redshift FTW.

Top comments (0)