For many years we have been told that one of the major advantages of using containers is portability: the ability to move an application between different platforms or even different cloud providers.

In this blog post, we will review some of the aspects of designing an architecture based on Kubernetes, allowing application portability between different cloud providers.

Before we begin, we need to recall that each cloud provider has its own opinionated way of deploying and consuming services, with different APIs and different service capabilities.

There are multiple ways to design a workload, from traditional use of VMs to event-driven architectures using message queues and Function-as-a-Service.

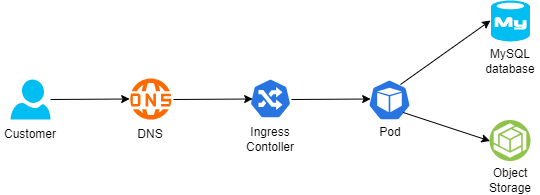

Below is an example architecture:

- A customer connecting from the public Internet, resolving the DNS name from a global DNS service (such as Cloudflare)

- The request is sent to an Ingress controller, which forwards the traffic to a Kubernetes Pod exposing an application

- For static content retrieval, the application uses an object storage service through a Kubernetes CSI driver

- For persistent data storage, the application uses a managed MySQL database as its backend

The 12-factor app methodology angle

When talking about the portability and design of modern applications, I always like to look back at the 12-factor app methodology:

Config

To be able to deploy immutable infrastructure on different cloud providers, we should store configuration variables (such as the SDLC stage: Dev, Test, or Prod) and credentials (such as API keys and passwords) outside the containers.

Some examples:

- AWS Systems Manager Parameter Store (for environment variables or static credentials) or AWS Secrets Manager (for static credentials)

- Azure App Configuration (for environment variables or configuration settings) or Azure Key Vault (for static credentials)

- Google Config Controller (for configuration settings), Google Secret Manager (for static credentials), or GKE Workload Identity (for access to Cloud SQL or Google Cloud Storage)

- HashiCorp Vault (for environment variables or static credentials)
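
Whichever service stores the values, the application itself can stay provider-neutral if it only reads its settings from environment variables that the platform injects at runtime. The following is a minimal sketch of that idea, not a definitive implementation. The variable names `APP_STAGE`, `DB_HOST`, and `API_KEY` are hypothetical and chosen only for illustration.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class AppConfig:
    stage: str    # SDLC stage: Dev, Test, or Prod
    db_host: str  # hostname of the managed database
    api_key: str  # secret injected at runtime, never baked into the image


def load_config(env: Mapping[str, str] = os.environ) -> AppConfig:
    """Build the config from environment variables (names are hypothetical)."""
    missing = [name for name in ("DB_HOST", "API_KEY") if not env.get(name)]
    if missing:
        # Fail fast so a misconfigured Pod never starts serving traffic.
        raise RuntimeError(f"missing required settings: {', '.join(missing)}")
    return AppConfig(
        stage=env.get("APP_STAGE", "Dev"),  # default to Dev when unset
        db_host=env["DB_HOST"],
        api_key=env["API_KEY"],
    )
```

Because `load_config` accepts any mapping, the same code can be exercised in tests with a plain dictionary, and it does not need to know which cloud service populated the environment.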

Backing services

To truly design for application portability between cloud providers, we need to architect our workload so that a Pod can be attached and re-attached to a backend service.

For storage services, it is recommended to prefer services that Pods can connect to via CSI drivers.

Some examples:

- Amazon S3 (for object storage), Amazon EBS (for block storage), or Amazon EFS (for NFS storage)

- Azure Blob (for object storage), Azure Disk (for block storage), or Azure Files (for NFS or SMB storage)

- Google Cloud Storage (for object storage), Compute Engine Persistent Disks (for block storage), or Google Filestore (for NFS storage)

For database services, it is recommended to prefer open-source engines deployed as a managed service over databases that are specific to one cloud provider.

Some examples:

- Amazon RDS for MySQL (for managed MySQL) or Amazon RDS for PostgreSQL (for managed PostgreSQL)

- Azure Database for MySQL (for managed MySQL) or Azure Database for PostgreSQL (for managed PostgreSQL)

- Google Cloud SQL for MySQL (for managed MySQL) or Google Cloud SQL for PostgreSQL (for managed PostgreSQL)
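
One way to keep the database attachable and re-attachable is to pass the whole connection as a single URL, so that moving between providers changes only a configuration value. The sketch below assumes a hypothetical `DATABASE_URL`-style string and uses only the Python standard library. The host name in the usage note is made up.

```python
from urllib.parse import urlsplit


def parse_database_url(url: str) -> dict:
    """Split a DATABASE_URL-style string into connection settings."""
    parts = urlsplit(url)
    if parts.scheme not in ("mysql", "postgresql"):
        raise ValueError(f"unsupported engine: {parts.scheme!r}")
    # Fall back to the engine's well-known default port when none is given.
    default_port = 3306 if parts.scheme == "mysql" else 5432
    return {
        "engine": parts.scheme,
        "host": parts.hostname,
        "port": parts.port or default_port,
        "user": parts.username,
        "password": parts.password,
        "database": parts.path.lstrip("/"),
    }
```

For example, `parse_database_url("mysql://app:pw@db.example.internal/shop")` yields the host `db.example.internal`, port `3306`, and database `shop`. Pointing the workload at a managed MySQL instance on another provider would then only require a different URL.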

Port binding

To allow customers access to our application, Kubernetes exposes it through a URL (i.e., a DNS name) and a network port.

Since the load balancers offered by the cloud providers are tied to their own ecosystems, we should prefer open-source or third-party solutions that work the same way on every provider: an ingress controller for inbound traffic, a CNI plugin that enforces network policies (for both inbound and pod-to-pod communication), or a service mesh (for mTLS and full layer 7 traffic inspection and enforcement).

Some examples:

- NGINX (an open-source ingress controller), supported by Amazon EKS, Azure AKS, and Google GKE

- Calico CNI plugin (for enforcing network policies), supported by Amazon EKS, Azure AKS, and Google GKE

- Cilium CNI plugin (for enforcing network policies), supported by Amazon EKS, Azure AKS, and Google GKE

- Istio (for service mesh capabilities), supported by Amazon EKS, Azure AKS, and Google GKE
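
Network policies are a standard Kubernetes resource (`networking.k8s.io/v1`), so the same policy definition can be applied on any cluster whose CNI plugin enforces them. As a rough sketch, the function below builds a pod-to-pod policy as a plain Python dictionary. The function name and the `app` label values are hypothetical.

```python
import json


def allow_ingress_from(app: str, client: str, port: int, namespace: str = "default") -> dict:
    """Return a NetworkPolicy letting only `client` Pods reach `app` Pods on `port`."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{app}-allow-{client}", "namespace": namespace},
        "spec": {
            # Pods this policy protects.
            "podSelector": {"matchLabels": {"app": app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Only Pods in the same namespace carrying this label may connect.
                    "from": [{"podSelector": {"matchLabels": {"app": client}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


def to_json(policy: dict) -> str:
    """Serialize the policy as a JSON manifest."""
    return json.dumps(policy, indent=2)
```

Calling `to_json(allow_ingress_from("backend", "frontend", 8080))` produces a JSON manifest. Since `kubectl apply -f` accepts JSON as well as YAML, the output can be applied unchanged on EKS, AKS, or GKE.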

Logs

Although not directly related to container portability, logs are an essential part of application and infrastructure maintenance. More specifically, observability (i.e., the ability to collect logs, metrics, and traces) lets us anticipate issues before they impact customers.

Although each cloud provider has its own monitoring and observability services, it is recommended to consider open-source solutions that are supported by all cloud providers, and to stream logs to a central service, so that we can continue monitoring the workloads regardless of the cloud platform.

Some examples:

- Prometheus – monitoring and alerting solution

- Grafana – visualization and alerting solution

- OpenTelemetry – a collection of tools for exporting telemetry data
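
On the application side, the 12-factor approach treats logs as an event stream written to standard output, leaving collection and shipping to the platform. A minimal sketch of that pattern with Python's standard `logging` module follows. The JSON field names are an assumption for illustration, not a format required by any of the tools above.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps(
            {
                "time": self.formatTime(record),
                "level": record.levelname,
                "logger": record.name,
                "message": record.getMessage(),
            }
        )


def build_logger(name: str) -> logging.Logger:
    """Create a logger that writes JSON lines to stdout."""
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers = [handler]  # replace handlers so repeated calls do not duplicate output
    logger.propagate = False
    return logger
```

Because the container only writes structured lines to stdout, the log collector running in the cluster can be swapped without touching the application code.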

Immutability

Although not directly mentioned in the 12-factor app methodology, to allow portability of containers between different Kubernetes environments, it is recommended to build a container image from scratch (i.e., avoid using cloud-specific container images) and to package the minimum number of binaries and libraries. As previously mentioned, to create an immutable application, you should avoid storing credentials, unique identifiers (including unique configurations), or data inside the container image.

Infrastructure as Code

Deploying workloads using Infrastructure as Code, as part of a CI/CD pipeline, allows us to deploy an entire workload (from pulling the latest container image, through provisioning the backend infrastructure, to the Kubernetes deployment process) in a standard way across different cloud providers and different SDLC stages (Dev, Test, Prod).

Some examples:

- OpenTofu – an open-source fork of Terraform that allows deploying entire cloud environments using Infrastructure as Code

- Helm Charts – an open-source solution for software deployment on top of Kubernetes
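
The core idea behind these tools is that one template plus a small set of per-stage values produces every environment. The sketch below is a plain Python stand-in for that idea and does not use the Helm or OpenTofu APIs. The replica counts, label names, and `APP_STAGE` variable are hypothetical.

```python
STAGE_REPLICAS = {"dev": 1, "test": 2, "prod": 3}  # hypothetical sizing per SDLC stage


def render_deployment(app: str, image: str, stage: str) -> dict:
    """Render an apps/v1 Deployment manifest for one SDLC stage."""
    if stage not in STAGE_REPLICAS:
        raise ValueError(f"unknown stage: {stage!r}")
    labels = {"app": app, "stage": stage}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{app}-{stage}", "labels": labels},
        "spec": {
            "replicas": STAGE_REPLICAS[stage],
            # The selector must match the Pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": app,
                            "image": image,
                            # The stage is injected as configuration, not baked into the image.
                            "env": [{"name": "APP_STAGE", "value": stage}],
                        }
                    ]
                },
            },
        },
    }
```

The same image reference is used for every stage. Only the values change, which is what keeps the deployment process identical across stages and cloud providers.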

Summary

In this blog post, I have reviewed the concept of container portability, which allows organizations to decrease their reliance on cloud provider opinionated solutions by using open-source solutions.

Not all applications are suitable for portability, and there are many benefits of using cloud-opinionated solutions (such as serverless services), but for simple architectures, it is possible to design for application portability.

About the author

Eyal Estrin is a cloud and information security architect, an AWS Community Builder, and the author of the books Cloud Security Handbook and Security for Cloud Native Applications, with more than 20 years in the IT industry.

You can connect with him on social media (https://linktr.ee/eyalestrin).

Opinions are his own and not the views of his employer.
