I decided to write this bite-sized article after reading *Linux: Execute commands in parallel with parallel-ssh*. My use case was a particular one, though: I had no internet access and faced constraints that prevented me from installing new packages.

With little at hand, I defined a server list and scripted the following approach:

#!/bin/bash

## #####################################################################

## run concurrently command passed as argv on multiple remote servers ##

## UPDATE: servers array and user variable ##

########################################################################

# define an array of remote servers

servers=("server1.fqdn" "server2.fqdn" "server3.fqdn")

# Function to execute command on a remote server

execute_command() {

server=$1

command=$2

# define USER for the ssh connection

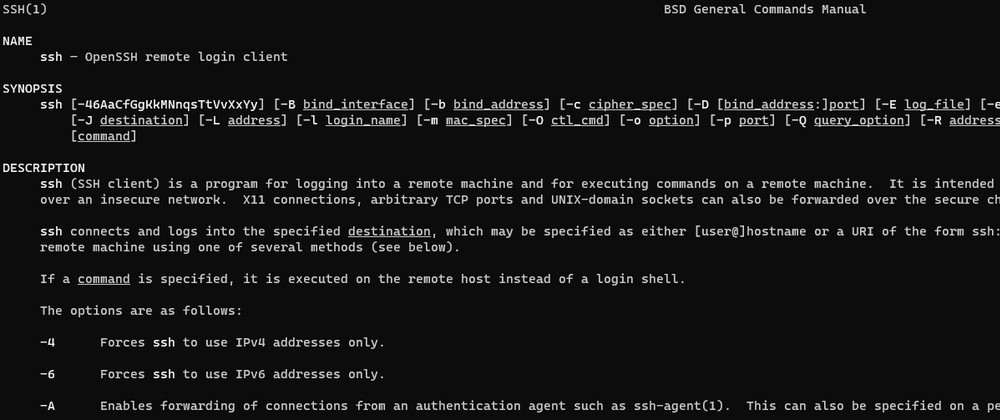

ssh user@"$server" "$command"

}

for server in "${servers[@]}"

do

# exec arg command as background process

execute_command "$server" "$1" &

done

# wait for all background processes to complete

wait

The script opens one SSH connection per server, sends each one to the background with the `&` operator, and then calls `wait` to block until all of them have finished, which gives a rudimentary form of concurrency. Usage is straightforward:

```shell
./pssh.sh "hostname -A"
```
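Because `wait` without arguments returns zero regardless of how the jobs ended, the script cannot tell you which server failed, and output from different servers can interleave. Below is a minimal sketch of a variant that keeps the same `&`/`wait` approach but records each background PID and waits on them one at a time, since `wait PID` returns that job's exit status. It assumes bash, and the `run_parallel` function and `SSH_USER` variable are hypothetical names, not part of the original script.

```shell
#!/bin/bash
# Sketch only: run_parallel and SSH_USER are hypothetical names, not part of pssh.sh.
SSH_USER="${SSH_USER:-user}"   # assumption: replace with your SSH account

# run_parallel CMD SERVER... : run CMD on every SERVER concurrently,
# tag each output line with the server name, return 1 if any server failed
run_parallel() {
    local cmd=$1
    shift
    local -a pids=() hosts=()
    local server i failed=0

    for server in "$@"; do
        # subshell: -n keeps ssh off our stdin, sed prefixes each line,
        # and the subshell exits with ssh's status (PIPESTATUS[0]), not sed's
        (
            ssh -n "$SSH_USER@$server" "$cmd" 2>&1 | sed "s/^/[$server] /"
            exit "${PIPESTATUS[0]}"
        ) &
        pids+=("$!")
        hosts+=("$server")
    done

    # wait on each PID individually so its exit status can be checked
    for i in "${!pids[@]}"; do
        if ! wait "${pids[$i]}"; then
            echo "FAILED: ${hosts[$i]}" >&2
            failed=1
        fi
    done
    return "$failed"
}
```

With the `servers` array from the script, it would be called as `run_parallel "hostname -A" "${servers[@]}"`; a non-zero return means at least one server failed, and the `FAILED:` lines on stderr say which.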

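If you fancy a sequential one-liner instead, create `hosts_file` with one server per line, replace `user`, and run the following. The list is read on file descriptor 10 rather than standard input because `ssh` reads standard input and would otherwise swallow the remaining host names: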

```shell
while read -r -u10 line; do ssh user@"$line" 'hostname -A'; done 10< hosts_file
```
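A slightly more defensive take on the same loop, wrapped in a function, also skips blank lines and `#` comments and still processes a last line that lacks a trailing newline. This is a sketch: the `run_sequential` function and the `SSH_USER` variable are hypothetical, not part of the original one-liner.

```shell
#!/bin/bash
# Sketch only: run_sequential and SSH_USER are hypothetical names.
SSH_USER="${SSH_USER:-user}"   # assumption: replace with your SSH account

# run_sequential CMD HOSTS_FILE : run CMD on each host in HOSTS_FILE, one at a time
run_sequential() {
    local cmd=$1 hosts_file=$2 line
    # read the list on fd 10 so ssh, which reads stdin, cannot consume it;
    # the || test also handles a final line without a trailing newline
    while read -r -u 10 line || [[ -n $line ]]; do
        # skip blank lines and comment lines
        [[ -z $line || $line == \#* ]] && continue
        echo "== $line"
        ssh "$SSH_USER@$line" "$cmd"
    done 10< "$hosts_file"
}
```

It would be called as `run_sequential 'hostname -A' hosts_file`.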

To run a local script on each server instead, feed it to `bash -s` on the remote side, replacing `script.sh` with the desired script:

```shell
while read -r -u10 line; do echo "$line"; ssh user@"$line" 'bash -s' < script.sh; done 10< hosts_file
```
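The `bash -s` trick also combines with the background-job approach of `pssh.sh`. The sketch below assumes bash 4 or later (for the associative array), and `run_script_parallel`, `SSH_USER` and `LOG_DIR` are hypothetical names. Each background `ssh` gets its own redirection from the script file and writes to its own log file, so output from different servers never interleaves.

```shell
#!/bin/bash
# Sketch only: run_script_parallel, SSH_USER and LOG_DIR are hypothetical names.
SSH_USER="${SSH_USER:-user}"    # assumption: replace with your SSH account
LOG_DIR="${LOG_DIR:-./logs}"    # assumption: one log file per server goes here

# run_script_parallel SCRIPT SERVER... : run a local SCRIPT on every SERVER concurrently
run_script_parallel() {
    local script=$1 server pid failed=0
    shift
    if [[ ! -r $script ]]; then
        echo "cannot read $script" >&2
        return 2
    fi
    mkdir -p "$LOG_DIR"

    local -A pid_host=()
    for server in "$@"; do
        # each background ssh reads its own copy of the script on stdin
        # and writes stdout and stderr to its own log file
        ssh "$SSH_USER@$server" 'bash -s' < "$script" > "$LOG_DIR/$server.log" 2>&1 &
        pid_host[$!]=$server
    done

    # collect each job's exit status and report the failures
    for pid in "${!pid_host[@]}"; do
        if ! wait "$pid"; then
            echo "FAILED: ${pid_host[$pid]} (see $LOG_DIR/${pid_host[$pid]}.log)" >&2
            failed=1
        fi
    done
    return "$failed"
}
```

For example, `run_script_parallel script.sh server1.fqdn server2.fqdn` leaves `logs/server1.fqdn.log` and `logs/server2.fqdn.log` behind and returns non-zero if either run failed.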
