We are preparing to transfer infrastructure management from AWS CDK to Terraform.

I’ve already written about planning it in the Terraform: planning a new project with Dev/Prod environments post, but I didn’t cover one very important option there: creating a lock for state files.

State file locking is used to avoid situations where multiple Terraform instances, started either by engineers or automatically in CI/CD, run against the same state at the same time. With state locking, when several instances try to change the same state file simultaneously, only the first one acquires the lock, and Terraform blocks the others until the first instance completes its work and releases the lock.

In our case, the entire infrastructure is in AWS, so AWS S3 will be used as the backend for state storage, and a DynamoDB table will be used to store the lock records.

Documentation — State Locking.

So, what we will do today:

- create an S3 bucket and a DynamoDB table that will be managed by Terraform itself

- configure Terraform to authenticate to AWS with AssumeRole

- describe the S3 bucket and the DynamoDB table in code

- create these resources using a local state

- migrate the local state into the created bucket

- test how State Lock works

IAM Role

Terraform will work through a dedicated IAM Role; see Use AssumeRole to provision AWS resources across accounts.

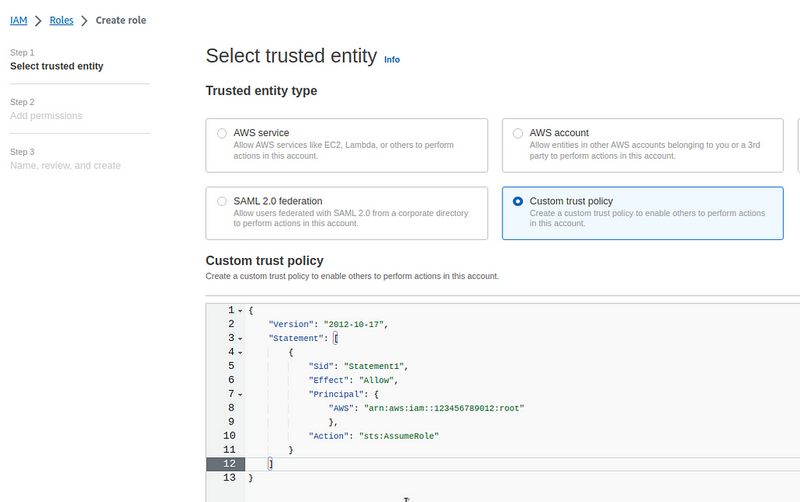

Go to IAM > Create Role, select Custom Trust Policy, and describe it:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "Statement1",
      "Effect": "Allow",
      "Principal": {
        "AWS": "arn:aws:iam::123456789012:root"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```

Instead of 123456789012, set the ID of your AWS account. The root principal here means the account itself: any IAM user or role from this account can call sts:AssumeRole on this role, as long as its own IAM policy allows that action.

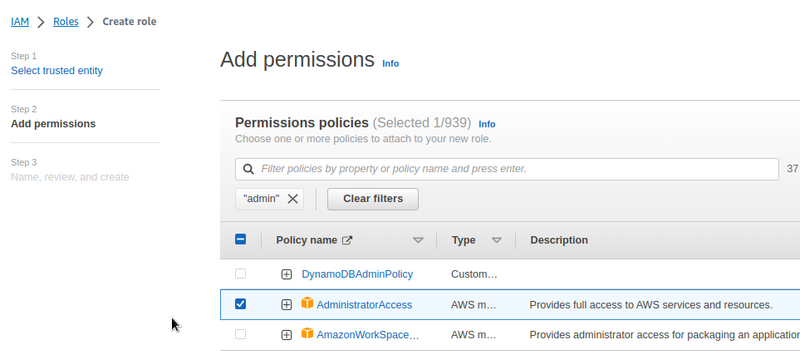

For now, we can attach the AdministratorAccess policy; stricter permissions can be configured later:

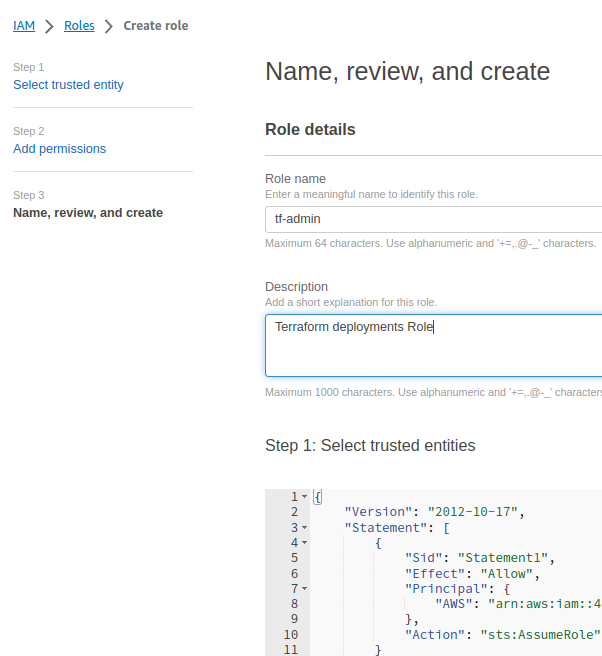

Save the Role.

And let’s check if it’s working.

Add a new profile to the local ~/.aws/config file:

```ini
[profile tf-admin]
role_arn = arn:aws:iam::492***148:role/tf-admin
source_profile = default
```

And run sts get-caller-identity using that profile:

```
$ aws --profile tf-admin sts get-caller-identity
{
    "UserId": "ARO***ZEF:botocore-session-1693297579",
    "Account": "492***148",
    "Arn": "arn:aws:sts::492***148:assumed-role/tf-admin/botocore-session-1693297579"
}
```
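If you later want to keep this role in code as well, the same trust policy and AdministratorAccess attachment could be described with Terraform. A minimal sketch, assuming the hypothetical resource names tf_admin and tf_admin_administrator_access and the same 123456789012 account ID placeholder as above. It would have to be applied with credentials other than the role itself, because the role must exist before the provider can assume it.

```hcl
# Hypothetical resource names; 123456789012 is a placeholder account ID.
resource "aws_iam_role" "tf_admin" {
  name = "tf-admin"

  # same trust policy as the JSON above: the account root as the principal
  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Sid       = "Statement1"
        Effect    = "Allow"
        Principal = { AWS = "arn:aws:iam::123456789012:root" }
        Action    = "sts:AssumeRole"
      }
    ]
  })
}

# attach the AWS managed AdministratorAccess policy to the role
resource "aws_iam_role_policy_attachment" "tf_admin_administrator_access" {
  role       = aws_iam_role.tf_admin.name
  policy_arn = "arn:aws:iam::aws:policy/AdministratorAccess"
}
```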

Okay, now we can move on to Terraform itself.

Configuring a Terraform project

First, set the versions of Terraform and of the provider that we will use.

The latest version of the AWS provider can be found in the Terraform Registry, and the latest version of Terraform itself on its releases page.

Create a versions.tf file:

```hcl
terraform {
  required_version = ">= 1.5"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = ">= 5.14.0"
    }
  }
}
```
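The >= constraints above accept any newer release, including a future major version of the AWS provider that may contain breaking changes. If you prefer to stay within the current major versions, the pessimistic constraint operator ~> can be used instead. A sketch of the same versions.tf under that assumption:

```hcl
terraform {
  # 1.5 or newer, but below 2.0
  required_version = "~> 1.5"

  required_providers {
    aws = {
      source = "hashicorp/aws"
      # 5.14 or newer, but below 6.0
      version = "~> 5.14"
    }
  }
}
```

Either way, the exact provider version chosen by terraform init is recorded in the .terraform.lock.hcl dependency lock file.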

Add a providers.tf file with the AWS connection parameters:

```hcl
provider "aws" {
  region = "us-east-1"

  assume_role {
    role_arn = "arn:aws:iam::492***148:role/tf-admin"
  }
}
```
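Alternatively, since the tf-admin profile already exists in ~/.aws/config, the provider could reuse that profile instead of its own assume_role block. A sketch, assuming the profile is configured exactly as above; the trade-off is that every machine and CI runner then needs the same profile defined, while the assume_role block keeps the role ARN in the code.

```hcl
provider "aws" {
  region = "us-east-1"

  # use the tf-admin profile from ~/.aws/config: its role_arn and
  # source_profile settings make the AWS SDK perform the AssumeRole
  profile = "tf-admin"
}
```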

Now we need to check that Terraform can perform the AssumeRole.

Run initialization:

```
$ terraform init

Initializing the backend...

Initializing provider plugins...
- Finding hashicorp/aws versions matching ">= 5.14.0"...
- Installing hashicorp/aws v5.14.0...
- Installed hashicorp/aws v5.14.0 (signed by HashiCorp)
...
```

And run terraform plan:

```
$ terraform plan

No changes. Your infrastructure matches the configuration.

Terraform has compared your real infrastructure against your configuration and found no differences, so no changes are needed.
```
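"No changes" only shows that the provider managed to authenticate. To see which identity it actually used, a small sketch, assuming a hypothetical output named caller_arn, can read the aws_caller_identity data source:

```hcl
# returns the identity of the credentials the provider ended up with
data "aws_caller_identity" "current" {}

output "caller_arn" {
  # should be an assumed-role ARN for tf-admin, as in the CLI check above
  value = data.aws_caller_identity.current.arn
}
```

With this in place, terraform plan should list the ARN under "Changes to Outputs", and it should match the assumed-role ARN returned by aws sts get-caller-identity.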

Creating the S3 bucket and DynamoDB table for the backend

OK, Terraform can connect to our AWS account, so let’s proceed.

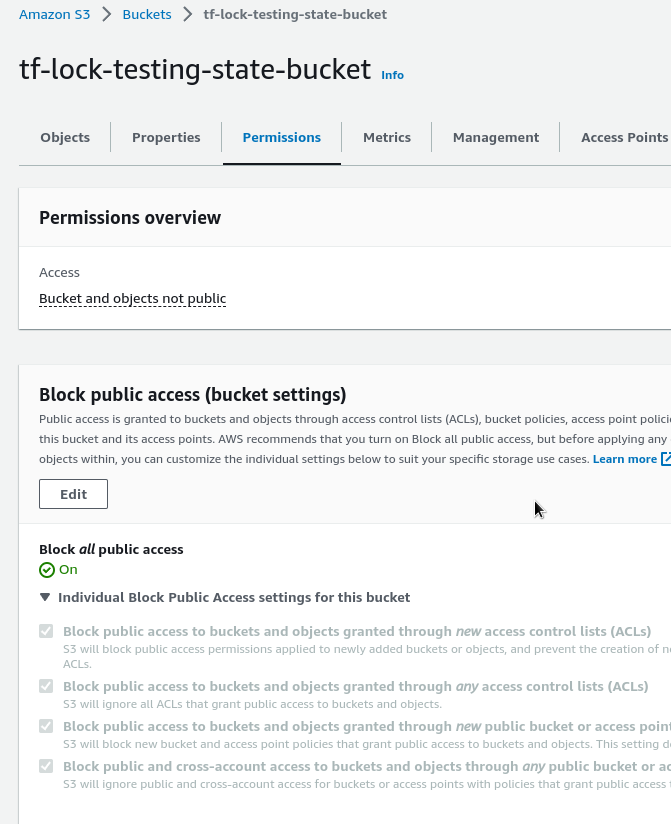

For the bucket where the state files will be stored, we need:

- encryption: enabled by default for AWS S3, but can be configured with a custom key from AWS KMS

- access control: block public access to objects in the bucket

- versioning: enable versioning to keep a history of changes in the state files

Create a backend.tf file and describe the KMS key and the bucket resources (S3 bucket names are global across all AWS accounts, so use your own name):

```hcl
resource "aws_kms_key" "tf_lock_testing_state_kms_key" {
  description             = "This key is used to encrypt bucket objects"
  deletion_window_in_days = 10
}

# create state-files S3 bucket
resource "aws_s3_bucket" "tf_lock_testing_state_bucket" {
  bucket = "tf-lock-testing-state-bucket"

  lifecycle {
    prevent_destroy = true
  }
}

# enable S3 bucket versioning
resource "aws_s3_bucket_versioning" "tf_lock_testing_state_versioning" {
  bucket = aws_s3_bucket.tf_lock_testing_state_bucket.id

  versioning_configuration {
    status = "Enabled"
  }
}

# enable S3 bucket encryption
resource "aws_s3_bucket_server_side_encryption_configuration" "tf_lock_testing_state_encryption" {
  bucket = aws_s3_bucket.tf_lock_testing_state_bucket.id

  rule {
    apply_server_side_encryption_by_default {
      kms_master_key_id = aws_kms_key.tf_lock_testing_state_kms_key.arn
      sse_algorithm     = "aws:kms"
    }
    bucket_key_enabled = true
  }
}

# block S3 bucket public access
resource "aws_s3_bucket_public_access_block" "tf_lock_testing_state_acl" {
  bucket = aws_s3_bucket.tf_lock_testing_state_bucket.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}
```
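Optionally, automatic rotation can be enabled for the KMS key. This is not part of the original setup; a sketch of the same aws_kms_key resource with the provider's enable_key_rotation argument set:

```hcl
resource "aws_kms_key" "tf_lock_testing_state_kms_key" {
  description             = "This key is used to encrypt bucket objects"
  deletion_window_in_days = 10

  # let AWS KMS rotate the key material automatically
  enable_key_rotation = true
}
```

Rotation keeps older key material available, so objects encrypted before a rotation can still be decrypted.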

In the same file, add a DynamoDB table resource for the state lock. The S3 backend requires the table’s partition key to be named LockID, with the String (S) type:

```hcl
# ...

# create DynamoDB table
resource "aws_dynamodb_table" "tf_lock_testing_state_ddb_table" {
  name         = "tf-lock-testing-state-ddb-table"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```
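The bucket is protected with prevent_destroy, but the table is not, and deleting it would break locking for every configuration using this backend. A sketch of the same table resource with that lifecycle rule added (an addition, not part of the original setup):

```hcl
resource "aws_dynamodb_table" "tf_lock_testing_state_ddb_table" {
  name         = "tf-lock-testing-state-ddb-table"
  billing_mode = "PAY_PER_REQUEST"
  # the S3 backend expects this exact key name with the String type
  hash_key = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }

  # like the bucket, refuse to destroy the table from this configuration
  lifecycle {
    prevent_destroy = true
  }
}
```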

Check if everything is correct:

```
$ terraform plan

Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # aws_dynamodb_table.tf_lock_testing_state_ddb_table will be created
  + resource "aws_dynamodb_table" "tf_lock_testing_state_ddb_table" {
      + arn          = (known after apply)
      + billing_mode = "PAY_PER_REQUEST"
      + hash_key     = "LockID"
...

Plan: 5 to add, 0 to change, 0 to destroy.
```

And run terraform apply to create the resources:

```
$ terraform apply
...
Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes
...
Apply complete! Resources: 5 added, 0 changed, 0 destroyed.
```

Check the bucket:

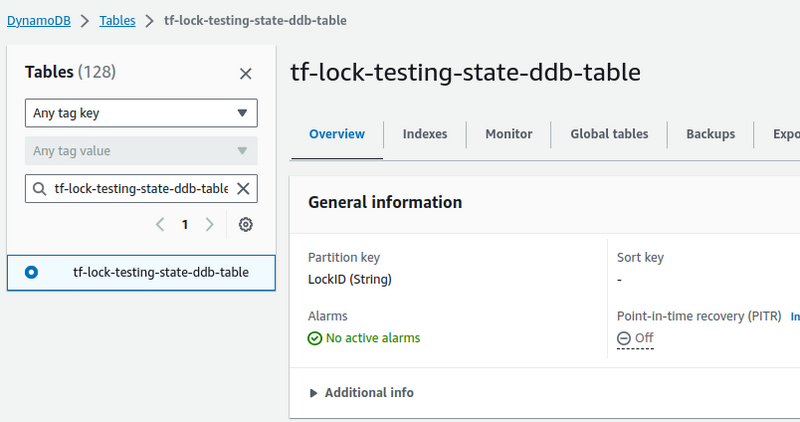

And the DynamoDB table:

Configuring Terraform Backend and State Lock

Now we can add the backend configuration, with the dynamodb_table parameter to enable state locking.

Add a terraform.backend.s3 block to the backend.tf file:

```hcl
terraform {
  backend "s3" {
    bucket         = "tf-lock-testing-state-bucket"
    key            = "tf-lock-testing-state-bucket.tfstate"
    region         = "us-east-1"
    dynamodb_table = "tf-lock-testing-state-ddb-table"
    encrypt        = true
  }
}

# ...
```
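The backend block cannot reference variables or locals, so its values are hardcoded. If the same code is later used for several environments, as in the Dev/Prod planning post mentioned at the beginning, Terraform’s partial backend configuration lets you keep an empty backend "s3" {} block in the code and pass the values at init time. A sketch, assuming a hypothetical backend.hcl file next to the code:

```hcl
# backend.hcl (hypothetical file name): settings passed to terraform init
bucket         = "tf-lock-testing-state-bucket"
key            = "tf-lock-testing-state-bucket.tfstate"
region         = "us-east-1"
dynamodb_table = "tf-lock-testing-state-ddb-table"
encrypt        = true
```

The backend block in backend.tf is then reduced to backend "s3" {}, and the file is passed with terraform init -backend-config=backend.hcl.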

Run terraform init again, and copy the local state into the bucket:

```
$ terraform init

Initializing the backend...
Acquiring state lock. This may take a few moments...
Do you want to copy existing state to the new backend?
...
  Enter a value: yes

Releasing state lock. This may take a few moments...

Successfully configured the backend "s3"! Terraform will automatically
use this backend unless the backend configuration changes.

Initializing provider plugins...
- Reusing previous version of hashicorp/aws from the dependency lock file
- Using previously-installed hashicorp/aws v5.14.0

Terraform has been successfully initialized!
```

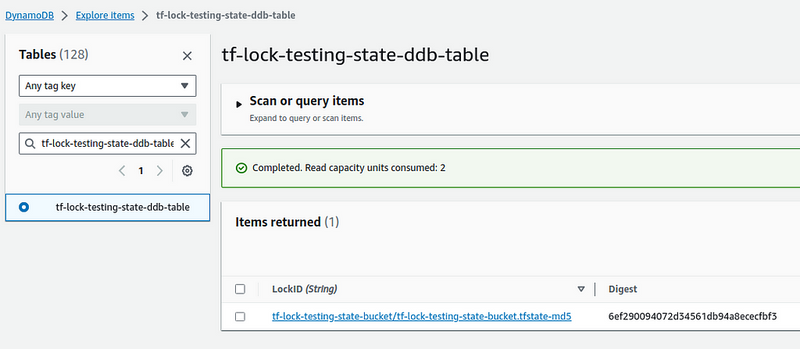

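Check the DynamoDB table again: there should be a record for our state file here (the S3 backend keeps an MD5 digest of the state in the table, in addition to any active lock):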

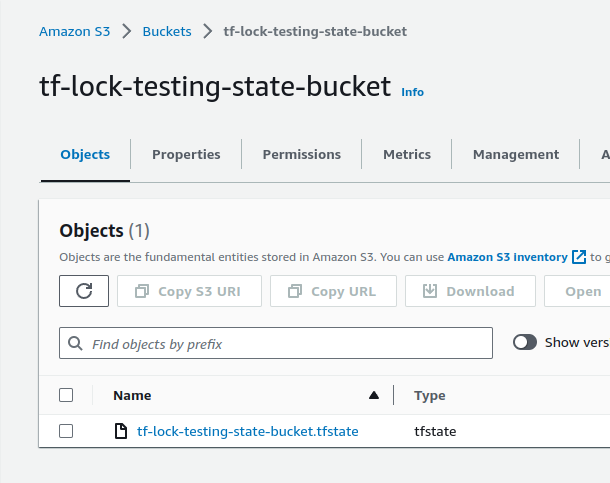

And the state file itself in the S3 bucket:

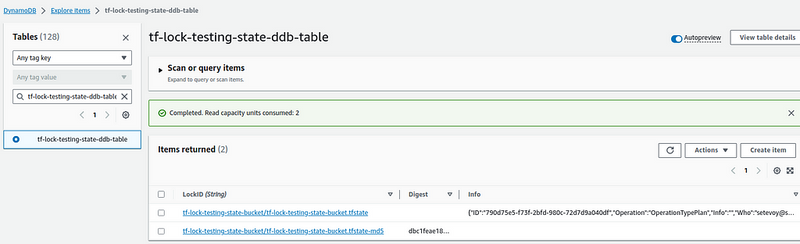

If you look at the DynamoDB table while plan or apply is running, you can see the lock itself, with the Operation and Who fields describing the operation currently in progress.

Testing State Lock
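Creating, reading, and deleting this record, plus reading and writing the state object, is essentially all the S3 backend does, so the stricter permissions mentioned in the IAM Role section can start from a small policy. A sketch, assuming the permission list from the S3 backend documentation and the hypothetical names tf_backend_access / tf-backend-access; it only grants access to the backend, so the role would still need separate permissions for the resources Terraform manages.

```hcl
# Hypothetical policy: backend access only, no rights to manage other resources.
data "aws_iam_policy_document" "tf_backend_access" {
  # list the bucket to find the state file
  statement {
    actions   = ["s3:ListBucket"]
    resources = [aws_s3_bucket.tf_lock_testing_state_bucket.arn]
  }

  # read and write the state object itself
  statement {
    actions   = ["s3:GetObject", "s3:PutObject"]
    resources = ["${aws_s3_bucket.tf_lock_testing_state_bucket.arn}/tf-lock-testing-state-bucket.tfstate"]
  }

  # create, read, and delete records in the lock table
  statement {
    actions = [
      "dynamodb:DescribeTable",
      "dynamodb:GetItem",
      "dynamodb:PutItem",
      "dynamodb:DeleteItem",
    ]
    resources = [aws_dynamodb_table.tf_lock_testing_state_ddb_table.arn]
  }

  # the bucket encrypts objects with the custom KMS key by default
  statement {
    actions   = ["kms:Encrypt", "kms:Decrypt", "kms:GenerateDataKey"]
    resources = [aws_kms_key.tf_lock_testing_state_kms_key.arn]
  }
}

resource "aws_iam_policy" "tf_backend_access" {
  name   = "tf-backend-access"
  policy = data.aws_iam_policy_document.tf_backend_access.json
}
```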

Add a main.tf file with an EC2 instance resource:

```hcl
resource "aws_instance" "ec2_lock_test" {
  # AMI IDs differ between regions: use an AMI available in us-east-1
  ami           = "ami-0d2fcfe4f5c4c5b56"
  instance_type = "t2.micro"

  tags = {
    Name = "EC2 Instance with remote state"
  }
}
```

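Copy all the project’s files to a new directory (cp will complain that it cannot copy test-lock into itself; the error is harmless, and the other files are still copied):

```
$ mkdir test-lock
$ cp -r * test-lock/
cp: cannot copy a directory, 'test-lock', into itself, 'test-lock/test-lock'
```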

In the current directory, run terraform apply, but don’t answer yes, so that the lock it created in DynamoDB stays in place:

```
$ terraform apply
Acquiring state lock. This may take a few moments...
...
```

Go to the second directory and run init and apply there:

```
$ cd test-lock/
$ terraform init && terraform apply
...
Acquiring state lock. This may take a few moments...
╷
│ Error: Error acquiring the state lock
│
│ Error message: ConditionalCheckFailedException: The conditional request failed
│ Lock Info:
│ ID: 98dd894b-065f-8f63-f695-d4dcea702807
│ Path: tf-lock-testing-state-bucket/tf-lock-testing-state-bucket.tfstate
│ Operation: OperationTypeApply
...
```

And here we get an error acquiring the lock, because another process is already using our state file.

Terraform State Lock tricks

force-unlock

Sometimes Terraform fails to release the lock, for example, if the network connection drops during an operation.

In that case, you can release it manually with force-unlock, passing the Lock ID as an argument. Do this only when you are sure no other Terraform process is still working with this state:

```
$ terraform force-unlock 98dd894b-065f-8f63-f695-d4dcea702807
Do you really want to force-unlock?
Terraform will remove the lock on the remote state.
...
Enter a value: yes

Terraform state has been successfully unlocked!
```

lock-timeout

Sometimes Terraform should not fail as soon as it sees that a lock record already exists. For example, in a CI pipeline two jobs can be started at the same time, and then the second one will stop with an error.

In this case, we can add the -lock-timeout option: Terraform will keep retrying to acquire the lock for the specified period of time before giving up:

```
$ terraform apply -lock-timeout=180s
```

Done.

Originally published at https://rtfm.co.ua on September 3, 2023.
