An example of creating a Terraform module to automate log collection from AWS Load Balancers in Grafana Loki.

For details on how the scheme works, see the post Grafana Loki: collecting AWS LoadBalancer logs from S3 with Promtail Lambda.

In short, ALB writes logs to an S3 bucket, from where they are picked up by a Lambda function with Promtail and sent to Grafana Loki.

What’s the idea with the Terraform module?

- we have an EKS environment — currently one cluster, but later there may be several

- there are applications — backend APIs, monitoring for devops, etc.

- each application can have one or more of its own environments — Dev, Staging, Prod

- for applications, there is AWS ALB, from which we need to collect logs

The Terraform code for collecting logs is fairly large: aws_s3_bucket, aws_s3_bucket_public_access_block, aws_s3_bucket_policy, aws_s3_bucket_notification, and the Lambda functions.

At the same time, we have several projects in different teams, and each project can have several environments: some have only Ops or only Dev, others have Dev, Staging, and Prod.

So, to avoid repeating the code in each project and to be able to change the configuration in one place, I decided to move this code into a dedicated module, then use it in the projects and pass the necessary parameters.

But the main reason is that creating logging resources across several kinds of environments gets messy: one environment is that of the EKS cluster itself, and the other is that of the services, such as monitoring or the Backend API.

That is, I want to do something like this:

- in the root module of the project, we have a variable for the EKS cluster, $environment, with the value ops/dev/prod (currently we have one cluster and, accordingly, one environment == "ops")

- pass another variable to the logging module from the root module, app_environments, with the values dev/staging/prod, plus the names of services, commands, etc.

So, in the root module, that is, in the project, we will call a new ALB Logs module in a loop for each value from the environment, and inside the module, we will create resources in a loop for each of the app_environments.
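The nested loop described here can be sketched roughly as follows. This is only an illustration under assumptions: the `eks_environments` variable and the `alb_logs` module label are hypothetical (the project itself has a single `environment` string), and the input names are the ones the module ends up with later in this post.

```hcl
# Hypothetical variable, not in the original project.
variable "eks_environments" {
  type        = set(string)
  description = "EKS environments to create ALB log collection for"
  default     = ["ops"]
}

module "alb_logs" {
  source = "./modules/alb-s3-logs"

  # outer loop: one module instance per EKS environment
  for_each = var.eks_environments
  eks_env  = each.value

  # inner loop: the module iterates over these itself with for_each
  app_environments = ["dev", "staging", "prod"]

  eks_version = local.env_version
  component   = "backend"
  application = "api"

  # with more than one EKS environment, the VPC data and the Loki URL
  # would have to be looked up per environment; here they are reused as-is
  vpc_id                    = local.vpc_out.vpc_id
  vpc_private_subnets_cidrs = local.vpc_out.vpc_private_subnets_cidrs
  vpc_private_subnets_ids   = local.vpc_out.vpc_private_subnets_ids
  loki_write_address        = local.loki_write_address
  aws_account_id            = data.aws_caller_identity.current.account_id
}
```

With a single cluster, this yields one instance, module.alb_logs["ops"], which in turn creates one set of resources per application environment.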

First, we’ll do everything locally in the existing project, and then we’ll upload the new module to a GitHub repository and connect it to the project from the repository.

Creating a module

We have the following file structure in the project’s repository. We will test in the project that creates resources for monitoring, but which project it is doesn’t matter: I’m using this one only because it already has a backend and other Terraform parameters configured:

$ tree .
.
|-- Makefile
|-- acm.tf
|-- backend.hcl
|-- backend.tf
|-- envs
|   |-- ops
|   |   `-- ops-1-28.tfvars
|-- iam.tf
|-- lambda.tf
|-- outputs.tf
|-- providers.tf
|-- s3.tf
|-- variables.tf
`-- versions.tf

Create a directory for the modules and a directory for the module itself:

$ mkdir -p modules/alb-s3-logs

Creating an S3 bucket

Let’s start with a simple bucket — describe it in the file modules/alb-s3-logs/s3.tf:

resource "aws_s3_bucket" "alb_s3_logs" {
  bucket = "test-module-alb-logs"
}

Next, include it in the main module, in the project itself in the main.tf:

module "alb_logs_test" {
  source = "./modules/alb-s3-logs"
}

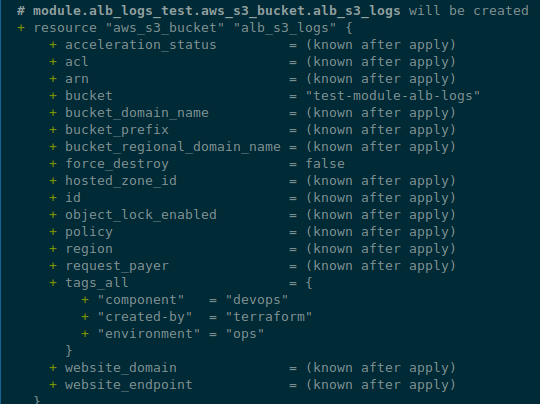

Run terraform init and check with terraform plan:

Good.

Next, we need to add a few inputs to our new module (see Terraform: Modules, Outputs, and Variables) to form the name of the bucket, and to have values for app_environments.

Create a new file modules/alb-s3-logs/variables.tf:

variable "eks_env" {
  type        = string
  description = "EKS environment passed from a root module (the 'environment' variable)"
}

variable "eks_version" {
  type        = string
  description = "EKS version passed from a root module"
}

variable "component" {
  type        = string
  description = "A component passed from a root module"
}

variable "application" {
  type        = string
  description = "An application passed from a root module"
}

variable "app_environments" {
  type        = set(string)
  description = "An application's environments"
  default = [
    "dev",
    "prod"
  ]
}

Next, in the module, update the aws_s3_bucket resource - add for_each (see Terraform: count, for_each, and for loops) for all values from the app_environments:

resource "aws_s3_bucket" "alb_s3_logs" {
  # ops-1-28-backend-api-dev-alb-logs
  # <eks_env>-<eks_version>-<component>-<application>-<app_env>-alb-logs
  for_each = var.app_environments

  bucket = "${var.eks_env}-${var.eks_version}-${var.component}-${var.application}-${each.value}-alb-logs"

  # to drop a bucket, set to `true` first
  # apply
  # then remove the block
  force_destroy = false
}

Or we can do better — move the formation of the bucket names to locals:

locals {
  # ops-1-28-backend-api-dev-alb-logs
  # <eks_env>-<eks_version>-<component>-<application>-<app_env>-alb-logs
  bucket_names = { for env in var.app_environments : env => "${var.eks_env}-${var.eks_version}-${var.component}-${var.application}-${env}-alb-logs" }
}

resource "aws_s3_bucket" "alb_s3_logs" {
  for_each = local.bucket_names

  bucket = each.value

  # to drop a bucket, set to `true` first
  # run `terraform apply`
  # then remove the block
  # and run `terraform apply` again
  force_destroy = false
}

Here, we take each element from the app_environments set, bind it to the env variable, and build a map named bucket_names, where each key is a value of env and each value is the name of the bucket.
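To make the shape of that map concrete, here is a small standalone sketch. The `example_*` names and the literal values are illustrative only and are not part of the module:

```hcl
locals {
  # stand-in for var.app_environments
  example_envs = toset(["dev", "prod"])

  # same kind of for expression as in bucket_names, with the prefix fixed
  example_names = { for env in local.example_envs : env => "ops-1-28-backend-api-${env}-alb-logs" }
}

output "example_names" {
  # {
  #   "dev"  = "ops-1-28-backend-api-dev-alb-logs"
  #   "prod" = "ops-1-28-backend-api-prod-alb-logs"
  # }
  value = local.example_names
}
```

Because for_each on the bucket resource receives this map, each.key is the environment and each.value is the bucket name.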

Update the module call in the project — add parameters:

module "alb_logs_test" {
  source = "./modules/alb-s3-logs"

  #bucket = "${var.eks_env}-${var.eks_version}-${var.component}-${var.application}-${each.value}-alb-logs"
  # i.e. 'ops-1-28-backend-api-dev-alb-logs'
  eks_env     = var.environment
  eks_version = local.env_version
  component   = "backend"
  application = "api"
}

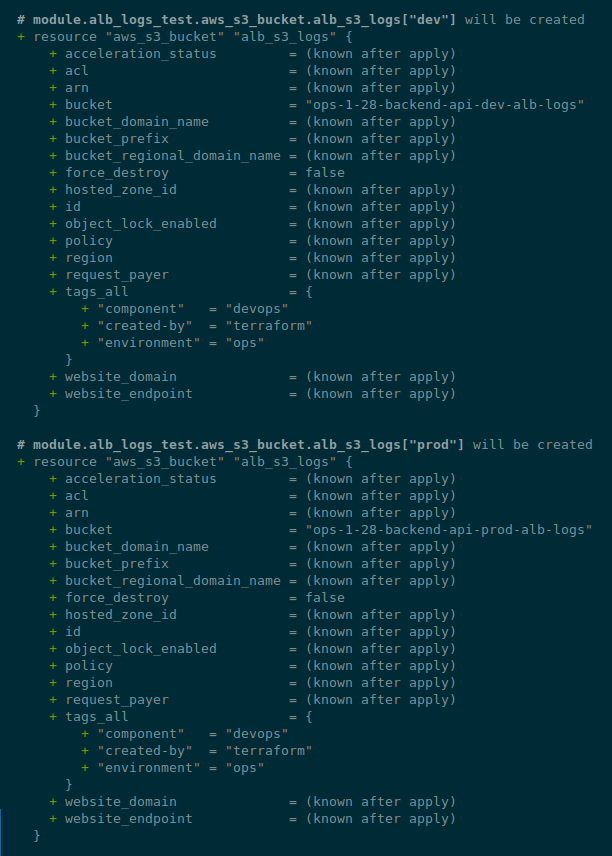

Let’s check again:

Creating an aws_s3_bucket_public_access_block resource

Add an aws_s3_bucket_public_access_block resource to the file modules/alb-s3-logs/s3.tf - we go through all the buckets from the aws_s3_bucket.alb_s3_logs resource in a loop:

...
# block S3 bucket public access
resource "aws_s3_bucket_public_access_block" "alb_s3_logs_backend_acl" {
  for_each = aws_s3_bucket.alb_s3_logs

  bucket = each.value.id

  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

Creating a Promtail Lambda

Next, let’s add the creation of Lambda functions — each bucket will have its own function with its own variables for labels in Loki.

That is, for the “ops-1-28-backend-api-dev-alb-logs” bucket we will create a Promtail Lambda instance with “component=backend, logtype=alb, environment=dev” in its EXTRA_LABELS variable.

To create functions, we need new variables:

- vpc_id: for a Lambda Security Group

- vpc_private_subnets_cidrs: for the rules in the Security Group - where access will be allowed

- vpc_private_subnets_ids: for the functions themselves - in which subnets to run them

- promtail_image: a Docker image URL to an AWS ECR, from which Lambda will be created

- loki_write_address: for Promtail - where to send logs

We get the VPC data in the project itself from the data "terraform_remote_state" resource (see Terraform: terraform_remote_state - getting Outputs of other state files), which takes it from another project that manages our VPCs:

# connect to the atlas-vpc Remote State to get the 'outputs' data
data "terraform_remote_state" "vpc" {
  backend = "s3"

  config = {
    bucket         = "tf-state-backend-atlas-vpc"
    key            = "${var.environment}/atlas-vpc-${var.environment}.tfstate"
    region         = var.aws_region
    dynamodb_table = "tf-state-lock-atlas-vpc"
  }
}

And then in the locals a vpc_out object is created with the VPC data. A URL for Loki is also generated there:

locals {
  ...
  # get VPC info
  vpc_out = data.terraform_remote_state.vpc.outputs

  # will be used in Lambda Promtail 'LOKI_WRITE_ADDRESS' env. variable
  # will create an URL: 'https://logger.1-28.ops.example.co:443/loki/api/v1/push'
  loki_write_address = "https://logger.${replace(var.eks_version, ".", "-")}.${var.environment}.example.co:443/loki/api/v1/push"
}
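As a quick illustration of how the URL is assembled, here is a standalone sketch with literal values. The `example_*` names and the "1.28" and "ops" inputs are assumptions for the example, not the project's actual variables:

```hcl
locals {
  # illustrative values only; in the project they come from variables
  example_eks_version = "1.28"
  example_environment = "ops"

  # replace() swaps every "." for "-", so "1.28" becomes "1-28"
  example_loki_url = "https://logger.${replace(local.example_eks_version, ".", "-")}.${local.example_environment}.example.co:443/loki/api/v1/push"
}

output "example_loki_url" {
  # https://logger.1-28.ops.example.co:443/loki/api/v1/push
  value = local.example_loki_url
}
```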

Add new variables to the variables.tf of the module:

...
variable "vpc_id" {
  type        = string
  description = "ID of the VPC where to create security group"
}

variable "vpc_private_subnets_cidrs" {
  type        = list(string)
  description = "List of IPv4 CIDR ranges to use in Security Group rules and for Lambda functions"
}

variable "vpc_private_subnets_ids" {
  type        = list(string)
  description = "List of subnet ids when Lambda Function should run in the VPC. Usually private or intra subnets"
}

variable "promtail_image" {
  type        = string
  description = "Docker image URL in AWS ECR to create the Promtail Lambda from"
  default     = "492***148.dkr.ecr.us-east-1.amazonaws.com/lambda-promtail:latest"
}

variable "loki_write_address" {
  type        = string
  description = "Loki URL to push logs from Promtail Lambda"
}

Creating a security_group_lambda resource

Create a modules/alb-s3-logs/lambda.tf file, and start with the security_group_lambda module, based on terraform-aws-modules/security-group/aws, which will create a Security Group for us. We have one SG for all such logging functions:

data "aws_prefix_list" "s3" {
  filter {
    name   = "prefix-list-name"
    values = ["com.amazonaws.us-east-1.s3"]
  }
}

module "security_group_lambda" {
  source  = "terraform-aws-modules/security-group/aws"
  version = "~> 5.1.0"

  name        = "${var.eks_env}-${var.eks_version}-loki-logger-lambda-sg"
  description = "Security Group for Lambda Egress"

  vpc_id = var.vpc_id

  egress_cidr_blocks      = var.vpc_private_subnets_cidrs
  egress_ipv6_cidr_blocks = []
  egress_prefix_list_ids  = [data.aws_prefix_list.s3.id]

  ingress_cidr_blocks      = var.vpc_private_subnets_cidrs
  ingress_ipv6_cidr_blocks = []

  egress_rules  = ["https-443-tcp"]
  ingress_rules = ["https-443-tcp"]
}
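The prefix list lookup above hard-codes us-east-1. If the module is ever used in another region, one possible variant is to take the region from a module input. This is a sketch only: the `aws_region` variable is hypothetical and is not part of the module's variables.tf as shown in this post.

```hcl
# Hypothetical module input, not present in the original variables.tf.
variable "aws_region" {
  type        = string
  description = "AWS region, used to build the S3 prefix list name"
  default     = "us-east-1"
}

# Same lookup as in the original, with the region taken from the variable.
data "aws_prefix_list" "s3" {
  filter {
    name   = "prefix-list-name"
    values = ["com.amazonaws.${var.aws_region}.s3"]
  }
}
```

This would replace the existing data block rather than sit next to it, since two data sources cannot share the same type and name.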

In the main.tf file of the project, add new parameters to the module:

module "alb_logs_test" {
  source = "./modules/alb-s3-logs"

  #bucket = "${var.eks_env}-${var.eks_version}-${var.component}-${var.application}-${each.value}-alb-logs"
  # i.e. 'ops-1-28-backend-api-dev-alb-logs'
  eks_env     = var.environment
  eks_version = local.env_version
  component   = "backend"
  application = "api"

  vpc_id                    = local.vpc_out.vpc_id
  vpc_private_subnets_cidrs = local.vpc_out.vpc_private_subnets_cidrs
  vpc_private_subnets_ids   = local.vpc_out.vpc_private_subnets_ids

  loki_write_address = local.loki_write_address
}

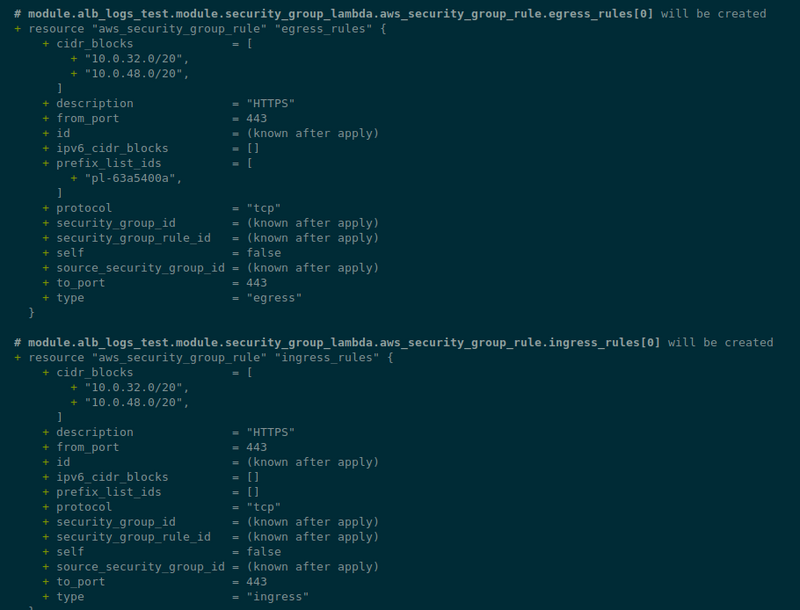

Run terraform init and terraform plan:

Now we can add a Lambda.

Creating a promtail_lambda module

Next is the function itself.

In it, we need to specify allowed_triggers: the bucket that is allowed to invoke the function when new objects are created in it. And for each bucket we want a separate function with its own variables for labels in Loki.

To do this, we create a module "promtail_lambda" block, where we again loop through all the buckets, as we did with aws_s3_bucket_public_access_block.

But in the function parameters, we need to pass the current value from the app_environments - "dev" or "prod".

To do this, we can use each.key: when we create the resource "aws_s3_bucket" "alb_s3_logs" with for_each = var.app_environments or for_each = local.bucket_names, we get a map in which each key is a value from var.app_environments, and each value holds the details of the bucket.

Let’s see what it looks like.

In our module, add an output - you can do it directly in the file modules/alb-s3-logs/s3.tf:

output "buckets" {
  value = aws_s3_bucket.alb_s3_logs
}

In the root module, in the project itself, in the main.tf file, add another output that uses the output of the module:

...
output "alb_logs_buckets" {
  value = module.alb_logs_test.buckets
}

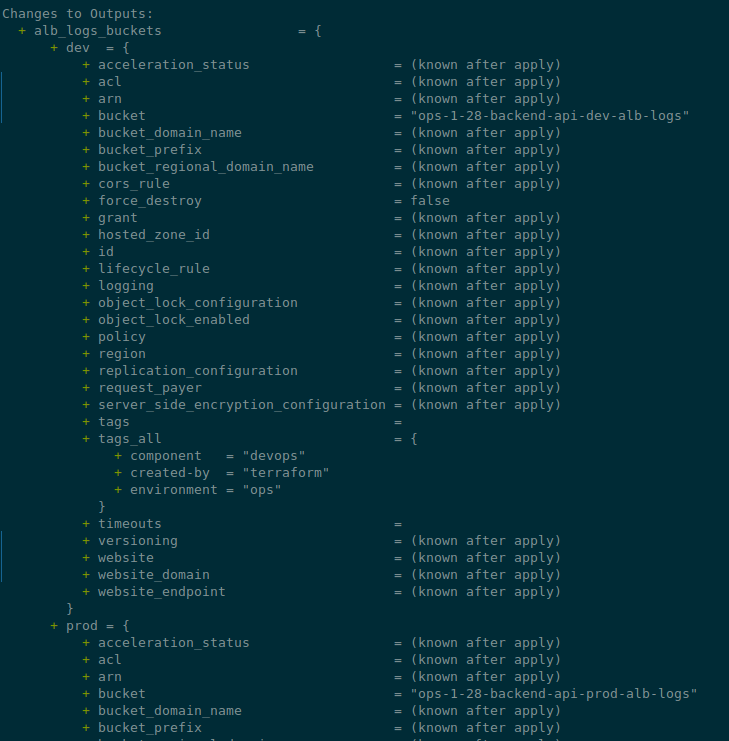

Run terraform plan, and we have the following result:

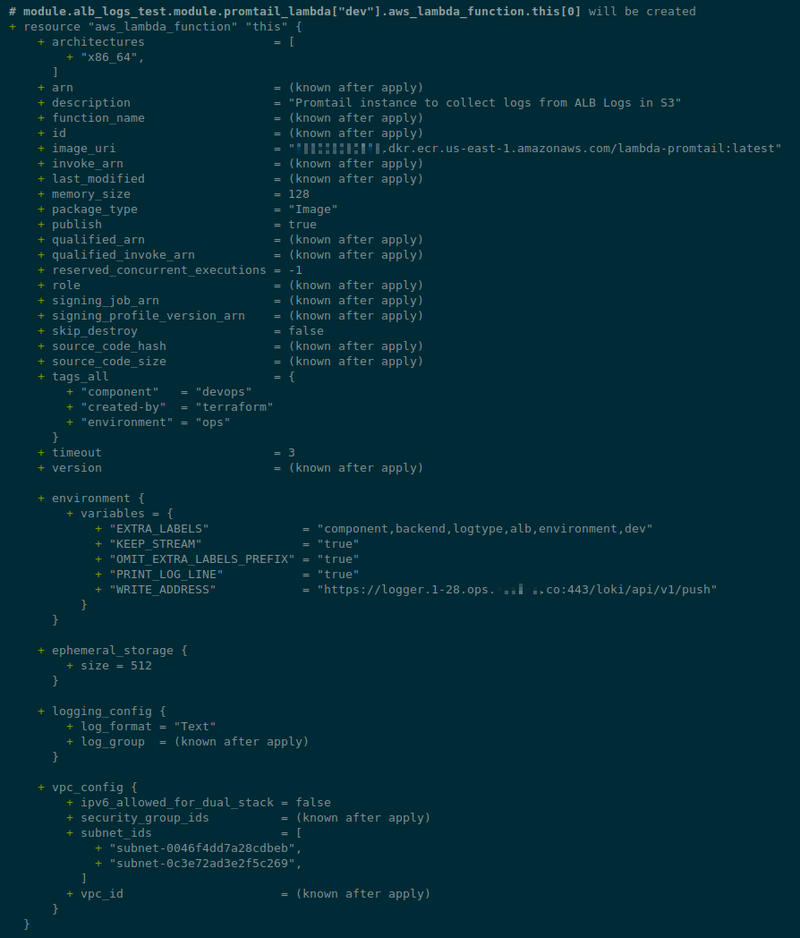
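Since the full bucket objects are verbose, a narrower output can make the key/value shape easier to see. A minimal sketch for the module, where the output name `bucket_ids` is my own choice and not part of the original code:

```hcl
output "bucket_ids" {
  description = "Map of application environment => bucket name"

  # env is the for_each key ("dev", "prod"), bucket is the resource object
  value = { for env, bucket in aws_s3_bucket.alb_s3_logs : env => bucket.id }
}
```

With the default app_environments, this should produce a map with the keys "dev" and "prod", each pointing at the corresponding bucket name.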

So, our Lambda module will be like this:

...
module "promtail_lambda" {
  source  = "terraform-aws-modules/lambda/aws"
  version = "~> 7.2.1"

  # each.key: dev
  # each.value.id: ops-1-28-backend-api-dev-alb-logs
  for_each = aws_s3_bucket.alb_s3_logs

  # <eks_env>-<eks_version>-<component>-<application>-<app_env>-alb-logs-loki-logger
  # bucket name: ops-1-28-backend-api-dev-alb-logs
  # lambda name: ops-1-28-backend-api-dev-alb-logs-loki-logger
  function_name = "${each.value.id}-loki-logger"
  description   = "Promtail instance to collect logs from ALB Logs in S3"

  create_package = false
  # https://github.com/terraform-aws-modules/terraform-aws-lambda/issues/36
  publish = true

  image_uri     = var.promtail_image
  package_type  = "Image"
  architectures = ["x86_64"]

  # labels: "component,backend,logtype,alb,environment,dev"
  # will create: component=backend, logtype=alb, environment=dev
  environment_variables = {
    EXTRA_LABELS             = "component,${var.component},logtype,alb,environment,${each.key}"
    KEEP_STREAM              = "true"
    OMIT_EXTRA_LABELS_PREFIX = "true"
    PRINT_LOG_LINE           = "true"
    WRITE_ADDRESS            = var.loki_write_address
  }

  vpc_subnet_ids         = var.vpc_private_subnets_ids
  vpc_security_group_ids = [module.security_group_lambda.security_group_id]
  attach_network_policy  = true

  # bucket name: ops-1-28-backend-api-dev-alb-logs
  allowed_triggers = {
    S3 = {
      principal  = "s3.amazonaws.com"
      source_arn = "arn:aws:s3:::${each.value.id}"
    }
  }
}

Here, each.value.id holds the name of a bucket, and ${each.key} in the EXTRA_LABELS string holds the "dev" or "prod" value.
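The EXTRA_LABELS value is a flat, comma-separated list of name,value pairs. If the set of labels grows, the string could be built from a map instead of being written by hand. This is a standalone sketch; the `example_*` locals are illustrative and not part of the module:

```hcl
locals {
  # illustrative labels; in the module the values would come from
  # var.component and each.key
  example_labels = {
    component   = "backend"
    logtype     = "alb"
    environment = "dev"
  }

  # turn each key/value pair into [key, value], flatten, and join with commas
  example_extra_labels = join(",", flatten([for k, v in local.example_labels : [k, v]]))
}

output "example_extra_labels" {
  # maps are iterated in key order, so the result is:
  # component,backend,environment,dev,logtype,alb
  value = local.example_extra_labels
}
```

Note that the pairs come out sorted by key, so the order differs from the hand-written string, while each name still sits next to its value.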

Check — run terraform init && terraform plan:

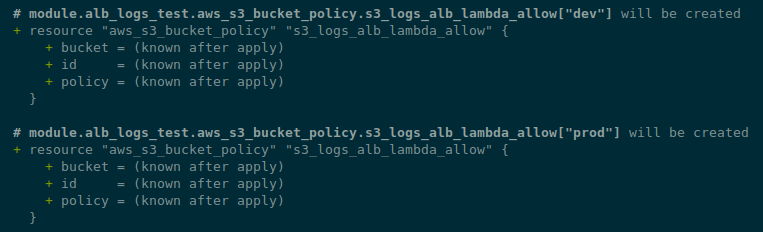

Creating an aws_s3_bucket_policy resource

The next resource we need is an S3 bucket policy that allows the ALBs to write logs and our Lambda functions to read them.

Here we will have two new variables:

- aws_account_id: passed from the root module

- elb_account_id: we can set the default value for now, because we are in only one region

Add to the variables.tf of the module:

...

variable "aws_account_id" {
  type        = string
  description = "AWS account ID"
}

variable "elb_account_id" {
  type        = string
  description = "AWS ELB Account ID to be used in the ALB Logs S3 Bucket Policy"
  # quoted, because the variable is a string: account IDs are identifiers, not numbers
  default = "127311923021"
}
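The default above is the ELB account for us-east-1. As a sketch of a region-independent alternative, the AWS provider has an aws_elb_service_account data source that returns the ELB service account for the provider's current region. The data source label `current` and the local name below are my own choices, not part of the original module:

```hcl
# Look up the AWS-managed ELB account for the region the provider is
# configured for, instead of hard-coding it per region.
data "aws_elb_service_account" "current" {}

locals {
  # Illustrative local (not in the original module): an ARN of the form
  # arn:aws:iam::<elb-account-id>:root, usable as the AWS principal
  # in the bucket policy.
  elb_principal_arn = data.aws_elb_service_account.current.arn
}
```

With this in place, the policy's Principal could reference local.elb_principal_arn, and the elb_account_id variable would no longer be needed.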

And in the modules/alb-s3-logs/s3.tf file describe the aws_s3_bucket_policy resource:

...
resource "aws_s3_bucket_policy" "s3_logs_alb_lambda_allow" {
  for_each = aws_s3_bucket.alb_s3_logs

  bucket = each.value.id

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect = "Allow"
        Principal = {
          AWS = "arn:aws:iam::${var.elb_account_id}:root"
        }
        Action   = "s3:PutObject"
        Resource = "arn:aws:s3:::${each.value.id}/AWSLogs/${var.aws_account_id}/*"
      },
      {
        Effect = "Allow"
        Principal = {
          AWS = module.promtail_lambda[each.key].lambda_role_arn
        }
        Action   = "s3:GetObject"
        Resource = "arn:aws:s3:::${each.value.id}/*"
      }
    ]
  })
}

Here we again use the each.key from our buckets, where we will have the "dev" or "prod" value.

And, accordingly, we can refer to each module "promtail_lambda" instance by the same key, because they are also created in a loop. The full resource address of a function looks like module.alb_logs_test.module.promtail_lambda["dev"].aws_lambda_function.this[0].
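The same key-based lookup also works in bulk. As a sketch, an output in the module could expose every function's ARN per environment; the output name `lambda_function_arns` is hypothetical, while lambda_function_arn is an output of the Lambda module that this post relies on elsewhere:

```hcl
output "lambda_function_arns" {
  description = "Map of application environment => Promtail Lambda ARN"

  # module.promtail_lambda is a map of module instances keyed by
  # "dev", "prod", and so on
  value = { for env, fn in module.promtail_lambda : env => fn.lambda_function_arn }
}
```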

Add the aws_account_id parameter in the root module:

module "alb_logs_test" {
  source = "./modules/alb-s3-logs"
  ...
  vpc_private_subnets_ids = local.vpc_out.vpc_private_subnets_ids

  loki_write_address = local.loki_write_address
  aws_account_id     = data.aws_caller_identity.current.account_id
}

Check with terraform plan:

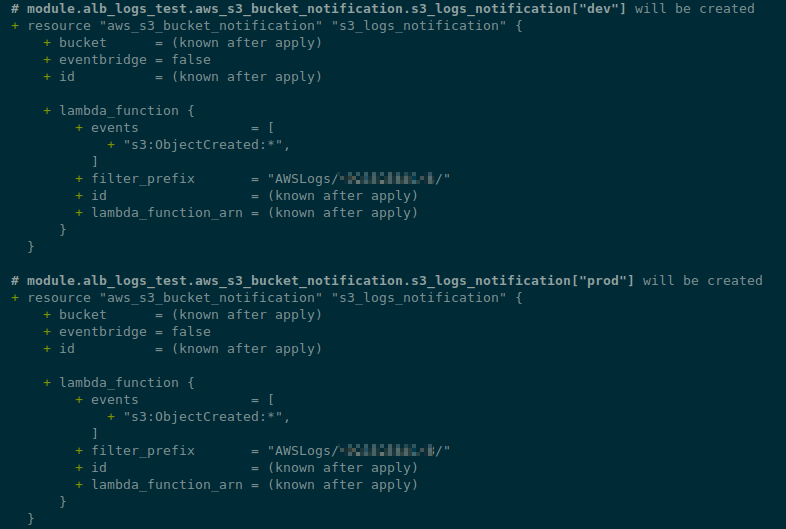

Creating an aws_s3_bucket_notification resource

The last resource is aws_s3_bucket_notification, which will create a notification for a Lambda function when a new object appears in the bucket.

The idea is the same here: a loop through the buckets, using each.key for the environment:

...
resource "aws_s3_bucket_notification" "s3_logs_notification" {
  for_each = aws_s3_bucket.alb_s3_logs

  bucket = each.value.id

  lambda_function {
    lambda_function_arn = module.promtail_lambda[each.key].lambda_function_arn
    events              = ["s3:ObjectCreated:*"]
    filter_prefix       = "AWSLogs/${var.aws_account_id}/"
  }
}
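One thing that may be worth guarding against: the lambda_function_arn reference makes Terraform wait for the function, but not necessarily for the invoke permission that allowed_triggers creates inside the Lambda module. If S3 ever rejects the notification on a first apply because it cannot validate the destination, a variant of the same resource (not an additional one) with an explicit depends_on on the whole module is a possible workaround. This is a sketch under that assumption, not something the setup in this post needed:

```hcl
resource "aws_s3_bucket_notification" "s3_logs_notification" {
  for_each = aws_s3_bucket.alb_s3_logs

  bucket = each.value.id

  lambda_function {
    lambda_function_arn = module.promtail_lambda[each.key].lambda_function_arn
    events              = ["s3:ObjectCreated:*"]
    filter_prefix       = "AWSLogs/${var.aws_account_id}/"
  }

  # wait for everything in the Lambda module, including the permission
  # that lets S3 invoke the function
  depends_on = [module.promtail_lambda]
}
```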

Check it:

And now we have everything ready — let’s deploy and test.

Checking the work of the Promtail Lambda

Deploy it with terraform apply, check the buckets:

$ aws --profile work s3api list-buckets | grep ops-1-28-backend-api-dev-alb-logs
"Name": "ops-1-28-backend-api-dev-alb-logs",

Create a test Deployment, a Service, and an Ingress with the access_logs.s3.bucket=ops-1-28-backend-api-dev-alb-logs attribute:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-demo-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx-demo
  template:
    metadata:
      labels:
        app: nginx-demo
    spec:
      containers:
        - name: nginx-demo-container
          image: nginx
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-demo-service
spec:
  selector:
    app: nginx-demo
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP":80}]'
    alb.ingress.kubernetes.io/load-balancer-attributes: access_logs.s3.enabled=true,access_logs.s3.bucket=ops-1-28-backend-api-dev-alb-logs
spec:
  ingressClassName: alb
  rules:
    - host: test-logs.ops.example.co
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: nginx-demo-service
                port:
                  number: 80

Let’s check it out:

$ kk get ingress example-ingress
NAME              CLASS   HOSTS                      ADDRESS                                                                   PORTS   AGE
example-ingress   alb     test-logs.ops.example.co   k8s-opsmonit-examplei-8f89ccef47-1782090491.us-east-1.elb.amazonaws.com   80      39s

Check the contents of the bucket:

$ aws s3 ls ops-1-28-backend-api-dev-alb-logs/AWSLogs/492***148/
2024-02-20 16:56:54        107 ELBAccessLogTestFile

There is a test file, which means ALB can write logs.

Make requests to the endpoint:

$ curl -I http://test-logs.ops.example.co
HTTP/1.1 200 OK

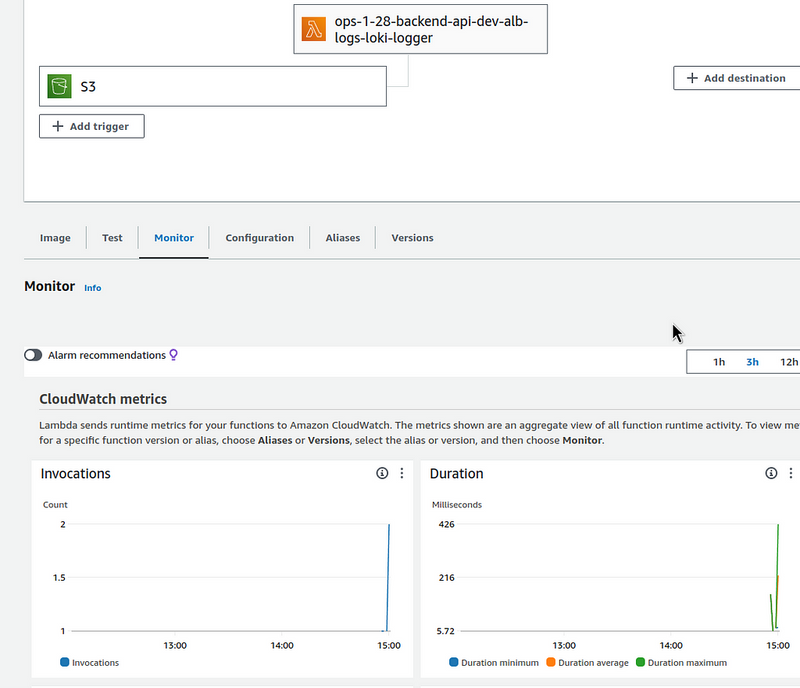

In a couple of minutes, check the corresponding Lambda function:

The invocations are here, all good.

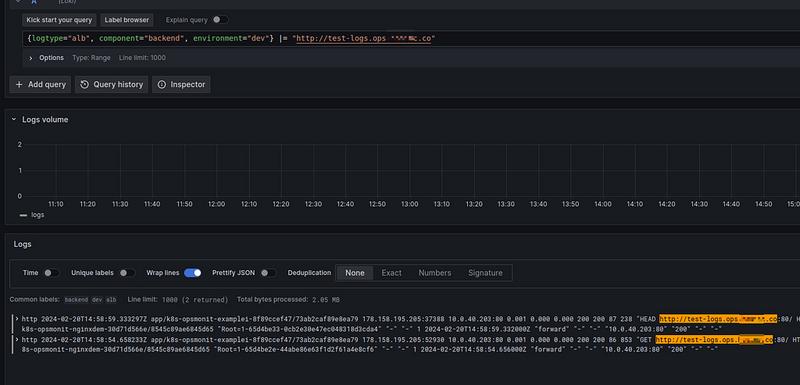

And check the logs in Loki:

Everything is working.

The only thing left to do is to upload our module to a GitHub repository and then use it in some project.

Terraform module from a GitHub repository

Create a new repository and copy the entire module directory, the alb-s3-logs folder, into it:

$ cp -r ../atlas-monitoring/terraform/modules/alb-s3-logs/ .
$ tree .
.
|-- README.md
`-- alb-s3-logs
    |-- lambda.tf
    |-- s3.tf
    `-- variables.tf

2 directories, 4 files

Commit and push:

$ ga -A
$ gm "feat: module for ALB logs collect"
$ git push

And update the source in the project for the module:

module "alb_logs_test" {
  #source = "./modules/alb-s3-logs"
  source = "git@github.com:org-name/atlas-tf-modules//alb-s3-logs"
  ...
  loki_write_address = local.loki_write_address
  aws_account_id     = data.aws_caller_identity.current.account_id
}
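When a module is pulled from Git, it is usually worth pinning it to a specific revision so that a later push to the repository does not silently change what the project deploys. Terraform supports this with the ref argument on Git sources. A sketch, where the "v1.0.0" tag is hypothetical and the inputs are the ones used earlier in this post:

```hcl
module "alb_logs_test" {
  # "v1.0.0" is a hypothetical tag; use a tag or commit that exists in the repo
  source = "git@github.com:org-name/atlas-tf-modules//alb-s3-logs?ref=v1.0.0"

  eks_env     = var.environment
  eks_version = local.env_version
  component   = "backend"
  application = "api"

  vpc_id                    = local.vpc_out.vpc_id
  vpc_private_subnets_cidrs = local.vpc_out.vpc_private_subnets_cidrs
  vpc_private_subnets_ids   = local.vpc_out.vpc_private_subnets_ids

  loki_write_address = local.loki_write_address
  aws_account_id     = data.aws_caller_identity.current.account_id
}
```

After changing the ref, terraform init has to be run again to download the new revision.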

Run terraform init:

$ terraform init
...
Downloading git::ssh://git@github.com/org-name/atlas-tf-modules for alb_logs_test...
- alb_logs_test in .terraform/modules/alb_logs_test/alb-s3-logs
...

And check the resources:

$ terraform plan
...
No changes. Your infrastructure matches the configuration.

All done.

Originally published at RTFM: Linux, DevOps, and system administration.
