One day, we looked at how much we were spending on AWS Load Balancers and realized that we needed to cut those costs a bit.

What we wanted was a single LoadBalancer that routes requests to different Kubernetes Ingresses and Services in different Namespaces.

The first thing that came to mind was either adding a Service Mesh like Istio or Linkerd to the Kubernetes cluster, or adding an Nginx Ingress Controller with an AWS ALB in front of it.

But in the UkrOps Slack I was reminded that AWS Load Balancer Controller, which we use in our AWS Elastic Kubernetes Service cluster, has long been able to do this with IngressGroup.

So, let’s take a look at how this works, and how such a scheme can be added to existing Ingress resources.

Testing Load Balancer Controller IngressGroup

So, the idea is quite simple: in the Kubernetes Ingress manifest, we set one more annotation, alb.ingress.kubernetes.io/group.name, and the Load Balancer Controller uses it to determine which AWS LoadBalancer this Ingress belongs to.

Then, using the host values from spec.rules in the Ingress, it determines the hostnames and builds routing rules to the required Target Groups on the LoadBalancer.
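The grouping idea can be modelled in a few lines of Python. This is only a simplified sketch and not the controller's actual code: make_ingress and plan_load_balancers are made-up names, paths and rule priorities are ignored, and an Ingress without group.name is simply keyed by its namespace and name. The app names match the test manifests used in this post.

```python
from collections import defaultdict

GROUP_ANNOTATION = "alb.ingress.kubernetes.io/group.name"


def make_ingress(name, namespace, host, service, group=None):
    """Build a minimal Ingress-like dict (hypothetical helper for this sketch)."""
    annotations = {GROUP_ANNOTATION: group} if group else {}
    return {
        "metadata": {"name": name, "namespace": namespace, "annotations": annotations},
        "spec": {
            "rules": [
                {
                    "host": host,
                    "http": {"paths": [{"path": "/", "backend": {"service": {"name": service}}}]},
                }
            ]
        },
    }


def plan_load_balancers(ingresses):
    """Return {load balancer key: {host: "namespace/service"}}."""
    plan = defaultdict(dict)
    for ingress in ingresses:
        meta = ingress["metadata"]
        # Ingresses sharing a group.name share one load balancer;
        # an Ingress without it is keyed by namespace/name and stays alone.
        key = meta["annotations"].get(GROUP_ANNOTATION) or f"{meta['namespace']}/{meta['name']}"
        for rule in ingress["spec"]["rules"]:
            for path in rule["http"]["paths"]:
                service = path["backend"]["service"]["name"]
                plan[key][rule["host"]] = f"{meta['namespace']}/{service}"
    return dict(plan)


apps = [("app-1", "test-app-1-ns"), ("app-2", "test-app-2-ns")]
separate = [make_ingress(f"{a}-ingress", ns, f"{a}.ops.example.com", f"{a}-service") for a, ns in apps]
grouped = [
    make_ingress(f"{a}-ingress", ns, f"{a}.ops.example.com", f"{a}-service", group="test-app-alb")
    for a, ns in apps
]

print(len(plan_load_balancers(separate)))  # 2 load balancers
print(len(plan_load_balancers(grouped)))   # 1 load balancer with two host rules
```

Without the annotation each Ingress ends up under its own key, and with the same group.name both hosts land on one entry, which is the behaviour we want to see in AWS.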

Let’s try a simple example.

First, we create a regular manifest that results in a separate ALB per Ingress. It describes a Namespace, a Deployment, a Service, and an Ingress:

apiVersion: v1
kind: Namespace
metadata:
  name: test-app-1-ns
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-1-deploy
  namespace: test-app-1-ns
spec:
  replicas: 1
  selector:
    matchLabels:
      app: app-1-pod
  template:
    metadata:
      labels:
        app: app-1-pod
    spec:
      containers:
        - name: app-1-container
          image: nginx
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: app-1-service
  namespace: test-app-1-ns
spec:
  selector:
    app: app-1-pod
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
  type: ClusterIP
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-1-ingress
  namespace: test-app-1-ns
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP":80}]'
spec:
  ingressClassName: alb
  rules:
    - host: app-1.ops.example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: app-1-service
                port:
                  number: 80

And the same, but for the app-2.

Deploy it:

$ kk apply -f .
namespace/test-app-1-ns created
deployment.apps/app-1-deploy created
service/app-1-service created
ingress.networking.k8s.io/app-1-ingress created
namespace/test-app-2-ns created
deployment.apps/app-2-deploy created
service/app-2-service created
ingress.networking.k8s.io/app-2-ingress created

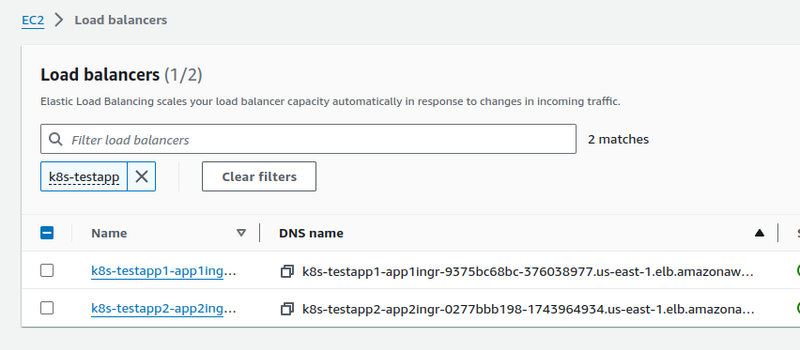

Check the Ingress and its LoadBalancer for app-1:

$ kk -n test-app-1-ns get ingress
NAME            CLASS   HOSTS                   ADDRESS                                                                  PORTS   AGE
app-1-ingress   alb     app-1.ops.example.com   k8s-testapp1-app1ingr-9375bc68bc-376038977.us-east-1.elb.amazonaws.com   80      33s

Here, the ADDRESS is "k8s-testapp1-app1ingr-9375bc68bc-376038977".

Let’s check app-2:

$ kk -n test-app-2-ns get ingress
NAME            CLASS   HOSTS                   ADDRESS                                                                   PORTS   AGE
app-2-ingress   alb     app-2.ops.example.com   k8s-testapp2-app2ingr-0277bbb198-1743964934.us-east-1.elb.amazonaws.com   80      64s

Here, the ADDRESS is "k8s-testapp2-app2ingr-0277bbb198-1743964934".

Accordingly, we have two Load Balancers in AWS.

Now, add the alb.ingress.kubernetes.io/group.name: test-app-alb annotation to both Ingresses:

...
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-1-ingress
  namespace: test-app-1-ns
  annotations:
    alb.ingress.kubernetes.io/group.name: test-app-alb
    alb.ingress.kubernetes.io/scheme: internet-facing
...

...
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-2-ingress
  namespace: test-app-2-ns
  annotations:
    alb.ingress.kubernetes.io/group.name: test-app-alb
    alb.ingress.kubernetes.io/scheme: internet-facing
...

Deploy it again:

$ kk apply -f .
namespace/test-app-1-ns unchanged
deployment.apps/app-1-deploy unchanged
service/app-1-service unchanged
ingress.networking.k8s.io/app-1-ingress configured
namespace/test-app-2-ns unchanged
deployment.apps/app-2-deploy unchanged
service/app-2-service unchanged
ingress.networking.k8s.io/app-2-ingress configured

And check the Ingresses and their addresses now.

For app-1, this is "k8s-testappalb-95eaaef0c8-2109819642":

$ kk -n test-app-1-ns get ingress
NAME            CLASS   HOSTS                   ADDRESS                                                            PORTS   AGE
app-1-ingress   alb     app-1.ops.example.com   k8s-testappalb-95eaaef0c8-2109819642.us-east-1.elb.amazonaws.com   80      6m19s

And for app-2 it is also "k8s-testappalb-95eaaef0c8-2109819642":

$ kk -n test-app-2-ns get ingress
NAME            CLASS   HOSTS                   ADDRESS                                                            PORTS   AGE
app-2-ingress   alb     app-2.ops.example.com   k8s-testappalb-95eaaef0c8-2109819642.us-east-1.elb.amazonaws.com   80      6m48s

And in AWS we now have only one Load Balancer.

It has two Listener Rules that, depending on the hostname set in each Ingress, route requests to the required Target Groups.

The Ingress Groups — limits and implementation for Production

When using this setup, keep in mind that some LoadBalancer parameters cannot be set to different values in different Ingresses of the same group.

For example, if one Ingress has the alb.ingress.kubernetes.io/tags: "component=devops" annotation, and a second Ingress tries to set the component=backend tag, the Load Balancer Controller will not accept the change and will report a conflict, for example:

aws-load-balancer-controller-7647c5cbc7-2stvx:aws-load-balancer-controller {"level":"error","ts":"2024-09-25T10:50:23Z","msg":"Reconciler error","controller":"ingress","object":{"name":"ops-1-30-external-alb"},"namespace":"","name":"ops-1-30-external-alb","reconcileID":"1091979f-f349-4b96-850f-9e7203bfb8be","error":"conflicting tag component: devops | backend"}

aws-load-balancer-controller-7647c5cbc7-2stvx:aws-load-balancer-controller {"level":"error","ts":"2024-09-25T10:50:44Z","msg":"Reconciler error","controller":"ingress","object":{"name":"ops-1-30-external-alb"},"namespace":"","name":"ops-1-30-external-alb","reconcileID":"19851b0c-ea82-424c-8534-d3324f4c5e60","error":"conflicting tag environment: ops | prod"}

The same applies to parameters like alb.ingress.kubernetes.io/load-balancer-attributes: access_logs.s3.enabled=true,access_logs.s3.bucket=some-bucket-name, or to the SecurityGroups parameters.
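A conflict like this can be caught before deployment with a small check. The Python sketch below is only an illustration under assumptions: parse_tags and find_tag_conflicts are made-up names, it looks only at the tags annotation, and the message format merely imitates the controller log above rather than reproducing the controller's logic.

```python
from collections import defaultdict

GROUP = "alb.ingress.kubernetes.io/group.name"
TAGS = "alb.ingress.kubernetes.io/tags"


def parse_tags(value):
    """Parse a 'key=value,key2=value2' annotation string into a dict."""
    tags = {}
    for pair in value.split(","):
        pair = pair.strip()
        if not pair:
            continue
        key, _, val = pair.partition("=")
        tags[key.strip()] = val.strip()
    return tags


def find_tag_conflicts(annotation_sets):
    """Report tags that get different values within one Ingress group."""
    seen = defaultdict(dict)  # group name -> tag key -> first value seen
    conflicts = []
    for annotations in annotation_sets:
        group = annotations.get(GROUP)
        if group is None:
            continue  # an ungrouped Ingress cannot conflict with others here
        for key, val in parse_tags(annotations.get(TAGS, "")).items():
            first = seen[group].setdefault(key, val)
            if first != val:
                conflicts.append(f"{group}: conflicting tag {key}: {first} | {val}")
    return conflicts


shared = {GROUP: "ops-1-30-external-alb", TAGS: "environment=ops,component=devops"}
project = {GROUP: "ops-1-30-external-alb", TAGS: "environment=prod,component=backend"}

for line in find_tag_conflicts([shared, project]):
    print(line)
```

The input here is just the metadata.annotations of each Ingress, so such a check could run over rendered manifests in CI before they reach the cluster.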

But with TLS, everything is simpler: for each Ingress, you can pass the ARN of a certificate from AWS Certificate Manager in the alb.ingress.kubernetes.io/certificate-arn annotation, and it will be added to the certificates of the HTTPS Listener and selected via SNI.

So, at least for now, I did it this way:

- created a separate GitHub repository
- added a Helm chart there
- this chart has two manifests for two Ingresses, one with the internal scheme and the other with internet-facing, and all sorts of default parameters are set there

For example:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ops-external-ingress
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/group.name: ops-1-30-external-alb
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS": 443}]'
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:us-east-1:492***148:certificate/88a5ccc0-e729-4fdb-818c-c41c411a3e3e
    alb.ingress.kubernetes.io/tags: "environment=ops,component=devops,Name=ops-1-30-external-alb"
    alb.ingress.kubernetes.io/actions.ssl-redirect: '{"Type": "redirect", "RedirectConfig": { "Protocol": "HTTPS", "Port": "443", "StatusCode": "HTTP_301"}}'
    alb.ingress.kubernetes.io/load-balancer-attributes: access_logs.s3.enabled=true,access_logs.s3.bucket=ops-1-30-devops-ingress-ops-alb-logs
    alb.ingress.kubernetes.io/actions.default-action: >
      {"Type":"fixed-response","FixedResponseConfig":{"ContentType":"text/plain","StatusCode":"200","MessageBody":"It works!"}}
spec:
  ingressClassName: alb
  defaultBackend:
    service:
      name: default-action
      port:
        name: use-annotation

In the defaultBackend, we set the action for requests that come to a hostname for which there is no separate Listener Rule. Here we simply answer "It works!" with the code 200.

And then, in the Ingresses of individual projects, we configure their own parameters, for example, in the Grafana Ingress:

$ kk -n ops-monitoring-ns get ingress atlas-victoriametrics-grafana -o yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    # the Common ALB group name
    alb.ingress.kubernetes.io/group.name: ops-1-30-external-alb
    ## TLS certificate from AWS ACM
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:us-east-1:492***148:certificate/88a5ccc0-e729-4fdb-818c-c41c411a3e3e
    # success codes for Target Group health checks
    alb.ingress.kubernetes.io/success-codes: "302"
    alb.ingress.kubernetes.io/target-type: ip
    kubernetes.io/ingress.class: alb
  ...
  name: atlas-victoriametrics-grafana
  namespace: ops-monitoring-ns
  ...
spec:
  rules:
  - host: monitoring.1-30.ops.example.com
    http:
      paths:
      - backend:
          service:
            name: atlas-victoriametrics-grafana
            port:
              number: 80
        path: /
        pathType: Prefix

And through the alb.ingress.kubernetes.io/group.name: ops-1-30-external-alb annotation, they "connect" to our "default" LoadBalancer.

We’ve been working with this scheme for several weeks now, and so far, everything is good.

Monitoring

Another important nuance is monitoring, because the default CloudWatch metrics, for example for 502/503/504 errors, are reported for the entire LoadBalancer.

But in our case, we decided not to use CloudWatch metrics at all (and not to pay for each GetMetricData request made by CloudWatch Exporter or Yet Another Cloudwatch Exporter).

Instead, we collect all the Access logs from the load balancers in Loki, and then use its Recording Rules to generate metrics whose labels include the domain name of the request that failed:

...
- record: aws:alb:requests:sum_by:elb_http_codes_by_uri_path:5xx:by_pod_ip:rate:1m
  expr: |
    sum by (pod_ip, domain, elb_code, uri_path, user_agent) (
      rate(
        {logtype="alb"}
        | pattern `<type> <date> <elb_id> <client_ip> <pod_ip> <request_processing_time> <target_processing_time> <response_processing_time> <elb_code> <target_code> <received_bytes> <sent_bytes> "<request>" "<user_agent>" <ssl_cipher> <ssl_protocol> <target_group_arn> "<trace_id>" "<domain>" "<chosen_cert_arn>" <matched_rule_priority> <request_creation_time> "<actions_executed>" "<redirect_url>" "<error_reason>" "<target>" "<target_status_code>" "<_>" "<_>"`
        | domain=~"(^.*api.challenge.example.co|lightdash.example.co)"
        | elb_code=~"50[2-4]"
        | regexp `.*:443(?P<uri_path>/[^/?]+).* HTTP`
        [1m] offset 5m
      )
    )
...
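To see what the elb_code filter and the regexp stage of this rule select, the same two expressions can be tried in Python. This is a rough sketch under assumptions: Python's re module is not the regex engine Loki uses, although these two patterns should behave the same in both. The helper names and the sample request value are made up, and fullmatch is used on the assumption that a LogQL label matcher is anchored on both ends.

```python
import re

# Same expressions as in the recording rule above.
URI_PATH_RE = re.compile(r".*:443(?P<uri_path>/[^/?]+).* HTTP")
ELB_CODE_RE = re.compile(r"50[2-4]")


def first_path_segment(request):
    """Return the first URI path segment of an ALB `request` field, or None."""
    match = URI_PATH_RE.search(request)
    return match.group("uri_path") if match else None


def is_gateway_error(elb_code):
    """True for 502, 503 and 504; fullmatch mirrors an anchored label matcher."""
    return ELB_CODE_RE.fullmatch(elb_code) is not None


# Hypothetical sample value, not taken from real logs.
sample = "GET https://api.challenge.example.co:443/v1/users?id=1 HTTP/1.1"

print(first_path_segment(sample))                      # /v1
print(is_gateway_error("503"), is_gateway_error("500"))  # True False
```

Keeping only the first path segment in uri_path limits the number of distinct label values, at the price of grouping everything under /v1 into one series.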

See Grafana Loki: collecting AWS LoadBalancer logs from S3 with Promtail Lambda and Grafana Loki: LogQL and Recording Rules for metrics from AWS Load Balancer logs.

Also, there is an interesting post about ALB Target Groups binding — A deeper look at Ingress Sharing and Target Group Binding in AWS Load Balancer Controller.

Originally published at RTFM: Linux, DevOps, and system administration.
