This is an excerpt from Chapter 3 of The Definitive Guide to Measuring Web Performance.

There are several ways to measure web performance. They test different things and produce different results.

Before you jump into performance tools, you need to understand what kind of performance each one measures.

Lab Data and Field Data

There are two types of web performance data: lab data and field data.

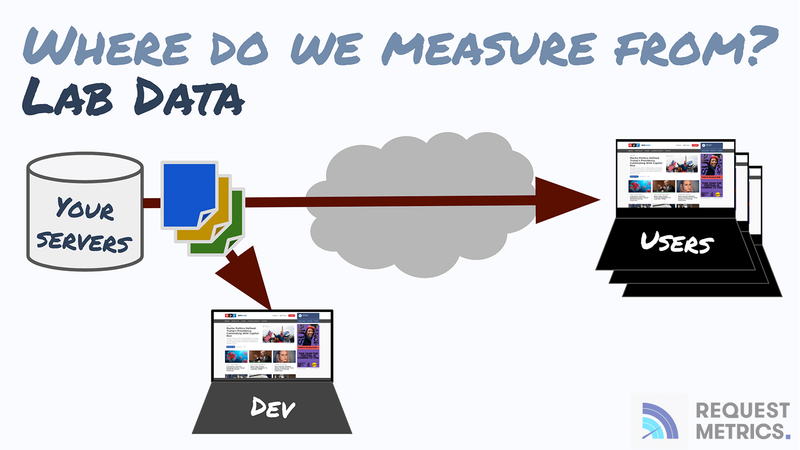

Lab performance data is gathered with a controlled test, such as a Lighthouse report. Lab data describes a single webpage load from a specified location on the network.

This kind of data is often called "Synthetic Testing" because it measures performance from a known device connected to the network. It does not measure the actual performance of any user. Instead, it is an estimate of what performance will be.

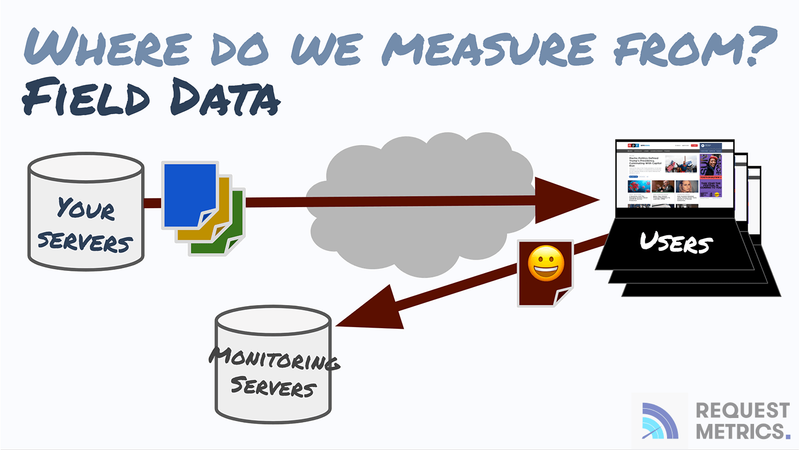

Field performance data is gathered directly from the users of the website using a performance agent. Because field data includes data for each website user, there is much more data to filter and consider.

Field data is often called "Real User Monitoring" because it describes the actual performance experienced by users of a live website.
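To make the idea of a performance agent more concrete, here is a minimal browser sketch of one. It is an illustration under assumptions, not how any particular RUM product works: it reads the standard Navigation Timing entry after the page loads and posts a few numbers with `navigator.sendBeacon` to `/rum`, a hypothetical collection endpoint. The helper name `toBeacon` is also hypothetical.

```javascript
// Minimal sketch of a field-data ("RUM") agent.
// Assumptions: "/rum" is a hypothetical collection endpoint, and only
// two navigation timings are reported.

// Turn one PerformanceNavigationTiming entry into a small payload.
function toBeacon(nav) {
  return {
    page: nav.name, // URL of the page that was loaded
    // Time to first byte, relative to the start of navigation (ms).
    ttfb: Math.round(nav.responseStart - nav.startTime),
    // Time until the load event finished (ms).
    load: Math.round(nav.loadEventEnd - nav.startTime),
  };
}

// Only runs in a browser; elsewhere this block is skipped.
if (typeof window !== "undefined" && "performance" in window) {
  window.addEventListener("load", () => {
    // Defer one tick: loadEventEnd is still 0 inside the load handler.
    setTimeout(() => {
      const [nav] = performance.getEntriesByType("navigation");
      if (nav) {
        navigator.sendBeacon("/rum", JSON.stringify(toBeacon(nav)));
      }
    }, 0);
  });
}
```

Every visitor's browser sends its own payload, which is why field data piles up so quickly compared with a single lab run.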

Field monitoring can produce a lot of data, and not all of it is relevant. To understand field data, you'll get to use statistics! Don't worry, it's not so bad.

Interpreting Performance Data with Statistics

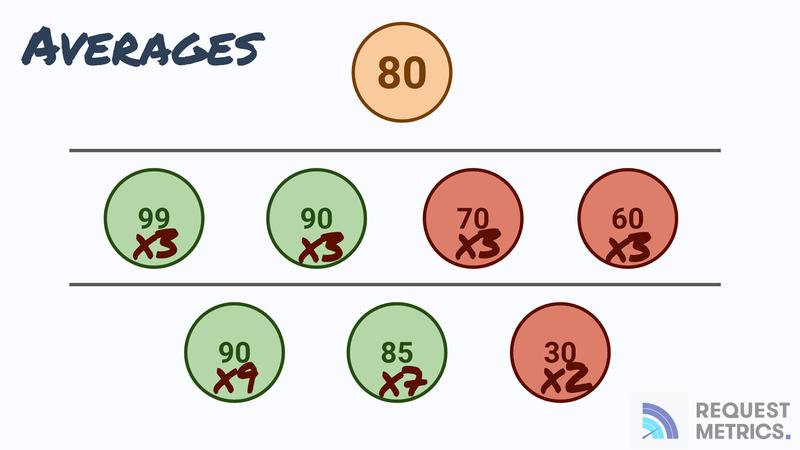

The easiest way to understand a set of data is with an average. But averages are often misleading, because performance data is rarely spread evenly: it tends to have clusters and outliers.

Look at an example. An average Lighthouse score of 80 can come from either of these situations:

These tell very different stories. The top set of scores describes a site that has poor performance for half of its users and probably has an issue. The bottom set is dragged down by a single outlier and is probably doing okay.
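A quick way to see the problem is to compute it. The two arrays below are made-up sets of ten Lighthouse scores, not real measurements: one where half the tests are slow and one with a single outlier. Both average 80, yet a simple count of low scores (the threshold of 70 is an arbitrary choice for illustration) tells them apart.

```javascript
// Two hypothetical sets of ten Lighthouse scores (illustrative values,
// not measurements). Both average exactly 80.
const halfSlow = [100, 100, 100, 100, 100, 60, 60, 60, 60, 60];
const oneOutlier = [85, 85, 85, 85, 85, 85, 85, 85, 85, 35];

// Arithmetic mean: sum of the values divided by how many there are.
function mean(values) {
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// Fraction of values below an (arbitrary) limit.
function shareBelow(values, limit) {
  return values.filter((v) => v < limit).length / values.length;
}

console.log(mean(halfSlow));             // 80
console.log(mean(oneOutlier));           // 80
console.log(shareBelow(halfSlow, 70));   // 0.5
console.log(shareBelow(oneOutlier, 70)); // 0.1
```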

So what can we do instead?

Median and Percentiles

Imagine all your performance scores sorted from best to worst. Your median performance is the value where half of your users had a faster experience and half had a slower one. It's a good proxy for your typical user and how they experience your website.

The median is also called the 50th percentile, or p50, because 50% of your users have a better score.
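The median can also be computed directly. The sketch below uses a hypothetical helper named `median`. It sorts a copy of the data with a numeric comparator (JavaScript's default `sort` compares values as strings) and, when the count is even, averages the two middle values. That is one common convention; others simply pick one of the two middle values, so tools can disagree slightly on even-sized data.

```javascript
// Median of a non-empty list of numbers.
// Sorts a copy so the caller's array is left untouched.
function median(values) {
  const sorted = [...values].sort((a, b) => a - b); // numeric, ascending
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0
    ? (sorted[mid - 1] + sorted[mid]) / 2 // even count: average the middle pair
    : sorted[mid];                        // odd count: the middle value
}

console.log(median([100, 100, 90, 90, 90, 80, 70, 70, 60, 50])); // 85
console.log(median([3, 1, 2]));                                   // 2
```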

Performance numbers are often reported at their p50, p75, and p95 values, which describe the experience of "typical users", "most users", and "worst users".
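The same three percentiles can be pulled from timing data, where lower is better. This sketch uses made-up page load times from twenty visits and a hypothetical helper, `percentileAt`, that rounds to the nearest index with the same formula as the Lighthouse example in this chapter. Because the times arrive in visit order, it sorts a copy first; sorting ascending puts the fastest (best) times first.

```javascript
// Hypothetical page load times (ms) from twenty visits, in arrival order.
// Made-up values for illustration; lower is better.
const loadTimesMs = [
  1300, 820, 5600, 1420, 1150, 1700, 950, 2400, 1240, 1500,
  1010, 3200, 1350, 1100, 1650, 1200, 2100, 1580, 1400, 1850,
];

// Percentile by rounding to the nearest index into a sorted copy.
// p is between 0 and 1; values must be non-empty.
function percentileAt(values, p) {
  const sorted = [...values].sort((a, b) => a - b); // fastest first
  const idx = Math.round((sorted.length - 1) * p);
  return sorted[idx];
}

for (const pct of [50, 75, 95]) {
  console.log(`p${pct}: ${percentileAt(loadTimesMs, pct / 100)} ms`);
}
```

With these made-up numbers the loop prints 1420 ms, 1700 ms, and 3200 ms: the slowest visitors wait more than twice as long as the typical one.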

Some code might help. Suppose the Lighthouse scores from 10 tests are stored in an array. You can get a percentile like this:

```javascript
// Performance scores, sorted from best to worst.
const lighthouseScores = [100, 100, 90, 90, 90, 80, 70, 70, 60, 50];

// Desired percentile to calculate.
const percentile = 0.75;

// Find the index 75% of the way into the array.
const idx = Math.round((lighthouseScores.length - 1) * percentile);

const p75Score = lighthouseScores[idx]; // 70
```

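Or you might have your data in a spreadsheet, where you can use the PERCENTILE function. Keep in mind that PERCENTILE ranks values from lowest to highest, so for a score where higher is better, such as Lighthouse, the "most users" value above corresponds to PERCENTILE(range, 0.25).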
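Spreadsheet percentiles also don't round to a single index. Excel's PERCENTILE (the same calculation as PERCENTILE.INC) interpolates linearly between the two nearest values. The sketch below reproduces that inclusive method so you can check results against a spreadsheet; the name `percentileInc` is hypothetical, and like the spreadsheet it ranks values from lowest to highest.

```javascript
// Inclusive percentile with linear interpolation, as in Excel's
// PERCENTILE / PERCENTILE.INC. Values are ranked lowest to highest;
// p is between 0 and 1; values must be non-empty.
function percentileInc(values, p) {
  const sorted = [...values].sort((a, b) => a - b);
  const rank = (sorted.length - 1) * p; // fractional position
  const lower = Math.floor(rank);
  const upper = Math.ceil(rank);
  const weight = rank - lower; // how far past the lower value
  return sorted[lower] + (sorted[upper] - sorted[lower]) * weight;
}

const scores = [100, 100, 90, 90, 90, 80, 70, 70, 60, 50];
console.log(percentileInc(scores, 0.75)); // 90
console.log(percentileInc(scores, 0.25)); // 70
console.log(percentileInc([1, 2, 3, 4], 0.5)); // 2.5
```

With these scores the neighbouring values happen to be equal, so interpolation changes nothing. On smaller or more varied data, such as `[1, 2, 3, 4]`, the result can fall between two measured values, while the index-rounding approach always returns one of them.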

Ok, enough background. On to the tools!
