When a system sends events to PagerDuty, how does PagerDuty know whether an alert represents a new problem or a continuing issue? PagerDuty provides several methods for grouping alerts, either through explicit configuration with Event Orchestrations or Content-Based Alert Grouping, or through machine learning (e.g., Intelligent Alert Grouping).

You can also get started gathering up related alerts into a parent incident using the dedup_key.

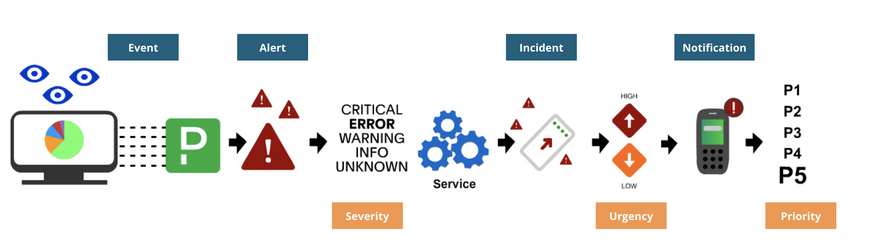

Events -> Alerts

When PagerDuty receives an event, for example via the Events API or an integration, that event is translated into an alert. Alerts can then trigger incidents on that service.

By default, all services are configured to create alerts in PagerDuty.

Events can come from any number of places. Many of the integrations on PagerDuty are for systems that serve as sources of events, like monitoring tools. Multiple events can be sent from the same source to the same service in PagerDuty. If they all represent the same problem, they should be gathered up in the same incident!

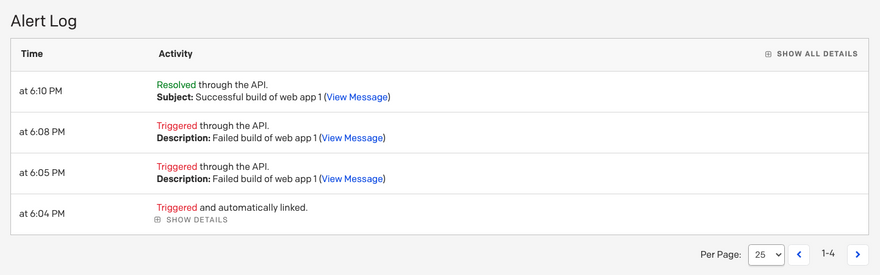

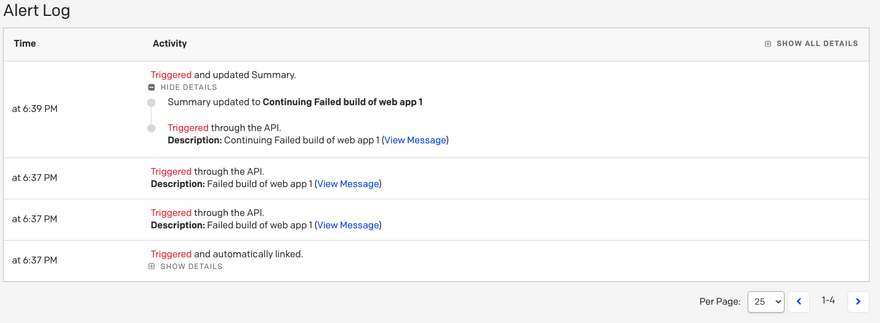

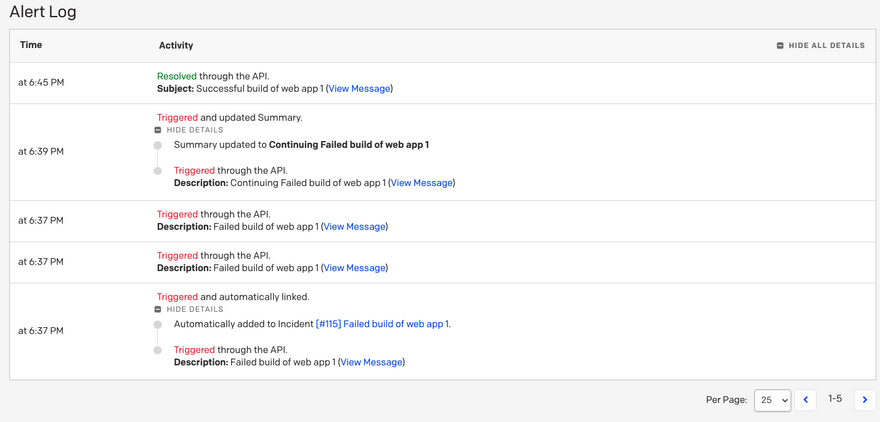

If an incident has a number of alerts associated with it, they’ll show up in the Alert Log and can be queried via the /incidents endpoint in the API. You’ll find the Alert Log for a group of alerts by clicking on the Summary of an alert in the Alerts Table.
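As a rough sketch of querying those alerts programmatically, the Python below builds a request for an incident's alerts against the PagerDuty REST API. The incident ID, the API token, and the helper names `alerts_request` and `list_alert_keys` are placeholders for illustration, and the sketch assumes the response carries an `alerts` list whose entries expose the key as `alert_key`; check the API reference before relying on those details.

```python
import json
import urllib.request

API_BASE = "https://api.pagerduty.com"


def alerts_request(incident_id, api_token):
    """Build (but do not send) a GET request for one incident's alerts."""
    return urllib.request.Request(
        f"{API_BASE}/incidents/{incident_id}/alerts",
        headers={
            "Authorization": f"Token token={api_token}",
            "Accept": "application/vnd.pagerduty+json;version=2",
        },
        method="GET",
    )


def list_alert_keys(incident_id, api_token):
    """Send the request and return the key recorded on each alert.

    Assumes the response body has an "alerts" list and that each alert
    exposes its deduplication key as "alert_key".
    """
    request = alerts_request(incident_id, api_token)
    with urllib.request.urlopen(request, timeout=10) as response:
        body = json.loads(response.read().decode("utf-8"))
    return [alert.get("alert_key") for alert in body.get("alerts", [])]
```

Building the request separately from sending it makes it easy to inspect the URL and headers without a network call.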

What is the dedup_key?

The dedup_key, or “deduplication key,” is an optional field in events sent to PagerDuty. Any alert source can set its own key for the events it sends to PagerDuty. If no key is set in the event, PagerDuty will create a new key for that event. In the above screenshot, all the alerts were collected to an open incident using the dedup_key pre-set in the event payload.

You can make things easy on yourself and use strings that have some meaning to the incident response team. The dedup_key value in an event is a JSON string, so most characters are valid.

If an event doesn’t include its own dedup_key, PagerDuty will create one. Those keys are unique UUIDs. They’ll be returned to the alert-sending process in a JSON payload:

    {
      "dedup_key": "46bc078e455440bb9d12e67626f5b162",
      "message": "Event processed",
      "status": "success"
    }

The sender can store these for use in future related events.
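One way a sender might hold on to the returned key is sketched below in Python. The endpoint is the public Events API v2 URL; the function names, the `key_store` dictionary, and the `name` label are hypothetical choices for this sketch, not part of any PagerDuty client library.

```python
import json
import urllib.request

# Public Events API v2 endpoint.
EVENTS_URL = "https://events.pagerduty.com/v2/enqueue"


def post_event(event, url=EVENTS_URL):
    """POST an event as JSON and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


def trigger_and_remember(event, key_store, name, send=post_event):
    """Send a trigger event and remember the dedup_key from the response.

    key_store is any dict-like object (a file or database would also work);
    name is a label the sender picks for this particular problem.
    """
    response = send(event)
    key_store[name] = response["dedup_key"]
    return key_store[name]
```

A later acknowledge or resolve event for the same problem can then look up `key_store[name]` and send it as the dedup_key. The `send` parameter exists only so the function can be exercised without a network call.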

What does it do for me?

The primary function of the dedup_key is to suppress the creation of new incidents from related events.

Events will also have an event_action. The behavior in PagerDuty will be determined by the combination of dedup_key and event_action:

| dedup_key | event_action | Behavior |
|---|---|---|
| Not set | trigger | New alert and/or incident created |
| Not set | acknowledge or resolve | Error: dedup_key is required |
| Set | trigger | If there is an existing incident created with this dedup_key, group the event into it. If this dedup_key does not have an active incident, create a new incident. |
| Set | acknowledge | Acknowledge any triggered alerts with this dedup_key. If no triggered alerts, ignore. |
| Set | resolve | Resolve any triggered or acknowledged alerts with this dedup_key. If there is an incident, resolve that incident. If no active alerts, ignore. |

Several alerts can pile up under a single incident while it’s being triaged and resolved. As long as the incident hasn’t been set to resolved, no new incidents are created and no new notifications are generated.

Gathering up alerts during a running incident this way can definitely help your team manage noise and concentrate on fixing the underlying issues!
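The combinations of dedup_key and event_action described above can be modeled with a small in-memory toy. This is only an illustration of the rules as stated in this post, not how PagerDuty is implemented; `ServiceModel` and its return strings are invented for the sketch.

```python
import uuid


class ServiceModel:
    """Toy model of one service's open alerts, keyed by dedup_key."""

    def __init__(self):
        # dedup_key -> {"status": "triggered" or "acknowledged", "events": [...]}
        self.open_alerts = {}

    def handle(self, event_action, dedup_key=None, summary=""):
        if dedup_key is None:
            if event_action != "trigger":
                return "error: dedup_key is required"
            # Stand-in for the key PagerDuty generates when none is sent.
            dedup_key = uuid.uuid4().hex
        alert = self.open_alerts.get(dedup_key)
        if event_action == "trigger":
            if alert is None:
                self.open_alerts[dedup_key] = {"status": "triggered", "events": [summary]}
                return "created"
            alert["events"].append(summary)  # grouped, no new incident
            return "grouped"
        if event_action == "acknowledge":
            if alert is None or alert["status"] != "triggered":
                return "ignored"
            alert["status"] = "acknowledged"
            return "acknowledged"
        if event_action == "resolve":
            if alert is None:
                return "ignored"
            del self.open_alerts[dedup_key]
            return "resolved"
        return "error: unknown event_action"


service = ServiceModel()
print(service.handle("trigger", "web-build-app-1"))  # created
print(service.handle("trigger", "web-build-app-1"))  # grouped
print(service.handle("resolve", "web-build-app-1"))  # resolved
print(service.handle("resolve", "web-build-app-1"))  # ignored
```

The second trigger is grouped rather than creating anything new, which is the noise reduction the dedup_key provides while an incident is still open.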

Example: Builds Failing

Let’s look at an example of where the use of the dedup_key might come in handy.

A user recently posted a similar scenario in the community forums. If you have questions about PagerDuty, join us there!

Let’s say you have a build pipeline for a software application. Most of the time, the pipeline executes successfully, and all is well. Occasionally, though, the pipeline fails somewhere in the process. How can we let our developers know that they need to go check out what’s going on?

We’ll focus on using the Events API. You can also make use of Change Events for builds, but remember: change events do not alert!

The first time the build fails, it will send a failure message of some sort. The event will route to the appropriate service in PagerDuty via an integration key.

The pipeline process will use a pre-configured dedup_key, web-build-app-1, to identify all failed builds for this application. The event_action is set to trigger to initiate a new incident.

The event payload is a JSON document:

    {
      "payload": {
        "summary": "Failed build of web app 1",
        "severity": "critical",
        "source": "our custom ci/cd service",
        "component": "build",
        "group": "web-1",
        "class": "build"
      },
      "routing_key": "$PD_ROUTE_KEY",
      "dedup_key": "web-build-app-1",
      "event_action": "trigger",
      "client": "Sample Monitoring Service",
      "client_url": "https://monitoring.service.com"
    }

This trigger message will allow PagerDuty to create an incident on the service. Then the folks who own the service can take a look and figure out why the build failed.

If the next build through the pipeline also fails, we probably don’t want another incident to be created. That’s where the dedup_key comes in for this example.

Any additional alerts sent to the same service with the trigger action and the dedup_key set to web-build-app-1 will be grouped together in the same incident. If the data changes between events, the alert will also update. In this example, the event has a new summary.

By setting the dedup_key explicitly when sending a trigger event, our workflow can manage the future activities on the created incident.

If our team works through the problem on the build pipeline and a build goes through successfully, our build system can send a resolve message using that same dedup_key:

    {
      "payload": {
        "summary": "Successful build of web app 1",
        "severity": "info",
        "source": "our custom ci/cd service",
        "component": "build",
        "group": "web-1",
        "class": "build"
      },
      "routing_key": "$PD_ROUTE_KEY",
      "dedup_key": "web-build-app-1",
      "event_action": "resolve",
      "client": "Sample Monitoring Service",
      "client_url": "https://monitoring.service.com"
    }

Note the resolve value for the event_action key!

When this event is received by PagerDuty, the resolve action will result in a resolution for the open incident based on the dedup_key.

Once the alerts and incident have been resolved, any additional successful runs of the build pipeline that create resolve messages will be dropped.

The next time the build pipeline fails, a new trigger alert will be sent, and a brand new incident will be created.
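The whole cycle can be driven from a single hook at the end of the pipeline. The Python sketch below maps a build result to an event body like the ones above; `build_event` is a hypothetical helper name, and how the pipeline reports success or failure to it is an assumption of this example.

```python
import json


def build_event(succeeded, routing_key, dedup_key="web-build-app-1"):
    """Return an event body for a finished build.

    A failed build produces a trigger event; a successful build produces a
    resolve event. Both carry the same dedup_key so they refer to the same
    incident.
    """
    outcome = "Successful" if succeeded else "Failed"
    return {
        "payload": {
            "summary": f"{outcome} build of web app 1",
            "severity": "info" if succeeded else "critical",
            "source": "our custom ci/cd service",
            "component": "build",
            "group": "web-1",
            "class": "build",
        },
        "routing_key": routing_key,
        "dedup_key": dedup_key,
        "event_action": "resolve" if succeeded else "trigger",
    }


# Print the body a failed build would send; the routing key is a placeholder.
print(json.dumps(build_event(False, "$PD_ROUTE_KEY"), indent=2))
```

Because the hook sends a resolve on every successful build, most of those events will simply be dropped when nothing is open, which matches the behavior described above.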

Unlock Self-Healing Systems

Using the dedup_key in systems that send data to PagerDuty will help your team manage alerts from systems that have self-healing capabilities.

One of the features of Event Orchestrations is the ability to suppress alerts. When working with systems that you expect to fix themselves, suppression can be a helpful tool for managing alert fatigue. Suppressed alerts are still available in the alerts table, but won’t immediately send notifications to your teams. Delayed notifications can give your automation and remediation tools time to fix issues before getting humans involved.

These powerful tools give your team flexible methods for dealing with the challenge of managing noise in complex environments.
