Each data point in a system that produces data on an ongoing basis corresponds to an Event. An Event Stream is a continuous flow of such events or data points, which is why developers sometimes call Event Streams Data Streams. Event Stream Processing refers to acting on Events as they are generated.

This article discusses Event Streams and Event Stream Processing in depth: how Event Stream Processing works, how it contrasts with Batch Processing, its benefits, and a series of illustrative use cases.

Event Streams: An Overview

Coupling between services is one of the most significant difficulties associated with microservices. Conventional architecture follows an “ask, don’t tell” approach, in which data is collected only when requested. Suppose there are three services in question: A, B, and C. Service A asks the other services, “What is your present state?” and assumes they are always ready to respond. This leaves Service A, and ultimately the user, stuck if the other services are unavailable.

Microservices use retries as a workaround to compensate for network failures or for the negative effects of changes in the network topology. However, retries add another layer of complexity and increase cost.

To address the problems with the conventional design, event-driven architecture adopts a “tell, don’t ask” philosophy. In the example above, Services B and C publish continuous streams of Events, and Service A subscribes to these Event Streams. Service A can then evaluate the facts, aggregate the outcomes, and cache them locally.
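
To make the “tell, don’t ask” flow concrete, here is a minimal Python sketch. It assumes a toy in-memory `EventBus` in place of a real broker; the class names, the stream names such as `service-b.state`, and the event fields are hypothetical. Service A never queries B or C; it answers from a cache built from the events they publish.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class EventBus:
    """Toy in-memory publish/subscribe bus standing in for a real broker."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, stream: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[stream].append(handler)

    def publish(self, stream: str, event: dict) -> None:
        # "Tell": push the event to every subscriber of this stream.
        for handler in self._subscribers[stream]:
            handler(event)


class ServiceA:
    """Keeps a local cache of the latest state published by other services."""

    def __init__(self, bus: EventBus) -> None:
        self.cache: Dict[str, dict] = {}
        bus.subscribe("service-b.state", self._on_event)
        bus.subscribe("service-c.state", self._on_event)

    def _on_event(self, event: dict) -> None:
        # Aggregate by keeping only the most recent state per source service.
        self.cache[event["source"]] = event["state"]

    def current_view(self) -> Dict[str, dict]:
        # Answered from the local cache; no request to B or C is needed.
        return dict(self.cache)


bus = EventBus()
service_a = ServiceA(bus)
bus.publish("service-b.state", {"source": "B", "state": {"orders_open": 3}})
bus.publish("service-c.state", {"source": "C", "state": {"stock": 120}})
print(service_a.current_view())
# {'B': {'orders_open': 3}, 'C': {'stock': 120}}
```

Because this bus calls handlers synchronously, the sketch ignores the delivery guarantees and failure handling that a real broker would provide.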

Utilizing Event Streams in this manner has various advantages, including:

Systems are capable of closely imitating actual processes.

Increased usage of scale-to-zero functions (serverless computing), as more services can stay idle until required.

Enhanced adaptability

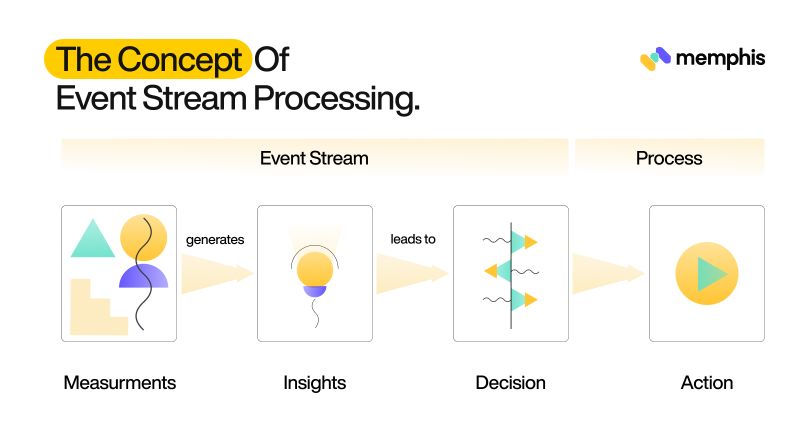

The Concept of Event Stream Processing

Event Stream Processing (ESP) is a collection of technologies that facilitate the development of an Event-driven architecture. As previously stated, Event Stream Processing is the process of reacting to Events created by an Event-driven architecture.

Reacting to Events can take a variety of forms, including:

Conducting Calculations

Transforming Data

Analyzing Data

Enriching Data

These actions can be chained into a pipeline that converts Event data. Such pipelines, detailed in the following section, are the heart of Event Stream Processing.
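
The pipeline idea can be sketched with Python generators, where each action consumes the previous stage's output one Event at a time. The sensor IDs, the `locations` lookup table, and the choice of actions (a Celsius-to-Fahrenheit transform, an enrichment step, and a running-average calculation) are illustrative assumptions.

```python
from typing import Dict, Iterable, Iterator


def transform(events: Iterable[dict]) -> Iterator[dict]:
    # Transforming: add a Fahrenheit value derived from the Celsius reading.
    for e in events:
        yield {**e, "fahrenheit": e["celsius"] * 9 / 5 + 32}


def enrich(events: Iterable[dict], locations: Dict[str, str]) -> Iterator[dict]:
    # Enriching: attach metadata looked up from a reference table.
    for e in events:
        yield {**e, "location": locations.get(e["sensor"], "unknown")}


def running_average(events: Iterable[dict]) -> Iterator[dict]:
    # Calculating: maintain a running mean of the Celsius readings seen so far.
    total, count = 0.0, 0
    for e in events:
        total += e["celsius"]
        count += 1
        yield {**e, "avg_celsius": total / count}


raw = [
    {"sensor": "s1", "celsius": 20.0},
    {"sensor": "s2", "celsius": 30.0},
]
# Stages are chained; each Event flows through all of them before the next one.
for event in running_average(enrich(transform(raw), {"s1": "roof"})):
    print(event)
```

Because generators are lazy, each Event passes through every stage as soon as it is read, rather than after the whole input has been collected.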

The Basics of Event Stream Processing

Event Stream Processing relies on two distinct kinds of technology: a system that stores Events in a logical (typically time-based) order, and software that processes those Events.

The first component handles storage and records each Event along with a timestamp. Recording the outside temperature every minute for a whole day is a good example of Streaming Data: each Event consists of the temperature measurement and the precise time it was taken. The second component consists of Stream Processors, also called Stream Processing Engines.
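
A minimal sketch of the temperature example, assuming a simple `TemperatureEvent` record. The readings are randomly simulated values rather than real measurements, and the start date is arbitrary.

```python
import random
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass(frozen=True)
class TemperatureEvent:
    timestamp: datetime  # the precise time of the measurement
    celsius: float       # the measured outside temperature


def one_day_of_readings(start: datetime, seed: int = 0) -> List[TemperatureEvent]:
    # One simulated reading per minute for 24 hours, stored in timestamp order.
    rng = random.Random(seed)
    return [
        TemperatureEvent(start + timedelta(minutes=i), round(rng.uniform(10, 25), 1))
        for i in range(24 * 60)
    ]


events = one_day_of_readings(datetime(2023, 1, 1))
print(len(events), events[0].timestamp, events[-1].timestamp)
# 1440 2023-01-01 00:00:00 2023-01-01 23:59:00
```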

A popular choice is Apache Kafka, which stores Events for a configurable retention period and supports processing them. It also enables pipelines built on Event Streams, in which processed Events are written to further Event Streams for additional processing. Other Kafka use cases include activity tracking, log aggregation, and real-time data processing. Because it decouples the components of a system, Kafka helps software architects design scalable architectures for large systems.
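
The following toy sketch illustrates the topic-to-topic pipeline pattern without a Kafka cluster. `Topic` is a hypothetical append-only in-memory log standing in for a Kafka topic, and `Stage` mimics a consumer that tracks its own offset and writes processed Events to a second stream. It neither uses nor imitates the Kafka client API, and the 30 °C "hot" rule is arbitrary.

```python
from typing import Callable, List


class Topic:
    """Toy append-only log standing in for a Kafka topic (not the Kafka API)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._log: List[dict] = []

    def append(self, event: dict) -> int:
        self._log.append(event)
        return len(self._log) - 1  # offset of the stored event

    def read(self, offset: int) -> List[dict]:
        # Events are retained, so any consumer can re-read from any offset.
        return self._log[offset:]


class Stage:
    """Reads one topic, processes each Event, and writes results to another topic."""

    def __init__(self, source: Topic, sink: Topic, fn: Callable[[dict], dict]) -> None:
        self.source, self.sink, self.fn = source, sink, fn
        self.offset = 0  # this stage's own position in the source log

    def poll(self) -> int:
        batch = self.source.read(self.offset)
        for event in batch:
            self.sink.append(self.fn(event))
        self.offset += len(batch)  # advance only after the results are written
        return len(batch)


raw = Topic("temperature.raw")
flagged = Topic("temperature.flagged")
flag_hot = Stage(raw, flagged, lambda e: {**e, "hot": e["celsius"] > 30})

raw.append({"celsius": 25.0})
raw.append({"celsius": 35.0})
flag_hot.poll()
print(flagged.read(0))
# [{'celsius': 25.0, 'hot': False}, {'celsius': 35.0, 'hot': True}]
```

Because the raw topic keeps its Events, another stage could read it from offset 0 independently; this is the kind of decoupling that lets components evolve and scale separately.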

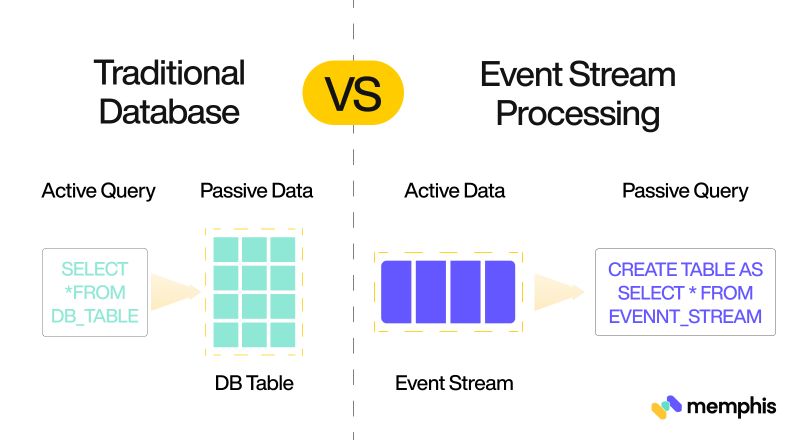

Event Stream Processing vs. Batch Processing

As technology develops, businesses deal with far larger volumes of data than they did ten years ago, so more sophisticated data processing technologies are needed to keep up. A conventional application collects, stores, and processes data, and then stores the processed outputs.

Typically, these steps happen in batches, so the application must wait until it has collected enough data before processing begins. That wait is unacceptable for time-sensitive or real-time applications that need data processed quickly.

Event Streams address this problem. In Event Stream Processing, each data point or Event is handled as soon as it arrives, so no backlog builds up while waiting for a batch to fill. This makes it well suited to real-time applications.
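
To put rough numbers on the difference, the sketch below compares how long events wait before processing under fixed-size batches versus per-event handling. It assumes one event per second, a batch size of 5, and negligible processing time; the function names are illustrative.

```python
from typing import List


def batch_delays(arrivals: List[float], batch_size: int) -> List[float]:
    """Seconds each event waits before processing when handled in fixed-size batches."""
    delays: List[float] = []
    for start in range(0, len(arrivals), batch_size):
        batch = arrivals[start:start + batch_size]
        if len(batch) < batch_size:
            break  # an incomplete batch is still waiting and has not been processed
        ready_at = batch[-1]  # processing can only start once the batch is full
        delays.extend(ready_at - t for t in batch)
    return delays


def stream_delays(arrivals: List[float]) -> List[float]:
    """Per-event processing: each event is handled as soon as it arrives."""
    return [0.0 for _ in arrivals]


arrivals = [float(t) for t in range(10)]  # one event per second
print(max(batch_delays(arrivals, batch_size=5)))  # 4.0
print(max(stream_delays(arrivals)))               # 0.0
```

With larger batches or slower arrival rates, the worst-case wait under batching grows proportionally, while per-event handling stays bounded by processing time alone.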

In addition, Stream Processing makes it possible to detect patterns, examine results at different levels of focus, and analyze data from multiple Streams simultaneously. Because it spreads the work out over time instead of processing large batches at once, Event Stream Processing can require much less hardware than Batch Processing.
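
As a sketch of pattern detection across several Streams, the code below merges timestamp-ordered readings from two sensors with Python's `heapq.merge` and flags any sensor whose value rises three times in a row. The tuple layout `(timestamp, sensor, value)` and the "three consecutive rises" pattern are assumptions chosen for illustration.

```python
import heapq
from typing import Dict, Iterable, Iterator, Tuple

Reading = Tuple[int, str, float]  # (timestamp, sensor id, value)


def merged(*streams: Iterable[Reading]) -> Iterator[Reading]:
    # Each input is already in timestamp order; heapq.merge interleaves them lazily.
    return heapq.merge(*streams, key=lambda r: r[0])


def rising_runs(readings: Iterable[Reading], run_length: int = 3) -> Iterator[Reading]:
    """Yield a reading when its sensor has risen `run_length` times in a row."""
    last: Dict[str, float] = {}
    streak: Dict[str, int] = {}
    for ts, sensor, value in readings:
        if sensor in last and value > last[sensor]:
            streak[sensor] = streak.get(sensor, 0) + 1
        else:
            streak[sensor] = 0  # the run is broken (or this is the first reading)
        last[sensor] = value
        if streak[sensor] == run_length:
            yield ts, sensor, value


a = [(0, "a", 1.0), (2, "a", 2.0), (4, "a", 3.0), (6, "a", 4.0)]
b = [(1, "b", 5.0), (3, "b", 4.0), (5, "b", 6.0)]
print(list(rising_runs(merged(a, b))))  # [(6, 'a', 4.0)]
```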

The benefits of using Event Stream Processing

Event Stream Processing is used when quick action must be taken on Event Streams. As data volumes grow and today's high-speed technologies become more prevalent, it is becoming the solution of choice for managing massive amounts of data. Several advantages of incorporating Event Stream Processing into your workflow are as follows:

You can develop Event Stream Pipelines to fulfill advanced Streaming use cases. For instance, an Event Stream Pipeline can enrich Event data with metadata and reshape the resulting objects for storage.

Using Event Stream Processing in your workflow lets you make decisions in real time.

You can easily scale your infrastructure as the data volume grows.

Event Stream Processing offers continuous Event Monitoring, so you can create alerts that flag trends and anomalies.

You can examine and handle massive volumes of data in real time, filtering or aggregating the data before it is stored.
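
Continuous monitoring and filtering before storage can be sketched with a running baseline. This example uses Welford's online algorithm for the mean and standard deviation and raises an alert when a value lies more than three standard deviations from the mean. The threshold, the five-event warm-up, and the policy of routing outliers to alerts instead of storage are illustrative assumptions, not a recommendation.

```python
import math
from typing import Callable, Iterable, List


class RunningStats:
    """Welford's online algorithm: mean and variance without storing past events."""

    def __init__(self) -> None:
        self.n, self.mean, self._m2 = 0, 0.0, 0.0

    def update(self, x: float) -> None:
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def stdev(self) -> float:
        # Sample standard deviation; undefined (reported as 0.0) for fewer than 2 values.
        return math.sqrt(self._m2 / (self.n - 1)) if self.n > 1 else 0.0


def monitor(values: Iterable[float], alert: Callable[[float], None],
            z_threshold: float = 3.0, warmup: int = 5) -> List[float]:
    """Alert on outliers and return only the non-outlier values for storage."""
    stats, to_store = RunningStats(), []
    for x in values:
        is_outlier = (stats.n >= warmup and stats.stdev > 0
                      and abs(x - stats.mean) / stats.stdev > z_threshold)
        if is_outlier:
            alert(x)            # monitoring: raise an alert immediately
        else:
            to_store.append(x)  # filtering: only non-outliers are written to storage
            stats.update(x)     # aggregating: outliers do not skew the baseline
    return to_store


alerts: List[float] = []
stored = monitor([10, 11, 10, 12, 11, 10, 50, 11], alerts.append)
print(alerts, stored)  # [50] [10, 11, 10, 12, 11, 10, 11]
```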

Event Streams use cases

As the Internet of Things (IoT) evolves, so does the demand for real-time analysis. As data processing architecture becomes more Event-driven, ESP continues to grow in importance.

Event Streaming is used in a variety of use cases that span several sectors and organizations. Let’s examine a few industries that have benefited from incorporating Event Stream Processing into their data processing methodologies.

Besides helping large industries, Event Stream Processing also addresses specific problems we face every day. Here are some examples of how it can be used.

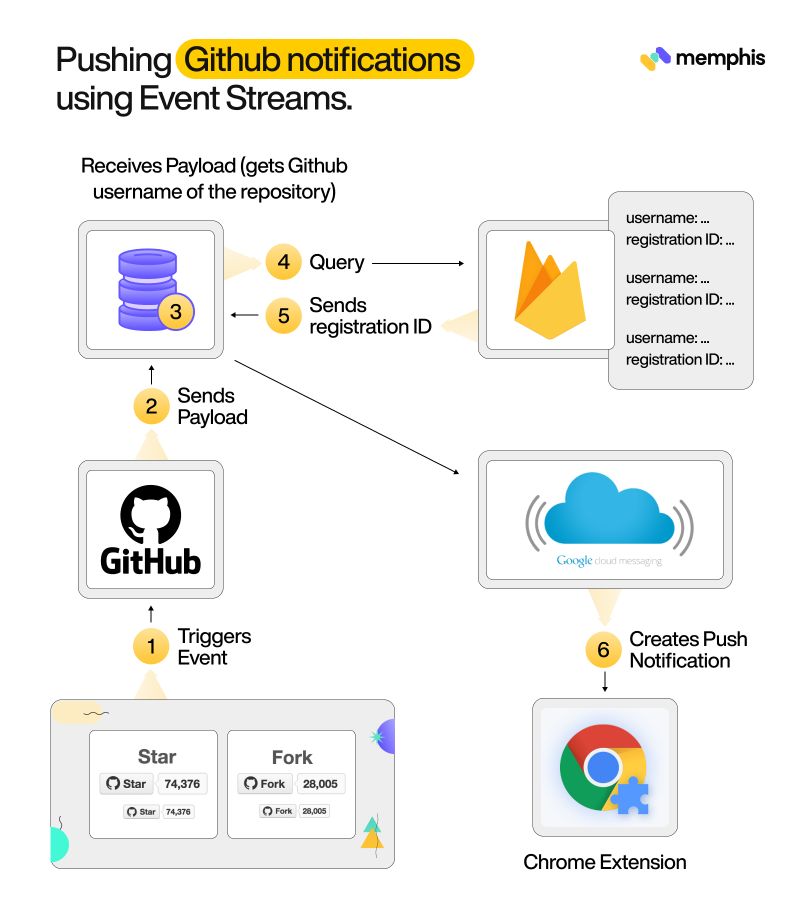

Use case 1: Pushing GitHub notifications using Event Streams

Event streams are a great way to stay up to date on changes to your codebase in real time. By configuring an event stream and subscribing to the events you’re interested in, you can receive push notifications whenever there is activity in your repository. We hope this use case helps you understand how to use event streams for GitHub push notifications.

As an example, consider a Chrome extension that uses event streams to provide real-time GitHub push notifications.

The GitHub Notifier extension for Google Chrome lets you see notifications in real time whenever someone interacts with one of your GitHub repositories. This is a great way to stay on top of your project’s activity and respond quickly to issues or pull requests. The extension is available for free from the Chrome Web Store. Simply install it and then sign in with your GitHub account.

Once you’ve done that, you’ll start receiving notifications whenever someone mentions you, comments on one of your repositories, or even when someone stars one of your repositories. You can also choose to receive notifications for specific events such as new releases or new Pull Requests. Stay up-to-date on all the latest activity on your GitHub repositories with GitHub Notifier!
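
The subscription idea behind this use case can be sketched in a few lines: the user picks the event types they care about, and only matching events become notifications. This is not how the GitHub Notifier extension is implemented; the event dictionaries are a simplified, hypothetical shape, although the type names (`star`, `release`, `pull_request`) mirror GitHub webhook event names.

```python
from typing import Iterable, Iterator, Set


def notifications(events: Iterable[dict], subscribed: Set[str]) -> Iterator[str]:
    """Turn incoming repository events into notification text for chosen types only."""
    for event in events:
        kind = event["type"]
        if kind not in subscribed:
            continue  # the user did not opt in to this kind of event
        yield f"[{event['repo']}] {event['actor']}: {kind}"


incoming = [
    {"type": "star", "repo": "octo/app", "actor": "alice"},
    {"type": "release", "repo": "octo/app", "actor": "bob"},
    {"type": "pull_request", "repo": "octo/app", "actor": "carol"},
]
for text in notifications(incoming, subscribed={"release", "pull_request"}):
    print(text)
```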

Use case 2: Internet of Things in Industry (IIoT)

In the context of automating industrial processes, businesses may incorporate an IIoT solution by including a number of sensors that communicate data streams in real-time. These sensors may be installed in the hundreds, and their data streams are often pooled by IoT gateways, which can deliver a continuous stream of data further into the technological stack. Enterprises would need to apply an event stream processing approach in order to make use of the data, analyze it to detect trends, and swiftly take action on them. This stream of events would be consumed by the event streaming platform, which would then execute real-time analytics.

For instance, we may want to track the average temperature over 30-second intervals and report it only if it exceeds 45 °C. When this condition is met, other programs can use the warning to adjust their processes in real time and prevent overheating.
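
A minimal sketch of that rule, assuming readings arrive in timestamp order as `(seconds, celsius)` pairs and using non-overlapping (tumbling) 30-second windows; a sliding window would be an equally valid reading of "over 30-second intervals". The readings are made-up sample values.

```python
from typing import Iterable, Iterator, List, Optional, Tuple


def window_averages(readings: Iterable[Tuple[int, float]],
                    window_s: int = 30) -> Iterator[Tuple[int, float]]:
    """Tumbling windows: yield (window_start, mean celsius) as each window closes."""
    current_start: Optional[int] = None
    values: List[float] = []
    for ts, celsius in readings:
        start = ts - ts % window_s  # start of the window this reading falls into
        if current_start is not None and start != current_start:
            yield current_start, sum(values) / len(values)  # previous window closed
            values = []
        current_start = start
        values.append(celsius)
    if values:
        yield current_start, sum(values) / len(values)  # flush the last window


def overheating(readings: Iterable[Tuple[int, float]],
                limit_c: float = 45.0) -> List[Tuple[int, float]]:
    # Only windows whose average exceeds the limit are passed on as warnings.
    return [(start, avg) for start, avg in window_averages(readings) if avg > limit_c]


readings = [(0, 40.0), (10, 42.0), (20, 44.0),   # window [0, 30): mean 42.0
            (30, 46.0), (40, 48.0), (50, 47.0)]  # window [30, 60): mean 47.0
print(overheating(readings))  # [(30, 47.0)]
```

In a live stream the final window would normally be closed by a timer rather than by the end of the input; the flush at the end only stands in for that.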

Many technologies can help automate these processes. One of them is Camunda’s Workflow Engine, which executes processes defined in Business Process Model and Notation (BPMN), the global standard for process modeling. BPMN provides an easy-to-use visual modeling language for automating your most complex business processes. If you want to get started with Camunda workflows, the Camunda connectors are a good starting point.

Use case 3: Payment Processing

Rapid payment processing is an excellent use of event stream processing for mitigating user experience concerns and undesirable behaviours. For instance, if a person wishes to make a payment but encounters significant delays, they may refresh the page, causing the transaction to fail and leaving them uncertain whether their account has been debited. Similarly, with machine-driven payments, delay can have a large ripple effect, particularly when hundreds of payments are backed up. This might result in repeated attempts or timeouts.

To support the smooth processing of tens of thousands of concurrent requests, we can use event stream processing to ensure a consistent user experience throughout.

A payment request event may be sent from a topic to an initial payments processor, which updates the total amount of payments currently being processed. A subsequent event is then created and forwarded to a different processor, which verifies that the payment can be completed and changes the user’s balance. A final event is then generated, and another processor updates the user’s balance.
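
The three-step flow can be sketched as chained processors. In this hypothetical `PaymentPipeline`, each method stands in for a separate processor that would normally consume from its own topic, and amounts are integer cents. Since balance changes are mentioned in both the second and third steps, the sketch assumes the second processor computes the new balance and the third one persists it.

```python
from typing import Dict, List, Optional


class PaymentPipeline:
    """Three chained processors; each consumes one event and emits the next."""

    def __init__(self, balances: Dict[str, int]) -> None:
        self.balances = balances        # stand-in for the account store (cents)
        self.in_flight_total = 0        # amount of payments currently being processed
        self.log: List[dict] = []       # every emitted event, for inspection

    def track(self, request: dict) -> dict:
        # Processor 1: update the total amount of payments in flight.
        self.in_flight_total += request["amount"]
        return self._emit({**request, "type": "payment.tracked"})

    def verify(self, event: dict) -> dict:
        # Processor 2: check the payment can be completed and compute the new balance.
        balance = self.balances[event["user"]]
        if balance < event["amount"]:
            return self._emit({**event, "type": "payment.rejected"})
        return self._emit({**event, "type": "payment.approved",
                           "new_balance": balance - event["amount"]})

    def settle(self, event: dict) -> Optional[dict]:
        # Processor 3: release the in-flight amount and persist the balance update.
        self.in_flight_total -= event["amount"]
        if event["type"] != "payment.approved":
            return None
        self.balances[event["user"]] = event["new_balance"]
        return self._emit({**event, "type": "payment.completed"})

    def _emit(self, event: dict) -> dict:
        self.log.append(event)
        return event

    def handle(self, request: dict) -> Optional[dict]:
        return self.settle(self.verify(self.track(request)))


pipeline = PaymentPipeline({"alice": 5_000})
pipeline.handle({"user": "alice", "amount": 1_200})
pipeline.handle({"user": "alice", "amount": 9_999})
print(pipeline.balances, [e["type"] for e in pipeline.log])
```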

Use case 4: Cybersecurity

Cybersecurity systems collect millions of events to identify new risks and understand the relationships between occurrences. To reduce false positives, cybersecurity tools use event stream processing to enrich threat data and provide context-rich information. They do this through a sequence of steps, including:

Collect events in real time from diverse data sources, such as customer environments.

Filter event streams so that only relevant data enters the topics, eliminating false positives and benign attacks.

Use streaming applications to correlate events across multiple source interfaces in real time.

Forward priority events to other systems, such as security information and event management (SIEM) systems or security orchestration, automation, and response (SOAR) systems.
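
The filter, correlate, and forward steps can be sketched as a single generator. The event schema (`ts`, `source`, `ip`, `kind`), the set of relevant kinds, the 60-second window, and the rule that reports from two different sources about the same IP make a priority event are all hypothetical choices for illustration.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Iterator, Set


def correlate(events: Iterable[dict], window_s: int = 60,
              min_sources: int = 2) -> Iterator[dict]:
    """Filter, correlate, and forward security events (assumed to be time-ordered)."""
    relevant = {"failed_login", "port_scan", "malware_alert"}
    recent: Dict[str, Deque[dict]] = defaultdict(deque)
    for event in events:
        if event["kind"] not in relevant:
            continue  # filter: drop noise before it reaches downstream topics
        window = recent[event["ip"]]
        window.append(event)
        while event["ts"] - window[0]["ts"] > window_s:
            window.popleft()  # keep only events inside the correlation window
        sources: Set[str] = {e["source"] for e in window}
        if len(sources) >= min_sources:
            # forward: a priority event for a SIEM or SOAR system to pick up
            yield {"ts": event["ts"], "ip": event["ip"], "sources": sorted(sources)}
            window.clear()  # avoid re-alerting on the same burst


stream = [
    {"ts": 0, "source": "firewall", "ip": "10.0.0.7", "kind": "port_scan"},
    {"ts": 5, "source": "vpn", "ip": "10.0.0.9", "kind": "login_ok"},
    {"ts": 20, "source": "auth", "ip": "10.0.0.7", "kind": "failed_login"},
    {"ts": 300, "source": "auth", "ip": "10.0.0.9", "kind": "failed_login"},
]
for priority in correlate(stream):
    print(priority)
# {'ts': 20, 'ip': '10.0.0.7', 'sources': ['auth', 'firewall']}
```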

Use case 5: Airline Optimization

We can create real-time apps to enhance the experience of passengers before, during, and after flights, as well as the overall efficiency of the process. We can effectively coordinate and react if we make crucial events, such as customers scanning their boarding passes at the gate, accessible across all the back-end platforms used by airlines and airports.

Based on this single type of event, we can enable three distinct use cases:

Accurately predicting take-off times and delays

Reducing the amount of assistance connecting passengers need by providing real-time data

Limiting a single flight’s impact on the on-time performance of other flights
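
As a sketch of how boarding-pass scan events could feed such use cases, the hypothetical `BoardingTracker` below keeps a per-flight set of scanned passengers and lists flights whose boarding progress is below a threshold. The flight numbers, booking counts, and 90% threshold are made up, and real delay prediction would draw on many more signals.

```python
from collections import defaultdict
from typing import Dict, List, Set


class BoardingTracker:
    """Consumes 'boarding pass scanned' events and tracks boarding per flight."""

    def __init__(self, booked: Dict[str, int]) -> None:
        self.booked = booked  # flight -> number of booked passengers
        self._boarded: Dict[str, Set[str]] = defaultdict(set)

    def on_scan(self, event: dict) -> None:
        # A set ignores duplicate scans of the same boarding pass.
        self._boarded[event["flight"]].add(event["passenger"])

    def progress(self, flight: str) -> float:
        return len(self._boarded[flight]) / self.booked[flight]

    def at_risk(self, threshold: float = 0.9) -> List[str]:
        # Flights still below the threshold could be flagged for possible delays.
        return sorted(f for f in self.booked if self.progress(f) < threshold)


tracker = BoardingTracker({"XY100": 2, "XY200": 4})
for scan in [
    {"flight": "XY100", "passenger": "p1"},
    {"flight": "XY100", "passenger": "p2"},
    {"flight": "XY100", "passenger": "p2"},  # duplicate scan
    {"flight": "XY200", "passenger": "p9"},
]:
    tracker.on_scan(scan)
print(tracker.at_risk())  # ['XY200']
```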

Use case 6: E-Commerce

Event stream processing can be used in an e-commerce application to facilitate “viewing through to purchasing.” To do this, we can build an initial event stream that captures the events made by shoppers, with three separate event types feeding the stream:

Customer sees item

Customer adds item to cart

Customer puts order

We can then serve different use cases by applying separate processes or algorithms to the stream, such as:

An hourly sales calculator that parses the stream for ‘Customer puts order’ events and keeps a running tally of total revenues for each hour.

A product look-to-book tracker that reads ‘Customer sees item’ events from the stream and keeps track of the total number of views for each product. It also parses ‘Customer puts order’ events from the stream and keeps track of the total number of units sold for each product.

An abandoned-cart detector that reads all three kinds of events, identifies customers who have abandoned their shopping cart, and posts a new ‘Customer abandons cart’ event to a new topic for each abandoned cart it detects.
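
The three processors can be sketched over a single stream. The short event names `view`, `add_to_cart`, and `order` stand in for ‘Customer sees item’, ‘Customer adds item to cart’, and ‘Customer puts order’; the field names are assumptions, and the abandoned-cart rule (no order within 30 minutes of the last add to cart) is a hypothetical definition chosen for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, List


def process(events: Iterable[dict], abandon_after_s: int = 1800):
    """Run three processors over one time-ordered stream of shopper events."""
    revenue_by_hour: Dict[int, float] = defaultdict(float)  # hourly sales calculator
    views = Counter()        # look-to-book tracker: views per product
    units_sold = Counter()   # look-to-book tracker: units sold per product
    open_carts: Dict[str, int] = {}  # abandoned-cart detector: customer -> last add time
    abandoned: List[dict] = []       # stand-in for the new 'Customer abandons cart' topic
    for e in events:
        # Expire carts that have gone without an order for longer than the timeout.
        for customer, added_at in list(open_carts.items()):
            if e["ts"] - added_at > abandon_after_s:
                abandoned.append({"type": "abandon_cart", "customer": customer, "ts": e["ts"]})
                del open_carts[customer]
        if e["type"] == "view":
            views[e["product"]] += 1
        elif e["type"] == "add_to_cart":
            open_carts[e["customer"]] = e["ts"]
        elif e["type"] == "order":
            revenue_by_hour[e["ts"] // 3600] += e["units"] * e["price"]
            units_sold[e["product"]] += e["units"]
            open_carts.pop(e["customer"], None)  # an order closes the cart
    return dict(revenue_by_hour), views, units_sold, abandoned


events = [
    {"ts": 0, "type": "view", "customer": "c1", "product": "mug"},
    {"ts": 60, "type": "add_to_cart", "customer": "c1", "product": "mug"},
    {"ts": 120, "type": "order", "customer": "c1", "product": "mug", "units": 2, "price": 8.0},
    {"ts": 200, "type": "view", "customer": "c2", "product": "mug"},
    {"ts": 260, "type": "add_to_cart", "customer": "c2", "product": "mug"},
    {"ts": 4000, "type": "view", "customer": "c3", "product": "lamp"},
]
revenue, views, sold, abandoned = process(events)
print(revenue, views["mug"], sold["mug"], abandoned)
```

In this sketch, expired carts are only detected when a later event arrives; a production detector would typically also rely on timers.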

Conclusion

In a world that is increasingly driven by events, Event Stream Processing (ESP) has emerged as a vital practice for enterprises. Event streams are becoming an increasingly important data source as more and more companies move to a streaming architecture. Their benefits, including real-time analytics, faster response times, and improved customer experience, give them clear advantages over traditional batch processing.

In addition, there are a number of use cases for event streams that can help you solve specific business problems. If you’re looking for a way to improve your business performance, consider using event stream processing.


Originally published at memphis.dev by Avraham Neeman, Co-Founder & CPO @Memphis.dev.
