Argonaut’s Kubernetes Environment Setup In AWS

Kubernetes sits at the heart of Argonaut, just as it sits behind how most cloud-native apps are deployed today. Luckily, you don’t have to do much engineering to get it up and running! Argonaut handles the setup for you.

In this blog post, you’ll learn what Argonaut handles for you and how Kubernetes is set up when a new environment is created, and you’ll dive deep into the internals of how it all works.

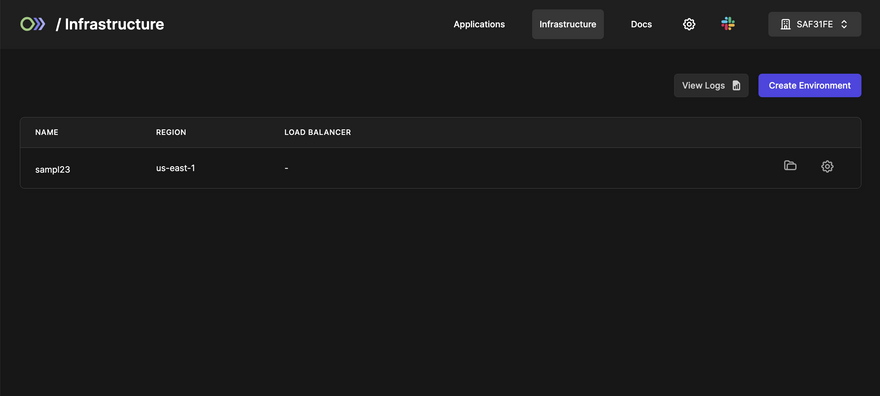

Creating A New Environment

To create a new environment, all you need is access to the UI at ship.argonaut.dev and a few minutes to spare.

First, log in and click the Infrastructure tab. Then click the purple Create Environment button.

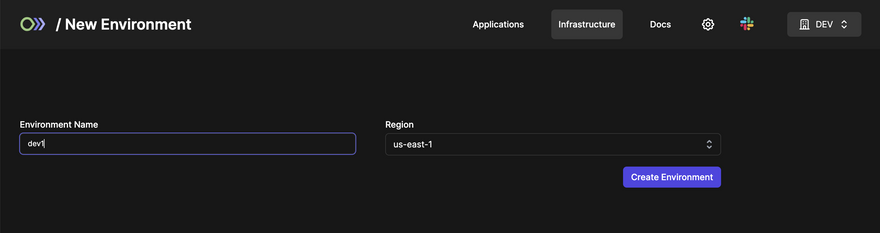

To create a new environment, you’ll have to input some information:

- The environment name

- Region

For the purposes of this blog post and in a dev environment, you can set up 1 node as shown in the screenshot below.

Click the purple Create Environment button on the right side.

And just like that, a new environment is being created!

You can easily check the status of infra from the History page, which also includes logs.

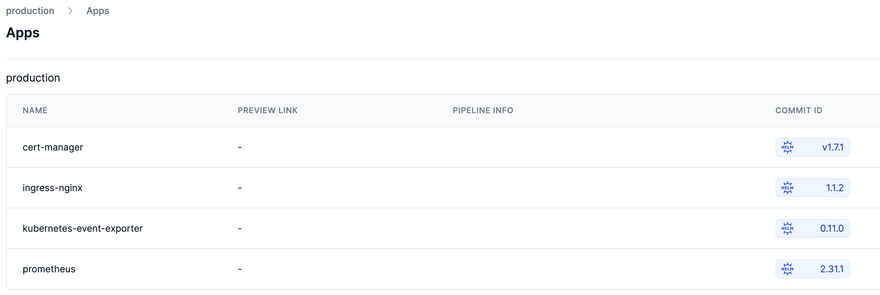

Once the environment is configured, there are some “out-of-the-box” applications created during the setup for the Kubernetes environment.

The apps include:

- cert-manager

- ingress-nginx

- kubernetes-event-exporter

- prometheus

All of the out-of-the-box applications are created via Kubernetes Helm charts.
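One way to confirm that these four releases landed is to compare them against the output of `helm list --all-namespaces -o json`. The sketch below is only an illustration: it assumes each release is named after its app and that the JSON is a list of objects with `name` and `status` fields. The sample data, including the `tools` namespace, is hand-written and not real cluster output.

```python
import json

# Release names taken from the list of out-of-the-box apps above.
# Assumption: each Helm release is named after its app.
EXPECTED_RELEASES = [
    "cert-manager",
    "ingress-nginx",
    "kubernetes-event-exporter",
    "prometheus",
]


def missing_releases(helm_list_json, expected=EXPECTED_RELEASES):
    """Return expected release names that are absent or not in 'deployed' status."""
    releases = json.loads(helm_list_json)
    deployed = {r["name"] for r in releases if r.get("status") == "deployed"}
    return [name for name in expected if name not in deployed]


# Hand-written sample for illustration (hypothetical namespace and statuses).
SAMPLE = json.dumps([
    {"name": "cert-manager", "namespace": "tools", "status": "deployed"},
    {"name": "ingress-nginx", "namespace": "tools", "status": "deployed"},
    {"name": "prometheus", "namespace": "tools", "status": "failed"},
])

print(missing_releases(SAMPLE))
```

With the sample above, the function reports the release that is absent and the one that failed, which tells you where to start looking in the logs.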

Seems straightforward, right? That’s because it is! However, there’s a lot that happens under the hood and it’s important to understand from an engineering perspective what’s happening. That way, you know what’s being created and how to troubleshoot.

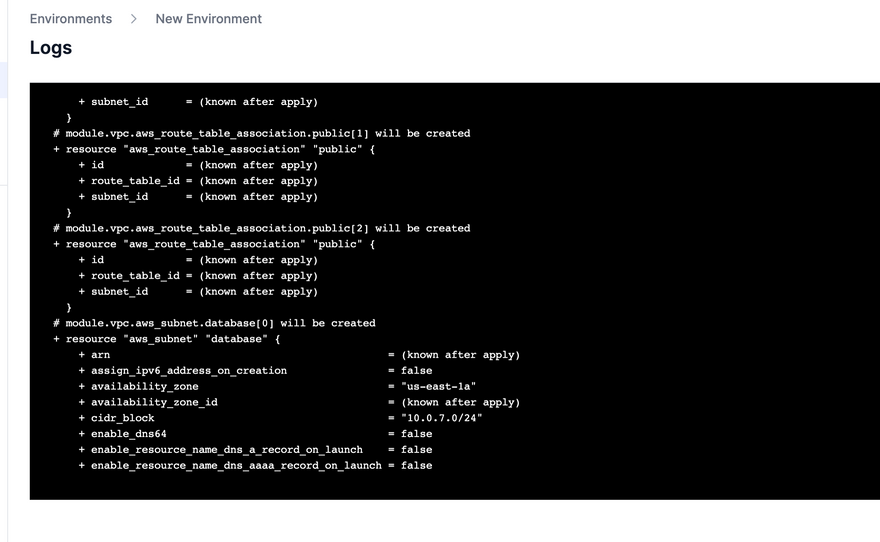

Seeing The Logs In A New Environment

The first thing that you’ll see in a new environment is the logs. Although you’ll learn about how the environment is created in the next section, it’s important to point out that the environment is created via Terraform, a popular Infrastructure-as-Code tool from HashiCorp.

The logs will show you everything from what’s being created to what fails to create, and for troubleshooting purposes, the logs are crucial.
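If you copy the logs out for troubleshooting, a small script can separate what was created from what failed. The sketch below assumes the lines follow standard `terraform apply` output (`<address>: Creation complete after ...` and `Error: ...`); Argonaut’s log view may format them differently. The resource addresses and the error message in the sample are made up.

```python
import re

# Patterns modeled on standard `terraform apply` output (an assumption about
# how the environment logs look; adjust if the format differs).
CREATED = re.compile(r"^(?P<addr>\S+): Creation complete after ")
# Newer Terraform versions prefix error lines with a box-drawing bar (U+2502).
ERROR = re.compile(r"^[\s\u2502]*Error: (?P<msg>.+)$")


def summarize(log_text):
    """Return (created_addresses, error_messages) found in the log text."""
    created, errors = [], []
    for line in log_text.splitlines():
        match = CREATED.match(line)
        if match:
            created.append(match.group("addr"))
            continue
        match = ERROR.match(line)
        if match:
            errors.append(match.group("msg").strip())
    return created, errors


# Hypothetical log excerpt for illustration only.
SAMPLE_LOG = """\
module.vpc.aws_vpc.this[0]: Creating...
module.vpc.aws_vpc.this[0]: Creation complete after 2s [id=vpc-0123456789abcdef0]
module.eks.aws_eks_cluster.this[0]: Creating...
module.eks.aws_eks_cluster.this[0]: Still creating... [10s elapsed]
Error: creating EKS Cluster (demo): AccessDeniedException
"""

created, errors = summarize(SAMPLE_LOG)
print("created:", created)
print("errors:", errors)
```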

Throughout the logs, you’ll see several resources that are created. All of the resources created are for the Kubernetes cluster to run properly in the environment you’re running Argonaut in. Resources include:

- The entire network stack (VPC, subnets, etc.)

- A load balancer

- The virtual machines/cloud servers (for example, EC2 instances in AWS) running as worker nodes

- Storage volumes for Kubernetes

What’s Created But Not Used?

Throughout the logs, you’ll see a few other resources that are created, for example, subnets for ElastiCache and a database. However, when you go into your environment (for example, in AWS), you won’t see an ElastiCache cluster or a database running in them.

That’s because the subnets are created for later use.

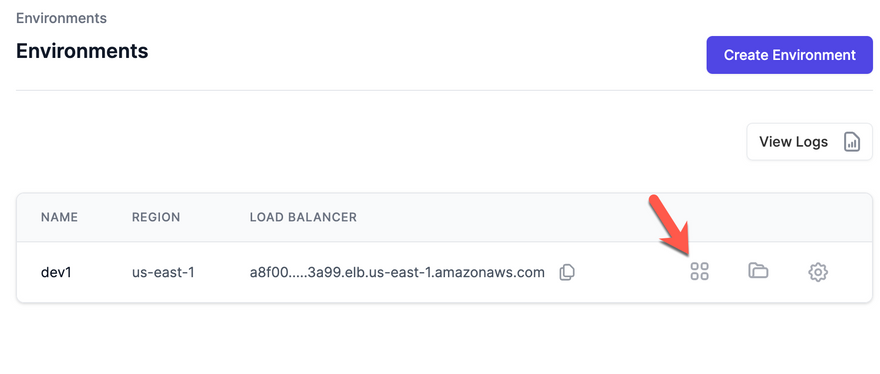

Inside of Argonaut, you’ll see a four-square icon like the one in the screenshot.

When you click on that icon, you’ll see several resources that can be managed by Argonaut.

The ElastiCache and DB subnets are created up front, even though nothing uses them yet, so that they’re ready later for the resources listed under the Infrastructure tab, as shown above.

Terraform Modules For The Environment

Thinking back to the previous section, Seeing The Logs In A New Environment, you may be wondering: I know it’s all created via Terraform, but where is that code?

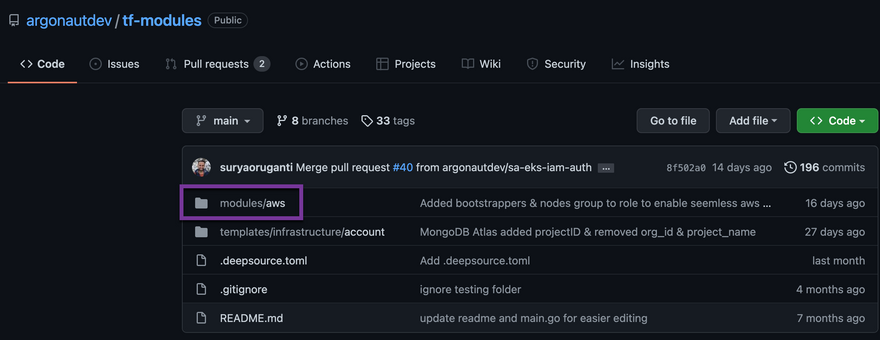

Luckily, the TF modules are open-source for anyone to view!

https://github.com/argonautdev/tf-modules

Click on the link to tf-modules above and then click on the module/aws directory.

AWS Modules

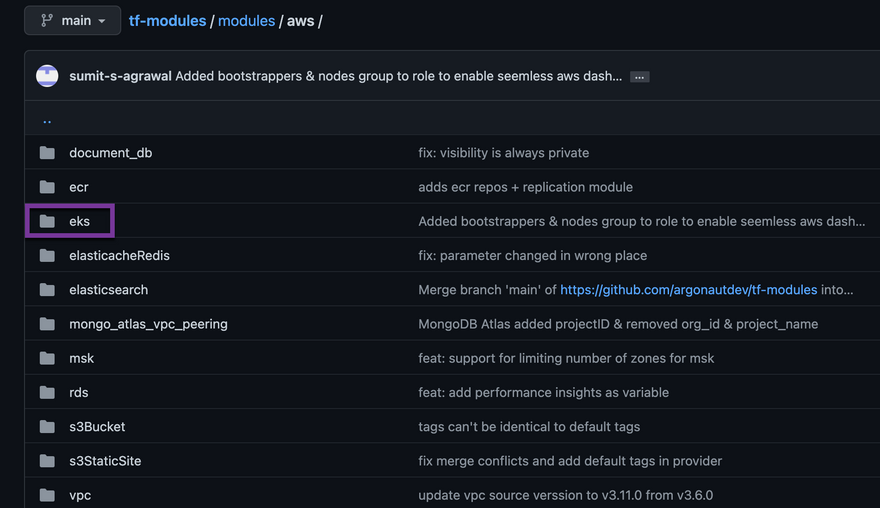

Inside of module/aws, you’ll see all of the Terraform modules that are used to create the resources that you saw being created in the Environment logs.

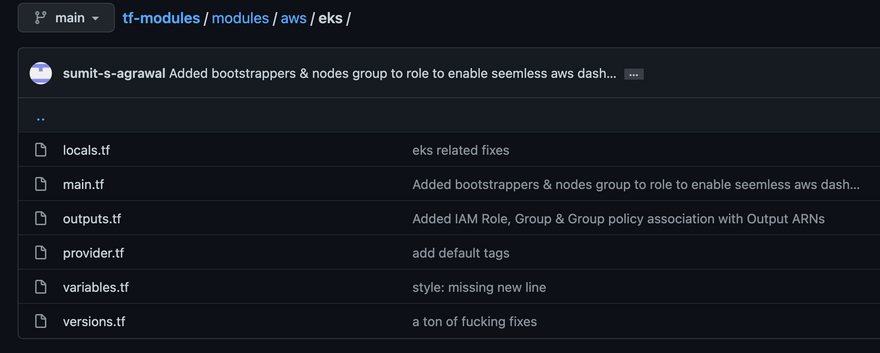

Click on the directory called eks.

Within the eks directory is where the magic happens! This is how the Kubernetes cluster gets created for you to use via Argonaut.

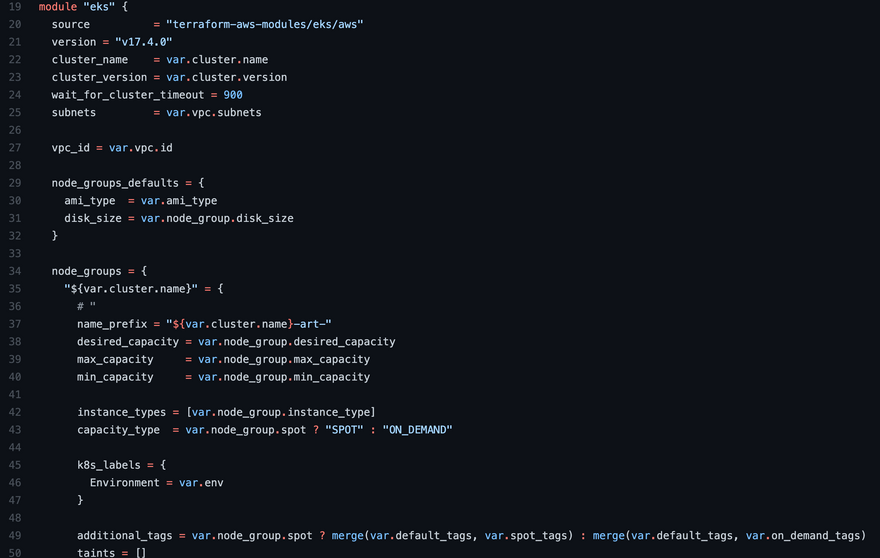

Clicking on the main.tf, which is the primary configuration file in Terraform, you can see exactly how the EKS cluster is created.

For example, on line 19, you can see how the worker nodes for Kubernetes are configured. When you input the number of instances you want to create via the Argonaut Environment setup, the code in the screenshot below (specifically starting on line 34) is how EKS knows via the variables how many worker nodes you want to create.
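The general pattern here, a value entered in the UI flowing into Terraform as an input variable, can be sketched as follows. This is not Argonaut’s code: the variable names (`cluster_name`, `region`, `node_count`) are hypothetical and are not taken from the tf-modules repository. The only Terraform behavior assumed is that it accepts variable values from a `.tfvars.json` file.

```python
import json


def build_tfvars(env_name, region, node_count):
    """Render environment form inputs as the contents of a .tfvars.json file.

    The variable names are hypothetical placeholders, not the names used in
    the real eks module.
    """
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    variables = {
        "cluster_name": env_name,
        "region": region,
        "node_count": node_count,
    }
    return json.dumps(variables, indent=2)


# Example: the single-node dev environment described earlier in this post.
print(build_tfvars("dev", "us-east-1", 1))
```

A module would then read a value like `node_count` wherever it sizes the worker node group, which is the role the variables in main.tf play.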

Taking A Look At The Created Resources In AWS

Now that the environment is created, which can take up to 20 minutes or so, it’s time to log into AWS and take a look at what exists. The two primary pieces that you should take a look at are:

- The VPC

- The EKS cluster

The VPC and EKS cluster are really how other resources, like apps, can be created and managed in Argonaut. Without the VPC and EKS cluster, things like deploying apps wouldn’t be possible.

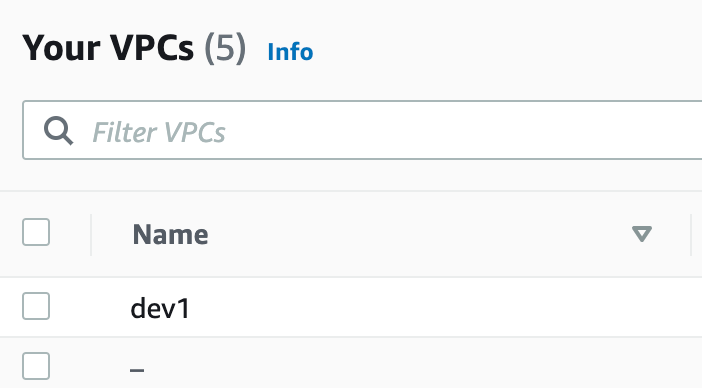

The VPC

Log into AWS and go to the VPC dashboard.

Within the VPCs, you’ll see a new VPC with the same name you gave your environment in the first section of this blog post.

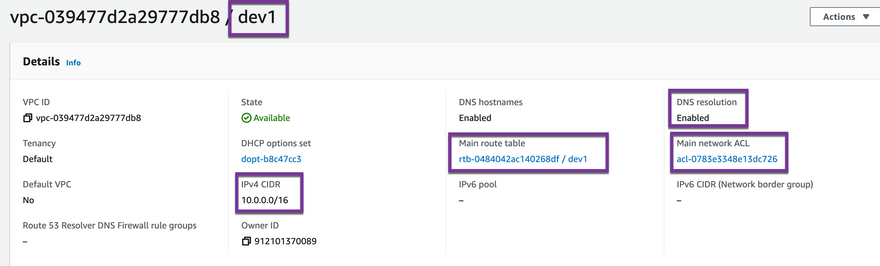

Within the VPC, you can see things like the CIDR range, route tables, Access Control Lists (ACLs), and DNS settings.

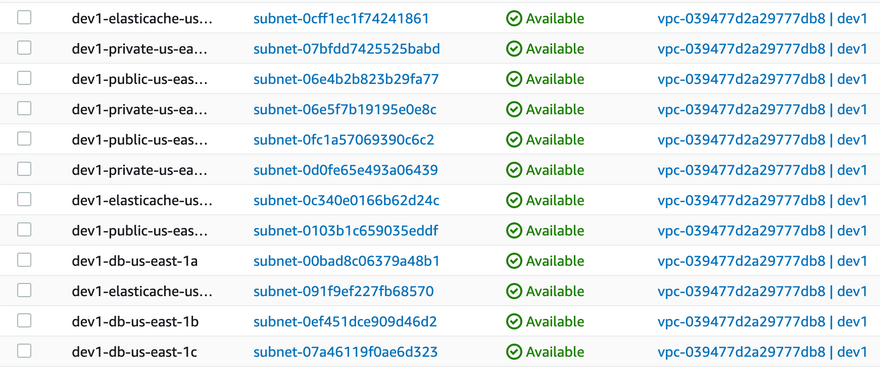

If you click on Subnets in AWS and filter by the VPC that was created with Argonaut, you’ll see all of the subnets for EKS, ElastiCache, and databases.
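The same filtering can be done in code. The sketch below works on a dictionary shaped like the response of the EC2 `describe_subnets` API (a `Subnets` list whose entries carry `SubnetId`, `VpcId`, `CidrBlock`, and `Tags`). The IDs, CIDR ranges, and subnet names in the sample are invented for illustration and do not reflect how Argonaut actually names or sizes its subnets.

```python
import ipaddress


def subnets_for_vpc(response, vpc_id):
    """Return (name, cidr) pairs for subnets in the given VPC, ordered by CIDR."""
    rows = []
    for subnet in response["Subnets"]:
        if subnet["VpcId"] != vpc_id:
            continue
        tags = {tag["Key"]: tag["Value"] for tag in subnet.get("Tags", [])}
        # Fall back to the subnet ID when there is no Name tag.
        rows.append((tags.get("Name", subnet["SubnetId"]), subnet["CidrBlock"]))
    return sorted(rows, key=lambda row: ipaddress.ip_network(row[1]))


# Hypothetical response data; all IDs, names, and CIDRs are made up.
SAMPLE_RESPONSE = {
    "Subnets": [
        {"SubnetId": "subnet-bbb", "VpcId": "vpc-111", "CidrBlock": "10.0.32.0/24",
         "Tags": [{"Key": "Name", "Value": "dev-elasticache-a"}]},
        {"SubnetId": "subnet-aaa", "VpcId": "vpc-111", "CidrBlock": "10.0.0.0/20",
         "Tags": [{"Key": "Name", "Value": "dev-private-a"}]},
        {"SubnetId": "subnet-ccc", "VpcId": "vpc-999", "CidrBlock": "172.31.0.0/20"},
    ]
}

for name, cidr in subnets_for_vpc(SAMPLE_RESPONSE, "vpc-111"):
    print(name, cidr)
```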

The EKS Cluster

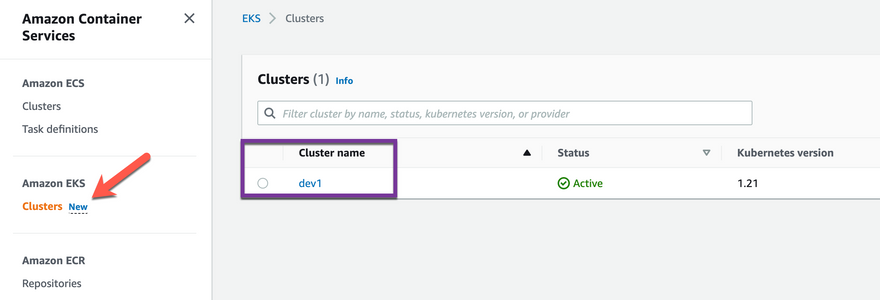

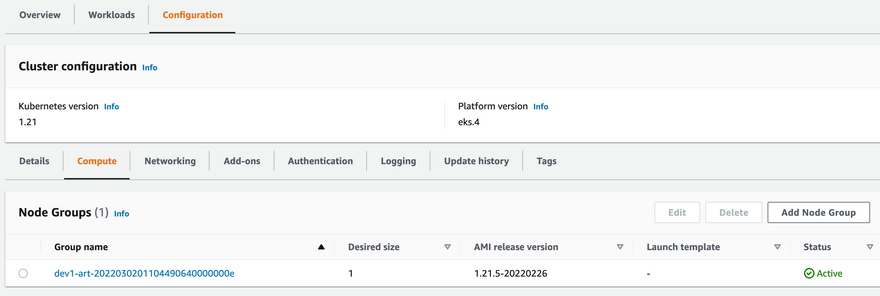

The brain behind Argonaut is the Kubernetes cluster. From the AWS console, go to the EKS page.

Within the EKS page, you’ll see the EKS cluster. Notice how it’s named the same as the VPC. That’s because it gets the same name as when you were setting up the Argonaut environment, just like the VPC.

If you click on the cluster, you’ll see all of the available resources, including authentication, logging, networking, and compute for the Kubernetes worker nodes. Luckily, there’s really not much you have to do here, as Argonaut manages the entire configuration for you.
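If you’d rather inspect those details from a script than from the console, the sketch below pulls a few of them out of a dictionary shaped like the response of the EKS `describe_cluster` API. The cluster name, version, VPC ID, and logging settings in the sample are hypothetical and say nothing about what Argonaut actually configures.

```python
def summarize_cluster(response):
    """Extract a few headline fields from a describe_cluster-shaped response."""
    cluster = response["cluster"]
    # Collect only the control-plane log types that are switched on.
    enabled_logs = sorted(
        log_type
        for entry in cluster.get("logging", {}).get("clusterLogging", [])
        if entry.get("enabled")
        for log_type in entry.get("types", [])
    )
    return {
        "name": cluster["name"],
        "status": cluster["status"],
        "version": cluster["version"],
        "vpc_id": cluster["resourcesVpcConfig"]["vpcId"],
        "enabled_logs": enabled_logs,
    }


# Hypothetical response data for illustration only.
SAMPLE_CLUSTER = {
    "cluster": {
        "name": "dev",
        "status": "ACTIVE",
        "version": "1.22",
        "resourcesVpcConfig": {"vpcId": "vpc-111"},
        "logging": {
            "clusterLogging": [
                {"types": ["audit", "api"], "enabled": True},
                {"types": ["scheduler"], "enabled": False},
            ]
        },
    }
}

print(summarize_cluster(SAMPLE_CLUSTER))
```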

Wrapping Up

As you saw throughout this blog post, a lot of environment configuration is set up for you, everything from an entire network (the VPC) to a fully functional Kubernetes cluster running in EKS. The amazing thing about this is that even though it’s a complicated setup, you don’t have to worry about managing any of it yourself.

With cloud-native applications, there’s typically a ton of overhead and management. With Argonaut, it’s all abstracted for you. You don’t have to worry about creating the network, figuring out the CIDRs, what subnets you need, permissions, the entire Kubernetes configuration, or anything else. You simply focus on your app while we take care of your deployments and help manage your cloud configurations.

Thanks to Michael Levan for helping put together the post!

Edited on 3rd June 2022.
