This article was written in collaboration with Prashant R, who built the ArgoCD integration.

In our recent announcement about adding pipeline features to Argonaut, we mentioned using ArgoCD for our Continuous Delivery workflows. This article sheds more light on our ArgoCD implementation by walking through the major changes we’ve made. It should interest you if you’re curious about the backend work behind this integration, or if you’re looking to implement GitOps practices in your org using ArgoCD.

What is ArgoCD?

ArgoCD is an open-source, declarative, and Kubernetes-native Continuous Delivery (CD) tool designed to automate and simplify application deployment and management within Kubernetes clusters. It follows the GitOps methodology, using Git repositories as the single source of truth for maintaining the desired state of applications and infrastructure. ArgoCD monitors the Git repository for changes, automatically synchronizing the declared state with the live environment, ensuring consistency, and enabling faster and more reliable application delivery.

We chose ArgoCD because it gives Argonaut greater flexibility to build features on top of our existing offerings. With its extensive feature set, including built-in support for Helm, Kustomize, and other configuration management tools, ArgoCD was the right choice for implementing our GitOps pipelines. In the coming months, we will be able to offer our users more functionality built on these tools.

Our implementation

We introduced two major new components at Argonaut to enable this shift to ArgoCD-based workflows: first, a new internal Git repo for every workspace that uses Argonaut; second, a manager ArgoCD controller that runs in Argonaut’s environment. Before we get into the details, let’s review how our setup worked until now.

Previous backend setup

(Pre-migration on 30th March 2023)

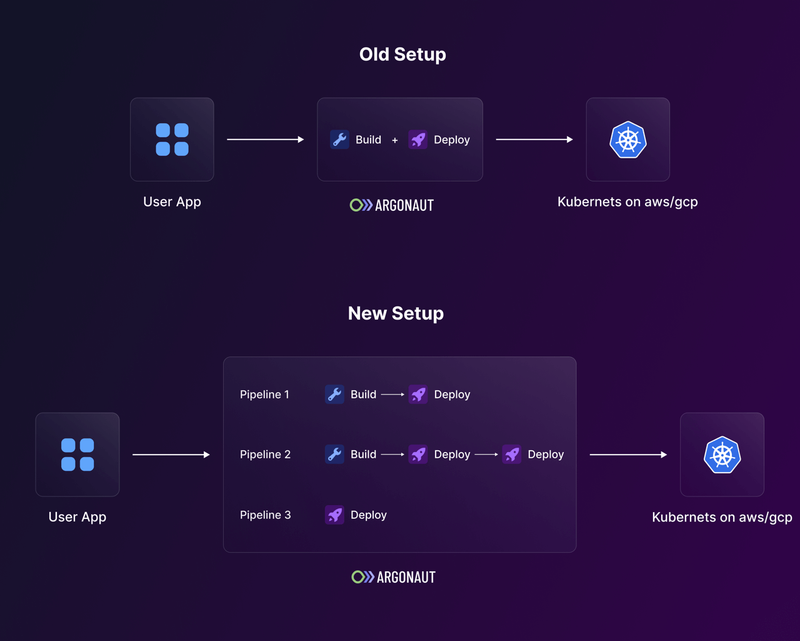

In our previous setup, a single workflow combined the build and deploy steps. Though this was acceptable for most use cases, several of our customers had more complex requirements, such as deploying an image that was already built, or building once and deploying to multiple environments. Custom apps such as Datadog, which do not require a build step, were another issue. So far, we’ve handled them through the Add-ons flow, keeping them separate from your Git apps (Git-apps).

These workflows were also triggered by Argonaut each time using Helm. As a result, Argonaut’s backend was heavily involved in completing each task and ensuring that all your deployments ran as expected. For the most part, our backend had to be stateful and directly involved in keeping up with user deployments.

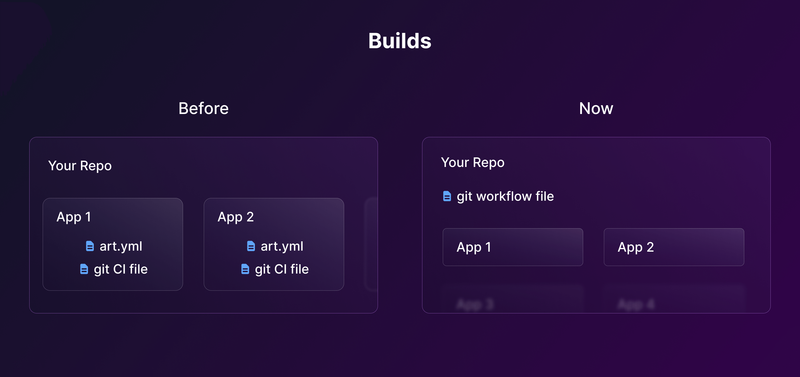

To make this work, the Argonaut Bot had to install several workflow files in your Git repo. Small manual changes to these files could break things, and we even had to develop a three-way merge feature to fix such issues. This was a messy implementation, and many of our customers asked for a better way.

New backend setup

The first major change was separating build and deployment into two independent steps. This meant splitting each release into a build step and a deploy step, and separating build-time secrets from run-time secrets, while ensuring the two steps still work well together without degrading performance.
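To make the split concrete, here is a minimal Python sketch of the two independent steps. The `build`/`deploy` functions and `BuildArtifact` type are hypothetical names for illustration, not Argonaut’s code: the build step turns a commit into an image reference using build-time secrets, and the deploy step only references that existing image plus the names of run-time secrets, so a single build can be deployed to several environments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BuildArtifact:
    """Output of the build step: an immutable image reference (hypothetical type)."""
    repository: str
    tag: str


def build(app: str, commit_sha: str, registry: str,
          build_secrets: dict[str, str]) -> BuildArtifact:
    # Build-time secrets (e.g. a private package token) exist only in this step.
    # A real build would run a container builder with them; this sketch only
    # derives the image reference that the build would push.
    _ = build_secrets
    return BuildArtifact(repository=f"{registry}/{app}", tag=commit_sha[:7])


def deploy(artifact: BuildArtifact, environment: str,
           runtime_secret_names: list[str]) -> dict:
    # Deploy never rebuilds: it only points an environment at an existing image.
    # Run-time secrets are referenced by name, so no secret values are embedded.
    return {
        "environment": environment,
        "image": {"repository": artifact.repository, "tag": artifact.tag},
        "secretRefs": list(runtime_secret_names),
    }


if __name__ == "__main__":
    artifact = build("battleships", "9f2c1e7d4b", "registry.example.com",
                     {"NPM_TOKEN": "<redacted>"})
    # Build once, deploy to two environments.
    for env in ("staging", "production"):
        print(deploy(artifact, env, ["battleships-runtime"]))
```

Because `deploy` only takes a `BuildArtifact`, deploying a pre-built image is just a matter of constructing the artifact directly, which covers the "deploy an image that was already built" case.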

Second, we now have an internal Argonaut GitHub with a unique repository for each workspace our users create. This repo houses all of your org’s config files and Helm values.yaml files. Your org’s ArgoCD instances watch this repo and automatically apply changes as soon as they detect them. This is what lets us implement GitOps-powered deployment flows for your applications.
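In ArgoCD terms, "watching a repo" means an `Application` resource whose `spec.source` points at that repo and whose `syncPolicy.automated` is enabled. The Python sketch below builds such a manifest; the repo URL, path, and `<workspace>-apps` naming scheme are assumptions for illustration, not Argonaut’s actual layout.

```python
import json


def workspace_application(workspace: str, repo_url: str, path: str,
                          dest_server: str, namespace: str) -> dict:
    """Build an ArgoCD Application that tracks one workspace's config repo."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        # Hypothetical naming scheme: one Application per workspace.
        "metadata": {"name": f"{workspace}-apps", "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,       # the workspace's internal Git repo
                "targetRevision": "HEAD",  # follow the default branch
                "path": path,              # directory holding charts/values files
            },
            "destination": {"server": dest_server, "namespace": namespace},
            # Automated sync: ArgoCD applies changes as soon as it detects them,
            # prunes removed resources, and reverts manual drift (selfHeal).
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


if __name__ == "__main__":
    app = workspace_application(
        workspace="acme",
        repo_url="https://git.example.com/acme-config.git",  # hypothetical URL
        path="apps",
        dest_server="https://kubernetes.default.svc",
        namespace="default",
    )
    # kubectl accepts JSON manifests, e.g. `kubectl apply -f app.json`.
    print(json.dumps(app, indent=2))
```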

Third, we’ve reduced the number of config files we store in your repo. Each repo connected to an Argonaut org now has just one workflow file, which is triggered in different ways depending on the action you take in Argonaut’s UI.

Key decisions during migration

We made several key decisions during the migration to keep the user experience consistent while staying cost-effective and planning for future scale. Here are a few of them.

Spinning up ArgoCD instances

All kinds of users visit and try out Argonaut. Some use it for their hobby projects, some power users use it for their work, and some are just exploring the product. We wanted to continue offering Argonaut as a free-to-use solution for all.

With the new approach, Argonaut’s initial costs would go up, since we spin up a new ArgoCD instance for each user/org/environment. We needed to pick a milestone in the user’s journey at which deploying an ArgoCD instance for their apps was viable, i.e., a point where we were confident they would actually use Argonaut for their deployments.

We decided to spin up a new ArgoCD instance when a user creates their first Kubernetes cluster in Argonaut. This triggers a Helm install that sets up the ArgoCD instance in your own cluster.

Since every workspace has just one ArgoCD instance and one repo in Argonaut’s internal GitHub, using the first Kubernetes cluster as the threshold is sufficient for all future workflows and apps managed with Argonaut.

💡 Note: Though the ArgoCD instance is only installed when the first cluster is created, the internal Git repo is created along with the org, since it stores all configs pertaining to that org.
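The threshold logic can be sketched in a few lines of Python. Everything here is hypothetical except the Helm invocation: `argo/argo-cd` is the public Argo Helm chart (it assumes the chart repo was added with `helm repo add argo https://argoproj.github.io/argo-helm`), and the kube context name passed in is an assumption. The sketch only returns the command; it does not run it.

```python
from dataclasses import dataclass, field


@dataclass
class Workspace:
    # Hypothetical record; the internal Git repo already exists from org creation.
    name: str
    clusters: list[str] = field(default_factory=list)
    argocd_installed: bool = False


def helm_install_argocd(kube_context: str) -> list[str]:
    # Install the public Argo CD chart into its own namespace on the target cluster.
    return ["helm", "install", "argocd", "argo/argo-cd",
            "--namespace", "argocd", "--create-namespace",
            "--kube-context", kube_context]


def on_cluster_created(ws: Workspace, cluster: str) -> list[list[str]]:
    """Record a new cluster and return the commands to run, if any."""
    ws.clusters.append(cluster)
    if ws.argocd_installed:
        # Later clusters reuse the workspace's existing ArgoCD instance.
        return []
    # First cluster: the threshold at which ArgoCD is installed. Marked
    # optimistically here; a real system would confirm the install succeeded.
    ws.argocd_installed = True
    return [helm_install_argocd(cluster)]


if __name__ == "__main__":
    ws = Workspace("acme")
    print(on_cluster_created(ws, "acme-prod"))   # one helm install command
    print(on_cluster_created(ws, "acme-stage"))  # nothing to install
```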

Connecting ArgoCD and user clusters

Now that we have an ArgoCD manager app running on Argonaut’s cluster and an instance running in each user’s workspace, the next step is to ensure the right ArgoCD instances watch the right repos and apply changes as they arrive.

In our setup, the manager app runs in Argonaut’s cluster, and the rest of the apps are deployed directly in the user’s cluster when they create their first Kubernetes cluster.

The manager app’s task is to keep all the child apps in sync with the Git repo. For example, suppose a user wants to deploy the Battleships app. The user creates a new Kubernetes cluster, and Argonaut automatically connects ArgoCD to it. The manager app on Argonaut’s cluster then spins up a child app (one per org) on the user’s cluster. Whenever a config changes in the Git repo, the child app picks it up and keeps the cluster in sync with that org’s repo.
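Conceptually, keeping child apps in sync is a diff between desired and actual state. ArgoCD performs this reconciliation itself, so the Python sketch below only illustrates the idea; it assumes a hypothetical `<org>-child` naming scheme and that the desired state (child app name mapped to its destination cluster server) has already been read from Git.

```python
def child_app_name(org: str) -> str:
    # Hypothetical naming scheme: one child app per org.
    return f"{org}-child"


def reconcile(desired: dict[str, str],
              existing: dict[str, str]) -> tuple[list[str], list[str], list[str]]:
    """Diff child apps; both dicts map app name -> destination cluster server.

    Returns (to_create, to_update, to_delete), each sorted for stable output.
    """
    to_create = sorted(desired.keys() - existing.keys())
    to_delete = sorted(existing.keys() - desired.keys())
    # Apps present on both sides but pointing at a different cluster need updating.
    to_update = sorted(name for name in desired.keys() & existing.keys()
                       if desired[name] != existing[name])
    return to_create, to_update, to_delete


if __name__ == "__main__":
    desired = {child_app_name("acme"): "https://acme.example.com",
               child_app_name("globex"): "https://globex.example.com"}
    existing = {child_app_name("acme"): "https://old-acme.example.com",
                child_app_name("initech"): "https://initech.example.com"}
    print(reconcile(desired, existing))
    # (['globex-child'], ['acme-child'], ['initech-child'])
```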

Another issue was that the ArgoCD manager runs on Argonaut’s cluster, yet it has to deploy apps with the specified configs to the user’s selected cluster. To solve this, we used the external cluster credentials approach.
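ArgoCD lets you register an external cluster declaratively with a Kubernetes Secret labeled `argocd.argoproj.io/secret-type: cluster`, carrying `name`, `server`, and a JSON `config` with the credentials. A minimal Python sketch of such a Secret follows; it assumes bearer-token authentication and an `argocd` namespace, and the token and CA values are placeholders. The article does not say which auth method Argonaut actually uses.

```python
import json


def cluster_secret(name: str, server: str, bearer_token: str,
                   ca_data_b64: str) -> dict:
    """Declarative ArgoCD cluster Secret for registering an external cluster."""
    config = {
        "bearerToken": bearer_token,  # assumption: token-based auth
        "tlsClientConfig": {"insecure": False, "caData": ca_data_b64},
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {
            "name": f"cluster-{name}",
            "namespace": "argocd",  # assumption: ArgoCD's install namespace
            # This label is how ArgoCD discovers cluster credentials.
            "labels": {"argocd.argoproj.io/secret-type": "cluster"},
        },
        "type": "Opaque",
        # stringData lets Kubernetes handle base64 encoding of the values.
        "stringData": {"name": name, "server": server,
                       "config": json.dumps(config)},
    }


if __name__ == "__main__":
    secret = cluster_secret("acme-prod", "https://acme-prod.example.com",
                            "<token>", "<base64-ca-cert>")
    print(json.dumps(secret, indent=2))
```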

The cost of running the ArgoCD controller and its related components on our cluster is covered and managed entirely by Argonaut, so users should see improved performance at no additional cost.

Monitoring the deployment

For every app you deploy, Argonaut’s backend creates the values.yaml file and commits it to your workspace’s internal repo. The ArgoCD instances on your clusters automatically pick up these changes and deploy them to your cluster.
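A minimal Python sketch of that step, assuming a hypothetical `<env>/<app>/values.yaml` layout and values keys (the article does not describe either). It writes the values as JSON, which is, with minor edge cases, a subset of YAML, so Helm reads it as an ordinary values file. It returns the `git` commands instead of running them.

```python
import json
from pathlib import PurePosixPath


def values_change(deployment_id: str, env: str, app: str,
                  repository: str, tag: str) -> tuple[str, str]:
    """Return (repo-relative path, file content) for one deployment."""
    # Hypothetical layout: one directory per environment and app.
    path = PurePosixPath(env) / app / "values.yaml"
    values = {
        "deploymentId": deployment_id,  # hypothetical key
        "image": {"repository": repository, "tag": tag},
    }
    return str(path), json.dumps(values, indent=2, sort_keys=True) + "\n"


def git_commit_commands(repo_dir: str, path: str, message: str) -> list[list[str]]:
    # Stage the changed values file, commit it, and push so ArgoCD can see it.
    return [
        ["git", "-C", repo_dir, "add", path],
        ["git", "-C", repo_dir, "commit", "-m", message],
        ["git", "-C", repo_dir, "push"],
    ]


if __name__ == "__main__":
    path, content = values_change("dep-123", "staging", "battleships",
                                  "registry.example.com/battleships", "9f2c1e7")
    print(path)
    print(content)
    for cmd in git_commit_commands("/tmp/acme-config", path, "Deploy dep-123"):
        print(" ".join(cmd))
```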

At this point, Argonaut doesn’t know the state of the deployment, since ArgoCD manages it. To get visibility into this, we use ArgoCD’s webhooks: as soon as the configuration is saved in your workspace’s internal repo, we start listening for callbacks from ArgoCD, which continuously update the deployment status in the Argonaut UI. Everything happens asynchronously. Each ArgoCD app is named after its Deployment ID, and we use these IDs to map each callback to its deployment and update its state.
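Because the app name doubles as the Deployment ID, a callback handler needs no lookup table. The Python sketch below assumes the callbacks come from an ArgoCD Notifications webhook whose (hypothetical) body template sends the app name plus `.app.status.sync.status` and `.app.status.health.status`; the coarse status labels it produces are also assumptions.

```python
import json

# Assumed payload shape, produced by a Notifications webhook template such as:
#   {"app": "{{.app.metadata.name}}",
#    "sync": "{{.app.status.sync.status}}",
#    "health": "{{.app.status.health.status}}"}


def deployment_status(sync: str, health: str) -> str:
    # Map ArgoCD sync/health statuses to a coarse deployment status.
    if health == "Degraded":
        return "failed"
    if sync == "Synced" and health == "Healthy":
        return "succeeded"
    return "in_progress"


def handle_notification(body: bytes, statuses: dict[str, str]) -> str:
    """Update the status store from one callback; return the deployment ID."""
    payload = json.loads(body)
    # The ArgoCD app name is the Deployment ID.
    deployment_id = payload["app"]
    statuses[deployment_id] = deployment_status(payload["sync"], payload["health"])
    return deployment_id


if __name__ == "__main__":
    store: dict[str, str] = {}
    handle_notification(b'{"app": "dep-123", "sync": "OutOfSync", "health": "Progressing"}', store)
    handle_notification(b'{"app": "dep-123", "sync": "Synced", "health": "Healthy"}', store)
    print(store)  # {'dep-123': 'succeeded'}
```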

Managing secrets

Secrets are managed outside of ArgoCD and Git. We currently use an internal secret manager, and we plan to extend this to integrate with other secret managers like Doppler and HashiCorp Vault in the future.

Container registry secrets are also generated and stored outside ArgoCD and Git, and are upserted into the Kubernetes cluster as needed.
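Registry credentials usually reach a cluster as a Secret of type `kubernetes.io/dockerconfigjson`. The Python sketch below builds one; the secret name, namespace, and credentials are placeholders. An upsert can then be done with `kubectl apply`, which creates the Secret if it is missing and updates it otherwise. How Argonaut performs the upsert is not described, so this is only one possible approach.

```python
import base64
import json


def registry_secret(name: str, namespace: str, registry: str,
                    username: str, password: str) -> dict:
    """Build a kubernetes.io/dockerconfigjson Secret for pulling images."""
    # The "auth" field is base64("username:password"), as Docker expects.
    auth = base64.b64encode(f"{username}:{password}".encode()).decode()
    docker_config = {
        "auths": {
            registry: {"username": username, "password": password, "auth": auth}
        }
    }
    # Values under "data" must themselves be base64-encoded.
    encoded = base64.b64encode(json.dumps(docker_config).encode()).decode()
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/dockerconfigjson",
        "data": {".dockerconfigjson": encoded},
    }


if __name__ == "__main__":
    secret = registry_secret("regcred", "default", "registry.example.com",
                             "bot", "<password>")
    # Pipe this to `kubectl apply -f -` to create or update the Secret.
    print(json.dumps(secret))
```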

Conclusion

We’ve been working on this migration for a while now, and we’re excited to share it with you. This enables us to be truly GitOps-powered and also gives us the flexibility to serve scaling teams and infrastructure for our users as we grow.

Specifically, this new setup enables us to add functionality like:

- Deploying to multiple clouds, environments, and clusters

- Cloning apps and environments

- Managing secrets

- Managing custom build and deploy pipelines

- Preview environments on demand

- And more!

We’ve also been able to improve the user experience by making it easier to manage your deployments and secrets.

We’re excited to see what you build with Argonaut. If you have any questions or feedback, please reach out to us on Twitter.
